Website Scraping Techniques: Tips for Effective Data Extraction

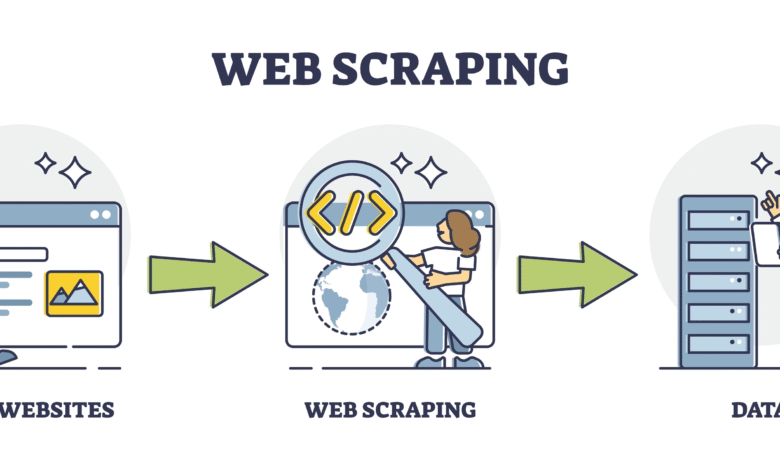

Website scraping techniques form the backbone of data extraction on today's web. They let marketers, researchers, and developers gather information from websites efficiently and turn it into insights. With the right approach, you can extract articles and other HTML content reliably and convert raw pages into structured, actionable data. Well-designed extraction also saves time and supports decisions based on up-to-date information. Because websites and data sources keep changing, mastering these techniques is essential for anyone who wants to make serious use of online information.

Gathering information from the web starts with understanding how online content is collected. This practice, commonly called web data extraction, lets users pull relevant information from many sites. With a sound strategy, you can systematically capture articles and analyze their HTML to reveal patterns and insights. Efficient content collection, in turn, helps you understand the digital landscape and keep pace in a data-driven world.

Essential Web Scraping Techniques for Beginners

Web scraping is a powerful way to collect data from websites automatically. To get started, it helps to understand a few basic techniques. One of the most common is to use Python tools such as Beautiful Soup, a library for parsing HTML and XML, and Scrapy, a full crawling framework. These tools handle much of the low-level parsing work, which makes it easier for beginners to scrape articles and gather information from the pages they need.
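
As a minimal sketch of HTML extraction, the example below uses Python's built-in `html.parser` module instead of Beautiful Soup so that it runs without third-party packages. The markup and the assumption that headlines sit in `<h2><a>` elements are hypothetical; adjust the tag checks to the page you are scraping.

```python
from html.parser import HTMLParser


class ArticleLinkParser(HTMLParser):
    """Collect (text, href) pairs for <a> tags nested inside <h2> headings."""

    def __init__(self):
        super().__init__()
        self.in_h2 = False
        self.current_href = None
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self.in_h2 = True
        elif tag == "a" and self.in_h2:
            # attrs arrives as a list of (name, value) tuples
            self.current_href = dict(attrs).get("href")

    def handle_endtag(self, tag):
        if tag == "h2":
            self.in_h2 = False
        elif tag == "a":
            self.current_href = None

    def handle_data(self, data):
        # Only keep non-empty text that sits inside a headline link
        if self.current_href and data.strip():
            self.links.append((data.strip(), self.current_href))


# Hypothetical page: two headlines plus an unrelated link that should be ignored
html_doc = """
<html><body>
  <h2><a href="/news/1">First headline</a></h2>
  <p>Intro text <a href="/about">About</a></p>
  <h2><a href="/news/2">Second headline</a></h2>
</body></html>
"""

parser = ArticleLinkParser()
parser.feed(html_doc)
print(parser.links)
```

With Beautiful Soup installed, the same selection is roughly `BeautifulSoup(html_doc, "html.parser").select("h2 a")`, which is usually more convenient than a hand-written parser.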

Mastering HTML content analysis is just as vital for effective web scraping. Understanding how a page's elements are structured lets you pinpoint and extract specific pieces of data. Familiarity with CSS selectors, XPath queries, and regular expressions can significantly enhance your scraping capabilities. With these methods, you can collect exactly the data you need while minimizing extraction errors.
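
XPath-style queries can be tried out with the standard library's `xml.etree.ElementTree`, which supports a limited XPath subset (paths, `//` descendants, and `[@attr='value']` predicates). This sketch assumes the page is well-formed XHTML, which real-world HTML often is not; for messy markup, a tolerant parser such as lxml (`lxml.html.fromstring(...).xpath(...)`) is the more common choice. The `article` and `date` class names are hypothetical.

```python
import xml.etree.ElementTree as ET

# ElementTree only parses well-formed XML/XHTML and supports a limited XPath subset.
xhtml = """
<html>
  <body>
    <div class="article">
      <h2>Scraping basics</h2>
      <span class="date">2024-01-15</span>
    </div>
    <div class="ad">
      <h2>Buy now</h2>
    </div>
    <div class="article">
      <h2>Parsing HTML</h2>
      <span class="date">2024-02-03</span>
    </div>
  </body>
</html>
"""

root = ET.fromstring(xhtml.strip())

# The [@class='article'] predicate keeps article containers and skips the ad block.
articles = []
for div in root.findall(".//div[@class='article']"):
    articles.append({
        "title": div.findtext("h2"),
        "date": div.findtext("span[@class='date']"),
    })

print(articles)
```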

Advanced Data Extraction Techniques for Proficient Scrapers

Once you have grasped the basics, you can move on to more advanced data extraction techniques. Working with APIs is one such method: where a site offers an official API, it usually lets you collect data more systematically, and with the provider's consent, than scraping its pages would. APIs typically return structured data (often JSON), which makes extraction cleaner and reduces your dependence on HTML markup that may change frequently. Using an API does not automatically make your use compliant, since APIs have their own terms and rate limits, but it is generally the most reliable way to stay within what a provider permits, a crucial consideration for any serious web scraper.
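
The sketch below shows the typical shape of API-based collection: build a query URL from parameters, then read fields out of a JSON body. The endpoint `https://api.example.com/v1/articles`, the `q`/`page`/`per_page` parameters, and the `results` field are hypothetical placeholders; a real API documents its own. A canned string stands in for the HTTP response so the example runs offline.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint; substitute the real API's documented base URL and parameters.
BASE_URL = "https://api.example.com/v1/articles"


def build_request_url(query, page, per_page=20):
    """Compose a paginated query URL from keyword parameters."""
    params = urlencode({"q": query, "page": page, "per_page": per_page})
    return f"{BASE_URL}?{params}"


def parse_articles(payload):
    """Pull the title and URL fields out of a JSON response body."""
    data = json.loads(payload)
    return [{"title": item["title"], "url": item["url"]} for item in data.get("results", [])]


# A canned response standing in for what the hypothetical API might return.
sample_response = (
    '{"results": [{"title": "API-first scraping", "url": "https://example.com/a1", "id": 1}],'
    ' "next_page": null}'
)

print(build_request_url("web scraping", page=2))
print(parse_articles(sample_response))
```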

In addition to APIs, implementing techniques like data normalization and cleaning during the extraction process is essential for maintaining data integrity. Scraped data often comes in various formats and structures, which can muddy the analysis process. This is where data cleaning techniques come in handy, such as removing duplicates and standardizing formats. These practices not only make analyzing your data simpler but also help in drawing meaningful insights from the information collected through your scraping endeavors.
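
A minimal cleaning pass might trim whitespace, drop case-insensitive duplicates, and convert dates to ISO 8601. The input rows and the list of accepted date formats are assumptions for illustration; in particular, `05/03/2024` is read day-first here, which may not match your sources.

```python
from datetime import datetime

# Hypothetical scraped rows: the same article seen twice, messy spacing, mixed date formats.
raw_rows = [
    {"title": "  Web Scraping 101 ", "date": "2024-03-05"},
    {"title": "web scraping 101", "date": "05/03/2024"},
    {"title": "Parsing   HTML", "date": "March 7, 2024"},
]

# Assumed input formats; extend this tuple for the sources you actually scrape.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y")


def normalize_date(value):
    """Convert any of the known formats to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date: {value!r}")


def clean_rows(rows):
    seen = set()
    cleaned = []
    for row in rows:
        # Collapse internal whitespace and trim the ends.
        title = " ".join(row["title"].split())
        key = title.lower()  # case-insensitive duplicate check
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"title": title, "date": normalize_date(row["date"])})
    return cleaned


print(clean_rows(raw_rows))
```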

Tips for Effective HTML Content Analysis

Analyzing HTML content is a critical step in web scraping that can significantly affect the quality of the data you collect. Understanding the Document Object Model (DOM) is paramount, as it represents the structure of a web page and determines how its elements are accessed. By familiarizing yourself with the hierarchy of HTML tags, you can navigate a page's structure and locate the data you wish to scrape. Tools like Chrome DevTools also let you inspect HTML elements directly, which makes it much easier to identify where the data you want lives.
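
To make the DOM hierarchy concrete, this sketch records every text node together with the chain of tags above it, similar to the element path DevTools displays for a selected node. It uses the standard-library `html.parser`, and the small `VOID_TAGS` set is an assumption that covers only a few common self-closing elements.

```python
from html.parser import HTMLParser

# Void elements never get a closing tag, so they must not be pushed onto the stack.
VOID_TAGS = {"br", "img", "hr", "meta", "link", "input"}


class PathRecorder(HTMLParser):
    """Record each text node together with its ancestor path in the DOM tree."""

    def __init__(self):
        super().__init__()
        self.stack = []
        self.nodes = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        # Pop back to the matching open tag; this tolerates some unclosed children.
        if tag in self.stack:
            while self.stack and self.stack.pop() != tag:
                pass

    def handle_data(self, data):
        text = data.strip()
        if text:
            self.nodes.append((" > ".join(self.stack), text))


recorder = PathRecorder()
recorder.feed(
    "<html><body><article><h1>Title</h1>"
    "<p>Body <b>bold</b> text</p></article></body></html>"
)
for path, text in recorder.nodes:
    print(f"{path}: {text}")
```

A path such as `article > h1` translates directly into a CSS selector, which is one way to move from inspecting a page to writing the extraction rule.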

Moreover, web scraping tools that support automated HTML content analysis can save time and improve efficiency. These tools can parse HTML documents, extract data, and compile it into organized formats like CSV or JSON files. Many also handle pagination and sessions, and some can be configured to respect robots.txt, which supports ethical scraping practices. By using such tools, you can streamline your data extraction workflow and spend more time on analysis and insights.
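
Exporting to CSV and JSON needs only the standard library. The records and file names (`articles.csv`, `articles.json`) are hypothetical, and the sketch writes into a temporary directory so it leaves nothing in your working folder.

```python
import csv
import json
import tempfile
from pathlib import Path

# Hypothetical records, in the shape an article extractor might produce.
records = [
    {"title": "Web Scraping 101", "url": "https://example.com/a1", "date": "2024-03-05"},
    {"title": "Parsing HTML", "url": "https://example.com/a2", "date": "2024-03-07"},
]


def export(records, out_dir):
    """Write the same records to a CSV file and a JSON file in out_dir."""
    csv_path = out_dir / "articles.csv"
    json_path = out_dir / "articles.json"
    with csv_path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(records[0].keys()))
        writer.writeheader()
        writer.writerows(records)
    # ensure_ascii=False keeps non-English text readable in the JSON file
    json_path.write_text(json.dumps(records, indent=2, ensure_ascii=False), encoding="utf-8")
    return csv_path, json_path


out_dir = Path(tempfile.mkdtemp())
csv_path, json_path = export(records, out_dir)
print(csv_path.read_text(encoding="utf-8"))
```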

Scraping Articles: Best Practices to Follow

When scraping articles from online sources, following best practices is key to a smooth and ethical process. First, always review a website's terms of service and robots.txt file before scraping. This tells you what content you are permitted to scrape and reduces your exposure to legal risk. Websites can have different policies on data usage, and respecting these guidelines fosters a better scraping environment for everyone.
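
Python's `urllib.robotparser` can check whether a URL is allowed before you request it. In this sketch the robots.txt rules and the user-agent string `my-research-bot` are hypothetical, and the file is parsed from a string so the example runs offline; against a live site you would call `set_url()` and `read()` instead.

```python
from urllib.robotparser import RobotFileParser

# A sample robots.txt, parsed from a string instead of being downloaded.
robots_txt = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

rp = RobotFileParser()
rp.parse(robots_txt.splitlines())

USER_AGENT = "my-research-bot"  # hypothetical user-agent string

for url in ("https://example.com/articles/1", "https://example.com/private/report"):
    print(url, "allowed" if rp.can_fetch(USER_AGENT, url) else "disallowed")

# Crawl-delay is a non-standard but common directive; None means the file sets none.
print("crawl delay:", rp.crawl_delay(USER_AGENT))
```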

Throttling your requests is another part of responsible scraping, because it prevents you from overwhelming web servers. Many sites enforce rate limits, and exceeding them can get you blocked, temporarily or permanently. Pausing between requests, ideally for randomized intervals, helps keep your traffic low-profile and reduces the load on the server. By following these practices, you can keep your article scraping both productive and sustainable.
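
A simple way to throttle is to sleep for a random interval between requests. In the sketch below, `fetch` is a placeholder for whatever HTTP call you actually use, and the 1 to 3 second range is an illustrative default rather than a recommended value; the `sleep` parameter exists only so the example can be tested without waiting.

```python
import random
import time


def polite_fetch_all(urls, fetch, min_delay=1.0, max_delay=3.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing a random interval between requests.

    fetch is whatever function performs the HTTP request; it is passed in so this
    sketch stays independent of any particular HTTP library.
    """
    results = []
    for i, url in enumerate(urls):
        if i > 0:
            # Randomized pause so requests are spread out instead of sent in a burst.
            sleep(random.uniform(min_delay, max_delay))
        results.append(fetch(url))
    return results


# Usage with a stand-in fetch and a recording "sleep", so nothing touches the network.
pauses = []
pages = polite_fetch_all(
    ["https://example.com/p1", "https://example.com/p2", "https://example.com/p3"],
    fetch=lambda url: f"<html>{url}</html>",
    sleep=pauses.append,
)
print(len(pages), [round(p, 2) for p in pauses])
```

If a server responds with HTTP 429 (Too Many Requests), it is asking you to slow down; honoring its `Retry-After` header, when present, is the polite response.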

Understanding Legal Implications of Web Scraping

As web scraping grows more popular, understanding the legal implications surrounding the practice becomes crucial. Laws governing data extraction differ widely across jurisdictions. Some websites strictly prohibit scraping in their terms of service, and ignoring these can lead to legal challenges. Regions like the European Union have enacted data protection laws, such as the GDPR, that may impact how personally identifiable information can be collected and stored. It’s advisable for scrapers to conduct proper research into local laws to avoid potential litigation.

Moreover, being aware of the ethical dimension of web scraping is just as important as understanding legal aspects. Many professionals advocate for responsible scraping practices, emphasizing the need to respect copyright and intellectual property rights. Engaging in responsible scraping means giving credit to original sources and using scraped data in a manner that benefits the larger community, rather than exploiting it for profit without authorization. This approach not only promotes a healthy web scraping environment but also builds trust among developers and site owners.

Utilizing Proxies for Better Web Scraping Performance

In web scraping, proxies play a vital role in improving performance and adding a degree of anonymity. By using proxies, scrapers can distribute their requests across multiple IP addresses, avoiding the IP bans that can result from sending too many requests from one address to a single server. Proxies can also help bypass geographical restrictions, giving access to content limited to specific regions. This opens up a wider range of sources for data extraction and adds depth to your scraping projects.

Rotating proxies make detection harder still. They switch to a new IP address after a specified number of requests, which makes it harder for target sites to recognize scraping patterns. This technique is particularly useful on sites that enforce rate limits or have strict anti-scraping measures. By incorporating proxies into your scraping strategy, you can protect your operations while keeping data collection efficient.
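
The rotation logic can be sketched with `itertools.cycle` and `urllib.request.ProxyHandler`. The proxy addresses are hypothetical (taken from the 203.0.113.0/24 documentation range), and switching after every two requests is an arbitrary illustrative setting; commercial rotating-proxy services may handle rotation on their side instead.

```python
import itertools
import urllib.request

# Hypothetical proxy pool (documentation-range addresses); replace with real endpoints.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Switch to the next proxy in the pool every `requests_per_proxy` requests."""

    def __init__(self, proxies, requests_per_proxy=2):
        self._cycle = itertools.cycle(proxies)
        self.requests_per_proxy = requests_per_proxy
        self.current = next(self._cycle)
        self._count = 0

    def next_proxy(self):
        if self._count == self.requests_per_proxy:
            self.current = next(self._cycle)
            self._count = 0
        self._count += 1
        return self.current

    def opener(self):
        proxy = self.next_proxy()
        # ProxyHandler routes both http and https traffic through the chosen proxy.
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


rotator = ProxyRotator(PROXIES, requests_per_proxy=2)
sequence = [rotator.next_proxy() for _ in range(5)]
print(sequence)
# A real request would then look like: rotator.opener().open("https://example.com/", timeout=10)
```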

Leveraging Machine Learning for Enhanced Data Extraction

In recent years, machine learning has emerged as a powerful ally in improving data extraction techniques in web scraping. By employing machine learning algorithms, scrapers can automate the process of identifying relevant data points amidst vast amounts of information. For instance, natural language processing (NLP) can be utilized to analyze text and extract key phrases or sentiments from articles, which is especially useful for businesses looking to gather market insights from online content.
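
Full NLP pipelines are beyond a short example, but a frequency-based keyword extractor shows the basic idea of pulling key terms from article text. Note that this is a simple statistical baseline, not a machine learning model; the stopword list and sample text are illustrative assumptions. Libraries such as spaCy or NLTK provide proper tokenization, stopword lists, and phrase extraction.

```python
import re
from collections import Counter

# A small illustrative stopword list; NLP libraries ship much larger ones.
STOPWORDS = {
    "the", "a", "an", "and", "or", "of", "to", "in", "is", "are",
    "for", "on", "with", "that", "this", "it", "as", "be",
}


def top_keywords(text, n=3):
    """Return the n most frequent non-stopword terms (a crude, non-ML baseline)."""
    words = re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS and len(w) > 2)
    return [word for word, _ in counts.most_common(n)]


article = (
    "Electric vehicle sales rose again this quarter. Analysts say vehicle prices "
    "and battery costs are falling, and battery supply is improving."
)
print(top_keywords(article))
```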

Moreover, machine learning can aid in categorizing and organizing extracted data for easier access and analysis. By training models on labeled data, scrapers can improve the accuracy of their extraction methods over time, reducing manual oversight and allowing for scalable solutions to data collection. As machine learning continues to evolve, its application in web scraping is set to transform how data is extracted and utilized, leading to more sophisticated results.
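
As one concrete example of training on labeled data, the sketch below implements a tiny multinomial Naive Bayes classifier from scratch. Naive Bayes is chosen here for illustration, not because the text prescribes it, and the four training sentences and `finance`/`sports` labels are hypothetical; a useful model needs far more data, and in practice you would likely reach for a library such as scikit-learn (`MultinomialNB`).

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        self.label_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.label_counts.values())
        best_label, best_score = None, float("-inf")
        for label, doc_count in self.label_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            # Log prior plus smoothed log likelihood of each token.
            score = math.log(doc_count / total_docs)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Tiny hypothetical labeled set; a real model needs far more examples.
train_texts = [
    "stock market shares fell as investors sold",
    "central bank raises interest rates",
    "team wins the championship final",
    "striker scores twice in league match",
]
train_labels = ["finance", "finance", "sports", "sports"]

model = NaiveBayesClassifier().fit(train_texts, train_labels)
print(model.predict("investors watch interest rates"))
print(model.predict("the final match of the league"))
```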

Regular Expressions in Scraping: A Powerful Tool

Regular expressions (regex) are invaluable for web scrapers, especially when dealing with complex textual patterns. Regex lets scrapers define search patterns that match specific strings within scraped data. This is particularly useful for extracting metadata, timestamps, or formatted text (like dates and email addresses) from articles where traditional parsing methods may fall short. Knowing how to apply regex can significantly improve your ability to refine the data extracted from HTML content.
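
A small sketch of regex-based extraction of dates and email addresses from scraped text. The sample text is hypothetical, and both patterns are deliberately pragmatic: the date pattern matches only the `YYYY-MM-DD` shape without validating ranges, and the email pattern covers common addresses rather than the full RFC 5322 grammar.

```python
import re

text = """Published 2024-03-05 by Jane Doe (jane.doe@example.com).
Updated 2024-03-07. Press inquiries: press@example.org"""

# ISO-style dates (YYYY-MM-DD); month and day ranges are not validated.
DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
# A pragmatic email pattern; fully RFC 5322-compliant matching is far more complex.
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)*\.[A-Za-z]{2,}\b")

print(DATE_RE.findall(text))
print(EMAIL_RE.findall(text))
```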

Incorporating regular expressions into your data cleaning routines can also streamline the process of tidying up scraped information. With regex, you can identify and replace unwanted characters or formats, leaving a clean, consistent dataset that is ready for analysis. This powerful tool not only makes your scraping more efficient but also improves the overall quality of the data you work with.
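
Regex substitutions are handy for tidying text after extraction. This sketch removes leftover tag fragments, zero-width characters, and non-breaking spaces, then collapses whitespace. The character set chosen is an assumption about typical debris; also note that stripping tags with a regex is only reasonable for small fragments, not as a replacement for an HTML parser.

```python
import re

TAG_RE = re.compile(r"<[^>]+>")                         # stray markup fragments
INVISIBLE_RE = re.compile(r"[\u200b\u200c\u200d\ufeff]")  # zero-width characters
SPACE_RE = re.compile(r"\s+")                            # runs of whitespace


def clean_text(raw):
    text = TAG_RE.sub(" ", raw)             # replace tags with a space so words don't merge
    text = INVISIBLE_RE.sub("", text)       # zero-width characters are not matched by \s
    text = text.replace("\u00a0", " ")      # non-breaking spaces become normal spaces
    return SPACE_RE.sub(" ", text).strip()  # collapse whitespace and trim


raw = "<p>Price:\u00a0$19.99</p>\n\n<span>Save\u200b 20%</span>  today"
print(clean_text(raw))
```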

The Future of Web Scraping: Trends and Challenges

As technology continues to advance, the future of web scraping is poised to evolve, presenting both exciting opportunities and significant challenges. One of the most notable trends is the increased use of artificial intelligence and machine learning to enhance scraping capabilities. These technologies enable smarter data extraction, allowing scrapers to adapt to changing web environments and retrieve data more efficiently. As websites implement more sophisticated anti-scraping measures, leveraging AI could become essential for maintaining effective scraping operations.

However, with advancements come challenges, particularly concerning ethics and legality. As more companies recognize the value of their data, they are likely to implement stricter controls to deter scraping. This creates a need for scrapers to stay informed and agile, establishing best practices that ensure they remain compliant with regulations. Additionally, the development of ethical guidelines for web scraping will be critical as the need for data grows in various industries, shaping how the practice evolves over time.

Frequently Asked Questions

What are some effective web scraping tips for beginners?

When starting with web scraping, beginners should focus on understanding the structure of HTML content, using approachable tools like Beautiful Soup (or Scrapy for larger crawls), and respecting each website's robots.txt file. These habits lay a solid foundation for effective data extraction.

How can I scrape articles from news websites effectively?

To scrape articles from news websites effectively, use tools that can handle dynamic content, such as Selenium, which automates a real browser so that JavaScript-rendered content loads before you extract it. Targeting the specific HTML elements that contain the article body will also make your extraction more accurate.

What data extraction techniques are best for large datasets?

For large datasets, consider using multi-threaded or asynchronous approaches with tools like Scrapy or Puppeteer. These data extraction techniques can improve scraping efficiency by allowing you to pull multiple pages simultaneously, reducing overall execution time.
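
A thread pool is the simplest way to overlap network waits in Python. In this sketch, `fake_fetch` is a stand-in that sleeps to simulate latency; replace it with a real HTTP call. With four workers, eight simulated 0.2-second requests should finish in about two rounds of latency rather than eight. Keep `max_workers` modest and combine concurrency with per-site throttling so that speeding up your scraper does not overload the target server.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch_all_concurrently(urls, fetch, max_workers=4):
    """Run fetch(url) on several threads; results come back in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, urls))


def fake_fetch(url):
    # Stand-in that simulates 0.2 s of network latency; swap in a real HTTP call.
    time.sleep(0.2)
    return f"<html>{url}</html>"


urls = [f"https://example.com/page/{i}" for i in range(8)]
start = time.perf_counter()
pages = fetch_all_concurrently(urls, fake_fetch, max_workers=4)
elapsed = time.perf_counter() - start
print(len(pages), f"{elapsed:.2f}s")
```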

Can web scraping techniques be used for web content analysis?

Yes, web scraping techniques are highly useful for web content analysis. By scraping relevant HTML elements, you can gather text data, images, and metadata, which can then be analyzed to gain insights about trends, sentiment, and more from the scraped articles.
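
Metadata and image references can be collected alongside text. This standard-library sketch gathers the page `<title>`, `<meta>` tags keyed by `name` or `property` (the latter is how Open Graph tags such as `og:type` are declared), and `<img>` sources. The sample page is hypothetical.

```python
from html.parser import HTMLParser


class MetadataParser(HTMLParser):
    """Collect the <title>, <meta> name/property -> content pairs, and <img> sources."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self.images = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            key = attrs.get("name") or attrs.get("property")
            if key and "content" in attrs:
                self.meta[key] = attrs["content"]
        elif tag == "img" and attrs.get("src"):
            self.images.append(attrs["src"])

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


page = """<html><head>
<title>Battery costs keep falling</title>
<meta name="description" content="Quarterly market update">
<meta property="og:type" content="article">
</head><body><img src="/img/chart.png" alt="Chart"><p>Text</p></body></html>"""

parser = MetadataParser()
parser.feed(page)
print(parser.title, parser.meta, parser.images)
```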

What are the legal considerations when using web scraping techniques?

While web scraping techniques can be powerful, it's important to be aware of the legal considerations. Always check the website's terms of service, respect copyright law, and comply with applicable data protection laws, such as the GDPR in the EU or the Data Protection Act in the UK, when extracting data.

How do I perform HTML content analysis after scraping?

After scraping the HTML content, you can perform analysis using natural language processing (NLP) tools to extract insights from the text, such as summarization and sentiment analysis. Utilizing libraries like NLTK or spaCy in tandem with scraped articles enhances your HTML content analysis capabilities.

What tools can I use for web scraping and data extraction?

Popular tools for web scraping and data extraction include BeautifulSoup, Scrapy, Selenium, and Octoparse. Each of these offers unique features to help you efficiently scrape content and implement various data extraction techniques.

Is it necessary to use proxies for effective web scraping?

Proxies can be crucial for effective web scraping, especially when you send a large number of requests and want to avoid being blocked by a website. They distribute requests across IP addresses and add a degree of anonymity to your data extraction.

How can I handle CAPTCHA challenges during web scraping?

To handle CAPTCHA challenges during web scraping, you can use a CAPTCHA-solving service or a browser automation tool like Selenium, which drives a real browser and so behaves more like a human visitor. Keep in mind that a CAPTCHA is a deliberate signal that a site wants to limit automated access, so circumventing it may breach the site's terms of service.

| Key Points | Details |
|---|---|
| Website Scraping Techniques | Techniques and tips for extracting data and content from websites. |
| Limitations of Access | Some websites, such as nytimes.com, may restrict access, which limits what can be scraped. |
| Required Content | Effective scraping requires access to the page's HTML content or to the specific details you want to extract. |

Summary

Website scraping techniques are essential for businesses and researchers looking to extract valuable data from online resources. These methods include using automated tools and learning how to navigate HTML structures to fetch the necessary information. Despite challenges like restricted access, understanding the fundamentals of scraping can empower users to gather insights from various websites effectively.