Web Scraping: Understanding Ethical Guidelines and Tools

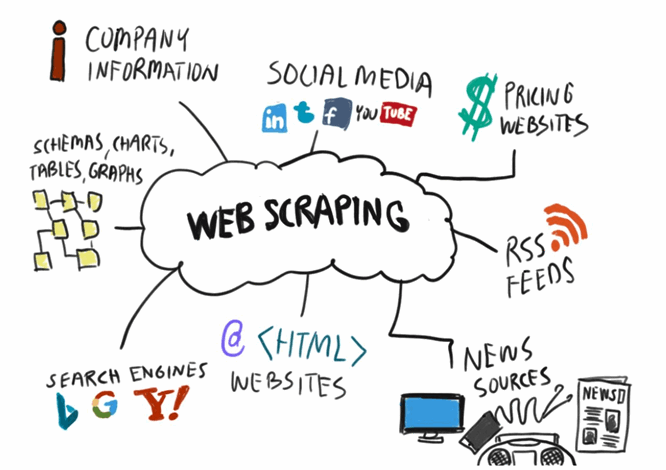

Web scraping is a technique for collecting data from websites automatically. With the right tools, users can automate the extraction of information and turn raw web content into actionable insights. Ethical web scraping is gaining importance because it keeps data collection within legal limits while minimizing potential risks. Techniques range from parsing a page’s HTML DOM to calling a site’s API where one exists, so users can tailor their extraction methods to specific needs. Professional web scraping services have also made it easier for organizations to use web data for competitive advantage.

Data harvesting from online sources, often called web data extraction, has become a cornerstone of modern digital strategies. It covers a range of methods for collecting relevant information from websites so that users can analyze trends and make informed decisions. As more businesses adopt data-driven approaches, understanding what makes data retrieval ethical becomes crucial for maintaining integrity. The techniques themselves, such as bots and scraping algorithms, keep evolving as web architectures change, and dedicated scraping services have helped companies improve operational efficiency by giving them access to a wealth of online data.

Understanding Web Scraping

Web scraping is a powerful data extraction technique that allows individuals and organizations to gather information from websites. This process involves using bots or automated scripts to systematically fetch content from web pages, making it easier to analyze large sets of data. Unlike traditional data collection methods, web scraping provides quick access to substantial amounts of information, which can be beneficial for market research, content aggregation, and competitive analysis.
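The fetch-then-parse cycle described above can be sketched with Python’s standard library alone. This is a minimal illustration, not a production crawler: the bot name and contact address in the `User-Agent` header are hypothetical placeholders, and only the parsing step is exercised here, on an inline HTML string, so the example runs without network access.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import Request, urlopen

# Hypothetical identification string; use your own bot name and contact.
USER_AGENT = "example-research-bot/0.1 (+mailto:contact@example.com)"


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from every <a href="..."> tag in a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links such as "/about" against the page URL.
                self.links.append(urljoin(self.base_url, href))


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page and decode it, falling back to UTF-8 if no charset is declared."""
    request = Request(url, headers={"User-Agent": USER_AGENT})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def extract_links(html: str, base_url: str) -> list[str]:
    """Parse HTML text and return the links it contains, in document order."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


sample = '<p><a href="/about">About</a> <a href="https://example.org/x">X</a></p>'
print(extract_links(sample, "https://example.com/index.html"))
# ['https://example.com/about', 'https://example.org/x']
```

Against a live site you would pass the result of `fetch_html(url)` to `extract_links`, ideally after checking that the site permits it.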

However, it is essential to comprehend the legal and ethical considerations surrounding web scraping. Many websites have policies or terms of service that explicitly prohibit automated data extraction. Therefore, understanding the rules regarding data use, including fair use and copyright issues, is crucial for ethical web scraping. Developing a solid awareness of these principles ensures that your scraping efforts align with legal standards and respect the rights of content creators.

Popular Web Scraping Tools

There are many web scraping tools, each suited to different needs and technical backgrounds. For developers, Beautiful Soup parses HTML and XML documents, Scrapy is a full crawling framework that handles requests, scheduling, and data pipelines, and Selenium automates a real browser, which helps with pages that are rendered by JavaScript. These tools let developers navigate and parse HTML so they can focus on the information that matters. For non-technical users, point-and-click applications such as ParseHub and Octoparse make scraping possible without extensive coding knowledge.
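With Beautiful Soup, a class-based lookup is typically written as `BeautifulSoup(html, "html.parser").find_all(class_="price")`. For readers without third-party packages, the sketch below approximates that lookup with the standard library’s `html.parser`. The class name `price` and the sample markup are assumptions for illustration, and the parser assumes reasonably well-formed HTML: an unclosed non-void tag inside a match will throw off its depth counter.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect nesting depth.
VOID_ELEMENTS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
                 "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect the text of every element whose class list contains target_class."""

    def __init__(self, target_class: str) -> None:
        super().__init__()
        self.target_class = target_class
        self.results: list[str] = []
        self._depth = 0           # > 0 while inside a matching element
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_ELEMENTS:
            return
        if self._depth:
            self._depth += 1      # a child tag inside the current match
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in VOID_ELEMENTS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:
            # Join text fragments and collapse runs of whitespace.
            self.results.append(" ".join("".join(self._buffer).split()))

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def find_text_by_class(html: str, css_class: str) -> list[str]:
    parser = ClassTextExtractor(css_class)
    parser.feed(html)
    parser.close()
    return parser.results


page = ('<div class="item"><span class="price sale">$19.99</span><br>'
        '<span class="price"> $5 <b>off</b></span></div>')
print(find_text_by_class(page, "price"))  # ['$19.99', '$5 off']
```

Nested elements that carry the same class are folded into the outer match, which mirrors how most scrapers treat a container and its children.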

Alongside these tools, specialized web scraping services offer fully managed solutions for businesses that need to extract data at scale. These services often include expertise in data cleaning and analysis, helping ensure that the gathered data is not only accurate but also actionable. As demand for data-driven insights grows, robust web scraping tools and services can give businesses a competitive advantage.

Don’t forget to evaluate the scalability and performance of different tools for more extensive projects. Performance metrics like speed, extraction accuracy, and the ability to handle dynamic content can significantly impact the success of your data extraction strategy.

Ethical Web Scraping Practices

Adopting ethical web scraping practices means respecting the boundaries set by website owners and understanding the legal landscape. One critical step is to review a website’s robots.txt file, which tells crawlers which paths they may and may not visit. The Robots Exclusion Protocol behind this file (standardized as RFC 9309) is a voluntary convention rather than an access-control mechanism, so honoring it does not by itself guarantee legal compliance. Adhering to it does, however, reduce the risk of disputes and helps maintain a mutually beneficial relationship with content providers.
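Python’s standard library ships a robots.txt parser, `urllib.robotparser.RobotFileParser`. The sketch below feeds it a hypothetical robots.txt string so that it runs offline; against a real site you would call `set_url()` and `read()` instead of `parse()`. The bot name `example-research-bot` is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; against a live site use rp.set_url(...) then rp.read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-research-bot"  # placeholder bot name


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a RobotFileParser."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


rules = load_rules(SAMPLE_ROBOTS_TXT)
print(rules.can_fetch(USER_AGENT, "https://example.com/products/1"))   # True
print(rules.can_fetch(USER_AGENT, "https://example.com/private/data"))  # False
print(rules.crawl_delay(USER_AGENT))                                    # 5
```

A scraper would check `can_fetch` before every request and, where a `Crawl-delay` is declared, wait at least that many seconds between requests to the site.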

Moreover, ethical scraping entails avoiding excessive requests within a short timeframe, which can overload a website’s server. Implementing techniques like rate limiting is vital to mitigate any potential disruptions to the site’s operation. Additionally, always attribute data to its original source when possible, which not only respects intellectual property but also preserves the integrity of the information you collect.
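Rate limiting can be as simple as enforcing a minimum gap between consecutive requests. The class below is a minimal sketch under that assumption (a single global interval, not per-host limits or token buckets). The clock and sleep functions are injectable so that its behavior can be checked without actually waiting.

```python
import time
from typing import Callable, Optional


class RateLimiter:
    """Enforce a minimum interval, in seconds, between consecutive requests."""

    def __init__(self, min_interval: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None   # time the previous request was allowed

    def wait(self) -> float:
        """Sleep until the next request is allowed; return the seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self._last + self.min_interval - now)
        if delay > 0:
            self._sleep(delay)
        self._last = now + delay
        return delay


limiter = RateLimiter(min_interval=2.0)  # at most one request every 2 seconds
```

In a scraping loop you would call `limiter.wait()` immediately before each request. Keeping one limiter per domain is a natural extension when a single crawler visits several sites.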

Web Scraping Techniques for Success

When embarking on a web scraping project, it’s essential to select the right techniques based on your specific goals and the structure of your target websites. Common techniques include using HTML parsing libraries to extract relevant information, leveraging APIs when available, and employing headless browsers to interact with sites that require JavaScript for rendering content. Each technique has its advantages and drawbacks, and choosing the correct one is vital for efficient data extraction.

For example, using APIs can often yield cleaner and more structured data since they provide data in a readily usable format. On the other hand, HTML parsing is necessary when APIs are not available, allowing scrapers to navigate site structures directly. Combining multiple techniques can enhance your scraping efficiency and result in high-quality data outputs, tailored to meet the analytical needs of your project.
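When a site offers a JSON API, the extraction step often reduces to decoding the response. The endpoint `https://api.example.com/v1/products`, its `category` and `page` query parameters, and the `items`, `name`, and `price` fields below are all hypothetical; a real API documents its own URL scheme and response shape.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API_BASE = "https://api.example.com/v1/products"  # hypothetical endpoint


def build_api_url(category: str, page: int = 1) -> str:
    """Build a query URL; parameter names are assumptions about this imaginary API."""
    return f"{API_BASE}?{urlencode({'category': category, 'page': page})}"


def parse_products(payload: str) -> list[dict]:
    """Flatten a JSON response into records; the field names are assumed."""
    data = json.loads(payload)
    return [{"name": item["name"], "price": float(item["price"])}
            for item in data.get("items", [])]


def fetch_products(category: str, page: int = 1) -> list[dict]:
    """Request one page of results and parse it (not called in this sketch)."""
    request = Request(build_api_url(category, page),
                      headers={"Accept": "application/json"})
    with urlopen(request, timeout=10) as response:
        return parse_products(response.read().decode("utf-8"))


sample = '{"items": [{"name": "Blue Widget", "price": "9.50"}]}'
print(parse_products(sample))  # [{'name': 'Blue Widget', 'price': 9.5}]
```

Compared with HTML parsing, there are no selectors to maintain here: the structure comes from the API contract, which is why API data tends to be cleaner.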

Web Scraping Services: What to Consider

When considering a web scraping service, evaluate the factors that determine the effectiveness, reliability, and legality of its data extraction process. Assess the service’s compliance with legal standards, including its approach to ethical scraping. A reputable service should be transparent about its methods and data sourcing practices, so you can confirm that it follows applicable laws and industry regulations.

Additionally, analyzing the pricing models and scalability options of the web scraping service is essential. Some services may charge based on the volume of data scraped, while others might offer subscription-based pricing. Understanding your needs and potential growth will help in selecting the best service that can adapt to evolving data requirements without sacrificing performance or quality.

Data Extraction Strategies for Businesses

Data extraction is a critical component for businesses looking to gain insights and drive decision-making. By implementing effective web scraping strategies, organizations can continuously gather and analyze large volumes of data from various online sources. This can include competitor pricing, market trends, customer feedback, and more. With proper data extraction techniques, businesses can stay ahead in their industry by making informed, agile decisions.

Moreover, combining web scraping tools with data mining can deepen the insights gathered. Together, they let businesses not only collect data but also analyze patterns and derive actionable insights that shape strategy and operations. Integrating these strategies well helps businesses maintain a competitive edge in today’s data-driven environment.
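As a small example of turning repeated scrapes into competitor-pricing insight, the function below compares two snapshots of prices keyed by product ID and reports what changed, appeared, or disappeared. The snapshot format (a dict mapping product ID to price) is an assumption made for illustration.

```python
from typing import Dict, List, Optional, Tuple

# (product_id, old_price, new_price, percent_change or None if old price was 0)
Change = Tuple[str, float, float, Optional[float]]


def compare_snapshots(old: Dict[str, float], new: Dict[str, float]) -> Dict[str, list]:
    """Diff two price snapshots taken at different times."""
    changed: List[Change] = []
    for pid in sorted(old.keys() & new.keys()):
        before, after = old[pid], new[pid]
        if before != after:
            pct = None if before == 0 else round((after - before) / before * 100, 1)
            changed.append((pid, before, after, pct))
    return {
        "changed": changed,
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
    }


monday = {"a": 10.0, "b": 20.0, "c": 5.0}
tuesday = {"a": 12.0, "b": 20.0, "d": 7.0}
print(compare_snapshots(monday, tuesday))
```

Run on a schedule, a diff like this turns raw scraped prices into a change log that analysts can chart or alert on.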

Challenges in Web Scraping

Despite the advantages of web scraping, several challenges can hinder the data extraction process. One significant obstacle is the use of anti-scraping technologies by websites, such as CAPTCHAs and IP blocking, which are designed to prevent automated access. Scrapers may need to develop advanced techniques to circumvent these barriers, which can add complexity and require continual adjustments as websites evolve.
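Not every barrier calls for a workaround. An HTTP 429 (Too Many Requests) or 503 (Service Unavailable) response is the server asking clients to slow down, and the respectful reaction is to wait, honoring the `Retry-After` header when it is present. The sketch below assumes that policy; the 60-second cap, the retry count, and the choice of exponential backoff with random jitter are illustrative defaults, not values required by any particular site.

```python
import random
import time
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional
from urllib.error import HTTPError
from urllib.request import urlopen

RETRYABLE = {429, 503}   # Too Many Requests, Service Unavailable


def retry_delay(attempt: int, retry_after: Optional[str] = None,
                base: float = 1.0, cap: float = 60.0) -> float:
    """Seconds to wait before retry number `attempt` (0-based)."""
    if retry_after:
        value = retry_after.strip()
        if value.isdigit():                      # Retry-After: <seconds>
            return min(cap, float(value))
        try:                                     # Retry-After: <HTTP-date>
            when = parsedate_to_datetime(value)
            wait = (when - datetime.now(timezone.utc)).total_seconds()
            return min(cap, max(0.0, wait))
        except (TypeError, ValueError):
            pass                                 # unparseable: fall back below
    # Exponential backoff with "full jitter": uniform in [0, base * 2**attempt].
    return random.uniform(0.0, min(cap, base * 2 ** attempt))


def fetch_with_backoff(url: str, max_attempts: int = 5) -> bytes:
    """Fetch a URL, backing off on 429/503 and re-raising any other HTTP error."""
    for attempt in range(max_attempts):
        try:
            with urlopen(url, timeout=10) as response:
                return response.read()
        except HTTPError as err:
            if err.code not in RETRYABLE or attempt == max_attempts - 1:
                raise
            time.sleep(retry_delay(attempt, err.headers.get("Retry-After")))
    raise ValueError("max_attempts must be at least 1")
```

Backing off this way addresses rate-based blocking without evading it; CAPTCHAs and outright IP bans are a signal to revisit whether the site wants to be scraped at all.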

Additionally, website structure can change frequently, which may break scripts or methods used for scraping. This necessitates ongoing maintenance and updates to scraping configurations to ensure accuracy and reliability in data collection. Adapting to these challenges is part of the web scraping process, and being proactive in implementing solutions will facilitate smoother operations.
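One practical safeguard is to validate every scraped record against the fields you expect, so that a layout change shows up as a loud failure rather than as silently empty data. The required field names and the 10% failure threshold below are hypothetical.

```python
from typing import Dict, List, Optional, Sequence

REQUIRED_FIELDS = ("title", "price", "url")  # hypothetical schema


def validate_record(record: Dict[str, Optional[str]]) -> List[str]:
    """Return a list of problems; an empty list means the record looks complete."""
    return [f"missing {field}" for field in REQUIRED_FIELDS
            if not (record.get(field) or "").strip()]


def check_batch(records: Sequence[Dict[str, Optional[str]]],
                max_failure_rate: float = 0.1) -> float:
    """Raise if too many records are incomplete, which often signals a layout change."""
    if not records:
        raise RuntimeError("no records extracted; the page layout may have changed")
    failures = sum(1 for record in records if validate_record(record))
    rate = failures / len(records)
    if rate > max_failure_rate:
        raise RuntimeError(f"{failures}/{len(records)} records failed validation")
    return rate
```

Running `check_batch` after every scrape turns a broken selector into an immediate error that someone can act on.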

The Future of Web Scraping

As technology continues to advance, the future of web scraping appears promising. Innovations in artificial intelligence and machine learning are paving the way for more sophisticated scraping tools capable of handling complex site structures and extracting data with higher accuracy. These advancements will not only enhance the efficiency of data collection but also improve the depth of analysis by enabling better interpretation of unstructured data.

Furthermore, as data privacy laws evolve globally, web scraping practices will likely adapt to meet regulatory requirements. Ethical web scraping and responsible data usage will become critical focal points for companies that rely on data for their operations. Organizations that prioritize compliance alongside effectiveness in their scraping efforts will be best positioned to thrive in an increasingly data-centric world.

Maximizing Data Utility Post-Scraping

Once data has been successfully scraped, the next step is to maximize its utility through effective analysis and visualization. Implementing data analysis tools can help transform raw data into meaningful insights that inform business decisions. Techniques such as data cleansing, normalization, and transformation can further enhance the quality of the collected data, making it more actionable.
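The cleansing and normalization steps mentioned above can be sketched with the standard library. The assumptions are stated in the comments: prices use `.` as the decimal separator and dates arrive as day/month/year; sites in other locales will need different rules.

```python
import unicodedata
from datetime import datetime
from decimal import Decimal, InvalidOperation
from typing import Optional


def clean_text(value: str) -> str:
    """Apply NFKC normalization (e.g. non-breaking space to space) and collapse whitespace."""
    return " ".join(unicodedata.normalize("NFKC", value).split())


def to_decimal(price_text: str) -> Optional[Decimal]:
    """'$1,299.00' -> Decimal('1299.00'); assumes '.' is the decimal separator."""
    digits = "".join(ch for ch in price_text if ch.isdigit() or ch in ".-")
    try:
        return Decimal(digits) if digits else None
    except InvalidOperation:
        return None


def to_iso_date(text: str, fmt: str = "%d/%m/%Y") -> Optional[str]:
    """'31/12/2024' -> '2024-12-31'; the day/month/year format is an assumption."""
    try:
        return datetime.strptime(text.strip(), fmt).date().isoformat()
    except ValueError:
        return None


print(clean_text("  Blue\u00a0Widget \n"), to_decimal("$1,299.00"), to_iso_date("31/12/2024"))
# Blue Widget 1299.00 2024-12-31
```

`Decimal` is used for prices because it keeps amounts like `0.10` exact, which matters once scraped prices are summed or compared.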

Moreover, visualizing data through dashboards and reports can enable stakeholders to easily understand trends and patterns. This not only aids in strategic planning but also improves communications across departments. The goal is to make the data user-friendly and insightful, allowing businesses to leverage their scraped information effectively.

Frequently Asked Questions

What is web scraping and how does it work?

Web scraping is the process of automatically extracting data from websites. It involves using web scraping tools to send requests to a webpage and retrieve the HTML content for parsing and data extraction. This method can be utilized for various purposes, including research, data analysis, and monitoring trends.

What are some popular web scraping tools available?

There are several popular web scraping tools that facilitate data extraction, including Beautiful Soup, Scrapy, and Octoparse. These tools provide robust solutions for both beginners and advanced users, enabling efficient web scraping while handling different website structures.

Is ethical web scraping important, and why?

Yes, ethical web scraping is crucial as it ensures compliance with legal guidelines and respect for website owners’ terms of service. Ethical web scraping practices involve checking for robots.txt files, avoiding overloading servers, and ensuring that the data collected is used responsibly.

What web scraping techniques can improve results?

Effective web scraping techniques include using headless browsers for dynamic content, employing regular expressions to pull patterns such as prices or dates out of extracted text, and adding delays between requests so that your scraper does not overload the server. Together, these techniques improve the accuracy and reliability of data extraction.
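Regular expressions work best on text that has already been pulled out of the HTML, rather than on raw markup. The pattern below is a hypothetical example that recognizes dollar amounts such as `$19.99`, `$1,299.00`, or `USD 5.50`; it deliberately ignores other currencies and formats.

```python
import re

# Hypothetical pattern: "$" or "USD" followed by an amount such as 19.99, 1,299.00 or 999.
PRICE_RE = re.compile(r"(?:\$|USD\s?)(\d+(?:,\d{3})*(?:\.\d{2})?)")


def find_prices(text: str) -> list[str]:
    """Return the numeric part of every price in already-extracted text, commas removed."""
    return [amount.replace(",", "") for amount in PRICE_RE.findall(text)]


print(find_prices("Was $1,299.00, now $999 or USD 5.50 with shipping"))
# ['1299.00', '999', '5.50']
```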

What are web scraping services and when should I use them?

Web scraping services are professional solutions that assist businesses in gathering large datasets from the web without the need for manual coding. They are ideal for companies needing bulk data extraction, market analysis, or competitive intelligence without investing heavily in in-house scraping solutions.

How can I ensure data accuracy with web scraping?

To ensure data accuracy with web scraping, it’s essential to validate and clean the extracted data. Implement techniques such as data deduplication, format standardization, and cross-referencing against reliable sources to enhance the integrity of the data collected.
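Deduplication usually comes down to choosing a key and normalizing it before comparison. The sketch below keeps the first record for each key after collapsing whitespace and ignoring case; which fields form the key is up to you (the `title` field in the example is an assumption). Case-folding suits names and titles but not URLs, whose paths can be case-sensitive.

```python
from typing import Dict, Iterable, List, Optional, Sequence


def dedupe(records: Iterable[Dict[str, Optional[str]]],
           key_fields: Sequence[str]) -> List[Dict[str, Optional[str]]]:
    """Keep the first record for each key, ignoring case and extra whitespace in the key."""
    seen = set()
    unique = []
    for record in records:
        key = tuple(" ".join((record.get(field) or "").split()).lower()
                    for field in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


rows = [
    {"title": "Blue Widget", "price": "9.50"},
    {"title": "  blue   widget ", "price": "9.50"},
    {"title": "Red Widget", "price": "12.00"},
]
print([row["title"] for row in dedupe(rows, key_fields=("title",))])
# ['Blue Widget', 'Red Widget']
```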

Can web scraping be done without programming knowledge?

Yes, many user-friendly web scraping tools offer no-code solutions that allow users to scrape data without any programming skills. Tools like ParseHub and DataMiner provide intuitive interfaces for users to set up scraping workflows easily.

What legal considerations should I keep in mind with web scraping?

While web scraping can be legal, you should be aware of copyright law and the terms of service of the websites you intend to scrape. Review a site’s robots.txt file to see which paths its operator asks crawlers to avoid, and consider seeking legal advice if you are unsure about compliance.

What types of data can be extracted through web scraping?

A wide range of data types can be extracted through web scraping, including textual content, product prices, user reviews, and social media data. The versatility of web scraping tools allows users to target specific data points that meet their analytical requirements.

How does web scraping differ from API data extraction?

Web scraping involves extracting data directly from the HTML of a webpage, whereas API data extraction utilizes a structured interface provided by the website. While APIs often offer more reliable and extensive access to data, web scraping can be useful when APIs are unavailable.

| Key Point | Description |
|---|---|
| Web Scraping | Web scraping is the process of using bots to extract content and data from websites. |
| Legal Considerations | Not all websites permit scraping, and some may have protections in place. |
| Technical Challenges | Websites like nytimes.com often have sophisticated structures and restrictions that complicate scraping efforts. |
| Ethical Practices | Respect for website terms of service is crucial when considering web scraping. |

Summary

Web scraping is a powerful tool for gathering online data, but it’s essential to navigate legal and ethical considerations carefully. Websites, such as nytimes.com, may restrict access to their content, making web scraping a challenging endeavor. Understanding these dynamics is vital for anyone looking to engage in effective and responsible web scraping.