Web Scraping Techniques: Understanding the Basics

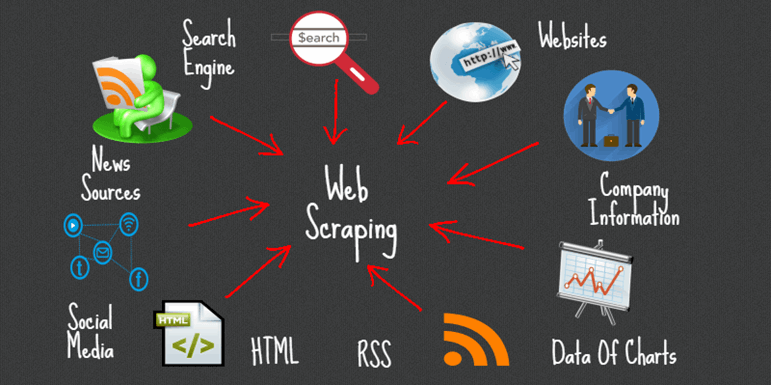

Web scraping techniques have transformed how we gather and analyze data from the internet. With effective data extraction methods, individuals and businesses can pull valuable information out of web pages, turning raw HTML into structured datasets. This guide covers the main scraping tools and practices, with an eye on accurate and efficient results. Whether the goal is market research, price comparison, or academic work, mastering these techniques can unlock a wealth of insights. Below, we walk through both the fundamentals and the harder parts of web scraping.

In the broader field of digital data collection, approaches such as information retrieval and automated data gathering are used to mine useful insights from websites. Often called data harvesting or content extraction, these methods convert unstructured information into organized formats suitable for analysis. With scraping software and automated scripts, you can navigate the web efficiently and collect the data you need for research or business intelligence. The sections below explain how these approaches fit together and how to apply them in your own data projects.

Understanding Web Scraping Techniques

Web scraping is the practice of extracting data from websites and transforming it into a more usable format. Techniques range from simple methods, such as browser plugins, to programming solutions that involve writing scripts in languages like Python or Java. Knowing the options lets developers and data enthusiasts choose the approach that best fits their specific data extraction needs.

One of the most widely used techniques is HTML extraction: retrieving the raw HTML of a web page and parsing it to locate the specific data elements you need. The process usually starts with an HTTP request to the server, which returns the page’s HTML. A parser then turns that markup into a tree, the Document Object Model (DOM), which the scraper navigates to find and extract the desired information. Mastering HTML extraction is essential for anyone who wants to go deeper into web scraping, because it is the foundation for more advanced data extraction methods.
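The request-then-parse flow described above can be sketched with Python’s standard library alone. This is a minimal illustration, not a production scraper: it assumes the page is served as plain HTML (no JavaScript rendering), uses a placeholder User-Agent string, and relies on html.parser, which reports tags as a stream of events rather than building a full DOM tree the way BeautifulSoup does.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class LinkAndTitleParser(HTMLParser):
    """Collects the page <title> text and every <a href> while walking the markup."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def fetch_html(url, timeout=10):
    """Step 1: send an HTTP GET request and return the response body as text."""
    # "example-scraper/0.1" is a placeholder; identify your own scraper here.
    req = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def extract(html):
    """Step 2: parse the HTML and pull out the elements we care about."""
    parser = LinkAndTitleParser()
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "links": parser.links}
```

Calling extract(fetch_html("https://example.com/")) would return the page title and a list of link targets; the URL is only a placeholder.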

Essential Scraping Tools for Effective Data Extraction

The landscape of web scraping tools is vast, with options tailored to different use cases and skill levels. For beginners, tools such as Octoparse and ParseHub simplify the process with visual interfaces that don’t require programming skills. They typically offer point-and-click data selection and scheduled extraction, so users can gather information from multiple sources with minimal effort.

Advanced users, on the other hand, often prefer Python tools such as BeautifulSoup, a library for parsing HTML and XML, or Scrapy, a full crawling framework. These give far more control over the scraping process and support custom scripts for complex tasks. Scrapy, for example, can submit web forms and manages cookies across requests, and with additional code either tool can be adapted to cope with some anti-scraping measures. Integrating these tools into a broader scraping strategy can significantly improve efficiency, especially when extracting large volumes of data.
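Cookie handling and form submission are easiest to understand at a low level. The sketch below uses only Python’s standard library (http.cookiejar and urllib), not BeautifulSoup or Scrapy, so read it as an illustration of what those tools handle for you rather than as their API. The search URL and form field names are hypothetical.

```python
from http.cookiejar import CookieJar
from urllib.parse import urlencode
from urllib.request import HTTPCookieProcessor, Request, build_opener


def make_session():
    """Return an opener that stores cookies set by the server and resends them later."""
    jar = CookieJar()
    opener = build_opener(HTTPCookieProcessor(jar))
    return opener, jar


def build_form_request(url, fields):
    """Encode form fields the way a browser submits a standard HTML form."""
    body = urlencode(fields).encode("ascii")  # e.g. {"q": "web scraping"} -> b"q=web+scraping"
    return Request(
        url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
```

A hypothetical call would be opener.open(build_form_request("https://example.com/search", {"q": "web scraping"})). Any cookies the server sets are stored in jar and sent back automatically on later requests made through the same opener.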

Key Considerations for Responsible Content Scraping

When scraping content, consider the ethical implications and legal restrictions involved. Websites set different policies on data usage, usually spelled out in their terms of service, and a responsible scraper follows them to avoid legal trouble or IP bans. It also pays to check a site’s robots.txt file, which tells automated crawlers which parts of the site they may and may not access.
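Python’s standard library can read robots.txt directives through urllib.robotparser. The sketch below parses rules that have already been downloaded (RobotFileParser.read() can fetch them for you instead). The domain and the scraper name are placeholders.

```python
from urllib.robotparser import RobotFileParser


def load_rules(robots_txt, robots_url="https://example.com/robots.txt"):
    """Build a RobotFileParser from robots.txt text that has already been downloaded."""
    parser = RobotFileParser(robots_url)
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser, url, user_agent="example-scraper"):
    """True if the parsed rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)
```

If a site declares a Crawl-delay, parser.crawl_delay(user_agent) returns it, which is a natural input for rate limiting.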

Scraping should also be done respectfully, without overloading servers with too many requests in a short period. A common practice is rate limiting: spacing requests out so that your scraper puts no more load on the server than a human visitor would. Prioritizing responsible scraping ensures your data extraction does not harm the sources you depend on, which keeps your scraping sustainable over time.
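Rate limiting can be as simple as enforcing a minimum gap between requests. The class below is a minimal sketch. The clock and sleep functions are injectable only so the behavior can be tested without real waiting.

```python
import time


class RateLimiter:
    """Enforces a minimum gap, in seconds, between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, None before the first one

    def wait(self):
        """Block until at least min_interval seconds have passed since the previous call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

In a scraping loop you would create one limiter, for example RateLimiter(2.0), and call limiter.wait() before each request. The two-second interval is an arbitrary example; a Crawl-delay from robots.txt is a better source when the site provides one.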

Benefits of Using LSI in Web Scraping

Latent Semantic Indexing (LSI) is not a scraping technique in itself, but it can add considerable value once the data has been collected. LSI applies a truncated singular value decomposition to a term-document matrix built from the scraped text, so documents with related vocabulary end up close together even when they do not share exact keywords. This surfaces related concepts that simple keyword searches would miss, giving a more complete picture of the scraped content and deeper insight into niche topics around your central subject.

For instance, applying LSI to a corpus of scraped articles can place pages about ‘data extraction methods’ near pages about ‘content scraping’ even when they are worded differently. That helps when filtering, grouping, or ranking the captured data by relevance. This sensitivity to variation in language makes LSI especially useful when analyzing large scraped datasets for marketing or research purposes.
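The core of LSI fits in a few lines of linear algebra. In the standard formulation, X is an m × n term-document matrix built from the scraped pages (m terms, n documents, with raw counts or tf-idf weights as entries), x_j is its j-th column, e_j is the j-th standard basis vector, and k is a small number of latent concepts chosen by the analyst. Projecting documents and a query vector q onto the top k left singular vectors U_k places them in the same concept space, where cosine similarity measures relatedness:

```latex
\begin{aligned}
X &= U \Sigma V^{\top} \\
X_k &= U_k \Sigma_k V_k^{\top} \\
\hat{d}_j &= U_k^{\top} x_j = \Sigma_k V_k^{\top} e_j \\
\hat{q} &= U_k^{\top} q \\
\operatorname{sim}(q, x_j) &= \frac{\hat{q}^{\top} \hat{d}_j}{\lVert \hat{q} \rVert \, \lVert \hat{d}_j \rVert}
\end{aligned}
```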

How to Extract Data with HTML and CSS Selectors

HTML and CSS selectors are fundamental to web scraping because they let you pinpoint the exact elements on a page that hold the data you want. With well-written selectors, a scraper can move through the HTML structure and extract text, images, links, and other elements while skipping irrelevant markup, which greatly streamlines data extraction.

Libraries like BeautifulSoup support CSS selectors through their select() and select_one() methods, so scrapers can filter out specific data points efficiently. Selectors can target tag names, classes, ids, or other attributes, letting a scraper collect exactly the elements that match its criteria. Understanding HTML structure and CSS selectors is therefore key to efficient data extraction.
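Here is what CSS selectors look like in practice with BeautifulSoup. The sketch assumes the beautifulsoup4 package is installed and uses Python’s built-in "html.parser" backend. The product markup, class names, and data-sku attribute are invented for illustration.

```python
from bs4 import BeautifulSoup

SAMPLE_HTML = """
<div id="catalog">
  <div class="product" data-sku="A1">
    <h2 class="name">Desk Lamp</h2>
    <span class="price">19.99</span>
    <a href="/p/a1">details</a>
  </div>
  <div class="product" data-sku="B2">
    <h2 class="name">Office Chair</h2>
    <span class="price">89.00</span>
    <a href="/p/b2">details</a>
  </div>
</div>
"""


def extract_products(html):
    soup = BeautifulSoup(html, "html.parser")
    products = []
    # "#catalog .product": elements with class "product" inside the element with id "catalog"
    for card in soup.select("#catalog .product"):
        products.append({
            "sku": card["data-sku"],  # read an attribute of the matched tag
            "name": card.select_one("h2.name").get_text(strip=True),  # tag + class
            "price": float(card.select_one("span.price").get_text(strip=True)),
            "url": card.select_one("a[href]")["href"],  # attribute selector
        })
    return products
```

The selectors mirror the targets named above: #catalog matches an id, .product and h2.name match classes, and a[href] matches an attribute.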

Automating Data Extraction with Web Scraping Scripts

Automation is a game-changer in the realm of web scraping, allowing users to set up scripts that handle the data extraction process with little to no manual intervention. This not only saves time but also ensures that data can be gathered on a consistent basis, which is essential for businesses relying on real-time data for decision-making. By writing effective scraping scripts, users can programmatically navigate through websites, gather information, and store it in structured formats like CSV or JSON.
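Storing scraped records as CSV or JSON needs only the standard library. This is a minimal sketch that assumes each record is a flat dictionary with the same keys. The field names used with it are hypothetical.

```python
import csv
import io
import json


def records_to_csv(records, fieldnames):
    """Serialize a list of flat dicts to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def records_to_json(records):
    """Serialize the same records as a pretty-printed JSON array."""
    return json.dumps(records, ensure_ascii=False, indent=2)
```

To save to disk, write the returned text to a file. For CSV, open the file with newline="" so the csv module’s line endings are kept exactly as written.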

Automation can also help with some of the challenges posed by anti-scraping technologies. A well-structured script can behave more like a legitimate user, for example by randomizing request intervals or rotating user agents, which makes the scraper more robust and less likely to be blocked. Proficiency in automation is therefore vital for anyone who wants to get the most out of web scraping.

Navigating Challenges in Web Scraping

Despite the various tools and techniques available, web scraping can come with its fair share of challenges. Websites may employ numerous strategies to prevent scraping, such as CAPTCHAs, dynamic content loads, or blocking IP addresses after a certain number of requests. Scrapers must develop strategies to handle these challenges effectively without violating web scraping ethics or the site’s terms of service.
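One challenge you can handle without crossing ethical lines is a server temporarily refusing requests, typically with HTTP 429 (Too Many Requests) or a 5xx error. The sketch below retries with exponential backoff and honors a numeric Retry-After header. It does not attempt to solve CAPTCHAs or evade blocks. The fetch argument is a hypothetical callable, for example a wrapper around urllib.request.urlopen, that returns the page body or raises urllib.error.HTTPError.

```python
import time
from urllib.error import HTTPError

# Status codes that usually mean "try again later" rather than "go away".
RETRYABLE = {429, 500, 502, 503, 504}


def fetch_with_retries(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), backing off exponentially on retryable HTTP errors."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except HTTPError as err:
            if err.code not in RETRYABLE or attempt == max_attempts:
                raise  # permanent error, or out of attempts
            retry_after = err.headers.get("Retry-After") if err.headers else None
            if retry_after and retry_after.isdigit():
                delay = float(retry_after)  # the server told us how long to wait
            else:
                delay = base_delay * 2 ** (attempt - 1)  # 1s, 2s, 4s, ...
            sleep(delay)
```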

Moreover, the dynamic nature of websites means that HTML structures can change frequently, which could lead to scrapers failing to retrieve the desired data if the scripts are not regularly updated. This necessity for maintenance adds another layer of complexity to web scraping projects. Overcoming these obstacles requires staying informed about new developments in web technologies and continuously refining scraping strategies.
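A practical defense against silent breakage is to validate each batch of extracted records and raise an alarm when required fields start coming back empty, which usually means the site’s HTML has changed. This is a minimal sketch. The required field names and the 20% threshold are assumptions you would adapt to your own data.

```python
import logging

log = logging.getLogger("scraper")

REQUIRED_FIELDS = ("name", "price", "url")  # hypothetical schema for one scraped item


def check_records(records, required=REQUIRED_FIELDS, max_missing_ratio=0.2):
    """Return a list of problems; an empty list means the batch looks healthy."""
    if not records:
        problems = ["no records extracted; selectors may no longer match the page"]
    else:
        problems = []
        for field in required:
            # None or "" counts as missing; 0 is a legitimate value.
            missing = sum(1 for r in records if r.get(field) in (None, ""))
            if missing / len(records) > max_missing_ratio:
                problems.append(f"{field!r} missing in {missing}/{len(records)} records")
    for problem in problems:
        log.warning("possible site layout change: %s", problem)
    return problems
```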

Content Scraping vs. Data Scraping: Understanding the Difference

Many people use the terms content scraping and data scraping interchangeably, but they refer to different processes. Content scraping typically focuses on extracting media, text, or other types of content from specific web pages, often with the aim of republishing it elsewhere. This type of scraping can sometimes lead to issues related to copyright infringement if proper attribution is not provided.

Data scraping, on the other hand, extracts structured data (tables, figures, or even database entries) from websites for analysis and for purposes such as business intelligence or market analysis. The distinction matters because it shapes both the technical approach and the legal implications of a scraping project. Keeping it in mind steers scrapers toward ethical and responsible practices.
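Tables are a classic target of data scraping. The sketch below turns every HTML table on a page into rows of cell text using only the standard library’s html.parser. It is deliberately simple: it assumes well-formed markup with explicit closing tags and does not handle nested tables or colspan/rowspan.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects every <table> as a list of rows, each row a list of cell strings."""

    def __init__(self):
        super().__init__()
        self.tables = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None


def extract_tables(html):
    parser = TableParser()
    parser.feed(html)
    parser.close()
    return parser.tables
```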

Best Practices and Guidelines for Web Scraping

To ensure successful and legal web scraping, it is important to follow best practices and established guidelines. First, always respect the robots.txt file of any site you plan to scrape, as it outlines the permissions granted by the webmaster regarding web crawlers. Even if a site does not explicitly block scraping, it’s prudent to reach out to the site owner for permission, particularly if the data scraped will be used commercially.

In addition to ethical considerations, optimizing your scraping processes for efficiency is equally essential. This includes implementing efficient algorithms for navigating websites, employing error handling in case of request failures, and regularly reviewing and updating your scraping scripts to adapt to site changes. Following these guidelines helps build a positive reputation in the web scraping community while maximizing the quality and relevance of extracted data.

Frequently Asked Questions

What are the most common web scraping techniques used today?

The most common web scraping techniques include HTML extraction, where data is parsed directly from web pages, and content scraping, which collects text, media, and other content from specific pages. Other widely used data extraction methods include querying a site’s API and running web crawling tools that follow links across many pages.

How can I get started with web scraping?

Start by learning the core techniques: the Python library BeautifulSoup for HTML extraction and the Scrapy framework for crawling websites. If you prefer not to code, scraping tools such as Octoparse and ParseHub simplify the process with visual interfaces.

What scraping tools are available for beginners?

If you are comfortable writing a little Python, BeautifulSoup is a gentle starting point for HTML extraction, and Selenium can drive a real browser when a page needs JavaScript to render. If you would rather not code at all, ParseHub and Octoparse offer visual interfaces for content scraping that require little or no programming knowledge.

What is HTML extraction in web scraping?

HTML extraction in web scraping refers to the method of pulling data directly from the HTML structure of a web page. This technique involves parsing the HTML to find and extract specific elements, such as text or images, which can then be organized for further analysis.

What best practices should I follow for effective data extraction methods?

Effective data extraction methods involve following best practices such as respecting website terms of service, using user-agent headers to mimic browsers, handling pagination correctly, and implementing rate limiting to avoid overwhelming a server during your scraping activities.
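Handling pagination usually means a loop that follows “next page” links until none remain. This is a minimal sketch that assumes hypothetical fetch and parse_page callables you supply: fetch(url) returns HTML, and parse_page(html) returns the page’s items plus the next link’s href (or None). Relative links are resolved with urllib.parse.urljoin. A seen-set and a page cap guard against loops.

```python
from urllib.parse import urljoin


def crawl_pages(start_url, fetch, parse_page, max_pages=50):
    """Follow "next page" links until there are none or max_pages is reached."""
    url, seen, items = start_url, set(), []
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        page_items, next_href = parse_page(fetch(url))
        items.extend(page_items)
        # Resolve relative links such as "?page=2" against the current page URL.
        url = urljoin(url, next_href) if next_href else None
    return items
```

Calling a rate limiter and a robots.txt check inside fetch keeps the loop polite.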

Are there any legal considerations when using web scraping techniques?

Yes, there are important legal considerations when using web scraping techniques. Always check the website’s terms of service; unauthorized scraping can lead to legal issues. Be cautious with content scraping and ensure compliance with copyright laws and data protection regulations.

How can I automate web scraping tasks?

You can automate web scraping tasks with frameworks such as Scrapy (Python) or Puppeteer (Node.js). Neither is a scheduler by itself, so pair your scraper with one, such as cron on Linux or Task Scheduler on Windows. That way repetitive jobs run at set intervals and extract data consistently without manual intervention.

What challenges might I face when scraping content from dynamic websites?

Dynamic websites often load content with AJAX after the initial page load, so the data you want may be missing from the HTML the server first returns. Handling this usually means rendering the page in a headless browser driven by a tool such as Selenium. Anti-scraping measures such as CAPTCHAs and bot detection can also hinder your data extraction efforts.

| Key Point | Details |
|---|---|
| Website Restrictions | Certain websites, like nytimes.com, have restrictions against web scraping. |
| Web Scraping Techniques | There are various techniques available for scraping including using libraries like BeautifulSoup, Scrapy, and tools to parse HTML. |
| Legal Considerations | Always review a website’s terms of service before scraping to avoid legal issues. |

Summary

Web scraping techniques are essential tools for extracting data from websites, but it is crucial to stay within legal and ethical boundaries, especially on content-rich platforms like nytimes.com that restrict scraping. Understanding the available methods, such as using libraries for HTML parsing, lets you gather data effectively while adhering to each site’s restrictions. Used responsibly, these techniques let you tap the vast amount of information on the web while respecting the rights of content providers.