Web Scraping Techniques: Master the Art of Data Extraction

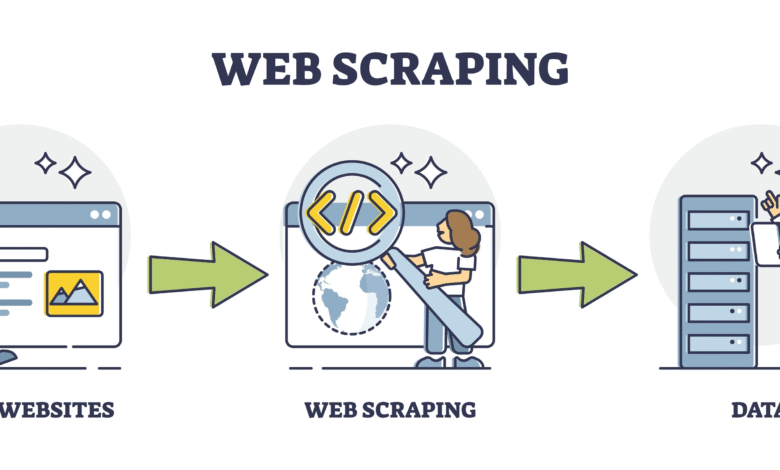

Web scraping techniques have revolutionized the way we extract valuable information from the vast expanse of the internet. In today’s data-driven world, mastering data scraping methods is crucial for businesses and enthusiasts alike, enabling them to gather insights efficiently. From learning how to scrape websites to utilizing various web scraping tools, there’s a wealth of options available for extracting data from websites. However, following the best practices for web scraping is essential to ensure legality and data accuracy. As more individuals and organizations recognize the importance of leveraging data, understanding these techniques can significantly enhance decision-making and strategic planning.

At its core, web data extraction turns unstructured page content into structured datasets. The methods for aggregating online content range from small automated scripts to full-featured software, and they continue to evolve. Knowing how to harvest web-based data supports informed decision-making and helps businesses stay competitive, and the right web data mining strategy can be applied across many sectors. Whether you call it web harvesting or data extraction, the goal is the same: to tap into the resources available online.

Understanding Web Scraping Techniques

Web scraping techniques are the methods used to extract data from websites. They range in complexity from simple HTML parsing to browser automation for JavaScript-driven pages. Where a site offers an API (Application Programming Interface), that is often a more reliable source than its HTML. By mastering different web scraping techniques, a user can collect and analyze data from a variety of online sources, which benefits businesses and researchers alike.

Common web scraping techniques include the use of libraries such as Beautiful Soup and Scrapy in Python, which enable users to navigate the Document Object Model (DOM) of a webpage and extract the desired information. These tools help in automating the extraction process, making it faster and more efficient, especially when dealing with large volumes of data from multiple websites.
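To make the idea of walking a page's element tree concrete, here is a minimal sketch that collects every link and its text from a snippet of HTML. It uses Python's built-in `html.parser` as a stand-in so that it runs without extra installs; Beautiful Soup and Scrapy offer far more convenient interfaces for the same job. The names `LinkCollector`, `extract_links`, and `SAMPLE_HTML` are illustrative assumptions, not part of any library.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect (href, link text) pairs from every <a> element that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> currently being read, if any
        self._text = []     # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html):
    """Parse an HTML string and return the links it contains."""
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


# Hypothetical page fragment used only for illustration.
SAMPLE_HTML = """
<ul>
  <li><a href="/articles/1">First article</a></li>
  <li><a href="/articles/2">Second <b>article</b></a></li>
</ul>
"""

links = extract_links(SAMPLE_HTML)
```

With Beautiful Soup installed, roughly the same result could be obtained by iterating over `soup.find_all("a")`, which is one reason such libraries are popular for larger projects.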

Best Practices for Web Scraping

When it comes to web scraping, adhering to best practices is essential for legal compliance and ethical conduct. It is crucial to respect the website’s robots.txt file, which outlines which parts of the site bots may access. Additionally, users should avoid overloading a server with requests; implementing rate limiting and reviewing the site’s terms of service are good ways to avoid potential penalties or legal repercussions.
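As a rough illustration of these two practices, the sketch below checks URLs against robots.txt rules with the standard library's `urllib.robotparser` and pauses between consecutive requests. The robots.txt content, the `example-bot` user agent, and the helper names `load_rules` and `polite_crawl` are assumptions made for the example. The `fetch` argument is any function you supply that downloads a URL.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A real scraper would download the file
# from the target site, for example with RobotFileParser.set_url() and .read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def load_rules(robots_text):
    """Build a rule set from the text of a robots.txt file."""
    rules = RobotFileParser()
    rules.parse(robots_text.splitlines())
    return rules


def polite_crawl(urls, fetch, rules, user_agent, default_delay=1.0):
    """Fetch only permitted URLs, sleeping between consecutive requests."""
    delay = rules.crawl_delay(user_agent)
    if delay is None:
        delay = default_delay  # the site did not request a specific delay
    results = {}
    for url in urls:
        if not rules.can_fetch(user_agent, url):
            continue  # disallowed by robots.txt, so skip it
        if results:
            time.sleep(delay)  # rate limit: wait before every request after the first
        results[url] = fetch(url)
    return results


rules = load_rules(ROBOTS_TXT)
```

Passing the download function in as `fetch` keeps the politeness logic separate from the HTTP client, so the same loop works whether pages are retrieved with Requests, `urllib`, or a test stub.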

Another best practice involves structuring the collected data efficiently for future use. By organizing the data into well-defined formats such as CSV or JSON, users can streamline the analysis process. Furthermore, utilizing error handling methods ensures that any anomalies during the scraping process are addressed promptly, thereby enhancing the integrity of the collected data.
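One possible way to combine structured output with error handling is sketched below: malformed records are set aside with the reason they failed, and the clean ones are serialized to CSV and JSON. The two-field schema (`name`, `price`) and the function names are assumptions chosen for illustration.

```python
import csv
import io
import json

FIELDS = ["name", "price"]  # hypothetical schema for the scraped records


def clean_records(raw_records):
    """Split records into valid ones and (record, reason) pairs for failures."""
    good, errors = [], []
    for record in raw_records:
        try:
            good.append({
                "name": record["name"].strip(),
                "price": float(record["price"]),
            })
        except (KeyError, ValueError, AttributeError, TypeError) as exc:
            errors.append((record, repr(exc)))
    return good, errors


def to_csv(records):
    """Serialize records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialize records to a JSON array."""
    return json.dumps(records, indent=2)


raw = [
    {"name": " Widget ", "price": "9.99"},
    {"name": "Gadget"},                  # missing price
    {"name": "Gizmo", "price": "n/a"},   # price is not a number
]
records, errors = clean_records(raw)
```

Keeping the rejected records alongside the reason for rejection makes it easier to spot when a site's layout has changed, rather than silently losing rows.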

Effective Data Extraction Strategies

Data extraction is a core component of web scraping, and employing effective strategies can significantly impact the quality of the results. One method is to use CSS selectors or XPath, which are powerful tools that allow precise targeting of elements within a webpage. Learning how to navigate and implement these strategies can dramatically improve the accuracy of the extracted data.
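The following sketch shows what precise targeting looks like with XPath-style expressions. It relies on the standard library's `xml.etree.ElementTree`, which supports only a limited XPath subset and requires well-formed markup, so treat it as a demonstration of the idea rather than a tool for real-world pages. Libraries such as lxml (full XPath) or Beautiful Soup (CSS selectors) are the usual choices in practice. The sample markup and the `product_prices` helper are assumptions.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed page fragment used only for illustration.
SAMPLE = """
<html><body>
  <div class="product"><h2>Widget</h2><span class="price">9.99</span></div>
  <div class="product"><h2>Gadget</h2><span class="price">24.50</span></div>
  <div class="ad"><span class="price">0.00</span></div>
</body></html>
"""


def product_prices(root):
    """Map each product name to its price, ignoring non-product blocks."""
    prices = {}
    # Select only <div> elements whose class attribute is exactly "product".
    for product in root.findall(".//div[@class='product']"):
        name = product.findtext("h2")
        price = product.findtext("span[@class='price']")
        prices[name] = float(price)
    return prices


root = ET.fromstring(SAMPLE)
prices = product_prices(root)
```

The predicate `[@class='product']` is what keeps the advertisement block out of the results. The equivalent CSS selector would be along the lines of `div.product > span.price`.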

Moreover, utilizing libraries like Selenium can be beneficial when dealing with dynamic content that is generated by JavaScript. This allows users to capture data that may not be present in the initial HTML of a webpage, ensuring a comprehensive data extraction process. Understanding these strategies and knowing when to apply them is key to successful data harvesting.

Using Web Scraping Tools for Efficiency

Numerous web scraping tools are available that can streamline the data extraction process. Tools such as Octoparse, ParseHub, and Import.io offer user-friendly interfaces that allow users, even those without extensive coding knowledge, to perform web scraping tasks. These platforms often provide pre-built templates for popular websites, making it easier to get started with minimal setup.

Additionally, cloud-based web scraping tools can enhance efficiency by running processes on remote servers. This capability allows for faster data collection without straining local resources. Furthermore, many of these tools come with built-in scheduling features that enable users to automate scraping tasks, ensuring that data is gathered at regular intervals without manual intervention.

How to Scrape Websites: Step-by-Step Guide

To successfully scrape a website, it’s crucial to follow a structured approach. Start by identifying the specific data you wish to collect, which could range from product details to user comments. Next, use tools like browser developer tools to inspect the structure of the webpage, noting the elements containing the desired data. This information will guide the selection of the appropriate scraping technique.

Once you have the data structure mapped out, you can begin coding your scraping script using a language like Python. Libraries such as Requests can be used to fetch webpage content, while Beautiful Soup will help parse the HTML and extract the relevant data. Always test your script on a smaller scale before scaling up to avoid errors and ensure compliance with the website’s terms.
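The steps above can be sketched as two small functions: one that fetches a page and one that extracts the data. To stay dependency-free, this sketch substitutes `urllib.request` for Requests and `html.parser` for Beautiful Soup. The user-agent string, the choice of `<h2>` elements as the target, and all function names are assumptions. Testing the parsing step on a saved snippet first, as done here, is one way to "start small" before touching the live site.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch_html(url, user_agent="example-scraper/0.1", timeout=10):
    """Download a page and return its body decoded as text."""
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class HeadingParser(HTMLParser):
    """Collect the text of every <h2> element."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._inside = False  # True while the parser is inside an <h2>

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._inside = True
            self.headings.append("")

    def handle_data(self, data):
        if self._inside:
            self.headings[-1] += data

    def handle_endtag(self, tag):
        if tag == "h2":
            self._inside = False


def extract_headings(html):
    """Return the stripped text of each <h2> in the given HTML string."""
    parser = HeadingParser()
    parser.feed(html)
    return [heading.strip() for heading in parser.headings]


# Small-scale test on a local snippet before requesting any real page.
snippet = "<h1>Shop</h1><h2> Widget </h2><p>text</p><h2>Gadget</h2>"
headings = extract_headings(snippet)
```

Once the parser behaves as expected on the snippet, `extract_headings(fetch_html(url))` would apply the same logic to a live page, subject to the site's terms.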

Legal Considerations in Web Scraping

Legal considerations play a crucial role in web scraping, as different web domains may have varying rules regarding automated data collection. It’s essential to read and understand a website’s terms of use and robots.txt file, which provides guidelines on what is permissible for bots. Violating these rules can lead to legal consequences and possible bans from the website.

In some cases, engaging in scraping activities without permission could also violate copyright laws, particularly when extracting large volumes of proprietary content. Therefore, it is advisable to seek permission from the website owner for extensive data extraction projects or to utilize publicly available APIs where possible. Proactive legal awareness can mitigate risks while scraping effectively.

Challenges and Solutions in Data Scraping

Web scraping is not without its challenges, which can range from technical difficulties to website restrictions. One common challenge is dealing with CAPTCHAs, which are designed to prevent automated access. Solutions include using CAPTCHA-solving services or employing headless browsers that can mimic human interactions more accurately.

Another significant hurdle is dynamic content, wherein information is loaded asynchronously. To overcome this, using tools such as Selenium or Puppeteer allows scrapers to wait for the content to load fully before attempting to extract data. Familiarizing oneself with these challenges, along with their solutions, will enhance a scraper’s effectiveness and adaptability.
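The waiting behavior described here boils down to polling until a condition holds or a timeout expires. The sketch below illustrates that idea in plain Python without a browser; Selenium provides it through its `WebDriverWait` helper, and Puppeteer has comparable wait functions. `wait_until` and `FakePage` are hypothetical names, and `FakePage` merely simulates content that appears after a few polls.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.5):
    """Call condition() until it returns a truthy value or the timeout elapses."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


class FakePage:
    """Stand-in for a browser page whose content appears after a few polls."""

    def __init__(self, ready_after):
        self.polls = 0
        self.ready_after = ready_after

    def find_results(self):
        self.polls += 1
        if self.polls >= self.ready_after:
            return ["row 1", "row 2"]
        return []  # content has not loaded yet


page = FakePage(ready_after=3)
rows = wait_until(page.find_results, timeout=2.0, interval=0.01)
```

Polling with a deadline is generally preferable to a fixed `sleep`, since it returns as soon as the content is available and fails loudly when it never arrives.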

Integrating Web Scraping with Data Analytics

Integrating web scraping with data analytics can provide profound insights for businesses and researchers. After successfully extracting data, the next step involves organizing and analyzing it to derive meaningful conclusions. This integration can be enhanced by using data visualization tools such as Tableau or Power BI, which can help present the data in a more digestible format.

Furthermore, leveraging machine learning models can enable predictive analytics based on the scraped data. For instance, analyzing customer feedback or product pricing trends can inform business strategies and decision-making processes. The synergy between web scraping and data analytics opens up new avenues for intelligence gathering and operational optimization.
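As a small, hedged example of the analysis step, the sketch below summarizes pricing trends from scraped observations by computing each product's average price and its percentage change from the first to the last observation. The data, the tuple layout, and the `price_summary` function are invented for illustration. A real project would more likely load the data into a tool such as pandas before visualizing or modeling it.

```python
from statistics import mean

# Hypothetical scraped observations: (ISO date, product, price).
observations = [
    ("2024-01-01", "Widget", 10.00),
    ("2024-01-08", "Widget", 11.00),
    ("2024-01-15", "Widget", 12.00),
    ("2024-01-01", "Gadget", 20.00),
    ("2024-01-15", "Gadget", 18.00),
]


def price_summary(rows):
    """Return the mean price and first-to-last percentage change per product."""
    by_product = {}
    # ISO dates sort chronologically as strings, so sorting orders each series.
    for _date, product, price in sorted(rows):
        by_product.setdefault(product, []).append(price)
    summary = {}
    for product, prices in by_product.items():
        change = (prices[-1] - prices[0]) / prices[0] * 100
        summary[product] = {
            "mean": mean(prices),
            "change_pct": round(change, 1),
        }
    return summary


summary = price_summary(observations)
```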

Future Trends in Web Scraping

The future of web scraping is poised for significant changes as technology evolves. The increased use of Artificial Intelligence (AI) and machine learning in developing web scraping tools will likely enhance their efficiency and accuracy. New algorithms may emerge to navigate complexities such as JavaScript-heavy websites and real-time data extraction.

Additionally, as more businesses recognize the importance of data-driven decisions, the demand for web scraping services will likely rise. This trend will contribute to the development of more user-friendly tools that cater to non-technical users. Staying abreast of these trends will be essential for anyone looking to leverage web scraping for competitive advantages and enhanced data collection needs.

Frequently Asked Questions

What are the best practices for web scraping techniques?

When using web scraping techniques, it’s essential to follow best practices such as respecting website terms of service, avoiding excessive requests to prevent being blocked, and implementing proper error handling. Additionally, sending an appropriate User-Agent header and setting delays between requests help keep the load on the target site low.

How to scrape websites effectively using various data scraping methods?

To scrape websites effectively, begin by selecting an appropriate data scraping method such as HTML parsing, using APIs, or browser automation tools. Choose a web scraping tool like Beautiful Soup for Python or Scrapy, which allows you to easily extract data from websites by navigating the HTML structure.

What web scraping tools are recommended for beginners?

For beginners in web scraping, tools like Octoparse, ParseHub, and Import.io are highly recommended due to their user-friendly interfaces and powerful scraping capabilities. These web scraping tools provide visual interfaces to help you extract data from websites without needing extensive coding knowledge.

Can I use web scraping techniques to extract data from websites legally?

In many cases, yes, provided you comply with the website’s robots.txt and terms of service. Always check the site’s scraping policies and avoid collecting sensitive or copyrighted data. Legal boundaries vary by jurisdiction, so it’s crucial to perform due diligence.

What are some common challenges in extracting data from websites?

Common challenges in extracting data from websites include dealing with dynamic content loaded via JavaScript, anti-scraping measures like CAPTCHAs, and fluctuating HTML structures. Developers often need to employ more sophisticated web scraping techniques, like headless browsing and advanced parsing methods to overcome these issues.

How can I optimize my web scraping techniques for better performance?

To optimize web scraping techniques, focus on minimizing requests and data processing times. Utilize multi-threading or asynchronous requests to speed up the data extraction process. Implement caching mechanisms for commonly accessed pages, and analyze the data structures to streamline your scraping logic.
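A minimal sketch of two of these optimizations, concurrent requests and caching, is shown below using the standard library's `ThreadPoolExecutor`. The `CachingFetcher` class and `fetch_all` function are illustrative assumptions, and `fetch` stands for whatever download function you already use. The worker count is deliberately small, since concurrency should not override the rate limits a site expects.

```python
from concurrent.futures import ThreadPoolExecutor


class CachingFetcher:
    """Wrap a fetch function so each URL is downloaded at most once per run."""

    def __init__(self, fetch):
        self._fetch = fetch
        self._cache = {}

    def get(self, url):
        if url not in self._cache:
            self._cache[url] = self._fetch(url)
        return self._cache[url]


def fetch_all(urls, fetcher, max_workers=4):
    """Fetch distinct URLs concurrently and return a {url: page} mapping."""
    unique = list(dict.fromkeys(urls))  # drop duplicates while keeping order
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        pages = list(pool.map(fetcher.get, unique))
    return dict(zip(unique, pages))


# Demonstration with a stub instead of real network access.
calls = []


def fake_fetch(url):
    calls.append(url)
    return f"<html>{url}</html>"


fetcher = CachingFetcher(fake_fetch)
urls = ["https://example.com/a", "https://example.com/b", "https://example.com/a"]
first = fetch_all(urls, fetcher)
second = fetch_all(urls, fetcher)  # served entirely from the cache
```

Because duplicates are removed before the work is submitted, no URL is fetched twice within a single call, and repeated calls reuse the cached pages.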

What programming languages are best for scraping websites?

Python is among the most popular languages for scraping websites thanks to libraries like Beautiful Soup and Scrapy, and JavaScript is a common choice for browser automation with tools such as Puppeteer. Other languages like Ruby, PHP, and Java can also be effective depending on your specific web scraping needs and project requirements.

How to handle CAPTCHA when scraping websites?

Handling CAPTCHA effectively while scraping websites often requires using automated CAPTCHA solving services or employing human-based solutions depending on the complexity of the CAPTCHA. In some cases, using browsers with automation tools can help bypass CAPTCHA challenges by mimicking user behavior.

| Key Point | Description |
|---|---|
| AI Limitations | The AI cannot access the internet or scrape live data directly. |
| Web Scraping Guidance | The AI can provide instructions or code snippets for web scraping tasks. |
| User Input Required | Specific details about the information needed are required for effective guidance. |

Summary

Web scraping techniques are essential for gathering data from websites efficiently. AI models without internet access cannot scrape live data themselves, but they can still help by providing guidance and code snippets for implementing a scraper. By using appropriate libraries and understanding website structures, you can extract valuable information effectively. For specific code examples or methods, provide the details of what you wish to scrape.