Web Scraping Techniques: Master Data Extraction Methods

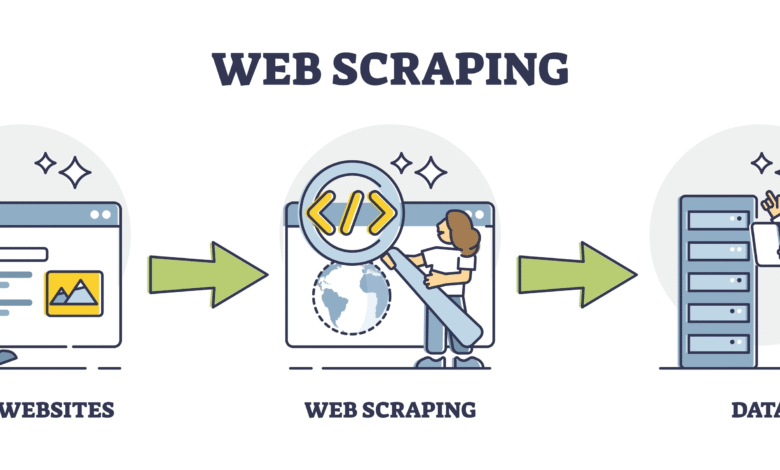

Web scraping techniques have changed how we gather information from the internet. With the right data extraction methods, individuals and businesses can automate web data collection and streamline their research. A comprehensive web scraping tutorial can teach you how to scrape web pages efficiently and extract useful insights from large online sources. Methods range from point-and-click tools to code libraries, so there is an option for most needs and skill levels. Whether you’re gathering information for market analysis or academic research, learning these techniques will help you collect data and make informed decisions.

Scraping methods play a central role in online data gathering. Techniques for harvesting web content, also called web harvesting or web data extraction, turn pages into usable data; data mining is the related but separate step of analyzing that data once it has been collected. Automating collection makes the process faster and, because it removes manual copying, less error-prone. This article covers the main data retrieval approaches, the tools behind them, and guidance on collecting web content responsibly, for researchers, marketers, and analysts alike.

Understanding Web Scraping Techniques

Web scraping techniques extract data from websites by automating retrieval. Developers and data analysts use them to collect large volumes of information from many web pages. By parsing HTML and traversing the resulting DOM tree, a scraper turns scattered page content into structured formats suitable for analysis, such as rows in a table or records in a database.

Commonly used tools include Beautiful Soup, a Python library that simplifies navigating and extracting information from HTML documents, and Selenium, which automates a real browser. Together they cover data ranging from static text to content that appears only after user interaction, which makes them useful for comprehensive data extraction projects.
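To make HTML parsing concrete, here is a minimal sketch that turns a small page into structured records. It deliberately uses Python's built-in `html.parser` module as a dependency-free stand-in for Beautiful Soup, and the sample markup, the `LinkExtractor` class, and the `extract_links` helper are hypothetical names invented for this illustration.

```python
from html.parser import HTMLParser

# Hypothetical sample page; a real scraper would download this HTML first.
SAMPLE_HTML = """
<html><body>
  <h1>Product list</h1>
  <ul>
    <li class="item"><a href="/widgets/1">Blue widget</a></li>
    <li class="item"><a href="/widgets/2">Red widget</a></li>
  </ul>
</body></html>
"""


class LinkExtractor(HTMLParser):
    """Collects the href and visible text of every anchor tag."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._current_href = None
        self._text_parts = []

    def handle_starttag(self, tag, attrs):
        # Start buffering text when an <a> tag opens.
        if tag == "a":
            self._current_href = dict(attrs).get("href")
            self._text_parts = []

    def handle_data(self, data):
        # Only keep text that appears inside an anchor with an href.
        if self._current_href is not None:
            self._text_parts.append(data)

    def handle_endtag(self, tag):
        # Emit one structured record per closed anchor.
        if tag == "a" and self._current_href is not None:
            text = "".join(self._text_parts).strip()
            self.links.append({"href": self._current_href, "text": text})
            self._current_href = None


def extract_links(html):
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links


links = extract_links(SAMPLE_HTML)
for link in links:
    print(link["href"], link["text"])
```

A library such as Beautiful Soup expresses the same idea in fewer lines, but the flow is the same: parse the markup, locate the elements of interest, and emit structured records.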

Effective Data Extraction Techniques

Effective data extraction techniques go beyond simple scraping; they require strategic planning and execution to ensure that the data collected is relevant and useful. For instance, understanding the structure of a web page and the specific elements that contain the desired data can drastically improve the efficiency of the scraping process. Advanced techniques may also involve the use of APIs when available, allowing for more structured and reliable data retrieval.

Additionally, a good web scraping tutorial teaches these data extraction techniques alongside responsible use. That includes understanding the legal implications of scraping, such as a site’s terms of service and copyright law, and honoring the crawling rules published in the site’s robots.txt file. A well-structured tutorial walks through the process step by step so that readers can collect data effectively while following best practices.

Automating Web Data Collection

Automating web data collection is a game-changer for businesses and researchers alike. With the ability to programmatically gather information from various sources, organizations can maintain up-to-date databases that inform decision-making processes. Tools such as Scrapy allow users to create spiders that can crawl multiple pages simultaneously, saving considerable time and effort compared to manual data collection.

Furthermore, automation not only increases efficiency but also reduces the human error inherent in manual data entry. By using these web scraping techniques, teams can focus on analyzing the collected data rather than spending resources on the collection process itself. As automation continues to evolve, it is finding applications across industries, in fields like market research, competitive analysis, and real-time data monitoring.

Web Scraping Tutorial for Beginners

A web scraping tutorial for beginners is a valuable resource that demystifies the process of extracting data from websites. Such tutorials typically start with the basics, explaining the foundational concepts of web technologies, such as HTML and CSS, and how they relate to data scraping. It is crucial for beginners to understand how web pages are structured to effectively navigate and pull data.

Furthermore, a comprehensive tutorial will often introduce various libraries and tools, such as Python’s Requests and BeautifulSoup, providing hands-on examples that learners can follow. These practical exercises not only build confidence but also encourage users to engage with the scraping process actively, ultimately leading to a deeper understanding of data extraction techniques and their applications.

Common Challenges in Web Scraping

Despite its advantages, web scraping presents several challenges that practitioners must navigate. One common issue is websites that employ anti-scraping measures such as CAPTCHAs, or that load content dynamically with JavaScript. Browser automation tools like Selenium help with dynamic content because they run the page’s JavaScript and can simulate user interactions, such as clicks and scrolling, before the data is read. A CAPTCHA, by contrast, is not something Selenium solves on its own.

Another challenge involves ensuring data accuracy and consistency, especially when scraping multiple pages or websites. Data may be presented in different formats, requiring further processing and cleaning before it can be analyzed. To address these issues, it’s essential to refine one’s data extraction techniques, incorporating best practices such as establishing data validation checks to ensure the reliability of the collected information.
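The sketch below illustrates one way to apply validation checks to scraped records before analysis. The field names, the sample records, and the helpers `parse_price` and `clean_records` are hypothetical, and the price parser assumes US-style formatting (comma as thousands separator, period as decimal point).

```python
import re


def parse_price(raw):
    """Convert strings such as '$1,299.00' or '1299 USD' to a float, or None."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    if match is None:
        return None
    # Assumption: commas are thousands separators, so they can be dropped.
    return float(match.group().replace(",", ""))


def clean_records(records):
    """Split records into validated rows and rejected originals."""
    valid, rejected = [], []
    for record in records:
        name = (record.get("name") or "").strip()
        price = parse_price(record.get("price") or "")
        # Validation rules: a non-empty name and a positive numeric price.
        if name and price is not None and price > 0:
            valid.append({"name": name, "price": price})
        else:
            rejected.append(record)
    return valid, rejected


# Hypothetical records as they might arrive from two differently formatted sites.
raw_records = [
    {"name": " Blue widget ", "price": "$1,299.00"},
    {"name": "Red widget", "price": "1299 USD"},
    {"name": "", "price": "$5.00"},
    {"name": "Green widget", "price": "call for price"},
]

valid, rejected = clean_records(raw_records)
print(len(valid), "valid,", len(rejected), "rejected")
```

Keeping the rejected records, rather than silently dropping them, makes it easier to notice when a site changes its layout and the scraper starts returning malformed data.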

Best Practices for Responsible Web Scraping

Best practices for responsible web scraping are paramount to ensure compliance with legal and ethical standards. Before commencing a scraping project, it’s advisable to review the target website’s terms of service and robots.txt file to determine allowable actions. This practice not only prevents potential legal repercussions but also fosters a respectful relationship with website owners, who may provide APIs or other access methods for data retrieval.
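As an illustration of the robots.txt check described above, this sketch uses Python's standard `urllib.robotparser` module. The robots.txt content, the `example-bot` user agent, and the example.com URLs are made up for the example; a real script would typically load the file from the target site with `set_url()` and `read()` instead of parsing a string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example needs no network access.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# Ask whether our (hypothetical) user agent may fetch each URL.
allowed_public = parser.can_fetch("example-bot", "https://example.com/products/")
allowed_private = parser.can_fetch("example-bot", "https://example.com/private/data")

# Crawl-delay is a non-standard but widely used directive; None if absent.
delay = parser.crawl_delay("example-bot")

print(allowed_public, allowed_private, delay)
```

Running this check once per site, before any page requests, keeps the allow/disallow decision in one place instead of scattering it through the scraper.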

It’s also essential to implement polite scraping techniques, such as adhering to rate limits and timed intervals between requests, to minimize the impact on website performance. By adopting these best practices, web scrapers can enjoy the benefits of data extraction while maintaining integrity and sustainability within the digital ecosystem.
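One simple way to space out requests is to enforce a minimum interval between them. The following is a minimal sketch, not a full rate limiter: `fetch_politely` and `fake_fetch` are hypothetical names, and the download function is passed in as a parameter so the timing logic can be shown without real network calls.

```python
import time


def fetch_politely(urls, fetch, min_interval=1.0,
                   sleep=time.sleep, clock=time.monotonic):
    """Call fetch(url) for each URL, waiting at least min_interval seconds
    between the start of consecutive requests."""
    results = []
    last_request = None
    for url in urls:
        if last_request is not None:
            # Sleep only for the part of the interval that has not yet elapsed.
            wait = min_interval - (clock() - last_request)
            if wait > 0:
                sleep(wait)
        last_request = clock()
        results.append(fetch(url))
    return results


def fake_fetch(url):
    # Stand-in for a real HTTP request (assumption for this illustration).
    return f"<html>content of {url}</html>"


pages = fetch_politely(
    ["https://example.com/a", "https://example.com/b"],
    fetch=fake_fetch,
    min_interval=0.01,  # tiny interval so the demo runs quickly
)
print(len(pages), "pages fetched")
```

In practice the interval would come from the site's published rate limits or its Crawl-delay directive where one exists, and a value of a second or more is a common conservative default.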

Leveraging APIs for Data Extraction

Leveraging APIs for data extraction offers a more structured and reliable approach than traditional web scraping techniques. Many websites provide APIs that allow users to query and retrieve data in a format such as JSON or XML, which is easy to parse and use. This can significantly streamline the data extraction process and reduce the need for data cleaning and manipulation.
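To show why structured responses are easier to work with than raw HTML, here is a minimal sketch that parses a JSON payload with Python's standard `json` module. The response body and its field names (`items`, `id`, `name`, `price`, `next_page`) are invented for the example; a real API defines its own schema in its documentation.

```python
import json

# Hypothetical API response body; a real one would come from an HTTP request.
SAMPLE_RESPONSE = """
{
  "items": [
    {"id": 1, "name": "Blue widget", "price": 12.5},
    {"id": 2, "name": "Red widget", "price": 9.99}
  ],
  "next_page": null
}
"""


def parse_items(body):
    """Turn the JSON body into a list of (id, name, price) tuples."""
    payload = json.loads(body)
    return [
        (item["id"], item["name"], item["price"])
        for item in payload.get("items", [])
    ]


rows = parse_items(SAMPLE_RESPONSE)
for row in rows:
    print(row)
```

Because the fields arrive already typed and labeled, there is no markup to navigate and far less cleanup to do than with scraped HTML.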

Moreover, using APIs often comes with the added benefit of documentation and support, making it easier for developers to understand how to interact with the data. Diving into API usage not only broadens the scope of available data sources but also enhances the capability to access real-time data updates, proving advantageous for applications in analytics and market research.

Choosing the Right Web Scraping Tools

Choosing the right web scraping tools is crucial for achieving efficient and effective data extraction. Various tools are available, each with unique features catering to different scraping needs. For instance, tools like ParseHub and WebHarvy offer visual interfaces, making them user-friendly for those less technically inclined, while more advanced users might prefer code-based solutions like Scrapy or BeautifulSoup.

Additionally, considerations such as scalability, support for handling dynamic web content, and integration capabilities with other data processing tools should influence the selection process. By carefully evaluating these factors, users can select the most suitable web scraping tools that align with their specific project requirements and technical expertise.

Future Trends in Web Scraping

The future of web scraping is set to evolve rapidly with advancements in artificial intelligence and machine learning. These technologies will enable more sophisticated data extraction techniques that can adapt to the changing structures of websites and employ natural language processing to derive insights from unstructured data. This evolution will likely enhance the accuracy and efficiency of data scraping processes, leading to more actionable insights for businesses.

Moreover, as ethical considerations surrounding data privacy continue to gain attention, the future of web scraping will also focus on compliance and responsible practices. Developers will need to integrate ethical guidelines and build tools that respect user privacy while maximizing data utility. The ongoing dialogue around data rights will shape the landscape of web scraping, ushering in a new era of more responsible data practices.

Frequently Asked Questions

What are the best web scraping techniques for beginners?

For beginners, the best web scraping techniques include using libraries like Beautiful Soup with Python, which simplifies data extraction from HTML and XML documents. Another popular method is using web scraping frameworks such as Scrapy, which allows for efficient data collection and automation. Learning how to scrape web pages with these tools can provide a solid foundation in data extraction techniques.

How can I automate web data extraction effectively?

To automate web data extraction effectively, consider using tools like Selenium, which simulates browser actions, allowing you to scrape dynamic web pages. Another option is to use APIs provided by websites, which can streamline data collection without traditional web scraping methods. Understanding web scraping methods and automation can greatly enhance your data workflows.

What does a web scraping tutorial for advanced users cover?

An advanced web scraping tutorial often includes topics such as handling web scraping challenges like CAPTCHAs, using proxies to avoid IP bans, and managing pagination for extensive data collections. Techniques like headless browsing with Puppeteer or Selenium are also covered, allowing experienced users to scrape content efficiently and ethically.

What are common data extraction techniques used in web scraping?

Common data extraction techniques in web scraping include HTML parsing with libraries like lxml or Beautiful Soup, XPath for selecting nodes in XML and HTML documents, and regular expressions for pattern matching. Each technique has its strengths: parsers and XPath follow the document’s structure, while regular expressions suit short, predictable text patterns. Understanding them can improve your overall web scraping methods and capabilities.
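The sketch below contrasts two of these techniques on the same small document. It uses the standard library's `xml.etree.ElementTree`, which supports only a limited subset of XPath (lxml offers full XPath 1.0), together with the `re` module. The catalog markup and its element names are hypothetical.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical catalog document used only for illustration.
SAMPLE_XML = """
<catalog>
  <product sku="A-100"><name>Blue widget</name><price>USD 12.50</price></product>
  <product sku="B-200"><name>Red widget</name><price>USD 9.99</price></product>
</catalog>
"""

root = ET.fromstring(SAMPLE_XML)

# XPath-style path: every <name> element that sits inside a <product>.
names = [element.text for element in root.findall("./product/name")]

# XPath-style predicate: the product whose sku attribute equals 'B-200'.
red_name = root.find("./product[@sku='B-200']/name").text

# Regular expression: pull the numeric part out of every 'USD 12.50' string.
prices = [float(value) for value in re.findall(r"USD (\d+\.\d{2})", SAMPLE_XML)]

print(names, red_name, prices)
```

The path expressions depend on the document's structure, while the regular expression depends only on the text pattern, which is why the two are often combined: structure to find the right element, a pattern to clean its text.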

How do I manage data from multiple web pages when scraping?

Managing data from multiple web pages while scraping can be handled by implementing pagination support within your scraping script, which allows you to extract data across various pages systematically. Additionally, utilizing data storage solutions like CSV files, databases, or cloud services can help consolidate the data collected using web scraping techniques.
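A pagination loop combined with CSV output might look like the following sketch. The `FAKE_PAGES` dictionary stands in for a paginated website, and `fetch_page`, `scrape_all_pages`, and `to_csv` are hypothetical helpers; in a real scraper `fetch_page` would download and parse each page and discover the link to the next one.

```python
import csv
import io

# Hypothetical stand-in for a paginated site: page number -> (rows, next page).
FAKE_PAGES = {
    1: ([{"name": "Blue widget", "price": "12.50"}], 2),
    2: ([{"name": "Red widget", "price": "9.99"}], 3),
    3: ([{"name": "Green widget", "price": "7.25"}], None),
}


def fetch_page(page_number):
    """Return the rows on a page and the number of the next page (or None)."""
    return FAKE_PAGES[page_number]


def scrape_all_pages(start=1, max_pages=100):
    """Follow next-page links until they run out or max_pages is reached."""
    rows = []
    page = start
    visited = 0
    while page is not None and visited < max_pages:
        page_rows, page = fetch_page(page)
        rows.extend(page_rows)
        visited += 1
    return rows


def to_csv(rows, fieldnames):
    """Serialize the collected rows to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


all_rows = scrape_all_pages()
csv_text = to_csv(all_rows, ["name", "price"])
print(csv_text)
```

The `max_pages` cap is a safety net against pagination loops, for example a site whose last page links back to the first. Writing to a file instead of a string only requires swapping the `StringIO` buffer for an open file handle.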

What are the legal considerations to keep in mind when web scraping?

When web scraping, review the website’s terms of service and honor its robots.txt file. Avoid scraping sites that explicitly prohibit it, and respect copyright laws. The robots.txt file is a crawling convention rather than a law, but following it alongside the terms of service is part of conducting data extraction ethically and responsibly.

Can I scrape websites that require login credentials?

Yes, you can scrape websites that require login credentials by using session management in your scraping script. Libraries such as Requests in Python can maintain a session (its `Session` object keeps cookies across requests), allowing you to log in once and then scrape the data you need while respecting the site’s rules on data access.

What tools are available for web scraping automation?

Several tools are available for web scraping automation, including open-source libraries like Scrapy and Beautiful Soup, and browser automation frameworks like Selenium. These tools enable the automatic collection of data from websites, streamlining the entire web data extraction process.

| Key Points |
|---|
| Web scraping techniques allow users to extract data from websites automatically, often utilizing tools and scripts. |
| Common tools for web scraping include Beautiful Soup, Scrapy, and Selenium, which automate data extraction processes. |
| Web scraping can be used for various purposes such as data analysis, price comparison, and content aggregation. |
| It’s important to respect the website’s terms of service and robots.txt files to avoid legal issues while scraping. |

Summary

Web scraping techniques are essential for extracting information efficiently from various online sources. These methods allow businesses and individuals to gather valuable data for analysis and research purposes. Understanding and implementing effective web scraping techniques can enhance productivity and unlock a wealth of information from the web.