Web Scraping Techniques: Learn Python & Beautiful Soup

Web scraping techniques are indispensable tools for developers and data scientists looking to extract valuable insights from the vast amounts of data available online. Utilizing Python web scraping libraries, such as the popular Beautiful Soup, facilitates the process of parsing HTML and retrieving crucial information from web pages. Through effective web data extraction methods, one can automate the gathering of data for research, analytics, or competitive intelligence. By mastering these techniques, including the use of the Python requests library to fetch webpage content, you can efficiently scrape websites to build robust datasets. Whether you’re a beginner or an experienced coder, understanding these strategies will elevate your ability to retrieve and manipulate online information seamlessly.

Collecting data from the internet involves a range of methods and tools, from simple automated browsing to systematic, large-scale data harvesting. Libraries like Beautiful Soup provide a user-friendly interface for parsing HTML and XML documents, while the Python requests library handles communication with web servers. With the increasing demand for information analysis, these data acquisition skills have become valuable for anyone in tech or research-driven fields.

Understanding the Basics of Web Scraping

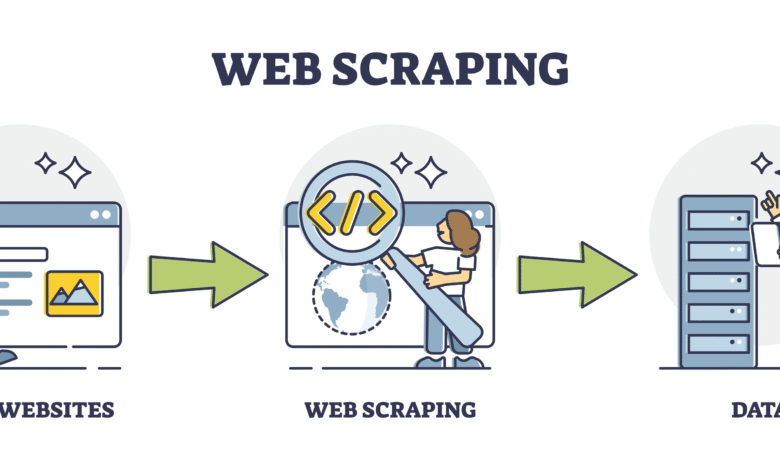

Web scraping is the automated process of extracting information from websites. It’s a powerful tool that allows you to gather data from the internet efficiently, especially when analyzing large sets of information. Many developers choose Python for their web scraping tasks because of its flexibility, ease of use, and mature libraries. Understanding the basics of web scraping is essential to effectively harness its potential.

The process typically starts with sending a request to a website, fetching its HTML content using Python’s requests library. Once the response is obtained, the next step involves parsing the HTML content. This is where libraries like Beautiful Soup come into play, providing powerful tools for navigating and manipulating the parse tree of the HTML.
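The two steps above can be sketched as follows. This is a minimal illustration rather than a definitive implementation: `fetch_html` and `extract_title` are hypothetical helper names, and the sample HTML string stands in for a real page so the parsing step can be tried without a network call.

```python
from typing import Optional

import requests
from bs4 import BeautifulSoup


def fetch_html(url: str) -> str:
    """Fetch a page and return its HTML text (performs a real network call)."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # raise on 4xx/5xx instead of parsing an error page
    return response.text


def extract_title(html: str) -> Optional[str]:
    """Parse HTML and return the text of the <title> tag, or None if absent."""
    soup = BeautifulSoup(html, "html.parser")
    return soup.title.get_text(strip=True) if soup.title else None


# Sample markup standing in for a fetched page (an assumption for illustration).
SAMPLE_HTML = "<html><head><title>Example Page</title></head><body></body></html>"
page_title = extract_title(SAMPLE_HTML)
```

Against a live site, the same parsing function would receive the result of `fetch_html(url)` instead of the sample string.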

Employing Python Requests Library for Efficient Data Retrieval

The Python requests library is integral for web scraping, providing a simple way to make HTTP requests. When scraping websites, the first step is usually to import the requests module and initiate a request to the desired URL. The simplicity of this library makes it a favorite among developers. For instance, one can easily handle various request types, such as GET and POST, increasing the versatility of data retrieval.

Using the requests library, you can customize your requests by adding headers, managing sessions, and handling parameters efficiently. This functionality is crucial for bypassing some of the obstacles presented by websites that implement security measures against web scraping. By mimicking browser behavior, you enhance the chances of successfully extracting the required data without being flagged.
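As a rough sketch of that customization, the snippet below sets a header on a session and attaches query parameters to a request. The URL, the parameter names, and the User-Agent string are all made-up values. The request is prepared but not sent, so you can inspect what would go over the wire.

```python
import requests

# All values below are illustrative assumptions, not taken from a real site.
BASE_URL = "https://example.com/search"
HEADERS = {"User-Agent": "my-research-bot/0.1 (contact@example.com)"}

session = requests.Session()
session.headers.update(HEADERS)  # sent with every request made through this session

# Build the request without sending it, to inspect the final URL and headers.
request = requests.Request("GET", BASE_URL, params={"q": "python", "page": 2})
prepared = session.prepare_request(request)

final_url = prepared.url  # the query string is encoded for you
sent_user_agent = prepared.headers["User-Agent"]
```

Calling `session.send(prepared, timeout=10)` would actually send the request. A session also keeps cookies between calls, which helps when a site expects state to carry over from one page to the next.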

Utilizing Beautiful Soup for HTML Parsing

Beautiful Soup is a widely used Python library specifically designed for parsing HTML and XML documents. After obtaining the HTML content from a web page using the requests library, Beautiful Soup allows you to sift through the data easily. The library provides various methods to search for tags and navigate the parse tree, enabling efficient data extraction.

Once you have parsed the HTML, Beautiful Soup allows you to navigate through it using tag names, classes, or IDs. For example, if you are interested in extracting article titles from a web page, you can locate the corresponding h2 tags, making data collection streamlined and efficient. This makes Beautiful Soup an indispensable tool for anyone serious about web data extraction.
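The lookups described above might look like this in practice. The markup, class names, and ID are invented for illustration, so a real page will need different selectors.

```python
from bs4 import BeautifulSoup

# Made-up markup for illustration; real pages will use different tags and classes.
SAMPLE_HTML = """
<div id="main">
  <h2 class="post-title">First article</h2>
  <h2 class="post-title">Second article</h2>
  <h2 class="sidebar-title">Related links</h2>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# By tag name: every <h2> in the document.
all_headings = [h2.get_text(strip=True) for h2 in soup.find_all("h2")]

# By tag name and class: only the article titles.
article_titles = [
    h2.get_text(strip=True) for h2 in soup.find_all("h2", class_="post-title")
]

# By ID: a single container element (None if it is missing).
main_section = soup.find(id="main")
```

Note the trailing underscore in `class_`: `class` is a reserved word in Python, so Beautiful Soup uses `class_` as the keyword argument.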

Key Techniques for Scraping Websites Responsibly

Web scraping must be done responsibly to avoid legal issues and ensure ethical use of data. Before initiating a scraping project, it’s vital to check the target website’s robots.txt file. This file tells automated clients which paths they may and may not crawl, and some sites also use it to request a minimum delay between requests. It is a convention rather than a legal grant of permission, so review the site’s terms of service as well. Ignoring these guidelines can result in IP bans or legal complications.
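Python’s standard library can perform the robots.txt check for you. The sketch below parses a small set of rules invented for this example, so it runs offline. The crawler name `my-research-bot` and the URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Example rules, invented for illustration. A real file lives at
# https://<site>/robots.txt and can be loaded with set_url() followed by read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

USER_AGENT = "my-research-bot"  # hypothetical crawler name

can_read_articles = parser.can_fetch(USER_AGENT, "https://example.com/articles/1")
can_read_private = parser.can_fetch(USER_AGENT, "https://example.com/private/data")
suggested_delay = parser.crawl_delay(USER_AGENT)  # None if the file sets no delay
```

With these sample rules, the article URL is allowed, the `/private/` URL is not, and the suggested delay is 5 seconds.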

Another best practice is to limit the frequency of your requests. When scraping websites, sending too many requests too quickly can overwhelm the server, leading to performance issues or blacklisting. Adding delays between requests and using user-agent headers that mimic web browsers can help maintain a low profile as you extract data.
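One simple way to space out requests is to sleep between them. In this sketch, `fetch_politely` and `fake_fetch` are hypothetical names, and the stand-in fetcher replaces a real HTTP call so the example runs offline. The short delay is only there to keep the demonstration fast.

```python
import time
from typing import Callable, Dict, Iterable


def fetch_politely(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    delay_seconds: float = 2.0,
) -> Dict[str, str]:
    """Fetch each URL in turn, pausing between consecutive requests."""
    results: Dict[str, str] = {}
    for index, url in enumerate(urls):
        if index > 0:
            time.sleep(delay_seconds)  # wait before every request except the first
        results[url] = fetch(url)
    return results


# Stand-in fetcher so the sketch runs offline; swap in a real HTTP call in practice.
def fake_fetch(url: str) -> str:
    return f"<html>content of {url}</html>"


pages = fetch_politely(
    ["https://example.com/a", "https://example.com/b"],
    fetch=fake_fetch,
    delay_seconds=0.1,
)
```

A fixed delay is the simplest choice. If the site’s robots.txt requests a specific crawl delay, that value is a reasonable lower bound for `delay_seconds`.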

Best Practices for Structuring Extracted Data

After gathering the necessary data from websites, the next step is to structure it for easy analysis. A common approach is to format the extracted data into structured formats like JSON or CSV. These formats are versatile and can easily be manipulated or imported into databases for further processing.

When structuring data, it’s crucial to maintain clarity and consistency in how information is organized. For instance, if you’re collecting article titles, authors, and publication dates, maintaining a clear schema helps facilitate accurate analysis later on. Proper structuring also simplifies exporting the data for use in various applications or reports.
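To make the schema idea concrete, here is a small sketch that writes the same records as JSON and as CSV using only the standard library. The records and field names are hypothetical, and the CSV is written to an in-memory buffer rather than a file.

```python
import csv
import io
import json

# Hypothetical records; the field names mirror the schema described above.
FIELDS = ["title", "author", "published"]
articles = [
    {"title": "Intro to Scraping", "author": "A. Writer", "published": "2024-01-15"},
    {"title": "Parsing HTML", "author": "B. Author", "published": "2024-02-03"},
]

# JSON: keeps nesting and types, convenient for APIs and document stores.
json_text = json.dumps(articles, indent=2)

# CSV: one row per record, convenient for spreadsheets and bulk database loads.
csv_buffer = io.StringIO()
writer = csv.DictWriter(csv_buffer, fieldnames=FIELDS)
writer.writeheader()
writer.writerows(articles)
csv_text = csv_buffer.getvalue()
```

To write to disk instead, pass a file opened with `open(path, "w", newline="")` to `csv.DictWriter`. Storing dates in a single format such as ISO 8601 keeps later sorting and filtering predictable.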

Handling Errors and Exceptions in Web Scraping

Error handling is a significant aspect of web scraping. When you’re scraping websites, various issues may arise, such as connection errors, page not found errors, or changes in the HTML structure of the website. By incorporating try-except blocks in your code, you can gracefully handle these exceptions, ensuring that your scraper can continue to function even when an unexpected issue arises.

Additionally, implementing logging in your web scraping scripts can collect useful information on errors as they occur. This practice helps in debugging and adjusting your scraping strategy. By actively monitoring and handling potential errors, you enhance the reliability of your web scraping projects significantly.
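A minimal sketch of combining try-except blocks with logging is shown below. The helper name `safe_fetch`, the logger name, and the injectable `get` parameter are assumptions made for this example. The `get` parameter exists only so the function can be exercised without a network connection.

```python
import logging
from typing import Callable, Optional

import requests

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("scraper")  # hypothetical logger name


def safe_fetch(url: str, get: Callable = requests.get) -> Optional[str]:
    """Return the page HTML, or None if the request fails."""
    try:
        response = get(url, timeout=10)
        response.raise_for_status()  # turns 404, 500, etc. into HTTPError
        return response.text
    except requests.exceptions.HTTPError as exc:
        logger.warning("HTTP error for %s: %s", url, exc)
    except requests.exceptions.ConnectionError as exc:
        logger.warning("Connection failed for %s: %s", url, exc)
    except requests.exceptions.Timeout as exc:
        logger.warning("Timed out fetching %s: %s", url, exc)
    return None
```

A changed HTML structure usually shows up differently: `find()` returns `None` rather than raising an exception, so check for `None` before reading text or attributes from a result.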

Advanced Web Scraping Techniques

As your web scraping skills develop, it’s beneficial to explore advanced techniques to enhance your data extraction capabilities. One option is Scrapy, a framework designed for large-scale web scraping projects. It schedules and sends many requests concurrently and organizes crawling, parsing, and export into separate components, which gives you more control when managing large datasets.

Another advanced technique involves using APIs where available. Many websites offer APIs to access their data, providing a more stable and consistent method of data retrieval. Utilizing APIs can significantly reduce the complexity of scraping compared to parsing HTML, as they often return data in a structured format like JSON.
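For comparison with HTML parsing, here is a sketch of reading from a JSON API. The endpoint, the `page` parameter, and the response shape (an `articles` list of objects with a `title` key) are all hypothetical. A real API documents its own URLs and fields.

```python
from typing import Callable, List

import requests

# Hypothetical endpoint and response shape, assumed for illustration only.
API_URL = "https://api.example.com/v1/articles"


def fetch_article_titles(get: Callable = requests.get) -> List[str]:
    """Request a page of articles from the API and return their titles."""
    response = get(API_URL, params={"page": 1}, timeout=10)
    response.raise_for_status()
    payload = response.json()  # already structured: no HTML parsing needed
    return [item["title"] for item in payload["articles"]]
```

Because the response is already structured, there are no tags or classes to locate, and the code is less likely to break when the site’s page layout changes.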

Integrating Web Scraping with Data Analysis Tools

Once you’ve successfully scraped data from websites, the next logical step is to integrate your collected data with data analysis tools. Popular tools such as Pandas in Python offer powerful functionalities for data manipulation and analysis. By leveraging these tools, you can transform the scraped data into meaningful insights, allowing for richer analysis and reporting.
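As a small illustration of that hand-off, the sketch below loads a few records into a Pandas DataFrame and runs two simple queries. The records are hypothetical and stand in for scraped data.

```python
import pandas as pd

# Hypothetical scraped records, assumed for illustration.
records = [
    {"title": "Intro to Scraping", "author": "A. Writer", "published": "2024-01-15"},
    {"title": "Parsing HTML", "author": "B. Author", "published": "2024-02-03"},
    {"title": "Scaling Up", "author": "A. Writer", "published": "2024-02-20"},
]

df = pd.DataFrame(records)
df["published"] = pd.to_datetime(df["published"])  # strings -> datetime values

# Count articles per author.
articles_per_author = df.groupby("author")["title"].count()

# Keep only articles published in February.
february = df[df["published"].dt.month == 2]
```

Converting the date column up front is what makes date-based filtering like the February query possible. Left as strings, the values could only be compared as text.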

Moreover, integrating web scraping with data visualization libraries like Matplotlib or Seaborn can enhance your ability to present findings visually. By creating graphs and plots from the extracted data, you can effectively communicate patterns and trends to stakeholders, making your insights more impactful and accessible.

The Future of Web Scraping Technologies

As technology evolves, so do the methods and tools for web scraping. The future of web scraping technologies is poised to become more sophisticated with the advancement of artificial intelligence and machine learning. These technologies may enable more intelligent data extraction methods, allowing for better handling of dynamic web pages and interactive content.

Moreover, as websites continue to enhance their security measures against unauthorized data extraction, advances in web scraping may also focus on developing more sophisticated user agents and scraping techniques that comply with ethical standards. The future could provide hybrid solutions that balance efficient data collection with robust compliance and respect for user privacy.

Frequently Asked Questions

What are the best Python web scraping techniques?

Some of the best Python web scraping techniques include utilizing libraries like Beautiful Soup for parsing HTML, making HTTP requests with the Python requests library, and automating data extraction with Scrapy. These methods help in efficiently scraping websites and extracting web data.

How do I start with a Beautiful Soup tutorial for web scraping?

To start a Beautiful Soup tutorial for web scraping, you should first install the Beautiful Soup library alongside requests. Create a simple script that sends a request to your target website, retrieves the HTML content, and then uses Beautiful Soup to parse and extract the desired elements. Follow guides available online that offer step-by-step instructions.

What is web data extraction and how is it related to web scraping techniques?

Web data extraction refers to the process of retrieving specific data from web pages, closely linked to web scraping techniques. It involves automating the extraction of information using tools like the requests library for fetching pages and Beautiful Soup for parsing the HTML structure.

Can I scrape websites without permission using Python?

No, you should not scrape websites without permission. Always check the website’s robots.txt file and comply with its terms of service. Ethical web scraping practices include requesting permission and ensuring that the data you collect is used responsibly.

What is the role of the Python requests library in web scraping?

The Python requests library plays a crucial role in web scraping by enabling you to send HTTP requests to web servers and retrieve HTML content. Other HTTP clients, such as the standard library’s urllib, can do the same job, but requests is a common choice because of its simple interface.

How can I extract multiple elements from a webpage using Beautiful Soup?

To extract multiple elements from a webpage using Beautiful Soup, you can use methods like `find_all()` that allow you to specify the tag names or classes you want to target. For example, `soup.find_all('div', class_='example')` would return all div elements with the class `example` for further data extraction.

What is the importance of complying with a website’s robots.txt file in web scraping?

Complying with a website’s robots.txt file is essential in web scraping because it outlines the site’s policy on web crawlers and scraping activities. Respecting these guidelines keeps your scraping ethical and helps you avoid potential legal issues.

How do I ensure my web scraping project is scalable?

To ensure your web scraping project is scalable, consider using frameworks like Scrapy that handle multiple requests and follow best practices like efficient data storage, using asynchronous requests, and managing API calls effectively.

Are there any limitations to using web scraping techniques?

Yes, limitations to using web scraping techniques include legal restrictions, potential blocking by websites, changes in website structure that can break your scraper, and the ethical considerations regarding data usage. Always review the legality and ethics of your scraping activities.

What should I do if the HTML structure of a website changes while scraping?

If the HTML structure of a website changes while scraping, you will need to adjust your Beautiful Soup parsing logic to match the new structure. Regular maintenance of your scraper is essential to ensure it remains functional and can adapt to website updates.

| Step | Description |
|---|---|
| 1 | Send a request to the website using an HTTP library. |
| 2 | Parse the HTML content using Beautiful Soup. |
| 3 | Extract the relevant information, such as text and images. |
| 4 | Structure the extracted data into a usable format like JSON or CSV. |

Summary

Web scraping techniques involve several systematic steps to collect data from websites efficiently. It is crucial to start by sending a request to retrieve the desired webpage’s HTML content. Then, parsing this HTML using tools such as Beautiful Soup allows you to identify and extract pertinent information like text and images. After extracting the data, it’s essential to structure it appropriately, converting it into formats like JSON or CSV for future use. Always remember to adhere to the site’s terms of service and check the robots.txt file for permission. In summary, mastering these web scraping techniques not only facilitates data gathering but can also significantly enhance your data analysis and business intelligence efforts.