Web Scraping Techniques: How to Extract Data Effectively

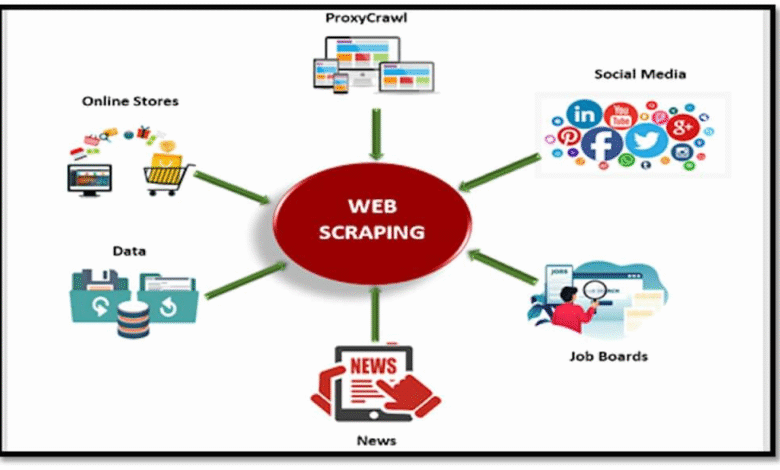

Web scraping techniques have become essential tools for gathering data from websites quickly and at scale. Mastering them opens the door to applications ranging from market research to competitive analysis. A good web scraping tutorial teaches you how to scrape websites systematically and how to apply proven data extraction methods. Automation saves time. Because a script applies the same extraction rules to every page, it also avoids many of the copy-and-paste errors that creep into manual collection. Used well, these techniques can change how businesses and individuals put online information to work.

Choosing the right methodology can significantly improve how much useful information you extract from the web. Related approaches such as content mining and web harvesting share the same goal: turning scattered online content into structured, usable data. Automating these tasks streamlines the work and makes the results easier to reproduce. Understanding these methods will equip you with skills that are increasingly valuable in a data-driven world.

Understanding Web Scraping Techniques

Web scraping is the automated extraction of data from websites. It is widely used across industries for tasks such as market research, price monitoring, and collecting data for academic research. One of the most common approaches in Python relies on tools like Beautiful Soup, a library for parsing HTML, and Scrapy, a full framework for crawling sites and extracting data. These tools let you navigate an HTML document and pull out exactly the elements you need, whether you are scraping simple lists or complex tables.

Another option is an automated web scraping service, which can handle large-scale jobs without manual intervention. Some of these services simulate human browsing behavior as they navigate a site, which can reduce how often their requests are blocked by firewalls or CAPTCHA systems. Whatever tool you use, study the target site’s structure and layout first. Knowing how the page is built lets you identify the elements you want to extract and makes the scraping process smoother.

Best Practices for Data Extraction

When extracting data, following scraping best practices is crucial for maintaining ethical standards and avoiding legal issues. Check a website’s robots.txt file to see which parts of the site its owner asks automated clients not to crawl. Respect the website’s terms of service, and avoid overwhelming the server with requests. Adding delays between requests is the most important safeguard here; some scrapers also rotate user-agent strings to reduce the chance of being blocked.
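The robots.txt check and request delays described above can be sketched with Python’s standard `urllib.robotparser` module. This is a minimal illustration, not a complete crawler: the robots.txt text, the bot name `example-research-bot`, and the helper functions are hypothetical, and the `fetch` and `sleep` arguments are injectable so the logic can be exercised without touching the network.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt body. Against a live site you would instead call
# parser.set_url("https://example.com/robots.txt") followed by parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-research-bot"  # hypothetical bot name


def make_parser(robots_txt):
    """Build a robots.txt parser from text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed_urls(parser, urls, user_agent=USER_AGENT):
    """Keep only the URLs that robots.txt does not disallow for this user agent."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


def polite_delay(parser, user_agent=USER_AGENT, default=1.0):
    """Use the site's Crawl-delay if it declares one, else a conservative default."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


def fetch_politely(urls, fetch, delay, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing `delay` seconds between requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay)  # no pause is needed before the very first request
        results.append(fetch(url))
    return results
```

With the sample file, `/private/` URLs are filtered out and the declared `Crawl-delay` of 2 seconds is used between requests. Honoring a site’s own `Crawl-delay` when present, and falling back to a fixed default otherwise, keeps the request rate predictable.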

Moreover, store your scraped data in an organized and secure way. Structured formats like JSON or CSV make the data easier to analyze and process later. Always validate and clean the data after extraction to ensure it is accurate and usable. Following these practices in your projects helps you build a sustainable scraping operation that yields high-quality data.
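As a minimal sketch of saving records in the structured formats mentioned above, the standard `json` and `csv` modules are enough. The record fields (`title`, `price`) are hypothetical, and the functions assume every record has the same keys as the first one.

```python
import csv
import io
import json


def records_to_json(records):
    """Serialize a list of dicts as pretty-printed JSON, keeping non-ASCII text readable."""
    return json.dumps(records, ensure_ascii=False, indent=2)


def records_to_csv(records):
    """Serialize a list of dicts as CSV, using the first record's keys as the header."""
    if not records:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def save_text(text, path):
    """Write serialized data to disk as UTF-8 (newline="" preserves CSV line endings)."""
    with open(path, "w", encoding="utf-8", newline="") as handle:
        handle.write(text)


# Example usage with hypothetical scraped rows:
rows = [{"title": "Widget", "price": "9.99"}, {"title": "Gadget", "price": "19.50"}]
# save_text(records_to_json(rows), "products.json")
# save_text(records_to_csv(rows), "products.csv")
```

JSON preserves nesting and types, while CSV opens directly in spreadsheet tools; note that CSV round-trips every value as a string.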

Automated Web Scraping Frameworks

Browser automation frameworks like Selenium and Puppeteer have changed the way scraping is performed. These tools drive a real browser and simulate a user’s actions, such as clicking buttons and filling in forms. This is particularly useful for websites that rely heavily on JavaScript, because the browser executes the page’s scripts and renders the content before you extract data. For beginners learning how to scrape websites, these frameworks offer a relatively approachable way to handle pages that plain HTTP requests cannot.

Integrating automated web scraping into your workflow can save substantial manual effort. The two frameworks differ in language support: Selenium provides official bindings for several languages, including Python, Java, C#, Ruby, and JavaScript, while Puppeteer is a Node.js library for controlling Chrome or Chromium. As you advance, experimenting with these frameworks will teach you more complex techniques, such as waiting for content loaded by AJAX requests and running browsers in headless mode.

How to Scrape Websites: A Step-by-Step Guide

Scraping websites effectively requires a clear plan and an understanding of the process. Start by defining your objectives: what data do you intend to extract, and why is it important? The next step is choosing tools suited to your needs. Python’s ecosystem offers powerful third-party libraries, including Requests for fetching web pages and Beautiful Soup for parsing HTML. This combination is particularly effective for beginners getting started with web scraping.
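The fetching step can be sketched with the standard library’s `urllib.request`, used here in place of Requests so the example has no dependencies. With Requests, the equivalent call is `requests.get(url, headers={"User-Agent": ...}, timeout=10)` followed by `response.text`. The user-agent string and contact address below are hypothetical placeholders.

```python
from urllib.request import Request, urlopen

# Hypothetical identifier; a descriptive User-Agent with contact details lets
# site operators reach you instead of simply blocking you.
USER_AGENT = "example-research-bot/0.1 (contact: admin@example.com)"


def build_request(url, user_agent=USER_AGENT):
    """Create a GET request that identifies the scraper via its User-Agent header."""
    return Request(url, headers={"User-Agent": user_agent})


def fetch_html(url, timeout=10.0):
    """Download a page and decode it using the charset the server declares (default UTF-8)."""
    with urlopen(build_request(url), timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


# Example usage (performs a real network request):
# html = fetch_html("https://example.com/")
```

Always setting a timeout keeps one unresponsive server from hanging the whole script.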

Once your development environment is set up, write the code to fetch and parse the required data. Use your knowledge of HTML to navigate the document structure and locate the elements you need. Select elements by tag, class, or ID to make sure you get the right content. Testing and refining your script will make your scraping results more reliable and accurate.
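Selecting elements by tag, class, or ID can be sketched with Python’s built-in `html.parser`, which stands in for Beautiful Soup here so the example runs without extra packages. In Beautiful Soup the same selections would look like `soup.find_all("span", class_="price")`, `soup.find(id="page-title")`, or `soup.select("li.product")`. The sample markup and class names are hypothetical.

```python
from html.parser import HTMLParser


class ElementTextCollector(HTMLParser):
    """Collect the text of every element matching a tag name plus an optional class or id."""

    def __init__(self, tag, css_class=None, element_id=None):
        super().__init__()
        self.tag, self.css_class, self.element_id = tag, css_class, element_id
        self.depth = 0      # > 0 while inside a matching element
        self.buffer = []    # text fragments of the element being collected
        self.results = []

    def _matches(self, tag, attrs):
        if tag != self.tag:
            return False
        attr_map = dict(attrs)
        classes = (attr_map.get("class") or "").split()
        if self.css_class is not None and self.css_class not in classes:
            return False
        if self.element_id is not None and attr_map.get("id") != self.element_id:
            return False
        return True

    def handle_starttag(self, tag, attrs):
        if self.depth:
            if tag == self.tag:
                self.depth += 1  # track nested elements with the same tag
        elif self._matches(tag, attrs):
            self.depth, self.buffer = 1, []

    def handle_endtag(self, tag):
        if self.depth and tag == self.tag:
            self.depth -= 1
            if self.depth == 0:
                # Collapse runs of whitespace into single spaces.
                self.results.append(" ".join("".join(self.buffer).split()))

    def handle_data(self, data):
        if self.depth:
            self.buffer.append(data)


def select_text(html, tag, css_class=None, element_id=None):
    collector = ElementTextCollector(tag, css_class, element_id)
    collector.feed(html)
    collector.close()
    return collector.results


SAMPLE_HTML = """
<div id="catalog">
  <h2 id="page-title">Products</h2>
  <ul>
    <li class="product"><span class="name">Widget</span> <span class="price">9.99</span></li>
    <li class="product featured"><span class="name">Gadget</span> <span class="price">19.50</span></li>
  </ul>
</div>
"""
```

For example, `select_text(SAMPLE_HTML, "span", css_class="price")` returns `["9.99", "19.50"]`. For real projects, Beautiful Soup or a CSS-selector library handles malformed HTML and complex selectors far more conveniently.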

Data Processing Post-Scraping

After successfully scraping data, the next critical phase involves processing the collected information. Data processing entails cleaning and transforming the extracted data into a usable format. This stage may involve removing duplicates, handling missing values, or converting data types to ensure consistency. Utilizing libraries like Pandas in Python can streamline this process, allowing you to manipulate and analyze the data efficiently.
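The cleaning steps named above (trimming, dropping rows with missing values, converting types, removing duplicates) can be sketched in plain Python. In Pandas the rough equivalents are `df.dropna(subset=[...])`, `pd.to_numeric(df["price"], errors="coerce")`, and `df.drop_duplicates()`. The field names `name` and `price` are hypothetical.

```python
def clean_records(raw_records, required=("name", "price"), numeric=("price",)):
    """Return trimmed, type-converted, de-duplicated records; drop incomplete or invalid rows."""
    seen = set()
    cleaned = []
    for record in raw_records:
        # Strip surrounding whitespace from every string field.
        record = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
        # Drop rows where a required field is missing or empty.
        if any(not record.get(field) for field in required):
            continue
        # Convert numeric fields, removing thousands separators; skip unparseable rows.
        try:
            for field in numeric:
                record[field] = float(str(record[field]).replace(",", ""))
        except ValueError:
            continue
        # Remove duplicates that only become identical after normalization.
        key = tuple(sorted(record.items()))
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(record)
    return cleaned


raw = [
    {"name": " Widget ", "price": "9.99"},
    {"name": "Widget", "price": "9.99 "},   # duplicate once whitespace is stripped
    {"name": "Gadget", "price": "1,250.00"},
    {"name": "", "price": "5"},             # missing name
    {"name": "Broken", "price": "n/a"},     # price is not numeric
]
```

Normalizing before de-duplicating matters: the first two rows differ only in whitespace and would both survive a naive duplicate check.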

Additionally, organizing your data into databases or data frames can aid in further analysis or reporting. Depending on your project’s requirements, you might want to perform additional steps such as data modeling or visualizing insights through graphs. This step not only enhances the value of your scraped data but also provides actionable insights that can drive business decisions or research findings.
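Loading cleaned records into a database can be sketched with Python’s built-in `sqlite3` module. The `products` table and its columns are hypothetical; using the product name as the primary key together with `INSERT OR REPLACE` means re-scraping a page updates existing rows instead of duplicating them.

```python
import sqlite3


def store_products(conn, records):
    """Create the table if needed and upsert records keyed by product name."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products (name TEXT PRIMARY KEY, price REAL NOT NULL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO products (name, price) VALUES (:name, :price)", records
    )
    conn.commit()


def average_price(conn):
    """A simple analysis query over the stored data (None if the table is empty)."""
    (avg,) = conn.execute("SELECT AVG(price) FROM products").fetchone()
    return avg


# Example usage with an in-memory database; pass a file path to persist the data.
# conn = sqlite3.connect(":memory:")
# store_products(conn, [{"name": "Widget", "price": 10.0}])
```

Named placeholders (`:name`, `:price`) let `executemany` take the list of dicts directly and keep values out of the SQL string.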

Ethics in Web Scraping

Ethics play a vital role in web scraping, as it involves extracting data from platforms created by others. Understanding the legal implications and ethical guidelines ensures that your scraping practices remain respectful and lawful. It’s essential to be transparent about your data collection methods, especially if the data will be published or used commercially. Proper attribution and respect for data ownership are critical components of ethical scraping.

Respecting user privacy is equally important. Collecting personally identifiable information without consent can, depending on the jurisdiction, lead to serious legal consequences. Favoring responsible approaches, such as focusing on open data sources or anonymized data, aligns your practices with ethical norms and respects the rights of data producers.

Maintaining Scraping Efficiency

To maximize the efficiency of your web scraping projects, pay attention to how your scripts spend their time. Efficient extraction saves time and increases the volume of data you can gather. Optimize your scripts so they execute quickly and handle exceptions gracefully. Because scrapers spend most of their time waiting on the network, asynchronous requests or parallel workers can speed up the process considerably, as long as the total request rate still complies with the best practices described earlier.
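One way to apply the parallel processing mentioned above is a bounded thread pool from the standard `concurrent.futures` module; threads suit network-bound work. This sketch assumes `fetch` is any function that takes a URL and returns data (for example, a page downloader), and it records failures per URL so one bad page does not abort the job. An asynchronous alternative would use `asyncio` with a third-party HTTP client such as aiohttp.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def fetch_concurrently(urls, fetch, max_workers=4):
    """Run fetch(url) on a small thread pool; return (results, errors) dicts keyed by URL."""
    results, errors = {}, {}
    # A small max_workers caps how many requests hit the site at the same time.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # keep going; record which URL failed and why
                errors[url] = exc
    return results, errors
```

Keeping `max_workers` low, especially when every URL points at the same site, preserves the speed benefit without turning the scraper into a burden on the server.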

Moreover, monitoring the performance of your scraping jobs regularly is crucial. Establishing a logging system can help track errors and data quality issues. As websites evolve, your scraping scripts may require updates. Keeping this in mind and being adaptable to changes in website structures can safeguard the integrity and reliability of your data extraction efforts.
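A logging setup that tracks data quality issues can be sketched with Python’s standard `logging` module. The required field names and the 50% threshold are hypothetical choices: a sudden drop in the share of valid records is often the first visible sign that a site’s layout has changed.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(name)s: %(message)s")
logger = logging.getLogger("scraper")


def validate_records(records, required_fields=("name", "price"), min_valid_ratio=0.5):
    """Log a warning per incomplete record and an error if too few records are valid."""
    valid = []
    problems = 0
    for index, record in enumerate(records):
        # Treat None and "" as missing, but keep legitimate falsy values such as 0.
        missing = [field for field in required_fields if record.get(field) in (None, "")]
        if missing:
            problems += 1
            logger.warning("record %d is missing %s", index, ", ".join(missing))
        else:
            valid.append(record)
    if records and len(valid) / len(records) < min_valid_ratio:
        logger.error("only %d of %d records passed validation; the page layout may have changed",
                     len(valid), len(records))
    else:
        logger.info("%d of %d records passed validation", len(valid), len(records))
    return valid, problems
```

Routing these messages to a file or monitoring service gives you a history of each job’s health, which makes it easier to pinpoint when a scraper started degrading.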

Advanced Web Scraping Strategies

Once you have mastered the basics of web scraping, exploring advanced strategies can further enhance your abilities. Techniques such as scraping dynamic content loaded via APIs, dealing with paginated data, and extracting data from interactive elements can greatly enrich the data set you obtain. Many modern websites load content with JavaScript after the initial page load, so the data you want may not appear in the HTML at all. In those cases, requesting the underlying API directly or using a browser automation tool is often necessary.
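Paginated API data can be collected with a loop that follows a "next page" cursor until none remains. This sketch assumes a hypothetical JSON response shape of the form `{"items": [...], "next": "<cursor or null>"}` and a hypothetical `cursor` query parameter; real APIs vary, so check the requests your browser’s developer tools show for the site in question. The page-fetching function is passed in so the loop can be tested without a network.

```python
import json
from typing import Callable, Iterator, Optional
from urllib.parse import urlencode
from urllib.request import urlopen


def iter_paginated(fetch_page: Callable[[Optional[str]], dict], max_pages: int = 100) -> Iterator:
    """Yield items page by page, stopping when there is no next cursor or max_pages is hit."""
    cursor = None
    for _ in range(max_pages):  # a hard cap guards against endless or looping cursors
        page = fetch_page(cursor)
        yield from page.get("items", [])
        cursor = page.get("next")
        if not cursor:
            break


def http_fetch_page(base_url: str, cursor: Optional[str]) -> dict:
    """Fetch one JSON page; the `cursor` query parameter name is a hypothetical example."""
    url = base_url
    if cursor:
        separator = "&" if "?" in base_url else "?"
        url = f"{base_url}{separator}{urlencode({'cursor': cursor})}"
    with urlopen(url, timeout=10) as response:
        return json.load(response)


# Example usage against a hypothetical endpoint:
# from functools import partial
# items = list(iter_paginated(partial(http_fetch_page, "https://example.com/api/products")))
```

Because `iter_paginated` is a generator, items can be processed as they arrive instead of holding every page in memory.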

Additionally, incorporating machine learning algorithms to analyze and extract insights from your scraped data can provide a competitive edge. This integration can automate parts of the data processing stage, making informed predictions or classifications based on historical trends. The combination of advanced web scraping strategies with machine learning not only enriches your data collection process but also sets the stage for more sophisticated analyses.

Common Challenges in Web Scraping

While web scraping is a valuable tool for data extraction, it comes with its own set of challenges. For instance, websites that deploy anti-scraping measures such as CAPTCHAs or IP blocking can hinder your efforts. These obstacles call for a strategic approach, which may include rotating IP addresses or using proxy services to avoid detection.

Moreover, changes in website design can impact your existing scraping scripts, leading to malfunctions or incomplete data extraction. Regular maintenance of your scraping code and adapting it to new website structures is crucial for ensuring its longevity and reliability. Keeping abreast of updates in both scraping tools and website standards can help mitigate these challenges and enhance the overall scraping experience.
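A cheap way to catch a redesign before it silently corrupts your data is to check, on each run, that the CSS classes your extraction code depends on still appear in the page. This sketch uses the standard `html.parser` module; the class names and sample markup are hypothetical.

```python
from html.parser import HTMLParser


class ClassInventory(HTMLParser):
    """Record every CSS class name used anywhere in a document."""

    def __init__(self):
        super().__init__()
        self.classes = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "class" and value:
                self.classes.update(value.split())


def missing_classes(html, expected):
    """Return, sorted, the expected class names that no longer appear in the page."""
    inventory = ClassInventory()
    inventory.feed(html)
    inventory.close()
    return sorted(set(expected) - inventory.classes)


# Hypothetical before/after versions of the same page:
OLD_PAGE = '<li class="product"><span class="price">9.99</span></li>'
NEW_PAGE = '<li class="item"><span class="cost">9.99</span></li>'
```

Running this check before extraction, and failing loudly when it returns a non-empty list, turns a silent data-quality problem into an immediate alert that the script needs updating.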

Frequently Asked Questions

What are the best practices for web scraping techniques?

When utilizing web scraping techniques, it’s essential to follow best practices such as respecting robots.txt files, avoiding aggressive scraping to prevent server overload, and ensuring compliance with legal restrictions. Additionally, consider using user-agent rotation and delaying requests to mimic human behavior.

How do I start with a web scraping tutorial?

A good web scraping tutorial will guide you through the basics of data extraction methods. Start by selecting a programming language like Python, which has libraries such as Beautiful Soup and Scrapy. Follow step-by-step instructions to install these tools and learn how to parse HTML to extract the desired data.

What are the most common data extraction methods used in web scraping?

In web scraping, common data extraction methods include using HTML parsing libraries (like Beautiful Soup), direct API calls, and utilizing browser automation tools such as Selenium. Each method serves different purposes, depending on the complexity of the task and the website structure.

What is automated web scraping and how does it work?

Automated web scraping involves using scripts or software to collect data from web pages without manual intervention. By scheduling periodic tasks and utilizing tools that mimic human browsing, automated web scraping allows for efficient data gathering while saving time and resources.

How can I scrape websites effectively?

To scrape websites effectively, begin by identifying the data you want to collect. Choose the right technique, whether it’s calling an API or parsing HTML, and keep your approach ethical by complying with the website’s terms of service. Use libraries and frameworks that simplify the scraping process.

| Topic | Description |
|---|---|
| Web Scraping | The process of extracting data from websites. |
| Techniques | Methods include using libraries like Beautiful Soup for HTML parsing or Scrapy for crawling. |
| Limitations | Some sites block scraping or require permission to access their content. |
| Legal Considerations | Always check the site’s terms of service to avoid legal issues with scraping. |
| Use Cases | Commonly used for data analysis, research, and competitive analysis. |

Summary

Web scraping techniques are essential for efficiently gathering data from a wide range of online sources. Tools such as Beautiful Soup and Scrapy make effective data extraction practical, and awareness of limitations and legal considerations is critical for responsible scraping. Used carefully, these techniques turn online content into valuable data that supports data-driven decision-making.