Web Scraping Techniques: Extracting Content Effectively

Web scraping techniques have become essential for anyone who wants to harness the vast amount of information available online. Python’s web scraping libraries let developers extract valuable data from websites efficiently. Beautiful Soup, and the many tutorials written for it, are a good starting point for beginners learning HTML parsing. For larger and more complex projects, the Scrapy framework offers more robust features for web data extraction. Whatever the tool, it is crucial to respect each website’s terms of service and robots.txt file when scraping content from websites, so that data collection stays ethical.

In data mining, automated data harvesting is a powerful way to obtain online information. Content scraping lets users collect and interpret data from digital platforms where manual collection would be impractical. The Python programming language offers a suite of libraries tailored to these tasks, and frameworks like Scrapy provide a structured approach for large data-retrieval projects. Navigating the ethical implications, such as complying with website policies, remains a cornerstone of responsible data collection.

Introduction to Web Scraping Techniques

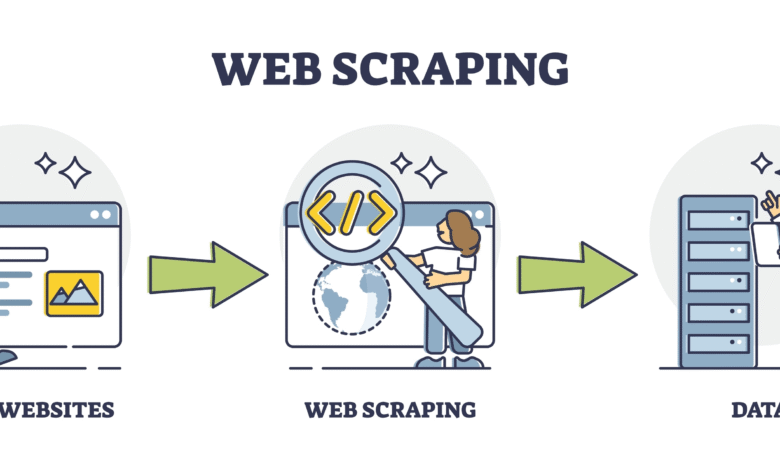

Web scraping is the automated process of gathering data from websites. Using a programming language such as Python together with its libraries, developers can extract valuable information efficiently. The practice is particularly useful for aggregating data from multiple sources, which supports extensive analysis and data-driven decision-making. Web scraping automates the retrieval of content that would be tedious to collect manually.

One of the most popular Python tools for web scraping is Beautiful Soup, a library for parsing HTML and XML documents. It does not download pages itself, so it is usually paired with an HTTP client such as requests. Another powerful tool is Scrapy, a framework built for large-scale scraping and web crawling. Both let developers navigate the HTML structure of a webpage, extract precisely the information they need, and store it for further processing.

Getting Started with Beautiful Soup Tutorial

Beautiful Soup is an easy-to-use Python library for web scraping. It parses HTML documents and gives you convenient access to the elements of the resulting document tree. To get started, install the library with pip: `pip install beautifulsoup4`. Once it is installed, you can combine it with the requests library (`pip install requests`) to retrieve web page content.
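A minimal sketch of fetching a page with requests and handing it to Beautiful Soup, assuming both packages are installed. The `fetch_soup` helper, its optional `session` parameter (which also makes it easy to test without network access), and the User-Agent string are illustrative choices, not part of either library.

```python
from typing import Optional

import requests
from bs4 import BeautifulSoup

# Hypothetical User-Agent: identify your scraper honestly to the site.
HEADERS = {"User-Agent": "example-scraper/0.1"}


def fetch_soup(url: str, session: Optional[requests.Session] = None,
               timeout: float = 10.0) -> BeautifulSoup:
    """Download a page and parse it with Beautiful Soup's built-in html.parser."""
    # Reuse a Session if one is supplied; otherwise make a one-off request.
    getter = session.get if session is not None else requests.get
    response = getter(url, timeout=timeout, headers=HEADERS)
    # Raise on 4xx/5xx responses instead of silently parsing an error page.
    response.raise_for_status()
    return BeautifulSoup(response.text, "html.parser")
```

For example, `fetch_soup("https://example.com/").title.string` would return the page title, if the page has one. Python’s built-in `"html.parser"` avoids an extra dependency; `"lxml"` is a faster parser if that package is installed.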

After fetching the content of a page, you can create a Beautiful Soup object and begin navigating its elements. For example, you can locate tags like `<title>` or `<h1>` for titles and `<p>` for paragraphs. Beautiful Soup also supports searching for elements with specific attributes or contents, which makes it easier to extract relevant data such as article text, image URLs, and links to other media.
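The navigation and search features described above might look like this on a small, hypothetical page. The sketch parses an inline HTML string so it runs offline; in practice the markup would come from an HTTP response. `find`, `find_all`, the `class_` keyword, and `get_text` are standard Beautiful Soup calls; the page content and variable names are made up for illustration.

```python
from bs4 import BeautifulSoup

# Hypothetical sample page standing in for a downloaded document.
HTML = """
<html>
  <head><title>Example article</title></head>
  <body>
    <h1>Scraping 101</h1>
    <p class="intro">First paragraph.</p>
    <p>Second paragraph.</p>
    <a href="/next" class="nav">Next page</a>
    <img src="/cover.png" alt="Cover">
  </body>
</html>
"""

soup = BeautifulSoup(HTML, "html.parser")

title = soup.title.get_text()                      # text of the <title> tag
heading = soup.find("h1").get_text(strip=True)     # first <h1> on the page
paragraphs = [p.get_text(strip=True) for p in soup.find_all("p")]
intro = soup.find("p", class_="intro").get_text(strip=True)  # search by attribute
links = [a["href"] for a in soup.find_all("a", href=True)]   # only <a> tags with href
images = [img["src"] for img in soup.find_all("img")]        # image URLs, not image data

print(title, heading, paragraphs, intro, links, images)
```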

Scraping Content from Websites with Scrapy Framework

The Scrapy framework is a powerful alternative that provides a complete solution for extracting data, from downloading and scheduling requests to exporting results. It is particularly useful when you need to scrape large volumes of data quickly and efficiently. In Scrapy you define your own spiders: classes that specify where crawling starts, how to move through the site, and how to extract data from each page according to rules you write.

Beyond extraction, Scrapy handles common crawling chores such as following pagination links and managing cookies and sessions. It works well for sites that serve their content as HTML and for JSON APIs. Note, however, that Scrapy does not execute JavaScript, so content that a page builds client-side usually has to be fetched from the underlying API or rendered with a separate browser-automation tool. Settings such as download delays and AutoThrottle let developers automate scraping across numerous pages without overwhelming the target server.

Understanding the Importance of Robots.txt

When scraping content from websites, it is crucial to respect the site’s robots.txt file. This file tells web crawlers which paths they may and may not request. It is advisory rather than a security mechanism: it is publicly readable and does not block access, so it does not protect sensitive content. It is also separate from the site’s terms of service, so before scraping any website, make it a habit to review both.
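Python’s standard library can check robots.txt rules before a request is made. This minimal sketch parses a hypothetical robots.txt from a string so it runs offline; against a real site you would point `RobotFileParser` at the site’s `/robots.txt` with `set_url()` and call `read()`. The user-agent name is also an assumption.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, parsed from a string so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-scraper"  # hypothetical crawler name

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url: str) -> bool:
    """Return True if robots.txt permits USER_AGENT to fetch url."""
    return parser.can_fetch(USER_AGENT, url)


print(allowed("https://example.com/articles/1"))    # True
print(allowed("https://example.com/private/data"))  # False
print(parser.crawl_delay(USER_AGENT))               # 2
```

Scrapy users get the same behavior from the `ROBOTSTXT_OBEY` setting. Either way, the check only helps you honor the file; it does not replace reading the site’s terms of service.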

Ignoring the rules in robots.txt can get your scraper banned from the site and, depending on the site’s terms and your jurisdiction, may carry legal risk. Conscientious web scraping therefore involves not just the technical aspects but also ethical considerations regarding data privacy and ownership.

Parsing HTML and Extracting Data

One of the essential steps in web scraping is parsing the HTML of web pages to extract meaningful data. By using libraries like Beautiful Soup or Scrapy, you can navigate through the nested HTML elements that structure web content. This capability is fundamental, as it allows you to select and extract specific parts of a webpage, such as articles, headlines, or product details.

After parsing the HTML, the extracted data can be transformed into structured formats such as CSV or JSON, or written directly to a database. This transformation is what makes the data usable for further analysis and manipulation, letting developers and data scientists apply techniques such as data visualization or machine learning.
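A minimal sketch of the CSV and JSON step using only the standard library. The records and field names are hypothetical stand-ins for whatever your parser extracts.

```python
import csv
import io
import json

# Hypothetical scraped records; field names are illustrative.
records = [
    {"title": "First post", "url": "https://example.com/1", "price": 9.99},
    {"title": "Second post", "url": "https://example.com/2", "price": 14.5},
]


def to_json(rows):
    """Serialize records as a pretty-printed JSON array."""
    return json.dumps(rows, indent=2, ensure_ascii=False)


def to_csv(rows):
    """Serialize records as CSV text, using the first record's keys as the header."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(to_json(records))
print(to_csv(records))
```

When writing CSV to a file rather than a string, open it with `newline=""`, as the `csv` module documentation recommends, to avoid extra blank lines on some platforms.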

Storing and Managing Scraped Data

Once you have successfully scraped data from web pages, the next step is to effectively store and manage this data. Storing scraped content in databases allows for efficient querying and data retrieval, which is essential for analysis. Utilizing databases like SQLite or PostgreSQL with Python can streamline this process.

Moreover, organizing the data properly during the scraping process ensures that it remains easily accessible and manageable. Adding metadata such as the source URL and timestamp can enhance the comprehensibility and usability of your scraped data, making it straightforward for future analysis or reporting.
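A sketch of storing pages in SQLite (via Python’s built-in `sqlite3` module) together with the source URL and a UTC timestamp. The table name, columns, and helper functions are assumptions for illustration; the same idea carries over to PostgreSQL with a different driver.

```python
import sqlite3
from datetime import datetime, timezone


def open_db(path=":memory:"):
    """Open (or create) the database and ensure the hypothetical pages table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS pages (
               id INTEGER PRIMARY KEY,
               source_url TEXT NOT NULL UNIQUE,
               title TEXT,
               body TEXT,
               scraped_at TEXT NOT NULL
           )"""
    )
    return conn


def save_page(conn, source_url, title, body):
    """Store one page with metadata; re-scraping the same URL replaces its row."""
    scraped_at = datetime.now(timezone.utc).isoformat()
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT OR REPLACE INTO pages (source_url, title, body, scraped_at) "
            "VALUES (?, ?, ?, ?)",
            (source_url, title, body, scraped_at),
        )
```

Using the source URL as a unique key avoids duplicate rows when a page is scraped again; if you need a history of changes, drop the `UNIQUE` constraint and keep every snapshot instead.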

Ethics and Best Practices in Web Scraping

Web scraping, while a powerful tool for data gathering, comes with ethical considerations that developers must be aware of. It is imperative to prioritize ethical scraping practices, which include abiding by the terms and conditions of the website being scraped, respecting data ownership, and minimizing server load during scraping operations.

Furthermore, transparency is essential when scraping data. If your purpose for scraping is to use the data commercially, notifying the site owners and obtaining permission can foster goodwill and prevent potential legal issues. Transparency not only safeguards you but also enhances the credibility of your web scraping efforts.

Data Extraction Strategies for Effective Web Scraping

Developing effective data extraction strategies is vital for successful web scraping projects. This starts with determining the required data and the best methodologies for accessing that data. Understanding the structure of the target website and identifying the unique identifiers for elements to be scraped will contribute to more efficient extraction.

XPath expressions and CSS selectors let your scraping logic target elements precisely, for example by tag, class, id, or position in the document. Isolating elements this way avoids picking up unrelated content from the surrounding HTML, which keeps the scraped data accurate and relevant.

Advanced Features of Scrapy for Large-Scale Projects

For larger web scraping projects, leveraging the advanced features of the Scrapy framework can significantly enhance efficiency. With its built-in support for handling concurrent requests, Scrapy enables developers to scrape a multitude of pages at once. This feature is particularly valuable when dealing with extensive data requirements across multiple websites.

Additionally, Scrapy’s middleware and pipeline systems allow developers to customize how requests and responses are processed, providing flexibility that can be vital for large-scale web scraping projects. Utilizing these advanced features can streamline the scraping process, from initial data collection to final storage.
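A sketch of the concurrency settings and pipeline hooks mentioned above. The setting names are real Scrapy settings, but the values are illustrative rather than recommendations, and `myproject` is a hypothetical project name. An item pipeline is an ordinary class whose `process_item` method receives each scraped item (along with the spider) and returns it, possibly modified, to the next stage.

```python
# settings.py-style values (Scrapy reads these from the project's settings module).
CONCURRENT_REQUESTS = 16            # total requests in flight at once
CONCURRENT_REQUESTS_PER_DOMAIN = 4  # cap for any single target site
AUTOTHROTTLE_ENABLED = True         # adjust delays based on server response latency
ITEM_PIPELINES = {
    # Hypothetical module path; lower numbers run earlier in the pipeline chain.
    "myproject.pipelines.CleanTextPipeline": 300,
}


class CleanTextPipeline:
    """Item pipeline that trims and collapses whitespace in string fields."""

    def process_item(self, item, spider):
        for key, value in list(item.items()):
            if isinstance(value, str):
                item[key] = " ".join(value.split())
        return item  # pass the cleaned item on to the next pipeline stage
```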

Frequently Asked Questions

What are some effective Python web scraping techniques?

Effective Python web scraping techniques include using libraries such as Beautiful Soup for parsing HTML and the Scrapy framework for building web crawlers. These tools support structured web data extraction by making it easy to navigate HTML tags and manage the requests that retrieve page content.

How does a Beautiful Soup tutorial help in web scraping?

A Beautiful Soup tutorial aids in web scraping by teaching how to parse HTML and XML documents, making it easier to scrape content from websites. It covers essential functions for searching tags, navigating the parse tree, and extracting specific data, which is crucial for effective data extraction.

What benefits does the Scrapy framework offer for web data extraction?

The Scrapy framework offers numerous benefits for web data extraction, including asynchronous requests, built-in handling of various formats like JSON and XML, and an easy-to-use API for defining data models. Additionally, Scrapy provides powerful tools for managing spiders and pipelines to clean up and store scraped data.

How can I scrape content from websites while adhering to their policies?

To scrape content from websites responsibly, always check the robots.txt file for scraping guidelines and follow the site’s terms of service. Use respectful scraping techniques: add delays between requests, randomize the length of those delays, and limit the amount of data you extract to avoid overloading the server.
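The delay-and-jitter advice can be sketched as a small throttle class using only the standard library. The class name and default values are hypothetical; the clock and sleep functions are injectable so the behavior can be tested without actually waiting.

```python
import random
import time


class PoliteThrottle:
    """Wait at least base_delay seconds, plus random jitter, between requests."""

    def __init__(self, base_delay=1.0, jitter=0.5,
                 clock=time.monotonic, sleep=time.sleep):
        self.base_delay = base_delay
        self.jitter = jitter
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until it is polite to send the next request; return seconds slept."""
        slept = 0.0
        if self._last is not None:
            target = self.base_delay + random.uniform(0, self.jitter)
            elapsed = self._clock() - self._last
            if elapsed < target:
                slept = target - elapsed
                self._sleep(slept)
        self._last = self._clock()
        return slept
```

Call `throttle.wait()` immediately before each request in your scraping loop. Inside Scrapy, `DOWNLOAD_DELAY` and AutoThrottle cover the same need.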

What is the process for scraping content from a website using Python?

The process for scraping content from a website using Python typically involves several steps. First, you send a request to the desired URL using libraries like Requests. Then, parse the HTML content with Beautiful Soup or Scrapy. Finally, navigate through the HTML structure to extract necessary information like text or images.

Why should I use Python for web scraping instead of other programming languages?

Python is widely favored for web scraping because of its simplicity and powerful libraries such as Beautiful Soup and Scrapy. Its ease of use allows developers to quickly write and run web scraping scripts, while its extensive community support aids in troubleshooting and learning effective web scraping techniques.

What kind of data can I extract using web scraping techniques?

Using web scraping techniques, you can extract various types of data, including text, images, links, and structured data such as tables or lists from websites. This versatility allows for rich data extraction from online resources, which can be beneficial for market research, competitive analysis, and content aggregation.

What should I consider when choosing a web scraping library for Python?

When choosing a web scraping library for Python, consider factors such as the complexity of the website being scraped, ease of use, documentation quality, and community support. Libraries like Beautiful Soup are great for simple projects, while Scrapy is better suited for more complex web data extraction tasks involving multiple pages.

| Key Point | Details |
|---|---|
| Web Scraping Libraries | Use libraries like Beautiful Soup and Scrapy to facilitate web scraping in Python. |
| Process of Web Scraping | The process generally involves requesting page content, parsing HTML, and extracting information. |
| Types of Information to Extract | Relevant information such as titles, body text, articles, and keywords can be extracted. |
| Respect Robots.txt | Always check the website’s robots.txt file and terms of service to comply with scraping policies. |

Summary

Web scraping techniques are essential tools for efficiently gathering information from the web. In a digital age where data is abundant, learning to extract content effectively with tools such as Beautiful Soup or Scrapy can give researchers, analysts, and anyone else who gathers data a significant advantage. It’s crucial to respect each website’s policies by checking its robots.txt file and terms of service before scraping.