Web Scraping Techniques: Essential Tips for Beginners

Web scraping techniques offer a powerful way to gather data from websites, making them a popular choice among data analysts and enthusiasts alike. Knowing how to web scrape can significantly improve your ability to extract data from websites efficiently. With the right web scraping tools, users can automate the collection of information, saving hours of manual work. However, it’s important to adhere to scraping best practices to ensure ethical and legal compliance. Whether you’re looking to retrieve product details or conduct competitor analysis, mastering these techniques can give you a competitive edge in data-driven decision-making.

Harvesting online data draws on a range of methods, from automated bots to manual capture, that are collectively known as data harvesting techniques. Understanding when each method applies leads to better results, and familiarity with the most effective data extraction methods and tools makes it easier to find what you need in the volume of content available online.

Understanding Web Scraping Basics

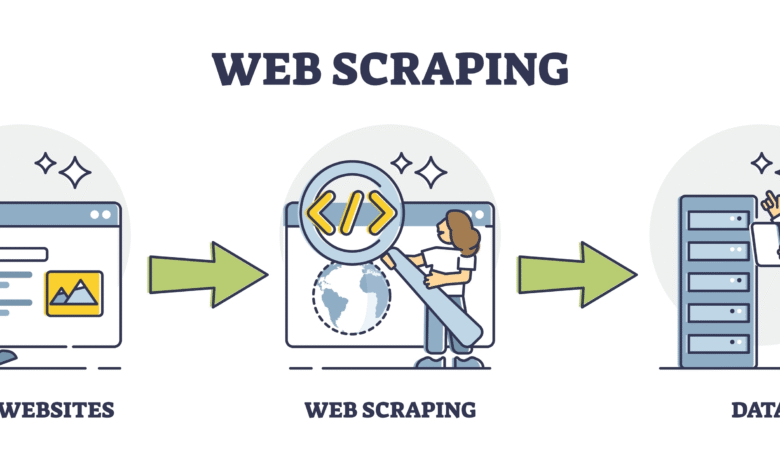

Web scraping, also called web harvesting or web data extraction, is a technique for extracting data from websites. It enables individuals and businesses to gather large amounts of information efficiently without manual effort. Understanding how to web scrape is critical for anyone looking to leverage publicly available data for analysis or other applications.

At its core, web scraping involves sending requests to web servers and retrieving the HTML content of web pages. From there, the data can be parsed and structured into a usable format, such as CSV or JSON. Mastering web scraping basics opens up a wealth of possibilities for data collection and analysis.
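The parse-and-structure step described above can be sketched with nothing but Python's standard library. This is a minimal illustration, not a definitive implementation: the `LinkCollector` class, the helper names, and the sample HTML are all assumptions made for the example, and the HTML string stands in for content that would normally come from an HTTP response.

```python
import csv
import io
import json
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href and visible text of every <a> element."""

    def __init__(self):
        super().__init__()
        self.links = []      # finished records
        self._href = None    # href of the <a> currently open, if any
        self._text = []      # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = "".join(self._text).strip()
            self.links.append({"text": text, "url": self._href})
            self._href = None


def parse_links(html):
    """Turn raw HTML into a list of {"text", "url"} records."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


def to_json(records):
    return json.dumps(records, indent=2)


def to_csv(records):
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["text", "url"])
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Hypothetical sample; in practice this would be the body of an HTTP response
SAMPLE_HTML = """
<ul>
  <li><a href="/widgets/1">Blue Widget</a></li>
  <li><a href="/widgets/2">Red <b>Widget</b></a></li>
</ul>
"""

records = parse_links(SAMPLE_HTML)
print(to_json(records))
print(to_csv(records))
```

A dedicated parsing library would usually replace the hand-written `HTMLParser` subclass in a real project; the standard-library version is used here only to keep the sketch dependency-free.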

Web Scraping Techniques and Tools

There are various web scraping techniques, including HTML parsing, DOM parsing, and browser automation. Each technique has its own use cases and is suited for different types of sites. For instance, if you’re working with JavaScript-heavy sites, using browser automation tools like Selenium may be the best approach.

In addition to understanding web scraping techniques, utilizing the right web scraping tools can dramatically improve your efficiency. Popular tools include Beautiful Soup for Python, which is fantastic for HTML parsing, and Scrapy, a powerful framework for extracting web data. Automating the extraction process not only saves time but also minimizes human error.

Best Practices for Effective Web Scraping

When engaging in web scraping, it’s essential to follow best practices to ensure you do so legally and ethically. Always check a website’s Terms of Service before scraping, as some sites explicitly prohibit it. Additionally, implement respectful scraping techniques, such as limiting the frequency of your requests and providing a user-agent string to identify your scraper.
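As a rough sketch of those two habits, the snippet below spaces requests out with a small throttle and attaches an identifying User-Agent header using the standard library's `urllib`. The `Throttle` class, the `fetch` helper, and the user-agent string are hypothetical names chosen for illustration; the right delay depends on the site.

```python
import time
import urllib.request

# Hypothetical identifier; use a name and contact address that are really yours
USER_AGENT = "example-scraper/0.1 (+mailto:you@example.com)"


class Throttle:
    """Enforces a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock      # injectable so the logic can be tested without waiting
        self._sleep = sleep
        self._last = None        # time of the previous request, if any

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


def build_request(url):
    """Attach the identifying User-Agent header to an outgoing request."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


def fetch(url, throttle, timeout=10):
    """Wait for the throttle, then download the page as text."""
    throttle.wait()
    with urllib.request.urlopen(build_request(url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

A call such as `fetch(url, Throttle(2.0))` inside a loop, reusing one `Throttle` instance, would then leave at least two seconds between requests.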

Furthermore, data extraction from websites should focus on extracting only the necessary information. Over-scraping can not only lead to getting blocked by the site but can also create significant data management issues. By prioritizing quality over quantity, web scrapers can create a more robust and effective data collection strategy.

Navigating Legal Considerations in Web Scraping

The legal landscape of web scraping can be complex, with various laws and regulations governing the use of web data. Understanding copyright issues, data privacy laws, and potential liabilities is crucial for anyone involved in scraping. For instance, the Computer Fraud and Abuse Act (CFAA) in the U.S. has implications for unauthorized access to computer systems.

Additionally, companies may employ measures to limit or prevent scraping on their sites. Always consider seeking permission where necessary and maintaining transparency about your intentions if you are scraping a particular data set. This respect for legal boundaries will not only protect you but also encourage a more cooperative relationship with the data source.

Advanced Strategies for Web Data Extraction

For those looking to enhance their web scraping practices, leveraging APIs can be a game changer. Many websites offer APIs that allow direct access to their data without the complexities of scraping. Using these APIs ensures compliance and can provide structured data, making data extraction from websites more seamless.
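To illustrate why an API is often simpler than scraping, here is a minimal sketch that builds a query URL and reads a JSON response with the standard library. The endpoint, the query parameters, and the `{"items": [...]}` response shape are all hypothetical; a real API documents its own.

```python
import json
import urllib.parse
import urllib.request


def build_api_url(base_url, **params):
    """Append URL-encoded query parameters to a base endpoint."""
    query = urllib.parse.urlencode(params)
    return f"{base_url}?{query}" if query else base_url


def fetch_json(url, opener=urllib.request.urlopen, timeout=10):
    """Download a URL and decode its body as JSON.

    `opener` is injectable so the function can be exercised without a network.
    """
    with opener(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def product_names(payload):
    # Assumes a hypothetical response shape: {"items": [{"name": ...}, ...]}
    return [item["name"] for item in payload.get("items", [])]


# Hypothetical endpoint, shown for shape only
url = build_api_url("https://api.example.com/v1/products", category="widgets", page=2)
print(url)
```

Because the response is already structured, there is no HTML to parse and no selector to break when the page layout changes.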

Additionally, consider implementing rate limiting in your scraping logic to avoid overwhelming the server and to reduce the chance of your IP address being blocked. Some scrapers also alternate between multiple IPs or use proxies to maintain uninterrupted access to the content.

Common Challenges in Web Scraping and How to Overcome Them

Web scraping is not without its challenges. Websites can frequently change their structure, rendering your scraping scripts ineffective. This is often referred to as ‘website fragility.’ To combat this, regularly update your scraping scripts and implement error handling protocols to manage and adapt to these changes efficiently.
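One way to soften the impact of layout changes is to try several candidate selectors in order and fail loudly when none match. The sketch below does this with the standard library's `html.parser`; the class names `price` and `product-price`, the `ExtractionError` exception, and the helper functions are assumptions made for illustration.

```python
import logging
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect nesting depth
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}


class ExtractionError(Exception):
    """Raised when no known selector matches the page."""


class ClassTextFinder(HTMLParser):
    """Records the text of the first element carrying a given CSS class."""

    def __init__(self, class_name):
        super().__init__()
        self.class_name = class_name
        self.text = None
        self._depth = 0      # greater than 0 while inside the matching element
        self._parts = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._depth:
            self._depth += 1
        elif self.text is None:
            classes = (dict(attrs).get("class") or "").split()
            if self.class_name in classes:
                self._depth = 1

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:
            self.text = "".join(self._parts).strip()

    def handle_data(self, data):
        if self._depth:
            self._parts.append(data)


def extract_field(html, candidate_classes):
    """Return the text for the first candidate class that matches."""
    for name in candidate_classes:
        finder = ClassTextFinder(name)
        finder.feed(html)
        finder.close()
        if finder.text:
            return finder.text
    raise ExtractionError(
        f"none of {candidate_classes} matched; the page layout may have changed"
    )


def scrape_price(html):
    """Log and skip pages that no longer match, instead of crashing the run."""
    try:
        return extract_field(html, ["price", "product-price"])
    except ExtractionError as exc:
        logging.warning("skipping page: %s", exc)
        return None


print(scrape_price('<span class="price">$10</span>'))
print(scrape_price('<div class="product-price sale"><b>$12</b></div>'))
print(scrape_price("<p>layout changed</p>"))
```

Logging a warning instead of silently returning empty data makes it easier to notice when a site has changed and the script needs updating.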

Moreover, security measures such as CAPTCHAs present obstacles to web scrapers. Employing browser automation tools capable of bypassing simple CAPTCHAs or utilizing human-solving services are common practices to navigate these challenges effectively.

The Future of Web Scraping Technologies

The world of web scraping is ever-evolving, with advancements in technology leading to more sophisticated scraping tools and techniques. As artificial intelligence and machine learning become increasingly integrated into web scraping platforms, the capacity for predictive data extraction is on the rise, enabling further automation and efficiency.

Additionally, the growing awareness of data ethics and regulations suggests that the future of scraping will require a more conscientious approach toward compliance and user privacy. Innovations in data anonymization and ethical scraping practices are likely to become essential components of web scraping strategies.

Building a Web Scraping Project: Step-by-Step Guide

Starting a web scraping project can be daunting without a clear plan. Begin with defining your goals: what data do you intend to collect and how will it benefit your analysis? Next, identify the websites that hold this information and evaluate their structure. Tools like Chrome DevTools can assist you in understanding the layout of web pages.

Once you’ve assessed the website, write your scraping scripts using appropriate libraries or tools based on the complexity of your target data. Testing these scripts in small batches can help in troubleshooting and ensuring accuracy before scaling up your project.
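A small-batch trial run might look something like the sketch below: it scrapes only the first few URLs, validates each record, and reports failures instead of stopping at the first one. The function names, the validation rules, and the `FAKE_PAGES` stand-in data are all hypothetical, chosen so the example runs without network access.

```python
def run_small_batch(urls, scrape, validate, limit=5):
    """Scrape at most `limit` URLs and separate good records from failures."""
    records, failures = [], []
    for url in urls[:limit]:
        try:
            record = scrape(url)
            validate(record)
        except Exception as exc:  # broad on purpose: a trial run should report, not crash
            failures.append((url, f"{type(exc).__name__}: {exc}"))
        else:
            records.append(record)
    return records, failures


def validate_product(record):
    """Hypothetical check: a record needs a non-empty name and a positive price."""
    if not record.get("name"):
        raise ValueError("missing name")
    price = record.get("price")
    if not isinstance(price, (int, float)) or price <= 0:
        raise ValueError("invalid price")


# Stand-in for a real scraper so the sketch runs without network access
FAKE_PAGES = {
    "https://example.com/p/1": {"name": "Blue Widget", "price": 9.99},
    "https://example.com/p/2": {"name": "", "price": 4.50},
}


def fake_scrape(url):
    return FAKE_PAGES[url]


records, failures = run_small_batch(
    ["https://example.com/p/1", "https://example.com/p/2", "https://example.com/p/3"],
    fake_scrape,
    validate_product,
)
print(len(records), "ok;", len(failures), "failed")
for url, reason in failures:
    print(url, "->", reason)
```

Once the failure list is empty for a representative sample, raising `limit` is a reasonable next step.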

Learning Resources for Aspiring Web Scrapers

For those looking to deepen their knowledge of web scraping, a variety of resources are available. Online platforms such as Coursera and Udemy offer courses specifically focused on web scraping techniques and tools, ensuring that learners engage with practical examples and real-world applications.

Books, blogs, and forums related to web scraping provide a plethora of information and community support. Engaging with fellow web scrapers, whether through online communities or local meetups, can foster learning and sharing of best practices.

Frequently Asked Questions

What are some common web scraping techniques?

Common web scraping techniques include HTML parsing, utilizing APIs, and web crawling. Each method serves different purposes depending on the data extraction needs, such as using libraries like Beautiful Soup or Scrapy for HTML parsing.

How do I get started with web scraping?

To get started with web scraping, first identify the data you need, select appropriate web scraping tools like Python libraries (Beautiful Soup, Scrapy, or Selenium), then write scripts to automate data extraction while adhering to website terms of service.

What are the best web scraping tools available?

Some of the best web scraping tools available include Scrapy for comprehensive scraping projects, Beautiful Soup for HTML parsing, and Octoparse for a user-friendly interface. Each tool offers unique features suited for different web scraping techniques.

What are some best practices for data extraction from websites?

Best practices for data extraction include obeying robots.txt files, avoiding excessive requests to prevent server overload, and ensuring data accuracy through validation techniques. It’s also essential to check the legal implications of web scraping the targeted site.
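Checking robots.txt can be automated with Python's built-in `urllib.robotparser`. The sketch below parses a hypothetical robots.txt from a string so it runs offline; the rules, the `example-scraper` user agent, and the URLs are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real one lives at https://<site>/robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def load_rules(robots_txt):
    """Build a parser from robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)

for url in ("https://example.com/products/1", "https://example.com/private/report"):
    allowed = rules.can_fetch("example-scraper", url)
    print(url, "->", "allowed" if allowed else "disallowed")

# Delay in seconds requested by the site, or None if it does not set one
print("crawl delay:", rules.crawl_delay("example-scraper"))
```

Against a live site, calling `set_url()` with the robots.txt address followed by `read()` would fetch and parse the file in place of the inline string.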

How can I ensure my web scraping is ethical?

To ensure your web scraping is ethical, always check and follow the website’s terms of service, limit the frequency of your requests, and give credit for any data used publicly. Additionally, respect copyright laws to avoid legal issues.

Can I automate data extraction from websites?

Yes, you can automate data extraction using web scraping tools like Selenium, which allows you to simulate browser actions, or Scrapy, which can crawl entire websites efficiently. Automation makes large-scale data extraction easier and faster.

What is the difference between web crawling and web scraping?

Web crawling is the process of systematically browsing the web to index content, while web scraping focuses on extracting specific data from web pages. Both techniques are used in gathering information from the internet but serve distinct purposes.

| Key Point | Explanation |
|---|---|
| Website Access Limitations | Cannot access specific web pages for scraping. |
| General Assistance | Can provide guidance on extracting content and general web scraping queries. |

Summary

Web scraping techniques are essential for efficiently extracting and processing data from websites. Various methods and tools, from HTML parsers to browser automation and APIs, can facilitate web scraping tasks. By understanding the underlying concepts and implementing best practices, individuals can harness data from the web without violating site policies. Always respect robots.txt files and adhere to ethical scraping guidelines to maintain a positive relationship with website owners.