Web Scraping Techniques: Best Practices and Tools

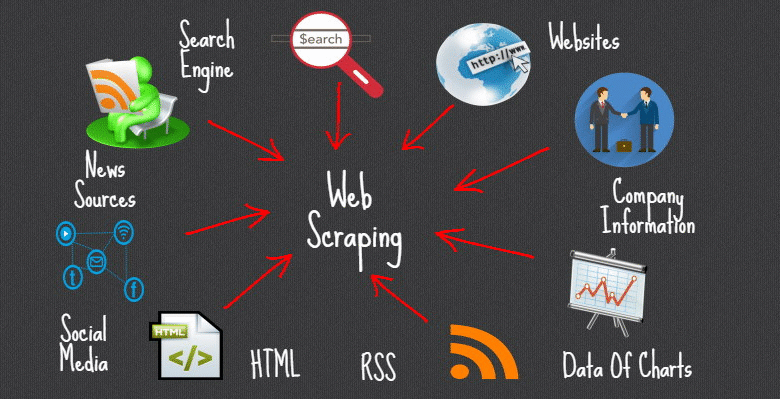

Web scraping techniques let you extract data from online sources efficiently. By automating data extraction, you can collect information with far less time and effort than copying it by hand. Understanding scraping best practices is crucial for ensuring both legal compliance and data quality. With the right web scraping tools, such as Python libraries or dedicated software, you can learn how to scrape a website effectively. For beginners, a well-structured scraping tutorial provides the foundational knowledge needed to start gathering online data.

Extracting information from websites goes by several names, including data harvesting and content mining. These processes enable individuals and businesses to gather insights and track trends across large amounts of online data. Effective scraping methods support both research and competitive analysis, and the extracted data can be loaded directly into databases for further processing. Whether it’s for market research or analytics, mastering these skills opens up a wealth of opportunities.

Understanding Web Scraping Techniques

Web scraping techniques encompass a range of methods used to automatically extract large amounts of information from websites. At its core, web scraping often involves sending requests to a website’s server and retrieving the HTML content, which can then be parsed to pull specific data fields. This process may utilize various programming languages such as Python, with libraries like Beautiful Soup or Scrapy, enabling developers to write scripts that efficiently navigate and extract data from multiple web sources. Understanding these scraping techniques is essential for anyone looking to automate data collection.
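The request-then-parse flow described above can be sketched with the Python standard library alone. This is a minimal illustration, not a replacement for Beautiful Soup or Scrapy: the `fetch_html` helper, the `TitleLinkParser` class, the user-agent string, and the sample HTML are all assumptions made for the example. The sample is parsed from a string so the sketch runs without network access.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch_html(url, timeout=10.0):
    """Send a GET request and return the response body as text."""
    # The user-agent value is a made-up placeholder for this sketch.
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class TitleLinkParser(HTMLParser):
    """Collect the page title and every href found in <a> tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def parse_page(html):
    """Parse an HTML string and return the populated parser."""
    parser = TitleLinkParser()
    parser.feed(html)
    return parser


# Hypothetical page content standing in for a real response body.
SAMPLE_HTML = """
<html><head><title>Example Store</title></head>
<body><a href="/products">Products</a> <a href="/about">About</a></body></html>
"""

page = parse_page(SAMPLE_HTML)
print(page.title, page.links)
```

In a real script, `parse_page(fetch_html(url))` would connect the two steps. A dedicated parsing library is usually more forgiving of malformed markup than this hand-written parser.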

There are also several best practices to keep in mind when employing web scraping techniques. Respect the website’s robots.txt file, which tells automated clients which parts of the site the owner does not want them to access. Be aware of rate limiting, and avoid sending too many requests in a short period so that you neither overload the server nor get blocked. Web scraping tools with built-in compliance features, such as robots.txt handling and request throttling, can help keep your data extraction within legal and ethical bounds.
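As a rough sketch of these two practices, the standard library’s `urllib.robotparser` can check whether a path is allowed and read a `Crawl-delay` value if the site declares one. The robots.txt content, the `example-scraper` user-agent name, and the helper functions below are assumptions for illustration. A real script would load the file from the target site instead of a string.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; normally fetched from <site>/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_robot_parser(robots_text):
    """Parse robots.txt rules from a string."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def allowed_urls(parser, user_agent, urls):
    """Keep only the URLs that robots.txt permits for this user agent."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


def polite_delay(parser, user_agent, default_seconds=1.0):
    """Use the declared Crawl-delay, or fall back to a default pause."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default_seconds


def fetch_politely(urls, fetch, delay_seconds):
    """Call fetch(url) for each URL, pausing between consecutive requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            time.sleep(delay_seconds)
        results.append(fetch(url))
    return results


robots = build_robot_parser(ROBOTS_TXT)
candidates = [
    "https://example.com/products",
    "https://example.com/private/admin",
]
to_fetch = allowed_urls(robots, "example-scraper", candidates)
delay = polite_delay(robots, "example-scraper")
print(to_fetch, delay)
```

Here `fetch` is any callable that retrieves a page, so the throttling logic stays independent of the HTTP library in use.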

Key Data Extraction Methods

Data extraction methods are vital in web scraping, as they dictate how information is gathered and processed. Methods such as XPath, CSS selectors, and regular expressions are commonly employed to pinpoint the exact data elements needed from the HTML structure of the website. Each method has its unique syntax and usage, making it important for scrapers to choose the right one based on the complexity of the target web data.
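To make the difference concrete, here is a small sketch that pulls product names with an XPath-style query and prices with a regular expression. It assumes a well-formed, made-up snippet, because the standard library’s `xml.etree.ElementTree` needs valid XML and supports only a limited subset of XPath. CSS selectors are not available in the standard library; with a third-party parser the equivalent selector would be something like `li.item > span.name`.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup used only for this illustration.
SNIPPET = """
<div>
  <ul id="products">
    <li class="item"><span class="name">Widget</span><span class="price">$19.99</span></li>
    <li class="item"><span class="name">Gadget</span><span class="price">$5.00</span></li>
  </ul>
</div>
"""

root = ET.fromstring(SNIPPET)

# XPath-style query: every name span inside a list item with class "item".
name_path = ".//li[@class='item']/span[@class='name']"
names = [element.text for element in root.findall(name_path)]

# Regular expression: a dollar sign followed by digits, a dot, and two digits.
PRICE_RE = re.compile(r"\$(\d+\.\d{2})")
prices = [float(value) for value in PRICE_RE.findall(SNIPPET)]

print(names, prices)
```

XPath and CSS selectors follow the document structure, so they tend to survive cosmetic changes better than regular expressions, which work best on small, predictable fragments such as prices or dates.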

In the realm of data extraction, automated solutions often integrate these methods into user-friendly interfaces, providing users with a seamless experience to scrape data without requiring extensive programming knowledge. Many of these solutions come equipped with pre-built templates for popular websites, significantly diminishing the time required to set up a scraping project.

Scraping Best Practices for Success

To achieve successful data acquisition through web scraping, adhering to best practices is paramount. Understanding ethical guidelines and ensuring compliance with local laws is the first step. User-agent rotation and proxy servers can mask your scraping identity and reduce the risk of IP bans. They do not reduce the load you place on a site, however, so combine them with request throttling to keep your scraper efficient without degrading the target website.
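User-agent rotation can be sketched as cycling through a list of header values when building requests. The user-agent strings and the `build_requests` helper below are placeholders invented for this example, and the requests are only constructed, not sent. Proxy configuration is not shown.

```python
from itertools import cycle
from urllib.request import Request

# Placeholder user-agent strings; real ones would identify actual clients.
USER_AGENTS = [
    "example-scraper/0.1 (profile A)",
    "example-scraper/0.1 (profile B)",
]


def build_requests(urls, user_agents):
    """Create one Request per URL, rotating through the user agents."""
    rotation = cycle(user_agents)
    return [
        Request(url, headers={"User-Agent": next(rotation)})
        for url in urls
    ]


urls = [
    "https://example.com/page/1",
    "https://example.com/page/2",
    "https://example.com/page/3",
]
prepared = build_requests(urls, USER_AGENTS)

for request in prepared:
    # urllib stores header names capitalized as "User-agent".
    print(request.full_url, request.get_header("User-agent"))
```

Whether rotating identities is appropriate depends on the site’s terms of service, so treat this as a mechanism to understand, not a default to enable.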

Moreover, it’s important to maintain organized data storage. Whether you’re using relational databases or flat files, implementing a structured format helps in managing and analyzing your scraped data effectively. Incorporating logging and error handling in your scraping scripts will facilitate smoother operations and help identify issues quickly, ensuring that you maintain consistent data flow without significant interruptions.
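A minimal sketch of structured storage with logging and error handling might look like the following. It assumes scraped records arrive as dictionaries with `name` and `price` keys; the table layout, the `save_records` function, and the sample records are illustrative choices, not a prescribed schema.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("scraper")


def save_records(conn, records):
    """Insert valid records into SQLite and log the ones that are skipped."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products "
        "(name TEXT PRIMARY KEY, price REAL NOT NULL)"
    )
    saved = 0
    for record in records:
        try:
            row = (record["name"], float(record["price"]))
        except (KeyError, TypeError, ValueError) as exc:
            # A malformed record should not stop the whole run.
            logger.warning("Skipping malformed record %r: %r", record, exc)
            continue
        conn.execute(
            "INSERT OR REPLACE INTO products (name, price) VALUES (?, ?)", row
        )
        saved += 1
    conn.commit()
    logger.info("Saved %d of %d records", saved, len(records))
    return saved


# Hypothetical scraped records, two of them deliberately broken.
scraped = [
    {"name": "Widget", "price": "19.99"},
    {"name": "Gadget"},
    {"name": "Gizmo", "price": "n/a"},
]

conn = sqlite3.connect(":memory:")
saved_count = save_records(conn, scraped)
rows = conn.execute("SELECT name, price FROM products ORDER BY name").fetchall()
print(saved_count, rows)
```

Logging each skipped record keeps a run from failing silently, and the primary key prevents duplicate rows when the same page is scraped twice.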

Essential Web Scraping Tools

When diving into the world of web scraping, utilizing the right tools can dramatically enhance your data extraction experience. Tools like Octoparse, ParseHub, and WebHarvy provide point-and-click functionality that simplifies the scraping process for those who might lack programming skills. Each of these tools is designed to accommodate various scraping scenarios, allowing users to extract data from simple web pages to dynamically loaded websites.

On the other hand, for those with coding experience, libraries such as Beautiful Soup, Selenium, and Scrapy offer greater customization and control over the scraping process. These tools provide the flexibility to implement complex scraping logic, handle JavaScript-rendered pages (typically by driving a real browser with Selenium), and integrate smoothly with data processing pipelines, making them indispensable for serious web scrapers.

How to Scrape a Website Effectively

Learning how to scrape a website effectively requires a clear understanding of the HTML structure of the target site. Begin by analyzing the website using browser developer tools to inspect the elements you wish to scrape. Properly mapping out the necessary tags and their attributes will facilitate the extraction process. Once you have a clear plan, you can write a script that targets these elements using your preferred web scraping framework or tool.

It’s essential to test your scraping scripts iteratively since websites frequently change their designs and structures. By setting up automated checks or alerts, you can modify your scraping logic in response to any website updates, ensuring your scraped data remains accurate and relevant over time.
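One simple form of automated check is to verify that the elements your scraper depends on still exist before trusting a run. The sketch below assumes the scraper relies on two hypothetical tag and class pairs. The `SelectorProbe` class, the expected pairs, and both sample layouts are made up for illustration.

```python
from html.parser import HTMLParser


class SelectorProbe(HTMLParser):
    """Record every (tag, class) pair that appears in a page."""

    def __init__(self):
        super().__init__()
        self.seen = set()

    def handle_starttag(self, tag, attrs):
        class_value = dict(attrs).get("class") or ""
        for css_class in class_value.split():
            self.seen.add((tag, css_class))


# Elements the hypothetical scraper expects to find on a product page.
EXPECTED = {("h1", "product-title"), ("span", "price")}


def missing_selectors(html, expected=EXPECTED):
    """Return the expected (tag, class) pairs that the page no longer has."""
    probe = SelectorProbe()
    probe.feed(html)
    return sorted(expected - probe.seen)


OLD_LAYOUT = '<h1 class="product-title">Widget</h1><span class="price">$19.99</span>'
NEW_LAYOUT = '<h1 class="product-title">Widget</h1><span class="amount">$19.99</span>'

print(missing_selectors(OLD_LAYOUT))
print(missing_selectors(NEW_LAYOUT))
```

A non-empty result is a signal to raise an alert or stop the run before incomplete data reaches storage.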

Scraping Data Tutorial for Beginners

For those just starting with web scraping, following a structured tutorial can provide a solid foundation. Begin by selecting a straightforward website to practice on, one that doesn’t have complex JavaScript or authentication barriers. A simple static site allows beginners to grasp the basics without getting overwhelmed. Tutorials often guide newcomers through the installation of necessary tools and libraries, as well as step-by-step instructions for writing their first scraping script.

Over the course of a scraping tutorial, learners also gain insight into troubleshooting common challenges, such as handling pagination across multiple pages and choosing among data storage options. Engaging with community forums or support channels can further enhance the learning experience, providing access to valuable insights and best practices from seasoned web scrapers.
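Pagination usually comes down to following a "next" link until none remains. The sketch below simulates a three-page listing in memory so it runs offline; the page contents, the URL scheme, and the `scrape_all_pages` function are assumptions, and the regular expressions only suit this simplified markup.

```python
import re

# Hypothetical paginated listing, keyed by URL.
PAGES = {
    "/items?page=1": '<li class="item">A</li><li class="item">B</li>'
                     '<a rel="next" href="/items?page=2">Next</a>',
    "/items?page=2": '<li class="item">C</li>'
                     '<a rel="next" href="/items?page=3">Next</a>',
    "/items?page=3": '<li class="item">D</li>',
}

ITEM_RE = re.compile(r'<li class="item">(.*?)</li>')
NEXT_RE = re.compile(r'<a rel="next" href="([^"]+)"')


def scrape_all_pages(start_url, fetch, max_pages=50):
    """Collect items page by page, following the rel="next" link."""
    items = []
    visited = set()
    url = start_url
    # The visited set and max_pages guard against loops and runaway crawls.
    while url and url not in visited and len(visited) < max_pages:
        visited.add(url)
        html = fetch(url)
        items.extend(ITEM_RE.findall(html))
        match = NEXT_RE.search(html)
        url = match.group(1) if match else None
    return items


all_items = scrape_all_pages("/items?page=1", PAGES.__getitem__)
print(all_items)
```

Passing `fetch` as a parameter keeps the loop testable: the same function works with a real HTTP client once the selectors match the target site.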

Handling Legal Considerations in Web Scraping

As web scraping continues to grow in popularity, it is critical to understand the legal landscape surrounding this practice. There are varying regulations across different jurisdictions concerning data collection, and some websites explicitly prohibit scraping activities. Familiarizing yourself with terms of service and data privacy laws, such as GDPR, is essential to minimize the risk of litigation and foster a responsible scraping process.

This legal awareness is particularly relevant when dealing with sensitive data. Even when data is publicly available, ethical considerations should encourage scrapers to avoid practices that could infringe on users’ privacy rights or exploit data without proper consent. Being proactive about these considerations not only protects you legally but also promotes a healthier relationship between data providers and scrapers.

Advanced Techniques in Web Scraping

Once you have mastered the basics of web scraping, exploring advanced techniques can significantly enhance your data extraction capabilities. Techniques such as web crawling, which allows for the continuous discovery of new data across multiple pages, can streamline the scraping process. By implementing a crawler, you can automate the process of locating and extracting data without manual intervention.
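A crawler can be sketched as a breadth-first traversal over discovered links, restricted to the starting host. The in-memory `SITE` dictionary, the `LinkExtractor` class, and the `crawl` function below are illustrative assumptions. A production crawler would also need the politeness and error handling discussed earlier.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def crawl(start_url, fetch, max_pages=100):
    """Visit pages breadth-first, staying on the starting host."""
    start_host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited_order = []
    while queue and len(visited_order) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        visited_order.append(url)
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.hrefs:
            absolute = urljoin(url, href)  # resolve relative links
            if urlparse(absolute).netloc == start_host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited_order


# Hypothetical site used instead of real network requests.
SITE = {
    "https://example.com/": '<a href="/a">A</a><a href="/b">B</a>'
                            '<a href="https://other.example/x">External</a>',
    "https://example.com/a": '<a href="/b">B</a><a href="/c">C</a>',
    "https://example.com/b": '<a href="/">Home</a>',
    "https://example.com/c": "",
}

visited = crawl("https://example.com/", lambda url: SITE.get(url, ""))
print(visited)
```

The `seen` set prevents revisiting pages that link to each other, and the host check keeps the crawl from wandering onto external sites.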

Another advanced technique involves machine learning algorithms for more intelligent data extraction. Using natural language processing (NLP) techniques to analyze and categorize scraped content can provide deeper insights and transform raw data into actionable intelligence. As the field of web scraping evolves, continuous learning and adaptation to these advanced methods will enable you to stay ahead in the data-driven landscape.

Troubleshooting Common Scraping Errors

Errors are a routine part of web scraping. Issues such as user-agent restrictions, HTTP error codes, or dynamically generated page structures can impede scraping efforts. Detailed logging within your scraping scripts helps you identify the root cause of these issues more quickly and debug them efficiently.

Moreover, common solutions include implementing retry mechanisms for failed requests, adjusting scraping intervals, and employing user-agent rotation techniques. By being proactive in identifying potential pitfalls in your scraping process, you can significantly reduce downtime and enhance the reliability of your data extraction efforts.
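A retry mechanism with growing delays between attempts can be sketched as follows. The `TransientError` exception, the `fetch_with_retries` function, and the simulated flaky fetcher are hypothetical. In a real script the exception would be whatever your HTTP client raises for timeouts or 5xx responses.

```python
import time


class TransientError(Exception):
    """Stand-in for a temporary failure such as a timeout or HTTP 503."""


def fetch_with_retries(fetch, url, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Retry fetch(url) on transient errors, doubling the delay each time."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except TransientError:
            if attempt == max_attempts:
                raise  # give up after the final attempt
            sleep(base_delay * 2 ** (attempt - 1))


# Simulated fetcher that fails twice before succeeding.
calls = {"count": 0}


def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("simulated HTTP 503")
    return f"<html>content of {url}</html>"


# Record the delays instead of actually sleeping, to keep the demo fast.
delays = []
result = fetch_with_retries(flaky_fetch, "https://example.com/", sleep=delays.append)
print(result, delays)
```

Doubling the delay gives a struggling server time to recover, and the cap on attempts keeps a permanently failing URL from stalling the whole job.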

Frequently Asked Questions

What are the most effective web scraping techniques for beginners?

For beginners, effective web scraping techniques include using libraries such as Beautiful Soup and Scrapy, which simplify the process of parsing HTML data. Learning about data extraction methods like XPath and CSS selectors is also beneficial, as they help locate the specific data you need on a webpage.

How do web scraping tools differ in their data extraction methods?

Web scraping tools vary in their data extraction methods, with some offering out-of-the-box solutions while others require coding knowledge. Tools like Octoparse provide a user-friendly interface for non-programmers, while libraries like Scrapy and Beautiful Soup require Python coding skills but allow far more customized scraping logic.

What are the best practices for scraping data from websites?

Always check a website’s terms of service to ensure compliance. Scrape respectfully by adding delays between requests and adhering to robots.txt guidelines. Storing data in structured formats like CSV or JSON also makes it easier to reuse and analyze.

Can I learn how to scrape a website without programming knowledge?

Yes, you can learn how to scrape a website without programming knowledge by using visual web scraping tools like ParseHub and WebHarvy. These tools allow you to point and click to select the data you want, making it accessible even to those without coding skills.

What resources are available for a scraping data tutorial?

There are many online resources for a scraping data tutorial, including comprehensive courses on platforms like Udemy or Coursera. Additionally, there are numerous free tutorials available on YouTube that focus on various web scraping techniques and tools, catering to different skill levels.

How do I choose the right web scraping tools for my project?

When choosing web scraping tools for your project, consider factors such as the complexity of the website, your technical expertise, and your budget. If you’re a beginner, a user-friendly tool like Octoparse may be ideal, while experienced users might prefer more powerful libraries like Scrapy (Python) or Puppeteer (Node.js) for complex tasks.

What ethical considerations should I keep in mind while using web scraping techniques?

When using web scraping techniques, it’s essential to respect the website’s terms of service and avoid overloading servers with too many requests. Ethical scraping also includes proper attribution of data sources and ensuring that sensitive information is handled responsibly.

Are there any legal restrictions on web scraping data from websites?

Yes, there can be legal restrictions on web scraping data from websites. Different jurisdictions have varying laws regarding data privacy and intellectual property. It’s crucial to understand these laws and review the specific terms of service of any website before scraping to avoid potential legal issues.

What common challenges do developers face with web scraping techniques?

Developers often face challenges such as website structure changes, CAPTCHA systems, and IP blocking when implementing web scraping techniques. To address these challenges, developers can use rotating proxies, headless browsers, or AI-powered scraping tools that adapt to changes automatically.

How can web scraping enhance data analysis in business?

Web scraping can significantly enhance data analysis in business by providing up-to-date information from competitors, market trends, and customer sentiments. This data helps businesses make informed decisions, optimize marketing strategies, and improve overall performance.

| Key Points |
|---|
| Web scraping involves extracting data from websites using automated scripts, usually through programming languages like Python. |
| Common web scraping techniques include using libraries like Beautiful Soup, Scrapy, and requests in Python. |
| It is essential to respect website terms of service and legal considerations when scraping data. |
| Data scraped can be used for various purposes, including research, market analysis, and competitive intelligence. |
| Tools and frameworks can automate the scraping process and handle large datasets efficiently. |

Summary

Web scraping techniques play a crucial role in data extraction from websites. These techniques involve using specialized tools and libraries to obtain structured data efficiently and legally. While methods vary in complexity, they often center on understanding the target website’s structure and employing suitable programming capabilities to retrieve the required data without infringing on any copyright or usage guidelines. By mastering web scraping techniques, individuals and businesses can harness valuable insights that inform strategies and decisions.