Web Scraping Techniques: A Guide to Extracting Data

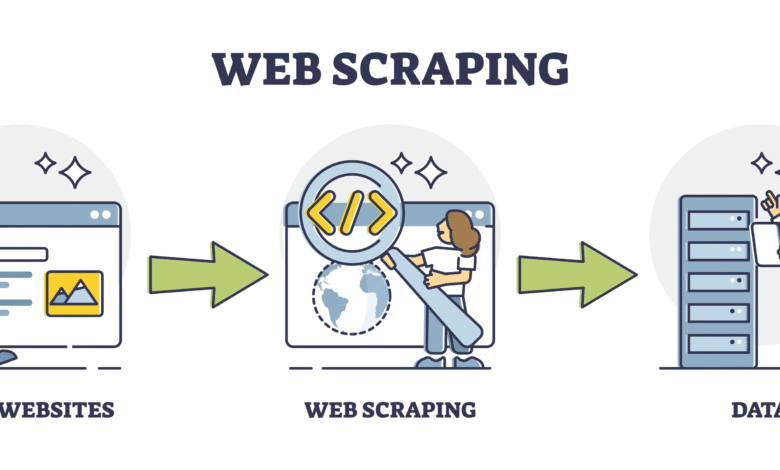

Web scraping techniques let businesses and developers automate data collection from websites instead of navigating each page by hand. Whether you want to scrape websites for market analysis or need data extraction methods for research, the same core skills apply: choosing suitable web scraping tools, understanding how pages are structured, and extracting only the data you need. It is equally important to follow ethical scraping guidelines so that your work stays compliant and respects website owners and their content.

Extracting information from the web, often called data harvesting or web data extraction, has become a common practice in many industries. It relies on automated scripts or specialized software to pull relevant content from online platforms, serving needs that range from marketing to academic research. These strategies must stay within established ethical boundaries to respect data sources, so that data collection is responsible as well as efficient.

Understanding Web Scraping Techniques

Web scraping techniques are vital for extracting data from websites efficiently. To scrape content, you need to understand methods such as HTML parsing, DOM traversal, and the use of APIs. These techniques let you navigate the document structure of a web page and collect the information you need for analysis or reporting. Python libraries such as Beautiful Soup and Scrapy make it easier to extract structured data from web pages on a recurring basis.
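As a rough illustration of HTML parsing, the sketch below walks a small document and collects every link with its text. It uses Python's built-in `html.parser` module as a stand-in for a library like Beautiful Soup, so it runs without extra installs. The `LinkExtractor` class, the `extract_links` helper, and the sample markup are all made up for this example.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (href, text) pairs for every <a href=...> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the <a> currently open, if any
        self._text = []    # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside an open link.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


# Hypothetical page fragment used only for this example.
SAMPLE_HTML = """
<ul>
  <li><a href="/products/1">Blue Widget</a></li>
  <li><a href="/products/2">Red <b>Widget</b></a></li>
</ul>
"""


def extract_links(html):
    """Parse an HTML string and return the links it contains."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


print(extract_links(SAMPLE_HTML))
```

The same idea carries over to Beautiful Soup or Scrapy selectors, which offer a more convenient way to express "find these elements and read these attributes".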

One of the core techniques in web scraping is understanding the layout of the target website, including which content is present in the initial HTML and which is loaded later by JavaScript. With a tool like Selenium, you can drive a real browser to interact with dynamic websites, so that content missing from a static request can still be captured. Combining these scraping techniques with robust error handling, such as retrying failed requests, keeps your data extraction reliable as the volume of pages grows.
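One common form of error handling is retrying a failed request with an increasing delay. The sketch below is a minimal version of that idea, not a complete solution. The `fetch_with_retries` name and its parameters are hypothetical, and the actual download function is passed in as `fetch` so the example does not depend on any particular HTTP library.

```python
import time


def fetch_with_retries(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url); on failure, wait and retry with exponential backoff.

    The delay doubles after each failed attempt: base_delay, 2x, 4x, ...
    The last error is re-raised once all attempts are used up.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return fetch(url)
        except Exception as error:  # real code should catch narrower network errors
            last_error = error
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)
    raise last_error
```

Passing `sleep` as a parameter is a design choice for testability: a test can record the delays instead of actually waiting.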

Essential Web Scraping Tools

Selecting the right web scraping tools is essential for any scraping project. Various tools are available that simplify the process of data extraction from websites. Some popular ones include Octoparse, ParseHub, and WebHarvy, which offer user-friendly interfaces for non-coders to extract web data without delving into complex programming. These tools often come with built-in features that allow scheduling of scraping tasks, automating repetitive data downloads, and exporting the scraped data into various formats like CSV, JSON, or databases.
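For readers who write their own scrapers, exporting to CSV or JSON takes only a few lines with Python's standard library. The sketch below assumes the scraped data is already a list of dictionaries. The `to_csv` and `to_json` helpers and the sample records are invented for illustration.

```python
import csv
import io
import json


def to_csv(records, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialize a list of dicts to pretty-printed JSON text."""
    return json.dumps(records, indent=2, ensure_ascii=False)


# Hypothetical scraped records.
records = [
    {"name": "Blue Widget", "price": 19.99},
    {"name": "Red Widget", "price": 24.5},
]

print(to_csv(records, fieldnames=["name", "price"]))
print(to_json(records))
```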

Moreover, programming libraries like Beautiful Soup and Scrapy are indispensable for developers looking to build custom web scraping solutions. These libraries provide functionalities to parse HTML and XML documents, making it easy to navigate through elements and extract necessary data. Advanced users can also utilize regular expressions to fine-tune their data extraction processes, enhancing the efficiency and accuracy of the scrape.
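As an example of fine-tuning with regular expressions, the sketch below pulls dollar amounts out of text that has already been extracted from a page. The pattern and the `extract_prices` helper are illustrative assumptions. They handle US-style prices such as `$1,299.00` and would need adjusting for other currencies or number formats.

```python
import re

# A dollar sign, optional space, digits with optional thousands separators,
# and an optional two-digit decimal part.
PRICE_PATTERN = re.compile(r"\$\s?(\d+(?:,\d{3})*(?:\.\d{2})?)")


def extract_prices(text):
    """Return every dollar amount found in text as a float."""
    return [float(match.replace(",", "")) for match in PRICE_PATTERN.findall(text)]


print(extract_prices("Now $1,299.00 (was $1,499.99), shipping $5"))
```

Regular expressions work best on short text fragments like this one. For locating elements inside a full HTML document, a parser is usually the more robust choice.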

The Importance of Data Extraction Methods

Data extraction methods are crucial for driving insights from raw data on the web. Opting for the right method depends on the complexity of the data set and the specifics of your scraping needs. There are various methods available, ranging from simple data scraping techniques to more complex ones that require programming skills. For instance, if you’re targeting structured data from web APIs, leveraging these endpoints can lead to a smoother extraction process compared to scraping unstructured HTML.
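To show why API endpoints tend to be smoother to work with, the sketch below parses a JSON payload of the kind a product API might return. The response shape (`items`, `id`, `name`, `price`) and the `parse_products` helper are hypothetical. A real API will define its own fields.

```python
import json

# Hypothetical API response body; real endpoints define their own schema.
SAMPLE_RESPONSE = """
{
  "items": [
    {"id": 1, "name": "Blue Widget", "price": "19.99"},
    {"id": 2, "name": "Red Widget", "price": null}
  ]
}
"""


def parse_products(payload):
    """Convert a JSON payload into a list of product dicts with numeric prices."""
    data = json.loads(payload)
    products = []
    for item in data.get("items", []):
        price = item.get("price")
        products.append({
            "id": item["id"],
            "name": item["name"],
            # Missing prices stay None instead of being guessed.
            "price": float(price) if price is not None else None,
        })
    return products


print(parse_products(SAMPLE_RESPONSE))
```

Because the fields arrive already labeled, there is no need to locate them inside markup, which is the step that usually breaks when a page layout changes.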

In contrast, when dealing with website data that is more dispersed and less structured, utilizing advanced tools or programming techniques becomes necessary. Understanding the relationship between different data points and how they are displayed on a webpage can help you craft your extraction logic effectively. The right data extraction method will not only enhance your data analysis capabilities but also ensure you are gathering reliable data crucial for making informed decisions.

Scraping Ethical Guidelines to Follow

When engaging in web scraping, it is imperative to follow ethical scraping guidelines. These guidelines are designed to respect the rights of website owners and to keep you compliant with legal frameworks. Always check a website’s Terms of Service before starting a scrape. Many websites state whether scraping is prohibited, and violating these terms can lead to IP bans or legal repercussions.

Another important ethical guideline is to be mindful of the load your scraping activities impose on a website’s server. Implement measures such as rate limiting to avoid overwhelming the server with requests. Polite scraping also means respecting the website’s ‘robots.txt’ file, which tells automated clients which paths the owner does not want crawled. Following it helps you stay within the areas the owner has opened to crawlers and maintain a good relationship with the sources you are scraping.
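Both ideas can be sketched with Python's standard library. The example below reads a robots.txt file with `urllib.robotparser` and enforces a minimum gap between requests. The robots.txt content, the `my-scraper` user agent, and the `RateLimiter` class are assumptions made for illustration. In practice you would load the real file from the target site.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download the site's file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_rules(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


class RateLimiter:
    """Block until at least min_interval seconds have passed since the last call."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        if self._last is not None:
            remaining = self.min_interval - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
        self._last = self._clock()


rules = build_rules(ROBOTS_TXT)
print(rules.can_fetch("my-scraper", "https://example.com/products"))      # allowed path
print(rules.can_fetch("my-scraper", "https://example.com/private/data"))  # disallowed path

# Use the site's requested crawl delay if it declares one, else a default.
limiter = RateLimiter(rules.crawl_delay("my-scraper") or 1)
```

Calling `limiter.wait()` before each request keeps the scraper at or below the chosen request rate.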

Getting Started with How to Scrape Websites

If you are interested in how to scrape websites, the first step is to learn the basics of web technologies, including HTML, CSS, and JSON. Familiarity with these technologies will help you understand how web pages are structured and where to find the data you need. For beginners, a graphical web scraping tool can provide a solid foundation, while more advanced users may prefer to code their own scrapers in a language such as Python or JavaScript.

Once you have a clear grasp of how websites function, select a specific target site and define the data you wish to extract. Verify that you have authorization to scrape this data, and then proceed to build your scraper. Remember to test your script frequently to refine how it interacts with the web page, ensuring you capture all required data accurately while avoiding errors.

Analyzing Scraped Data Effectively

Once the data is scraped, the next critical step is analyzing and processing it effectively. This analysis involves cleaning the data, handling duplicates, and ensuring that it can be used for further insights. Employ tools like Pandas in Python for data manipulation, allowing you to filter, aggregate, and visualize your data more comprehensively.
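The cleaning step can be illustrated with plain Python before reaching for pandas. The sketch below normalizes whitespace, drops records without a name, and removes duplicates that differ only in letter case. The `clean_records` helper and the record fields are hypothetical. With pandas, `DataFrame.drop_duplicates` covers similar ground.

```python
def clean_records(records):
    """Normalize names, drop empty rows, and remove case-insensitive duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        # Collapse runs of whitespace and trim the ends.
        name = " ".join((record.get("name") or "").split())
        if not name:
            continue  # skip rows with no usable name
        key = name.lower()
        if key in seen:
            continue  # keep only the first occurrence
        seen.add(key)
        cleaned.append({"name": name, "price": record.get("price")})
    return cleaned


# Hypothetical raw scrape output with typical problems.
raw = [
    {"name": "  Blue   Widget ", "price": 19.99},
    {"name": "blue widget", "price": 19.99},
    {"name": "", "price": 5.0},
    {"name": "Red Widget", "price": None},
]

print(clean_records(raw))
```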

Data analysis can reveal trends, patterns, and valuable insights from the datasets you have gathered through web scraping. It’s essential to utilize statistical tools and methods to derive meaningful information that can aid in decision-making processes, business strategies, or research projects. A robust analytical approach ensures that the scraped data provides the intended value and supports your overall objectives.

Leveraging Web Scraping for Business Intelligence

Businesses are increasingly leveraging web scraping for powerful insights into market trends, competitor analysis, and customer sentiment. By systematically extracting data from competitor websites and social media platforms, companies can discern patterns and adjust their strategies accordingly. Employing web scraping tools tailored for business intelligence can streamline this process, allowing for easy comparison and aggregation of vast amounts of data.

Moreover, utilizing advanced analytics and visualization tools can help businesses make sense of the collected data, turning raw information into actionable insights. This enables companies to track their competitors more closely, understand customer preferences, and identify emerging market opportunities effectively. Web scraping for business intelligence is not just a trend but a strategic advantage in today’s data-driven world.

Best Practices for Web Scraping Projects

Following best practices when planning and running a web scraping project can significantly improve both efficiency and results. First, outline clear objectives: which data needs to be scraped and in what format it should be delivered. This helps narrow down the tools and techniques the project requires.

Additionally, writing clean and modular code can enhance the maintainability of your scraper. Employing version control along with comprehensive documentation will also facilitate collaboration and future updates. Frequent testing and validation of the scraping process will help catch any potential issues and ensure the data remains reliable and up-to-date.

Future Trends in Web Scraping

The landscape of web scraping is continually evolving, with emerging technologies set to shape its future. Artificial Intelligence (AI) and Machine Learning (ML) are playing increasingly significant roles in web scraping, allowing for more sophisticated data recognition and extraction methods. These technologies can adapt to changes on target websites, decreasing the need for constant updates to scrapers.

Moreover, as data privacy regulations become more stringent globally, ethical web scraping practices will continue to gain importance. Businesses will need to pivot towards compliant data scraping solutions that balance effective data gathering with adherence to legal standards, ensuring that they protect user privacy while still extracting valuable data.

Frequently Asked Questions

What are the most effective web scraping techniques for beginners?

For beginners, effective web scraping techniques include using Python libraries like Beautiful Soup and Scrapy, which simplify the process of data extraction from websites. Start by understanding HTML structure, then practice by extracting relevant data from static HTML pages.

How do I choose the right web scraping tools for my project?

Choosing the right web scraping tools depends on your specific project needs. Look for tools that offer features such as user-friendly interfaces, browser automation, API integration, and support for data extraction methods like XPath or regular expressions. Popular options include Octoparse, ParseHub, and Selenium.

What are the ethical guidelines for web scraping?

Adhering to ethical guidelines for web scraping is crucial. Always check a website’s robots.txt file to see what is allowed for scraping, respect the terms of service, and avoid overwhelming servers with excessive requests. It’s also important to use data responsibly and ensure compliance with laws like GDPR.

Can I scrape dynamic websites using web scraping techniques?

Yes, you can scrape dynamic websites that use JavaScript to load data. Browser automation tools such as Selenium or Puppeteer can drive a headless browser, which lets you capture data rendered on the client side. These methods make it possible to extract data from single-page applications (SPAs).

What challenges might I face when scraping websites, and how can I overcome them?

Challenges in web scraping include CAPTCHAs, IP blocking, and data structure changes. To overcome these, you can implement rotating proxies, use CAPTCHA-solving services, and regularly update your scraping scripts to adapt to structural changes on the target website.

What data extraction methods are best for large-scale web scraping projects?

For large-scale web scraping projects, robust data extraction methods such as distributed scraping with tools like Scrapy Cluster or deploying cloud-based services can be effective. Utilizing these methods allows you to manage multiple scraping tasks simultaneously and handle large volumes of data efficiently.
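The core idea of running multiple scraping tasks at once can be shown on a single machine with a thread pool. The sketch below is a scaled-down stand-in for a distributed setup and does not use Scrapy Cluster. The `scrape_many` helper is hypothetical, and the download function is passed in as `fetch` so that any HTTP client can be plugged in.

```python
from concurrent.futures import ThreadPoolExecutor


def scrape_many(fetch, urls, max_workers=4):
    """Run fetch(url) for each URL in parallel and collect results per URL.

    Each result is ("ok", value) or ("error", message), so one failing
    page does not abort the whole batch.
    """
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {url: pool.submit(fetch, url) for url in urls}
        for url, future in futures.items():
            try:
                results[url] = ("ok", future.result())
            except Exception as error:
                results[url] = ("error", str(error))
    return results
```

Keeping `max_workers` small also limits how many requests hit the target site at once, which matters for the server-load concerns discussed earlier.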

How can I learn more about web scraping techniques and best practices?

You can learn more about web scraping techniques and best practices through online courses, tutorials, and forums dedicated to data science and web development. Websites like Coursera, Udemy, and community platforms like Stack Overflow offer valuable resources for both beginners and advanced users.

| Key Point | Description |
|---|---|
| Access Limitations | You cannot access certain websites like nytimes.com to scrape content. |
| Guidance Availability | General advice on web scraping techniques can be provided. |
| User Engagement | Users can request specific guidance if needed. |

Summary

Web scraping techniques are essential for extracting data from websites, even though some sites restrict or prohibit direct scraping. Where scraping is permitted, a range of methods and best practices can be used to gather valuable data. From using libraries like Beautiful Soup and Scrapy to understanding the legal implications, mastering these techniques allows users to collect and analyze information effectively for diverse applications.