Web Scraping Techniques: A Comprehensive Guide

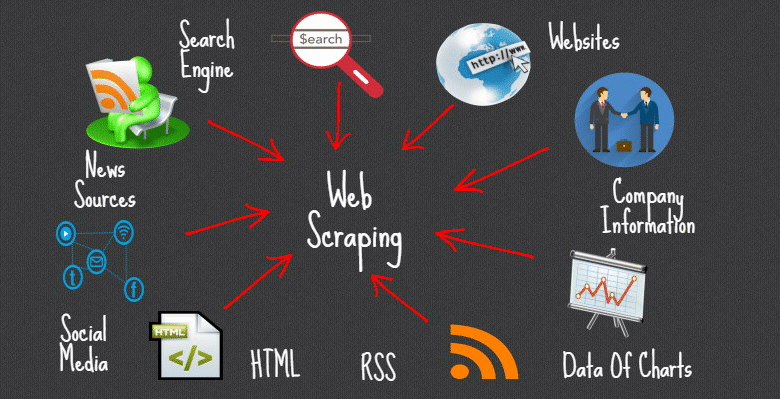

Web scraping has become a standard way to extract data from websites. Whether you are gathering market insights, analyzing competitor strategies, or populating databases, you need to know how to scrape websites effectively and within legal limits. This guide covers data extraction methods, web scraping best practices, and common web scraping tools that streamline collection, along with web crawling tips that improve both the accuracy of your data and the efficiency of gathering it.

Automated data retrieval uses a range of methods to capture relevant information from online sources. This practice, sometimes called web harvesting or web data extraction, turns unstructured web pages into structured data. The term data mining is often used loosely for it as well, although strictly it refers to analyzing data once it has been collected. Effective strategies for online data collection can change how a business operates and makes decisions. Choosing suitable scraping software and running a careful collection process lets you make fuller use of the information available on the web, and knowing how to automate that process gives you an advantage in your industry.

Understanding Web Scraping Techniques

Web scraping is a powerful technique used to gather data from websites efficiently. Whether you are looking to collect prices from e-commerce sites, scrape news articles for sentiment analysis, or gather statistics for research, understanding the basics of web scraping is essential. Using scripting languages such as Python with libraries like Beautiful Soup and Scrapy can streamline the process of extracting data from HTML structures.
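As a minimal sketch of extracting data from an HTML structure: the text names Beautiful Soup and Scrapy, but to stay dependency-free this example swaps in Python's built-in `html.parser`. The sample markup, the `price` class name, and the `PriceParser` and `extract_prices` names are assumptions made for illustration.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collect the text of every <span> whose class list includes 'price'."""

    def __init__(self):
        super().__init__()
        self._in_price = False  # True while inside a matching <span>
        self.prices = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "span" and "price" in classes:
            self._in_price = True

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


# Hypothetical product listing, standing in for a downloaded page.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Widget</span> <span class="price">$19.99</span></li>
  <li><span class="name">Gadget</span> <span class="price sale">$5.00</span></li>
</ul>
"""


def extract_prices(html):
    parser = PriceParser()
    parser.feed(html)
    return parser.prices


print(extract_prices(SAMPLE_HTML))  # ['$19.99', '$5.00']
```

With Beautiful Soup installed, the same extraction would typically be a one-line CSS selector query; the event-driven parser above only shows what such a library does underneath.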

Web scraping also means dealing with the access restrictions and anti-bot measures that websites put in place. Basic web crawling tips, such as sending a User-Agent header so that your requests look like those of a regular browser, are therefore important to know. Adhere to each website's policies and its robots.txt file to reduce the risk of legal complications while scraping.
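The two points above, checking robots.txt and setting a User-Agent header, can be sketched with the standard library alone. The robots.txt content, the `example.com` URLs, and the `USER_AGENT` string are made up for illustration; the rules are parsed from a string so the example runs without network access.

```python
from urllib import request, robotparser

# Hypothetical identifier for this scraper.
USER_AGENT = "example-scraper/0.1"

# Hypothetical robots.txt, standing in for the file served by the site.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_rules(robots_text):
    """Parse robots.txt content into a rule set that can be queried."""
    rules = robotparser.RobotFileParser()
    rules.parse(robots_text.splitlines())
    return rules


def build_request(url):
    """Create a request object that carries the User-Agent header (not sent here)."""
    return request.Request(url, headers={"User-Agent": USER_AGENT})


rules = build_rules(SAMPLE_ROBOTS)
for url in ("https://example.com/products", "https://example.com/private/data"):
    verdict = "allowed" if rules.can_fetch(USER_AGENT, url) else "disallowed"
    print(url, verdict)
```

Against a live site, `RobotFileParser.set_url()` followed by `read()` would download the real file instead of parsing a string.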

Key Data Extraction Methods

Data extraction methods vary widely based on the purpose of your scraping activity. While some may opt for simple copy-pasting, more sophisticated methods involve automation through scripts that can parse HTML or JSON responses from web servers. Selecting the right data extraction methods can significantly affect the quality and quantity of data you collect.

For instance, calling an API is the more effective method when a site exposes structured data, whereas HTML scraping is the fallback for sites without an API. Both rely on parsing libraries: a JSON parser for API responses, and an HTML parser to break a page down into the elements you need. The type of data you are targeting determines your extraction strategy.
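To illustrate the API side of that comparison, here is a small sketch that turns a JSON response into clean records. The response body, its `items`, `name`, and `price` fields, and the `parse_items` helper are hypothetical; a real API will have its own schema.

```python
import json

# Hypothetical API response body.
SAMPLE_RESPONSE = """
{
  "items": [
    {"name": "Widget", "price": 19.99},
    {"name": "Gadget", "price": null}
  ]
}
"""


def parse_items(body):
    """Return name/price records, skipping items that have no price."""
    payload = json.loads(body)
    records = []
    for item in payload.get("items", []):
        if item.get("price") is None:
            continue
        records.append({"name": item["name"], "price": float(item["price"])})
    return records


print(parse_items(SAMPLE_RESPONSE))  # [{'name': 'Widget', 'price': 19.99}]
```

Because the response is already structured, no selectors are needed, which is why an API is usually less fragile than HTML scraping when one is available.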

Web Scraping Best Practices to Follow

When engaging in web scraping, it is vital to adhere to web scraping best practices to ensure both legality and efficiency. First and foremost, always check a site’s terms of service to confirm that scraping is allowed, as violating this can lead to legal repercussions. It’s recommended to limit the rate of requests to avoid overloading the server, which can lead to IP bans.
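Rate limiting can be as simple as enforcing a minimum gap between consecutive requests. The sketch below is one possible approach, not a standard recipe; the `Throttle` class and the one-second interval are assumptions, and the clock and sleep functions are injectable only so the behavior can be checked without real waiting.

```python
import time


class Throttle:
    """Block until at least `min_interval` seconds have passed since the last call."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


# Usage: call wait() right before each request.
throttle = Throttle(min_interval=1.0)  # assumed limit of one request per second
for page in range(3):
    throttle.wait()
    print("would fetch page", page)
```

If the site's robots.txt declares a crawl delay, that value is a sensible choice for `min_interval`.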

Implementing error handling in your scraping scripts is another best practice. This involves managing responses for when pages aren’t accessible, or when the desired data isn’t found. By ensuring that your scraper can fail gracefully, you will save time and effort in debugging, thus enhancing the overall effectiveness of your scraping endeavors.
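A sketch of what failing gracefully might look like, using only the standard library. The retry count, the decision to treat 404 and 410 as permanent, and the `fetch_safely` and `extract_title` helpers are assumptions for illustration.

```python
import re
import urllib.error
import urllib.request


def fetch(url, timeout=10):
    """Download a page and return its body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def fetch_safely(url, fetcher=fetch, retries=2):
    """Return (body, error); exactly one of the two is None."""
    last_error = None
    for _ in range(retries + 1):
        try:
            return fetcher(url), None
        except urllib.error.HTTPError as exc:
            last_error = f"HTTP {exc.code}"
            if exc.code in (404, 410):  # page is gone, retrying will not help
                break
        except (urllib.error.URLError, TimeoutError) as exc:
            last_error = str(exc)  # possibly temporary, so try again
    return None, last_error


def extract_title(body):
    """Return the page title, or None when the expected data is missing."""
    match = re.search(r"<title>(.*?)</title>", body, re.IGNORECASE | re.DOTALL)
    return match.group(1).strip() if match else None
```

The caller can then log the error and move on to the next URL rather than crashing halfway through a long run.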

Utilizing Effective Web Scraping Tools

There are numerous web scraping tools available that can simplify the data extraction process. These tools come with various features, such as point-and-click interfaces, scheduling options, and options for data storage formats like CSV or JSON. Tools like Octoparse and ParseHub are designed for users who may not want to write code but still need robust web scraping capabilities.

Moreover, for developers, libraries such as Scrapy provide more flexibility and power for building custom scrapers. These tools not only ease the process of writing scraping code but also improve accuracy in data extraction. Choosing the right tool based on your technical skill and scraping needs can significantly reduce the time and effort involved in your project.

Optimizing Data with Latent Semantic Indexing (LSI) Techniques

Latent Semantic Indexing (LSI) is a technique for analyzing the relationships between a set of documents and the terms they contain, typically by decomposing a term-document matrix so that documents using related vocabulary end up close together. In the realm of web scraping, LSI can help you judge the relevance and context of the text you are collecting. The same idea is often invoked in SEO advice as a way to choose related keywords for web content.

Applying LSI to scraped content lets you align your data extraction objectives with the intent behind search queries. By analyzing how related terms such as ‘web scraping best practices’ and ‘data extraction methods’ occur together, you can filter or group a scraped dataset by topic so that it matches what your audience is searching for.

Ensuring Ethical Web Scraping Practices

Ethical considerations in web scraping cannot be overlooked. It’s crucial to respect the craftsmanship behind the website and consider the implications of your scraping activities. Many site owners view scraping as a theft of their intellectual property, especially if it leads to direct competition or loss of traffic. Engaging in ethical scraping practices fosters goodwill and can prevent future legal issues.

To promote ethical scraping, always reach out to the website owner before scraping their data, if possible. Offering to generate traffic back to their site or providing data insights can help create a more collaborative relationship. Moreover, monitoring your scraping activity and being transparent about your intentions can establish trust and demonstrate professionalism.

Challenges in Web Crawling and Scraping

Web crawling and scraping come with their own challenges. One significant issue is that page structures change constantly, which can break scraping scripts and lead to incomplete data extraction. In addition, many sites deploy anti-scraping measures such as CAPTCHAs, and others load their content dynamically with JavaScript, so a plain HTTP request does not return the data and more advanced techniques, such as rendering the page in an automated browser, are required.
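One way to notice a changed page structure early is to validate what the scraper returns instead of trusting it. The check below is a heuristic sketch only: the required field names, the 50% threshold, and both function names are assumptions, not an established method.

```python
# Hypothetical fields that every scraped record is expected to contain.
REQUIRED_FIELDS = ("name", "price")


def missing_fields(record, required=REQUIRED_FIELDS):
    """List the required fields that are absent or empty in one record."""
    return [field for field in required if record.get(field) in (None, "")]


def layout_probably_changed(records, required=REQUIRED_FIELDS, threshold=0.5):
    """Flag a run when no records came back or too many are incomplete."""
    if not records:
        return True
    broken = sum(1 for record in records if missing_fields(record, required))
    return broken / len(records) > threshold


scraped = [
    {"name": "Widget", "price": "$19.99"},
    {"name": "", "price": None},
    {"name": "Gadget", "price": None},
]
print(layout_probably_changed(scraped))  # True: 2 of 3 records are incomplete
```

Running a check like this after every crawl turns a silent failure into an alert that the selectors need attention.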

Another challenge is managing large volumes of data. Scraping multiple pages can generate massive datasets that require effective storage solutions and database management. Establishing a clear strategy for filtering and simplifying data can alleviate processing woes, ultimately improving the efficiency of your data extraction efforts.
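For storage, a small embedded database is often enough to start with. The sketch below uses Python's built-in `sqlite3` module; the `products` table, its columns, and the choice to deduplicate on the page URL are assumptions made for the example.

```python
import sqlite3


def save_records(conn, records):
    """Filter out incomplete records, upsert the rest, and return the row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products ("
        "url TEXT PRIMARY KEY, name TEXT NOT NULL, price REAL)"
    )
    rows = [
        (r["url"], r["name"].strip(), r.get("price"))
        for r in records
        if r.get("url") and r.get("name")  # drop records missing key fields
    ]
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO products (url, name, price) VALUES (?, ?, ?)",
            rows,
        )
    return conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]


conn = sqlite3.connect(":memory:")  # use a file path to persist between runs
total = save_records(conn, [
    {"url": "https://example.com/p/1", "name": "Widget ", "price": 19.99},
    {"url": "https://example.com/p/1", "name": "Widget", "price": 18.49},
    {"url": "https://example.com/p/2", "name": "", "price": 5.0},
])
print(total)  # 1: the duplicate URL is replaced and the unnamed record is dropped
```

Using the URL as the primary key means a page scraped twice updates its existing row instead of adding a duplicate.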

The Role of Machine Learning in Web Scraping

Machine learning is increasingly playing a role in enhancing web scraping capabilities. By employing algorithms that learn from data, you can better identify patterns in web content. This adaptation allows for more accurate data collection, improving the relevance of the information scraped from websites.

Scrapers that use machine learning models can, in principle, recognize the same piece of data after a page layout changes and adjust their extraction accordingly, instead of relying on fixed selectors. Combined with real-time data processing, this kind of adaptation can make web scraping projects more efficient and more resilient to changes in site structure.

Future Trends in Web Scraping Technology

As technology evolves, so does the landscape of web scraping. Advances in AI and natural language processing are set to revolutionize how data is extracted from the web. Future trends suggest an integration of smart assistants and automated tools that handle complex scraping tasks without human intervention, leading to significantly increased efficiency.

Moreover, the growth of cloud computing services will enable more robust data storage solutions for scraped data. This shift could lead to higher accessibility and processing power, simplifying the analysis of massive datasets collected from various sources over the internet.

Frequently Asked Questions

What are the best web scraping techniques for beginners?

Some of the best web scraping techniques for beginners include using user-friendly web scraping tools like Beautiful Soup and Scrapy in Python, which simplify data extraction. Start with learning HTML and CSS selectors, as these will help you navigate web pages effectively. Familiarizing yourself with basic REST APIs can also enhance data retrieval methods.

How can I scrape websites effectively without getting blocked?

To scrape websites effectively without getting blocked, follow web scraping best practices such as rotating IPs, adding a delay between requests, and setting proper User-Agent headers. Additionally, adhering to the website’s robots.txt file and limiting the frequency of requests can help maintain good standing with site administrators.
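Two of these practices, delays and IP rotation, might be sketched as follows. The proxy addresses are placeholders that do not exist, and `jittered_delay` and `proxy_openers` are hypothetical helper names; no request is sent by this code.

```python
import itertools
import random
import urllib.request

# Placeholder proxy addresses; replace with proxies you are allowed to use.
PROXIES = ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]


def jittered_delay(base, spread, rng=random):
    """Return a delay of `base` seconds plus a random extra of up to `spread`."""
    return base + rng.uniform(0, spread)


def proxy_openers(proxies):
    """Yield (proxy, opener) pairs forever, cycling through the proxy list."""
    for proxy in itertools.cycle(proxies):
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        yield proxy, urllib.request.build_opener(handler)


openers = proxy_openers(PROXIES)
for _ in range(3):
    proxy, opener = next(openers)
    pause = jittered_delay(base=2.0, spread=1.0)
    print(f"would wait {pause:.2f}s, then call opener.open(url) via {proxy}")
```

Randomizing the delay avoids the perfectly regular request pattern that a fixed interval produces.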

What are some common data extraction methods used in web scraping?

Common data extraction methods in web scraping include plain HTTP requests for static pages and browser automation with tools like Selenium or Playwright for interactive sites that render their content with JavaScript. Parsing HTML with Beautiful Soup or using API endpoints when available are also effective data extraction methods, and APIs have the advantage of returning structured data.

What web scraping tools are recommended for large-scale data extraction?

For large-scale data extraction, tools such as Scrapy, Octoparse, and ParseHub are highly recommended. Scrapy is particularly versatile for complex projects with its crawling capabilities, while Octoparse offers a user-friendly interface for those wanting to avoid extensive coding. Ensure you choose the right tool based on your data needs and technical expertise.

What are essential web crawling tips for efficient data collection?

Essential web crawling tips include organizing your crawl by defining clear objectives, using sitemap.xml files for site structure, and implementing polite scraping techniques like respecting robots.txt. Additionally, logging crawled URLs can help prevent duplicate data and streamline your data collection process.
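The sitemap and URL-logging tips can be combined in a few lines. This sketch parses a sitemap from a string so it runs offline; the sample URLs and the `sitemap_urls` and `plan_crawl` helpers are assumptions, while the XML namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap.xml content with one repeated entry.
SAMPLE_SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/products</loc></url>
  <url><loc>https://example.com/products</loc></url>
</urlset>
"""


def sitemap_urls(xml_text):
    """Return every <loc> value listed in a sitemap document."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def plan_crawl(urls, seen):
    """Return URLs not crawled yet, in order, and record them in `seen`."""
    todo = []
    for url in urls:
        if url not in seen:
            seen.add(url)
            todo.append(url)
    return todo


seen = set()  # log of crawled URLs; persist it to resume a crawl later
print(plan_crawl(sitemap_urls(SAMPLE_SITEMAP), seen))
# ['https://example.com/', 'https://example.com/products']
```

Keeping `seen` across runs is what prevents the same page from being fetched and stored twice.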

| Key Points |
|---|
| Detailed information retrieval requires specific content from a source. |
| Direct access to external websites, like nytimes.com, is not possible for information gathering. |
| Assistance can be offered on how to retrieve content using web scraping techniques. |

Summary

Web scraping techniques are essential for extracting information from online sources. When a specific article cannot be reached by browsing directly, tools and scripts that automate data retrieval offer an effective way to gather and analyze content from websites. These techniques vary in complexity, but all of them serve the same goal: turning online content into data you can analyze.