Web Scraping Techniques: A Complete Guide to Success

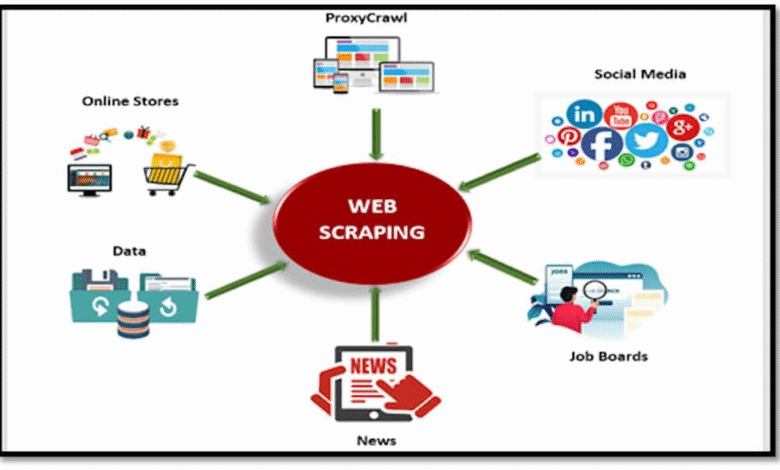

Web scraping techniques have become essential for businesses and researchers who need to gather data efficiently from online sources. Web scraping software automates much of the collection process, and robust data extraction tools can significantly improve both accuracy and speed. Newcomers can start with a web crawler tutorial that outlines the best web scraping practices for compliance and efficiency. As demand for competitive intelligence grows, mastering these techniques is a valuable asset in today’s data-driven environment.

Data harvesting, often called content scraping, is an increasingly valuable skill in the digital age. The ability to navigate online platforms and extract data from them benefits users in many industries, from marketing to research. Learning automated data-gathering methods and how web crawlers work gives access to data that would be impractical to collect by hand. Following ethical guidelines and established best practices keeps that collection responsible, and the field keeps evolving as new scraping methods and tools appear.

Introduction to Web Scraping Techniques

Web scraping techniques have become integral to data gathering in today’s information-centric world. By leveraging various methods of data extraction, businesses and individuals can collect valuable information from websites for analysis, research, or competitive intelligence. Understanding how to scrape websites efficiently involves mastering tools and practices that optimize the extraction process.

The landscape of web scraping has evolved with the advent of advanced web scraping software that simplifies the task. Users can access numerous data extraction tools, ranging from simple browser plugins to sophisticated applications that can navigate complex websites seamlessly. By embracing the right techniques, users can automate data collection, minimize manual effort, and maximize accuracy.

Understanding Web Crawlers: The Backbone of Data Extraction

Web crawlers, also known as spiders or bots, play a pivotal role in the web scraping ecosystem. They systematically browse the internet, indexing content and gathering information from various sources. Learning about these crawlers through a comprehensive web crawler tutorial can enhance your understanding of how to scrape websites effectively. Crawlers follow links on pages to discover new content, making them fundamental to building large datasets.
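The link-following loop described above can be sketched with the standard library alone. This is a minimal breadth-first crawler under a few stated assumptions: `fetch` is any callable that returns a page's HTML (or `None` on failure), the crawl stays on the starting host, and the three-page `site` dictionary is a hypothetical stand-in for real HTTP responses so the example runs offline.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl that stays on the start URL's host.

    `fetch` is any callable mapping a URL to HTML text, or None on failure.
    """
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # skip pages that could not be fetched
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        parser.close()
        for href in parser.links:
            # Resolve relative links and drop #fragments so duplicates collapse.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


# Hypothetical three-page site held in memory instead of fetched over HTTP.
site = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b#top">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="https://other.org/">ext</a>',
    "https://example.com/b": "<p>No links here.</p>",
}
print(crawl("https://example.com/", site.get))
```

The `seen` set is what keeps the crawler from revisiting pages, and `max_pages` bounds the crawl so a large site cannot run it indefinitely.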

Following the best web scraping practices makes extraction with these crawlers more reliable. It’s essential to respect the target website’s robots.txt file, which tells crawlers which paths they are asked not to access. The file is advisory rather than technically enforced, but honoring it maintains ethical standards and reduces the risk of IP bans or legal issues.
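Python’s standard library ships a robots.txt parser, `urllib.robotparser`. The sketch below parses a hypothetical robots.txt from a string so it runs offline; against a live site you would instead call `set_url()` with the site’s robots.txt URL and then `read()`. The user-agent name `MyScraper/1.0` is also an assumption for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, parsed locally instead of downloaded.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/products/1", "/private/report"]:
    url = "https://example.com" + path
    # can_fetch() applies the Allow/Disallow rules for the given user agent.
    print(url, parser.can_fetch("MyScraper/1.0", url))

# crawl_delay() returns the Crawl-delay value (in seconds), or None if absent.
print("crawl delay:", parser.crawl_delay("MyScraper/1.0"))
```

A crawler would call `can_fetch()` before every request and skip any URL for which it returns `False`.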

Best Web Scraping Practices for Effective Data Collection

When it comes to web scraping, following best practices keeps data collection ethical, efficient, and effective. One critical practice is to minimize server requests, because scraping a website too aggressively can get your scraper throttled or banned. Spacing requests out on a fixed schedule and rotating user-agent strings are common ways to address this concern.
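Pacing requests is the simplest of these measures to implement. Below is a minimal rate limiter, assuming a single-threaded scraper and a fixed minimum interval between requests. The class name `RateLimiter` and the 0.1-second interval are illustrative choices only. In practice, the interval would come from the site’s Crawl-delay or a conservative default.

```python
import time


class RateLimiter:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval):
        self.min_interval = min_interval  # seconds to leave between requests
        self._last = None  # monotonic timestamp of the previous request

    def wait(self):
        """Sleep just long enough that calls are at least `min_interval` apart."""
        if self._last is not None:
            remaining = self.min_interval - (time.monotonic() - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


limiter = RateLimiter(min_interval=0.1)
start = time.monotonic()
for page in range(1, 4):
    limiter.wait()
    # A real scraper would fetch https://example.com/page/<page> here.
    print(f"request {page} at +{time.monotonic() - start:.2f}s")
elapsed = time.monotonic() - start
```

The limiter uses `time.monotonic()` rather than wall-clock time, so system clock adjustments cannot shorten the delay.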

Furthermore, reliable web scraping software helps users manage their scraping tasks. These tools often provide features like retry mechanisms and data validation, which improve the overall quality of the data being gathered. By integrating these best practices, individuals can streamline their data extraction processes and draw meaningful insights from web sources.
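The retry and validation features mentioned above can be approximated in a few lines if you write your own scripts. This is a sketch under stated assumptions. `fetch` is any callable. Transient failures surface as `OSError`, which covers urllib’s `URLError` and socket timeouts, though it also covers `HTTPError` such as a 404, so you may want a narrower `retry_on`. The required field names `title` and `price` are hypothetical.

```python
import random
import time


def fetch_with_retries(fetch, url, attempts=4, base_delay=0.5, retry_on=(OSError,)):
    """Call fetch(url), retrying transient failures with exponential backoff.

    The wait before retry n is base_delay * 2**n plus random jitter.
    """
    for attempt in range(attempts):
        try:
            return fetch(url)
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            time.sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))


def validate_record(record, required=("title", "price")):
    """Return True only if every required field is present and non-blank."""
    for field in required:
        value = record.get(field)
        if value is None or not str(value).strip():
            return False
    return True


# Demo with a stand-in fetch function that fails twice before succeeding.
calls = []

def flaky_fetch(url):
    calls.append(url)
    if len(calls) < 3:
        raise ConnectionError("temporary failure")
    return {"title": "Widget", "price": "9.99"}


record = fetch_with_retries(flaky_fetch, "https://example.com/item/1", base_delay=0.01)
print(len(calls), validate_record(record))
```

The random jitter spreads retries out so that many failed requests do not all hit the server again at the same moment.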

Exploring Data Extraction Tools for Seamless Workflow

Data extraction tools vary widely in functionality and ease of use. Basic tools provide necessary scraping capabilities, while advanced software includes features like cloud storage integration, proxy support, and automation functionalities. Choosing the right tools directly impacts the efficiency and success of web scraping projects.

Investing in powerful data extraction tools can significantly expedite the process of gathering large volumes of information. These tools often come with built-in templates or scripting capabilities that cater to a variety of data sources, further enhancing the scraping experience. Evaluating the suitability of tools based on specific project requirements allows users to make informed decisions.

How to Scrape Websites: Step-by-Step Guide

Knowing how to scrape websites involves understanding both the technical aspects and best practices of web scraping. A typical process begins with identifying the target site and determining the data to be extracted. From there, users must select a strategy, whether it’s using pre-built software or custom-coded scripts.

Once you’ve set your goals, the actual scraping process can begin. It’s crucial to structure your scripts or utilize the software’s functionalities to navigate the website effectively, handle pagination, and extract the desired information accurately. Finally, organizing the extracted data into a manageable format, such as CSV or JSON, ensures that it can be processed or analyzed further.
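The steps above (extracting fields, following pagination, and exporting to CSV or JSON) can be sketched end to end with the standard library. Everything site-specific here is an assumption for illustration. That includes the markup (`<h2 class="name">`, `<span class="price">`, `<a rel="next">`), the `shop.example` URLs, and the in-memory `pages` dictionary standing in for HTTP responses. Real pages usually need a more forgiving parser such as Beautiful Soup.

```python
import csv
import io
import json
from html.parser import HTMLParser
from urllib.parse import urljoin


class ProductParser(HTMLParser):
    """Extract name/price pairs and the "next page" link from a listing page.

    Assumes hypothetical markup: <h2 class="name">, <span class="price"> and
    <a rel="next" href="...">. Class matching is exact, for simplicity.
    """

    def __init__(self):
        super().__init__()
        self.products = []
        self.next_href = None
        self._field = None  # field that incoming text belongs to, if any

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "h2" and attrs.get("class") == "name":
            self.products.append({"name": "", "price": ""})
            self._field = "name"
        elif tag == "span" and attrs.get("class") == "price" and self.products:
            self._field = "price"
        elif tag == "a" and attrs.get("rel") == "next":
            self.next_href = attrs.get("href")

    def handle_endtag(self, tag):
        if tag in ("h2", "span"):
            self._field = None

    def handle_data(self, data):
        if self._field:
            self.products[-1][self._field] += data.strip()


def scrape_all(start_url, fetch, max_pages=100):
    """Follow rel="next" links page by page, collecting every product row."""
    url, rows = start_url, []
    for _ in range(max_pages):  # hard cap guards against pagination loops
        parser = ProductParser()
        parser.feed(fetch(url))
        parser.close()
        rows.extend(parser.products)
        if not parser.next_href:
            break
        url = urljoin(url, parser.next_href)
    return rows


def to_csv(rows):
    """Serialise rows to CSV text (write to a file instead in real use)."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# Two hypothetical listing pages standing in for real HTTP responses.
pages = {
    "https://shop.example/list?page=1":
        '<h2 class="name">Widget</h2><span class="price">$9.99</span>'
        '<a rel="next" href="?page=2">Next</a>',
    "https://shop.example/list?page=2":
        '<h2 class="name">Gadget</h2><span class="price">$19.50</span>',
}
rows = scrape_all("https://shop.example/list?page=1", pages.__getitem__)
print(to_csv(rows))
print(json.dumps(rows, indent=2))
```

Pagination stops when a page has no `rel="next"` link. The `max_pages` cap protects against sites whose "next" links loop back on themselves.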

Navigating Legal Considerations in Web Scraping

Web scraping sits in a legal gray area that scrapers must navigate carefully. Understanding the legal implications of scraping a specific website is essential, particularly regarding copyright and terms of service violations. Different jurisdictions have different laws on data scraping, so familiarizing yourself with the ones that apply to you can prevent legal problems later.

A cautious approach includes seeking permission from websites when possible and, in the United States, understanding how the Computer Fraud and Abuse Act (CFAA) applies to unauthorized access. Taking these factors into account substantially reduces legal risk and contributes to a more responsible scraping environment.

Optimizing Web Scraping for Performance

Performance optimization in web scraping is vital for fast and reliable data extraction. Techniques such as multi-threading can significantly speed up the process by handling multiple requests at once, provided the number of concurrent requests is capped so that the target server is not overloaded. Additionally, efficient data storage solutions help manage the volume and accessibility of the extracted data.
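One way to get multi-threading with a hard cap on concurrency is `concurrent.futures.ThreadPoolExecutor`, whose `max_workers` bounds the number of requests in flight. In the sketch below, `fake_fetch` is a hypothetical stand-in for a network call that sleeps to simulate latency and records the peak number of simultaneous calls. The cap of 3 workers is an arbitrary illustrative value.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def fetch_all(urls, fetch, max_workers=4):
    """Fetch URLs concurrently with at most `max_workers` requests in flight.

    Results are returned in the same order as `urls`.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, urls))


# Instrumented stand-in for a network fetch that records peak concurrency.
lock = threading.Lock()
stats = {"active": 0, "peak": 0}

def fake_fetch(url):
    with lock:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
    time.sleep(0.05)  # simulate network latency
    with lock:
        stats["active"] -= 1
    return f"<html>{url}</html>"


urls = [f"https://example.com/page/{i}" for i in range(12)]
pages = fetch_all(urls, fake_fetch, max_workers=3)
print(len(pages), "pages fetched; peak concurrency:", stats["peak"])
```

Threads suit scraping because most of the time is spent waiting on the network. Combining the pool with per-host rate limiting keeps the speed-up from turning into excessive load on one site.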

Another optimization strategy is caching results from previous scraping sessions to avoid redundant requests. This approach conserves resources and speeds up future scrapes, as long as cached pages are refreshed often enough that the data does not go stale. Together, these performance strategies save time and reduce the load placed on target websites.
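A cache with an expiry time captures both halves of that trade-off: repeated requests are served locally, and old entries are refetched. This is a minimal sketch. The `PageCache` class and its parameters are hypothetical. The default store is an in-memory dict, and passing a dict-like persistent store such as a `shelve` object is one way to keep entries between sessions.

```python
import time


class PageCache:
    """Cache fetched pages by URL and refetch them once they are older than `ttl`.

    `store` can be any dict-like mapping; passing a `shelve` object instead of
    the default in-memory dict would keep entries across scraping sessions.
    """

    def __init__(self, fetch, ttl=3600.0, store=None):
        self.fetch = fetch
        self.ttl = ttl  # seconds before a cached page counts as stale
        self.store = {} if store is None else store  # url -> (saved_at, body)

    def get(self, url):
        now = time.time()  # wall-clock time, so timestamps stay valid across runs
        entry = self.store.get(url)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]  # cache hit: no request sent
        body = self.fetch(url)
        self.store[url] = (now, body)
        return body


requested = []

def counting_fetch(url):
    requested.append(url)
    return f"<html>{url}</html>"


cache = PageCache(counting_fetch, ttl=60)
cache.get("https://example.com/a")
cache.get("https://example.com/a")  # served from the cache
cache.get("https://example.com/b")
print(requested)
```

The cache uses `time.time()` rather than `time.monotonic()` because monotonic timestamps are not comparable across separate processes, which matters once entries outlive a single run.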

Choosing the Right Web Scraping Software

Selecting the right web scraping software is a critical decision that affects the success of your data extraction efforts. With numerous options available, it’s important to assess the specific needs of your project, such as the type of data, the frequency of scraping, and the technical skills of the user. User-friendly software can benefit beginners, while more advanced tools offer customization for experienced users.

Additionally, evaluating software on how well it integrates with other tools or APIs can improve your workflow. Some scraping tools include built-in data analysis and visualization options, letting users derive insights from scraped content without exporting it elsewhere. Making well-informed software choices sets the foundation for successful web scraping projects.

Future Trends in Web Scraping Technologies

As web technologies continue to evolve, so does the field of web scraping. Emerging trends include the integration of AI and machine learning to improve the accuracy and efficiency of data extraction. These technologies can refine data filtering and improve the identification of relevant content, making large datasets easier to work with.

Another trend is an increased focus on ethical scraping practices as awareness of data privacy grows. Regulations like the GDPR affect how data may be scraped, especially when it includes personal data, and are pushing software toward features that support compliance and user privacy. Staying updated on these trends helps businesses remain competitive while meeting evolving standards.
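One small privacy-minded habit is removing personal data you do not need before storing scraped text. The sketch below redacts email addresses with a deliberately simple regular expression. The pattern and the placeholder text are illustrative assumptions, and the pattern will miss some valid address formats. Redaction of this kind supports data minimization but does not by itself make a project compliant with any regulation.

```python
import re

# Deliberately simple email pattern; it will miss some valid addresses and
# is meant only to illustrate stripping personal data before storage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text, placeholder="[email removed]"):
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub(placeholder, text)


review = "Great product! Contact me at jane.doe@example.com for details."
print(redact_emails(review))
```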

Frequently Asked Questions

What are the best web scraping practices to follow?

To ensure effective and ethical web scraping, always check a website’s terms of service, utilize data extraction tools that comply with legal guidelines, respect the site’s robots.txt file, and avoid overwhelming the server by pacing your requests.

How to scrape websites using Python?

You can scrape websites in Python with libraries like Beautiful Soup and Scrapy. Beautiful Soup parses HTML so you can navigate the document tree and extract the data you want, while Scrapy is a full framework that also handles sending requests, following links, and exporting the results.

What is a web crawler tutorial and what should it include?

A web crawler tutorial should cover the fundamentals of web crawling, including tools, techniques for navigating websites, handling pagination, data extraction strategies, and best practices to avoid being blocked by sites.

What are some effective data extraction tools for beginners?

Some effective data extraction tools for beginners include Octoparse, ParseHub, and Import.io. These web scraping software applications offer user-friendly interfaces and provide powerful features for scraping data without extensive coding knowledge.

What is the role of web scraping software in data analysis?

Web scraping software plays a crucial role in data analysis by automating the process of gathering large sets of data from websites, allowing analysts to cleanse, organize, and interpret this information to derive insights efficiently.
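The cleansing step usually comes first, because scraped values arrive as messy strings. This is a minimal sketch assuming hypothetical records with `name` and `price` fields and dollar-formatted prices. It collapses whitespace, parses prices into `Decimal`, and drops rows that are unparseable or duplicated.

```python
from decimal import Decimal, InvalidOperation


def clean_records(raw_rows):
    """Normalise whitespace, parse prices, and drop duplicate or unusable rows."""
    cleaned, seen = [], set()
    for row in raw_rows:
        name = " ".join((row.get("name") or "").split())  # collapse whitespace
        price_text = (row.get("price") or "").replace("$", "").replace(",", "").strip()
        try:
            price = Decimal(price_text)  # Decimal avoids float rounding on money
        except InvalidOperation:
            continue  # skip rows whose price cannot be parsed
        key = (name.lower(), price)
        if not name or key in seen:
            continue  # skip blank names and duplicates
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


raw = [
    {"name": "  Widget\n", "price": "$9.99"},
    {"name": "Widget", "price": "$9.99"},  # duplicate once cleaned
    {"name": "Gadget", "price": "$1,299.00"},
    {"name": "Broken", "price": "N/A"},  # unparseable price
]
print(clean_records(raw))
```

Deduplicating on the cleaned values rather than the raw strings matters here. The first two rows differ only in whitespace and would otherwise both survive.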

| Key Point |
|---|
| Web Scraping Techniques |
| The process of extracting information from websites. |
| Common tools and libraries used for web scraping include Beautiful Soup, Scrapy, and Selenium. |
| Data scraped can include text, images, links, and more, depending on the target website’s structure. |
| Always check the website’s ‘robots.txt’ file to ensure compliance with their scraping policies. |
| Web scraping techniques can be employed for various applications such as data analysis, market research, and lead generation. |

Summary

Web scraping techniques are essential for efficiently extracting valuable data from websites. They use specialized tools and libraries to automate data collection, gathering information such as text, images, and links. Checking a site’s ‘robots.txt’ file and terms of service before scraping is crucial for staying within its policies and reducing legal risk. Applied responsibly, these techniques can greatly strengthen data analysis, market research, and lead generation, making web scraping an invaluable skill in today’s data-driven landscape.