Web Scraping Restrictions: Understanding Legal Boundaries

Anyone who harvests data from online sources needs to understand web scraping restrictions. As more businesses turn to automated information gathering, the legal framework surrounding web scraping becomes essential knowledge. Many websites, especially news sites, set specific conditions on data extraction in their terms of service. Violating those conditions can have serious legal consequences, so developers and data analysts need a working knowledge of the applicable laws and site policies. Knowing the rules that govern summarizing scraped content also helps keep a project compliant.

Extracting data from websites goes by several related names, including data mining and information retrieval. These processes, often discussed alongside web scraping, involve pulling large amounts of data for analysis or content curation. Individual web platforms, however, set their own limits and policies on how their information can be used. Understanding the legal implications, especially when scraping news sources, is therefore essential to ethical data usage and to respecting each site’s terms.

Understanding Web Scraping Legalities

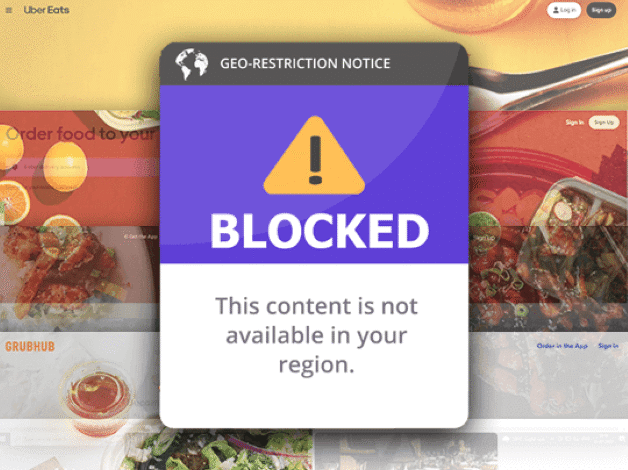

Web scraping has become a powerful tool for gathering information online, but its legal landscape must be navigated carefully. The rules vary from site to site, so observing each site’s terms of service is paramount. News outlets in particular often include clear restrictions on automated data extraction in their terms. Ignoring these restrictions can lead not only to blocked access but also to legal repercussions.

For instance, major news sites like the New York Times address automated access in their terms of service and may prohibit any automated means of data collection. Anyone who wants to use web scraping for legitimate purposes should therefore research each site’s requirements before proceeding.

Scraping News Sites: Challenges and Considerations

When considering web scraping specifically for news sites, it is essential to understand that many of these platforms actively protect their content. While scrapers might be aimed at collecting data for analysis or summarization, they often encounter barriers like CAPTCHAs and dynamic page loading. As a result, if a content scraper attempts to harvest news articles for summarization, it often runs the risk of violating the site’s terms of service.

Moreover, even if the scraping tool works, the acquired content must still be handled with care. Copyright and the site’s own terms govern how the original work can be summarized or reused. Ignoring them invites copyright claims or accusations of plagiarism, complicating what initially seemed like a straightforward data extraction task.

The Impact of Site Terms of Service on Web Scraping

Most websites publish terms of service that outline what users can and cannot do with the site’s content. This matters for web scraping because many prominent websites include clauses that explicitly prohibit it. Understanding and respecting these terms not only avoids potential legal issues but also fosters ethical data collection practices.

Anyone looking to use data from multiple websites should read each site’s terms thoroughly. Some sites offer public APIs, which provide a permitted and often easier way to retrieve data. Checking what each site allows is a necessary first step in any web scraping effort.

Creating a Web Scraping Summary: Best Practices

In order to create an effective web scraping summary, one must implement best practices that respect the original source’s content while providing valuable insights. This includes paraphrasing rather than directly copying text to avoid copyright infringement. Ensuring that the extraction process follows ethical guidelines is essential for establishing trust and credibility.

Additionally, Latent Semantic Indexing (LSI), a technique that relates terms which tend to appear in similar contexts, can help keep a generated summary relevant. Covering related terms, such as ‘scraping news sites’ and ‘web scraping summary,’ gives the summary more context.

Ethical Considerations in Web Scraping

Ethics play a significant role in web scraping. When collecting data from online platforms, it’s critical to consider the implications of your actions. Beyond legality, scrapers have an ethical responsibility to respect content owners and their rights. This means not only adhering to the terms of service but also representing the data accurately and avoiding misuse of the information.

Being ethical in web scraping doesn’t just protect you; it preserves the integrity of the digital ecosystem. By opting to summarize content rather than replicate it entirely, scrapers can contribute positively to the web environment while also providing value to their audience.

Common Techniques for Web Scraping News Articles

Web scraping news articles involves a variety of techniques that require adapting to different website architectures. Technologies like BeautifulSoup for Python allow scrapers to parse HTML content effectively, while tools such as Selenium can be used for more interactive web pages. Understanding the technical aspects while keeping the legal caveats in mind ensures productive scraping.

Moreover, combining these technical skills with a clear understanding of the site’s terms of service allows scrapers to innovate responsibly. Planning and strategizing around site design, data storage, and output formats can optimize the extraction process while complying with all legal frameworks.
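
As a rough illustration of the parsing step described above, the sketch below pulls headline text out of a small HTML snippet. It deliberately uses Python’s built-in `html.parser` module instead of BeautifulSoup so that it runs without extra dependencies; the `HeadlineParser` class, the `extract_headlines` helper, and the sample markup are all hypothetical, and a real page would need selectors matched to its actual structure (and permission to be scraped in the first place).

```python
from html.parser import HTMLParser


class HeadlineParser(HTMLParser):
    """Collect the text found inside every <h2> element."""

    def __init__(self):
        super().__init__()
        self._in_h2 = False   # True while we are inside an <h2>
        self._parts = []      # text fragments of the current headline
        self.headlines = []   # finished headlines, in document order

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True
            self._parts = []

    def handle_data(self, data):
        if self._in_h2:
            self._parts.append(data)

    def handle_endtag(self, tag):
        if tag == "h2" and self._in_h2:
            self.headlines.append("".join(self._parts).strip())
            self._in_h2 = False


# Hypothetical markup standing in for a downloaded page.
SAMPLE_HTML = """
<html><body>
  <h2>First headline</h2>
  <p>Body text that is ignored.</p>
  <h2>Second <em>headline</em></h2>
</body></html>
"""


def extract_headlines(html):
    """Return the text of each <h2> in the given HTML string."""
    parser = HeadlineParser()
    parser.feed(html)
    parser.close()
    return parser.headlines


if __name__ == "__main__":
    print(extract_headlines(SAMPLE_HTML))
```

BeautifulSoup expresses the same idea more compactly, and Selenium becomes relevant only when the headlines are rendered by JavaScript after the page loads.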

The Role of APIs in Content Acquisition

APIs serve as a bridge for acquiring content from websites legally and efficiently. Instead of scraping websites directly, utilizing an available API can save time, effort, and the risk of violating terms of service. Many major news outlets offer APIs that provide structured data feeds, allowing developers to access headlines, articles, and multimedia content seamlessly.

Furthermore, APIs usually have clear usage guidelines, facilitating ethical data utilization. When scraping isn’t an option or is heavily restricted, exploring these APIs can provide a legitimate alternative for obtaining the information required for analysis or summarization.
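
To make the contrast with scraping concrete, here is a minimal sketch of working with a news API. The endpoint `https://api.example.com/v1/articles`, the `api-key` parameter, and the response layout are invented for illustration and do not describe any real provider; a real API’s documentation defines its own URL, authentication, and fields.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint; substitute the one documented by the provider.
API_BASE = "https://api.example.com/v1/articles"


def build_request_url(query, api_key, page=1):
    """Build a search URL with properly encoded query parameters."""
    params = {"q": query, "page": page, "api-key": api_key}
    return f"{API_BASE}?{urlencode(params)}"


# Hypothetical response body, shaped the way many JSON APIs return results.
SAMPLE_RESPONSE = json.dumps({
    "articles": [
        {"headline": "Example story one", "url": "https://example.com/one"},
        {"headline": "Example story two", "url": "https://example.com/two"},
    ]
})


def parse_articles(payload):
    """Return (headline, url) pairs from a JSON response string."""
    data = json.loads(payload)
    return [(a["headline"], a["url"]) for a in data.get("articles", [])]


if __name__ == "__main__":
    print(build_request_url("web scraping", "DEMO_KEY"))
    for headline, url in parse_articles(SAMPLE_RESPONSE):
        print(headline, "->", url)
```

Because the response is already structured, there is no HTML to parse and no page layout to track, which is a large part of why an API is usually the lower-risk route.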

Consequences of Non-Compliance in Web Scraping

Failing to comply with the terms of service can lead to various negative outcomes for those engaged in web scraping. From having access to data revoked to facing legal action from the content providers, non-compliance poses serious risks. Websites monitor for automated data extraction, and aggressive enforcement of policies can result in costly repercussions.

Understanding these consequences is therefore essential for anyone involved in web scraping. By adhering to the legal guidelines, scrapers can focus on their projects without the risk of enforcement action hanging over them.

Future Trends in Web Scraping and Content Acquisition

As technology evolves, the field of web scraping is also shifting toward more sophisticated methods that respect legal frameworks. Future trends may include advanced machine learning algorithms that help distinguish between permissible data collection and prohibited practices based on specific site terms of service and regional regulations.

Additionally, innovations in web technologies will likely reshape how scrapers gather content. Increased awareness and advancements in ethical scraping practices will contribute to a landscape that values responsible data acquisition, paving the way toward a more sustainable approach for businesses and developers alike.

Frequently Asked Questions

What are the web scraping restrictions imposed by site terms of service?

Web scraping restrictions are often outlined in a site’s terms of service (TOS). Many sites prohibit scraping to protect their content, user privacy, and server resources. Violating these restrictions can lead to legal consequences.

Is web scraping legal if it follows content summarization rules?

Web scraping can be legal when it neither infringes copyright nor breaches the site’s terms of service; summarizing content instead of copying it helps with the former but does not override the latter. Always review a website’s TOS before scraping.

How do different sites enforce web scraping restrictions?

Sites enforce web scraping restrictions through technical measures such as CAPTCHAs, IP blocking, and user-agent detection. Respect these measures rather than working around them, since circumventing them can create legal issues.
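
One way a client can respect these signals is to treat blocking responses as a reason to slow down or stop. The sketch below is an assumption about how such a policy might look, not a standard: the `next_action` function, its return values, and the 60-second default are hypothetical, while the status codes (429 Too Many Requests, 403 Forbidden, 401 Unauthorized) and the `Retry-After` header are standard HTTP.

```python
DEFAULT_WAIT_SECONDS = 60  # assumed fallback when the server gives no usable hint


def next_action(status_code, headers):
    """Decide how a polite client should react to an HTTP response.

    Returns a tuple (action, delay_seconds) where action is one of
    "continue", "wait", or "stop".
    """
    if status_code == 429:
        # Retry-After may be a number of seconds or an HTTP date; this
        # sketch only understands the numeric form and otherwise falls
        # back to the default wait.
        retry_after = headers.get("Retry-After", "")
        delay = int(retry_after) if retry_after.isdigit() else DEFAULT_WAIT_SECONDS
        return ("wait", delay)
    if status_code in (401, 403):
        # The site is refusing access; do not try to work around it.
        return ("stop", 0)
    if 200 <= status_code < 300:
        return ("continue", 0)
    # Anything else (redirect loops, server errors, ...) is treated conservatively.
    return ("stop", 0)


if __name__ == "__main__":
    print(next_action(429, {"Retry-After": "120"}))
    print(next_action(403, {}))
    print(next_action(200, {}))
```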

What should I know about web scraping news sites regarding legal restrictions?

When scraping news sites, it’s crucial to understand their terms of service, as many restrict the use of automated tools. You should consider the ethical implications and seek permission where applicable.

What is a web scraping summary and how does it relate to site terms of service?

A web scraping summary is a concise representation of the data obtained through scraping. It’s vital to ensure that creating such summaries doesn’t violate a site’s terms of service, which may restrict how their content is used.

Are there any consequences for violating web scraping restrictions on news sites?

Violating web scraping restrictions on news sites can lead to account bans, cease-and-desist letters, or even lawsuits. Always check the site’s TOS to avoid such outcomes.

How can I check if web scraping is allowed on a specific website?

To check if web scraping is allowed, review the website’s terms of service and robots.txt file. These resources will provide guidelines on what is permissible regarding scraping their content.
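
The robots.txt part of that check can be automated. The sketch below uses Python’s standard `urllib.robotparser` module against a sample file held in a string; the rules, the `example-bot` user agent, and the `is_allowed` helper are hypothetical. Keep in mind that robots.txt only covers crawler access and is no substitute for reading the terms of service.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real one lives at the site root,
# for example https://example.com/robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/news/story.html", "/private/data.html"):
        url = "https://example.com" + path
        print(path, is_allowed(SAMPLE_ROBOTS_TXT, "example-bot", url))
```

To check a live site instead of a string, `RobotFileParser` also offers `set_url()` and `read()`, which download the file before `can_fetch()` is called.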

| Aspect | Description |
|---|---|
| Understanding Web Scraping Restrictions | Web scraping involves extracting data from websites, but many sites have restrictions against it. |
| Terms and Conditions | Sites like nytimes.com explicitly state in their terms that scraping is forbidden. |
| Legal Implications | Ignoring these restrictions can lead to legal consequences including bans or lawsuits. |
| Alternatives | Instead of scraping, consider using available APIs or summaries to access data. |

Summary

Web scraping restrictions are critical to understand for anyone looking to extract data from websites legally. These restrictions protect content creators and provide guidelines on how their content may be used. For instance, sites like nytimes.com have explicit rules against scraping, and disregarding these could lead to significant legal repercussions. Opting for alternatives such as APIs or summaries can provide valuable information without infringing on ownership rights.