SkinnyTok: The Risks of Viral Weight Loss Trends

SkinnyTok is one of the most talked-about weight loss trends on TikTok, drawing the attention of millions of people eager to lose weight quickly. Countless creators share their personal journeys and tips under the hashtag, and many promote extreme approaches to thinness that can be unhealthy. While some influencers discuss healthy eating habits and emphasize portion control, others may unintentionally glamorize disordered eating, putting viewers at risk of harmful behaviors. As the hashtag continues to spread, it’s crucial to scrutinize the messages being endorsed in social media fitness and weight loss content. With over 60,000 videos dedicated to it, SkinnyTok has clearly sparked a significant conversation about dietary choices and self-image among its audience.

The SkinnyTok phenomenon highlights the intricate relationship between social media and body image, particularly for people seeking faster results from their weight loss efforts. Sometimes referred to as part of the thinspiration movement, this wave of content promotes extreme weight reduction strategies to users looking for the quickest route to their fitness goals. Yet the allure of instant transformation often overlooks the importance of sustainable, healthy eating practices that nourish the body instead of depriving it. As discussion of the trend grows, it’s essential to consider the potential consequences of these weight loss ideologies and to emphasize a more balanced approach to dieting and self-care. By fostering awareness and positive messaging online, individuals can navigate this landscape more effectively without falling prey to toxic diet culture.

The Impact of SkinnyTok on Weight Loss Trends

The rise of SkinnyTok has drawn significant attention to its influence on how TikTok users approach weight loss. With over 60,000 videos associated with the hashtag, it’s clear that many people are actively engaging with content that promotes extreme weight loss methods. The trend often glorifies rapid transformations that can lead to unhealthy practices and unrealistic body standards. Even popular influencers, like Mandana Zarghami, acknowledge both the motivational appeal of such content and the risk of disordered eating that often accompanies it.

As SkinnyTok continues to spread, viewers are increasingly bombarded with mixed messages about fitness and nutrition. While some creators advocate healthy eating habits and balanced lifestyles, others focus narrowly on drastic measures for quick weight loss. This dissonance can harm viewers, particularly those with a history of disordered eating. Because the platform’s algorithms tend to promote highly engaging content, users need to stay aware of what they consume and make sure their sources are grounded in healthy, supportive principles.

Healthy Eating Habits vs. Social Media Influence

Navigating healthy eating habits in an era of social media can be challenging. Platforms like TikTok are rife with various dietary trends that often lack scientific backing. In contrast to the popular SkinnyTok trend, which emphasizes extreme weight loss, experts recommend embracing a more holistic approach to nutrition. This includes incorporating diverse foods that promote overall well-being rather than focusing solely on appearance. As Dr. Jillian Lampert of The Emily Program points out, the glorification of drastic measures to alter body image can perpetuate cycles of unhealthy behavior.

It’s essential for individuals to cultivate a healthy relationship with food, viewing it as a positive source of energy rather than something to fear. Practicing mindful eating, where one pays attention to hunger cues and emotional triggers, can foster a more sustainable lifestyle. Influencers and content creators have a responsibility to share these messages and discourage harmful perceptions about food, countering the toxic diet culture prevalent on social media.

By promoting awareness around healthy eating, people can prioritize nutrition, which supports both physical and mental health. Individuals should seek out content that encourages balanced diets, regular exercise, and self-acceptance to foster a holistic approach to health.

Navigating Disordered Eating Associated with SkinnyTok

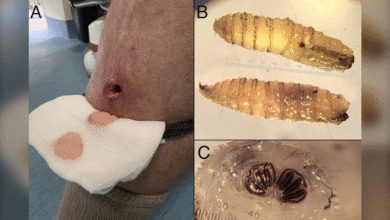

The SkinnyTok trend highlights the dangerous intersection between social media and disordered eating. Many individuals may find themselves inadvertently swept up in a cycle of comparison, leading to negative body image and unhealthy eating behaviors. As Dr. Anastasia Rairigh emphasizes, the extreme behaviors promoted by some TikTok creators can have grave consequences, including severe health issues like heart arrhythmias. This reality underscores the urgent need for accountability among influencers who share detrimental advice.

It’s vital for those consuming content related to SkinnyTok to remain vigilant about their mental and physical health. The increasing exposure to extreme body ideals can trigger harmful thoughts and behaviors in susceptible individuals. Education about the signs of disordered eating can help empower users to recognize when content is straying into dangerous territory. Ultimately, promoting body positivity and encouraging conversations around mental health can help break the cycle perpetuated by harmful social media trends.

The Role of Social Media in Fitness and Wellness

Social media platforms, particularly TikTok, play a significant role in shaping fitness and wellness narratives. With an ever-growing audience eager for quick tips and transformations, misleading trends like SkinnyTok can overshadow beneficial practices. While social media can serve as an excellent resource for motivation and community support, it also poses risks through the sensational emphasis on extreme body shapes and diets. This dichotomy underlines the importance of approaching social media consumption mindfully.

To navigate this digital landscape, consumers should seek positive influences that advocate sustainable fitness journeys without sacrificing overall health. Engaging with creators who emphasize safe practices and realistic goals can help mitigate the adverse effects of misleading content and misinformation. Building a supportive network online can encourage healthier habits and a more balanced perspective on fitness.

Responsible Influencing in the Age of TikTok

The responsibility of content creators has never been more critical than in the age of TikTok, especially with trends like SkinnyTok gaining traction. Influencers have a unique platform to educate their audiences about healthy living while also shaping perceptions of body image. Those who emphasize balanced eating and regular activity rather than extreme weight loss tactics can foster a more positive environment. Such creators show that social media can improve lives and challenge the glorification of unhealthy habits.

Influencers should aim to share realistic testimonials about their wellness journeys, stressing the importance of mental health and body positivity. By doing so, they can inspire their followers to cultivate a healthy lifestyle without succumbing to pressure from harmful societal standards. The narrative surrounding fitness must shift from one of extreme measures to one of empowerment and self-acceptance.

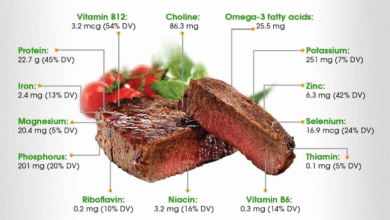

The Dangers of Malnutrition in Pursuit of Thinness

The pursuit of extreme thinness, often celebrated across platforms like TikTok, poses significant health risks, a point highlighted by medical professionals such as Dr. Brett Osborn. Malnutrition resulting from fad diets can lead to devastating physical consequences, including weakened bones and other long-term health complications. Awareness of these risks is essential as users engage with trends like SkinnyTok, where the allure of a desirable body may overshadow the imperative of overall health.

Promoting a balanced view of body weight is key in combating the negative impacts of diet culture. Advocating for nutrition that supports bodily functions rather than solely aesthetic goals creates a healthier discourse around weight management. Health and wellness influencers play a crucial role in redefining beauty standards, emphasizing strength and vitality over thinness.

Healthy Alternatives to Popular Weight Loss Trends

As trends such as SkinnyTok rise in popularity, it’s crucial to consider healthier alternatives to weight loss that promote sustainable lifestyles. Instead of engaging with harmful practices, individuals can adopt changes that center on balanced diets, increased physical activity, and mental wellness. Emphasizing whole foods, moderate portions, and mindful eating habits can yield better long-term results while supporting overall wellbeing.

Additionally, practices such as regular physical activity, stress management techniques like yoga or meditation, and maintaining healthy social connections can drastically improve one’s health journey. Educating oneself on nutritional values and engaging with supportive communities can create a positive feedback loop that encourages healthier choices, moving away from the toxicity often linked to trends like SkinnyTok.

The Psychological Impact of Extreme Weight Loss Content

The psychological ramifications of consuming extreme weight loss content cannot be overstated. Users who frequently encounter SkinnyTok content may experience heightened self-criticism and dissatisfaction with their bodies. This kind of environment creates a breeding ground for eating disorders, where individuals feel compelled to adhere to unhealthy standards set by social media. As experts warn, these feelings can quickly spiral into more severe mental health issues.

By promoting body positivity and the importance of mental health, social media users can help steer conversations around fitness and wellness in a healthier direction. Encouraging awareness and acceptance of diverse body types can significantly reduce the anxiety associated with thinness trends. It’s vital for both content creators and consumers to foster an online space that champions mental well-being rather than perpetuating harmful ideals.

Cultivating a Balanced Approach to Wellness

Amid the noise of social media trends like SkinnyTok, cultivating a balanced approach to wellness is crucial. This includes embracing diverse health practices that prioritize not only physical attributes but also mental and emotional well-being. Healthy eating should be seen as a lifelong journey, filled with choices that nourish both body and mind, rather than as a temporary fix.

Individuals are encouraged to take control of their health narratives by setting realistic goals and seeking information from credible sources. Establishing a support system on social media that promotes healthy discussions about fitness can foster an environment where everyone feels capable of achieving their personal wellness goals without succumbing to undue pressure. By advocating for comprehensive health practices, users can inspire others to pursue balance and vitality in their everyday lives.

Frequently Asked Questions

What is SkinnyTok and how does it relate to TikTok weight loss trends?

SkinnyTok is a viral trend on TikTok that showcases various weight loss methods aimed at achieving extreme thinness quickly. While some creators share healthy eating habits, others promote harmful practices that could glamorize disordered eating and unrealistic body standards, making it crucial for viewers to discern which content to follow.

How can SkinnyTok influence healthy eating habits?

SkinnyTok can influence healthy eating habits by highlighting portion control, daily movement, and nutritious food choices. However, it is essential for followers to engage with content that promotes balanced eating rather than extreme dieting, as some SkinnyTok trends may unintentionally encourage unhealthy behaviors.

What are the dangers of following SkinnyTok weight loss trends?

Following SkinnyTok weight loss trends can pose significant risks, including the glorification of extreme thinness and unhealthy behaviors. Experts warn that this trend may lead to disordered eating and health issues such as malnutrition, hair loss, and decreased bone density if not approached with caution.

Can SkinnyTok content be triggering for those with a history of disordered eating?

Yes, SkinnyTok content can be triggering for individuals who have experienced disordered eating. The emphasis on extreme body image and drastic weight loss methods can prompt harmful thoughts and behaviors, making it essential for followers to be mindful of their consumption of such content.

How can individuals maintain a healthy perspective while using SkinnyTok?

To maintain a healthy perspective while using SkinnyTok, individuals should focus on creators who promote wellness, positive body image, and balanced nutrition. Prioritizing content that encourages physical health over extreme thinness is vital to combat the toxic aspects of diet culture portrayed in some TikTok weight loss trends.

What should viewers look out for when consuming SkinnyTok content?

Viewers should be vigilant about the messages conveyed in SkinnyTok content. It’s important to identify creators who advocate for building muscle, healthy eating habits, and overall wellness rather than those who promote extreme weight loss tactics or glamorize unhealthy body images.

What steps can individuals take to counteract the negative effects of SkinnyTok?

To counteract the negative effects of SkinnyTok, individuals can engage in critical thinking about the content they view, follow health professionals instead of influencers, and cultivate a positive relationship with food and body image. Seeking guidance from registered dietitians or mental health professionals can also provide extra support against the damaging impact of social media on body image.

| Key Aspects | Details |
|---|---|
| The SkinnyTok Trend | A popular weight-loss trend on TikTok featuring over 60,000 videos discussing extreme weight loss methods. |
| Influencer Perspectives | Mandana Zarghami emphasizes portion control and movement, while warning that some content glamorizes unhealthy habits. |
| Health Risks | Dr. Brett Osborn highlights malnutrition consequences and stresses that low body weight does not equal good health. |
| Impact of Algorithms | Social media algorithms amplify harmful messages, possibly worsening body image issues among viewers. |
| Balanced Nutrition Advocacy | Experts like Dr. Rairigh call for a healthy attitude towards food and caution against drastic weight-loss behaviors. |

Summary

SkinnyTok is gaining attention as a notable weight-loss trend on TikTok, but it comes with significant risks. While it offers some fitness and nutrition advice, the extreme methods promoted can lead to harmful health consequences. Influencers like Mandana Zarghami highlight the importance of promoting balanced lifestyles, yet there is a real concern about the glorification of unhealthy habits within the trend. It’s crucial for individuals to critically assess the content they consume and prioritize their health over extreme body standards.