Web Scraping: Legal Issues and Best Practices Explained

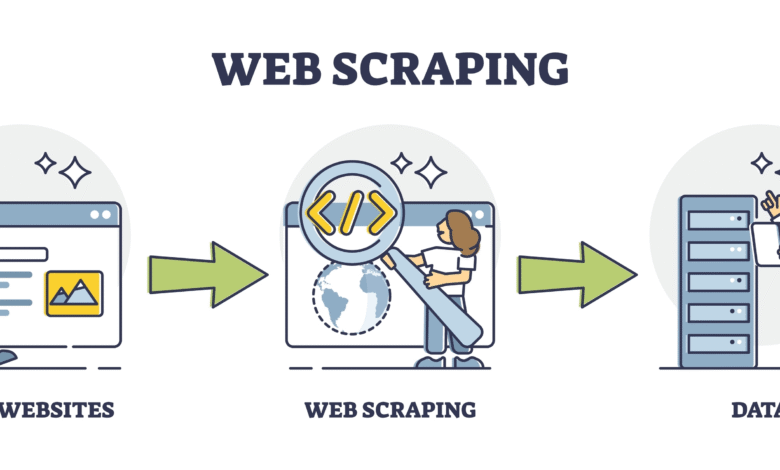

Web scraping is a technique for collecting and extracting data from websites, and it gives businesses access to information that would be impractical to gather by hand. With the rise of big data, knowing how to scrape websites efficiently has become a valuable skill for marketers and researchers alike. This tutorial covers the main tools available and the practical side of data extraction. It also covers the legal issues around web scraping, which you need to understand to comply with regulations and avoid disputes. With the right tools, you can collect data that informs decision-making and strategy.

Sometimes referred to as data harvesting or web data extraction, web scraping involves the automated gathering of information from online sources. This process allows users to collect vast quantities of data from multiple sites, converting it into structured formats for analysis. By employing various scraping techniques, organizations can aggregate market intelligence or monitor competitors effectively. When navigating this domain, it is essential to consider the ethical implications and the legal issues surrounding this practice. Understanding the nuances of data collection on the web is crucial for any data-driven initiative.

Understanding Web Scraping: An Introduction

Web scraping is the process of automatically extracting data from websites, which has become essential for many businesses and researchers. This technique enables users to gather information such as product details, pricing data, or even news articles without manual input. It utilizes scripts and crawlers to navigate through web pages and retrieve the desired information, ultimately converting it into a structured format for analysis.

While web scraping can unlock valuable insights, it is crucial to follow ethical guidelines and legal considerations. Understanding the nuances of data extraction helps prevent potential violations of usage policies and copyright laws. As digital environments evolve, so do the mechanisms of web scraping, fostering a balance between data utilization and respecting the original creators’ rights.

Essential Tools for Effective Web Scraping

Many web scraping tools are available for beginners and advanced users alike, each catering to different requirements. Popular choices include the Python libraries Beautiful Soup and Scrapy. Beautiful Soup parses HTML and lets you search the resulting document tree, while Scrapy is a full crawling framework that schedules requests and can export results to formats such as CSV and JSON. Neither library solves CAPTCHA challenges on its own; that requires separate handling.
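As a minimal sketch of the CSV generation step, the snippet below uses only Python’s standard `csv` module rather than Scrapy’s own exporters. The `rows_to_csv` helper and the sample product records are hypothetical and stand in for whatever fields your scraper actually collects.

```python
import csv
import io


def rows_to_csv(rows, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical scraped records; real field names depend on the target page.
products = [
    {"name": "Widget", "price": "9.99"},
    {"name": "Gadget, large", "price": "24.50"},
]

csv_text = rows_to_csv(products, ["name", "price"])
print(csv_text)
```

The writer quotes any value that contains a comma, so free-text fields scraped from a page do not break the column layout.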

In addition to programming libraries, there are user-friendly software options such as Octoparse and ParseHub. These applications enable users without coding experience to visually set up scraping projects through point-and-click interfaces. By integrating these tools into your workflow, you can significantly enhance the efficiency of your data collection process, ensuring that your web scraping endeavors yield meaningful results.

A Step-by-Step Guide: How to Scrape Websites Safely

When embarking on a web scraping project, it’s essential to have a well-defined plan to ensure safety and compliance. First, identify the target website and check its robots.txt file, which tells automated clients which paths they may and may not request. This shows you which sections of the site the operator allows crawlers to access; the site’s terms of service may add further restrictions. Once you have this information, select a scraping tool that fits your objectives.
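The robots.txt check can be sketched with the standard library’s `urllib.robotparser`. The sample rules, the `example-bot` user agent, and the `example.com` URLs below are made up for illustration; the rules are parsed from a string so that the example runs without network access.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt):
    """Build a parser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# Hypothetical robots.txt content for illustration only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = build_parser(SAMPLE_ROBOTS)

allowed = parser.can_fetch("example-bot", "https://example.com/products/")
blocked = parser.can_fetch("example-bot", "https://example.com/private/data")
delay = parser.crawl_delay("example-bot")

print(allowed, blocked, delay)
```

Against a live site you would instead call `set_url()` with the address of the site’s robots.txt and then `read()` before asking `can_fetch()`.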

After setting up your tool, develop a scraping strategy that includes determining the necessary data fields you wish to extract, whether that’s text, images, or links. Utilize XPath or CSS selectors to target specific elements effectively. Lastly, always review the data you collect for accuracy and relevance. By following these steps, you can safely navigate the complexities of how to scrape websites.
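To illustrate element targeting, here is a small sketch that uses the limited XPath subset supported by the standard library’s `xml.etree.ElementTree`. It assumes the markup is well formed, which real-world HTML often is not; a dedicated HTML parser such as Beautiful Soup is more forgiving. The sample page, class names, and the `extract_products` helper are invented for the example.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed page fragment used only for this example.
SAMPLE_PAGE = """\
<html>
  <body>
    <div class="product">
      <h2>Widget</h2>
      <span class="price">9.99</span>
      <a href="/products/widget">Details</a>
    </div>
    <div class="product">
      <h2>Gadget</h2>
      <span class="price">24.50</span>
      <a href="/products/gadget">Details</a>
    </div>
  </body>
</html>
"""


def extract_products(markup):
    """Return one dict per product block, covering text and link fields."""
    root = ET.fromstring(markup)
    records = []
    # XPath predicate: every <div> whose class attribute equals "product".
    for node in root.findall(".//div[@class='product']"):
        records.append({
            "name": node.findtext("h2"),
            "price": node.findtext("span[@class='price']"),
            "link": node.find("a").get("href"),
        })
    return records


products = extract_products(SAMPLE_PAGE)
print(products)
```

Listing the fields in one place, as the dictionary does here, also makes the later accuracy review easier because every record has the same shape.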

Legal Considerations in Web Scraping: What You Need to Know

Navigating the legal landscape of web scraping is crucial to avoid potential disputes and penalties. While scraping public information isn’t inherently illegal, it can infringe on copyrights, terms of service, or privacy laws if not done cautiously. Every website has its own rules regarding data extraction, and failing to comply can lead to legal repercussions, including cease-and-desist letters and lawsuits.

To mitigate risks, consider the ethical implications of your scraping activities. It’s pivotal to respect the rights of data owners and be transparent if your scraping practices serve business or commercial purposes. Consulting with legal advisors familiar with digital laws can also provide insight into best practices and help you navigate the complexities surrounding the legal issues of web scraping.

The Role of Data Extraction in Business Intelligence

Data extraction through web scraping plays a pivotal role in shaping effective business intelligence strategies. By collecting information from competitors or market trends, businesses can make informed decisions that drive growth. This valuable data empowers companies to analyze patterns, forecast market changes, and tailor their offerings to better meet consumer needs.

Moreover, the integration of web scraping into data analysis processes enhances the organization’s ability to derive insights. By automating the data extraction process, companies can focus more on interpretation and strategy formulation rather than spending time on manual data gathering. This shift not only streamlines operations but also increases overall efficiency, highlighting the importance of data extraction in modern business practices.

Choosing the Right Web Scraping Method for Your Needs

Selecting the appropriate web scraping method hinges on your specific needs and the complexity of the target website. For straightforward data extraction tasks, a simple script that requests pages and parses the returned HTML may suffice. However, if the site renders its content with JavaScript, the HTML returned by a plain request may not contain the data, and a more sophisticated approach such as driving a headless browser may be necessary.

It’s also important to consider the scale of your project when choosing a scraping method. Large-scale scraping operations may benefit from distributed scraping techniques, where multiple scripts run concurrently to gather data more quickly. Adapting your scraping approach to match your project requirements will ensure that you achieve optimal results while minimizing resource usage.
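Truly distributed scraping spreads work across several machines, but the same idea can be sketched on a single machine with a thread pool. In the example below, `scrape_many` and `fake_fetch` are hypothetical names, and the fetch function is a stand-in that returns canned HTML so the sketch runs offline.

```python
from concurrent.futures import ThreadPoolExecutor


def scrape_many(urls, fetch, max_workers=4):
    """Fetch every URL concurrently and return a {url: result} mapping."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with urls.
        results = list(pool.map(fetch, urls))
    return dict(zip(urls, results))


def fake_fetch(url):
    """Stand-in for a real HTTP request; returns canned HTML."""
    return f"<html>{url}</html>"


urls = [f"https://example.com/page/{n}" for n in range(1, 4)]
pages = scrape_many(urls, fake_fetch)
print(len(pages))
```

Keeping `max_workers` small limits how many requests hit the target site at once, which matters as much for the site’s servers as for your own resource usage.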

Common Challenges and Solutions in Web Scraping

Web scraping comes with its unique set of challenges that can affect the success of your data extraction efforts. Common obstacles include pagination, missing data, or encountering CAPTCHA protections that block automated requests. Addressing these issues often requires innovative solutions and adjustments to your scraping strategy.

For instance, pagination can be handled with scripts that programmatically step through multiple pages, while CAPTCHA challenges are often handled through services that specialize in solving them. Understanding these challenges and taking a proactive approach to problem-solving is essential for effective web scraping.
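A pagination loop can be sketched as follows. The example assumes each fetched page has already been reduced to a dictionary with an `items` list and a `next` link, which is an invented shape for illustration; `scrape_all_pages` and `FAKE_SITE` are hypothetical names, and the fake site replaces real HTTP calls.

```python
def scrape_all_pages(start_url, fetch, max_pages=50):
    """Follow 'next' links from start_url and collect every page's items."""
    items = []
    seen = set()
    url = start_url
    # Stop on a missing next link, a repeated URL, or the page limit.
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        page = fetch(url)
        items.extend(page["items"])
        url = page.get("next")
    return items


# Hypothetical three-page listing keyed by URL.
FAKE_SITE = {
    "/list?page=1": {"items": ["a", "b"], "next": "/list?page=2"},
    "/list?page=2": {"items": ["c"], "next": "/list?page=3"},
    "/list?page=3": {"items": ["d"], "next": None},
}

all_items = scrape_all_pages("/list?page=1", FAKE_SITE.__getitem__)
print(all_items)
```

The `seen` set and the `max_pages` cap guard against sites whose next links loop back on themselves.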

Best Practices for Web Scraping Projects

Implementing best practices in your web scraping projects is paramount to ensure success and compliance with applicable laws. Start by clearly documenting your scraping objectives, methodologies, and any legal considerations involved. Keeping a consistent log of your scraping sessions can also help in troubleshooting potential issues.
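One possible way to keep such a log is Python’s standard `logging` module. In this sketch the logger name, the message fields, and the URLs are illustrative choices, and the log is written to an in-memory stream so the example is self-contained.

```python
import io
import logging


def make_session_logger(stream):
    """Create a logger that writes one line per scraping event to stream."""
    logger = logging.getLogger("scrape_session")
    logger.setLevel(logging.INFO)
    logger.handlers.clear()   # avoid duplicate handlers on repeated calls
    logger.propagate = False  # keep output out of the root logger
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


log_stream = io.StringIO()
log = make_session_logger(log_stream)

# Hypothetical events from one scraping session.
log.info("fetched url=%s status=%d items=%d", "https://example.com/p/1", 200, 12)
log.warning("skipped url=%s reason=%s", "https://example.com/p/2", "disallowed by robots.txt")

log_text = log_stream.getvalue()
print(log_text)
```

For a real session you would likely swap the stream for a `logging.FileHandler` and add `%(asctime)s` to the format so each entry carries a timestamp.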

Additionally, always ensure your scraping scripts are efficient and respectful of the target website’s resources. Setting appropriate delays between requests and limiting the amount of data collected at one time can prevent overloading servers and reduce the risk of being blocked. By adhering to these best practices, you can optimize your web scraping activities while maintaining ethical standards.
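The delay between requests can be enforced with a small throttle, sketched below. The `Throttle` class is a hypothetical helper, not part of any library. Its clock and sleep functions are injectable so the demonstration can use a fake clock instead of actually pausing.

```python
import time


class Throttle:
    """Enforce a minimum delay in seconds between consecutive requests."""

    def __init__(self, min_delay, clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_delay has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_delay - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


# Demonstration with a fake clock so nothing actually sleeps.
fake_now = [0.0]
slept = []


def fake_sleep(seconds):
    slept.append(seconds)
    fake_now[0] += seconds


throttle = Throttle(2.0, clock=lambda: fake_now[0], sleep=fake_sleep)
throttle.wait()      # first request: no delay needed
fake_now[0] += 0.5   # pretend the request took half a second
throttle.wait()      # second request: sleeps for the remaining 1.5 seconds
print(slept)
```

In a real scraper you would create `Throttle(delay)` with the default clock and call `wait()` immediately before each request.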

Future Trends in Web Scraping and Data Collection

The future of web scraping is likely to be significantly influenced by advancements in artificial intelligence and machine learning. As technologies evolve, so too will the techniques associated with data extraction, enabling more sophisticated and automated scraping processes. These developments promise to make scraping more efficient, allowing for deeper data insights and quicker adaptation to changing web environments.

Moreover, with regulatory frameworks around data privacy becoming more stringent, the future of web scraping will also necessitate a greater focus on compliance and ethical standards. Scrapers will need to become adept at navigating complex legal landscapes while still capitalizing on the rich repositories of data available on the web. Recognizing these trends is crucial for anyone looking to leverage web scraping for competitive advantage in their field.

Frequently Asked Questions

What is web scraping and how does it relate to data extraction?

Web scraping is the automated process of extracting data from websites. It enables users to collect information from various online sources efficiently, which is a crucial aspect of data extraction. By using web scraping, individuals and businesses can gather valuable data without manually browsing each site.

How can I get started with a web scraping tutorial?

To get started, first choose a suitable programming language such as Python, which offers libraries like Beautiful Soup and Scrapy. Then look for beginner-friendly tutorials that guide you through the basics of sending HTTP requests, parsing HTML content, and extracting the required data.
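Those basics can be sketched with the standard library alone, before reaching for Beautiful Soup or Scrapy. In the example below, `LinkCollector`, `build_request`, `extract_links`, and the `example-bot/0.1` user agent are hypothetical names. The request is only constructed, not sent, so the sketch runs offline.

```python
from html.parser import HTMLParser
from urllib.request import Request


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag fed to the parser."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def build_request(url, user_agent="example-bot/0.1"):
    """Prepare an HTTP request that identifies the scraper to the server."""
    return Request(url, headers={"User-Agent": user_agent})


def extract_links(html):
    """Parse HTML text and return the list of link targets it contains."""
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


request = build_request("https://example.com/")
# To send it: urllib.request.urlopen(request, timeout=10), then decode the body.

sample_html = '<p><a href="/a">A</a> <a href="/b">B</a> <a>no href</a></p>'
links = extract_links(sample_html)
print(links)
```

The same three steps (request, parse, extract) apply when you move to a dedicated library; only the parsing and selection calls change.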

What are the best practices for how to scrape websites effectively?

To scrape websites effectively, respect the site’s robots.txt file, minimize server load by adding delays between requests, and collect only the data you need. Additionally, familiarize yourself with the legal issues around web scraping to avoid copyright infringement.

What legal issues in web scraping should I be aware of?

Legal issues in web scraping include copyright infringement, terms of service violations, and potential breaches of privacy. It’s important to check a website’s terms of use and to ensure compliance with relevant legal frameworks when scraping data to avoid legal repercussions.

What are some popular web scraping tools I can use?

Some popular web scraping tools include ParseHub, Octoparse, and WebHarvy. These tools offer user-friendly interfaces and robust features for web scraping without requiring extensive coding knowledge, making it easier to extract data efficiently from various sources.

| Key Point | Details |
|---|---|
| Site restrictions | Some websites do not permit automated scraping or content extraction. |
| Specific websites | This includes sites like nytimes.com, which restrict automated use of their content. |

Summary

Web scraping is the automated extraction of data from websites, and it comes with limits. Some websites explicitly restrict automated scraping, including prominent ones like the New York Times. Users must understand and respect these boundaries to avoid legal complications and ensure ethical data usage.