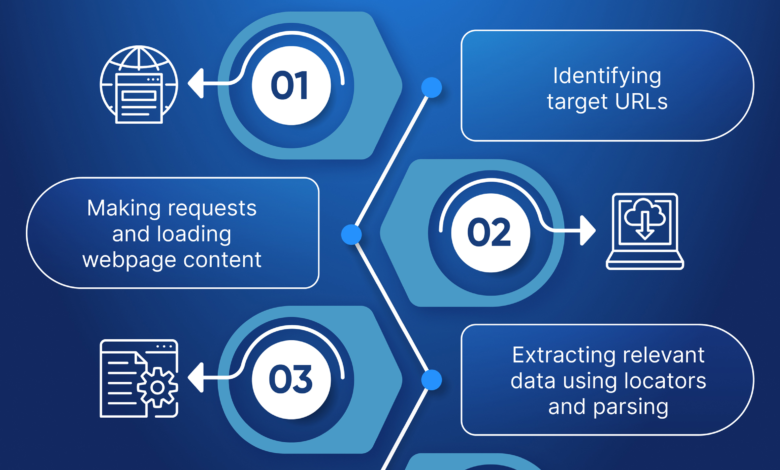

—that hold the desired information.

Once you have identified the elements to scrape, you can write a script to extract them. Python is the usual choice: a library such as BeautifulSoup parses the HTML into a tree that you can search by tag name or by CSS selector. The syntax is simple, and the same approach works on large, deeply nested pages, as long as the content is present in the HTML the server returns.
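Here is a minimal sketch of this, assuming BeautifulSoup 4 is installed (pip install beautifulsoup4). It parses an inline HTML string instead of a live page. The markup, the class names `product`, `name` and `price`, and the `extract_products` helper are all hypothetical. They exist only to show a CSS-selector lookup (`select`, `select_one`) next to a plain tag lookup (`find`).

```python
from bs4 import BeautifulSoup

# A small, made-up HTML snippet standing in for a downloaded page.
SAMPLE_HTML = """
<html>
  <body>
    <h1>Product listing</h1>
    <div class="product">
      <h2 class="name">Desk lamp</h2>
      <span class="price">$24.99</span>
      <a href="/products/lamp">Details</a>
    </div>
    <div class="product">
      <h2 class="name">Office chair</h2>
      <span class="price">$149.00</span>
      <a href="/products/chair">Details</a>
    </div>
  </body>
</html>
"""


def extract_products(html: str) -> list[dict]:
    """Return name, price, and link for every div.product element."""
    soup = BeautifulSoup(html, "html.parser")  # built-in parser, no extra install
    products = []
    # CSS selector: every <div> whose class list includes "product"
    for card in soup.select("div.product"):
        name = card.select_one("h2.name").get_text(strip=True)
        price = card.select_one("span.price").get_text(strip=True)
        # Tag-based lookup: the first <a> inside the card
        link = card.find("a")["href"]
        products.append({"name": name, "price": price, "link": link})
    return products
```

Calling `extract_products(SAMPLE_HTML)` returns one dictionary per product. To use it on HTML downloaded from a real page, change the selectors to match that page's markup.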

Ethical Considerations in Web Scraping

While web scraping can be extremely beneficial for data collection and analysis, it’s essential to follow ethical scraping practices. Respecting a website’s terms of service is crucial, as many sites explicitly prohibit automated scraping. Doing so protects the site owner’s interests and reduces your own legal risk, keeping your data extraction responsible.

Ethical scraping also means being mindful of the load you place on a web server. Slowing down your requests, spacing them at random intervals, and scraping only during off-peak hours all reduce the impact on the site’s performance for its regular visitors. By following these principles, you can scrape responsibly while still obtaining the data you need.
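The pacing advice above can be sketched as a small helper. This is an illustration under several assumptions. `polite_fetch_all` is a hypothetical name, and the caller supplies the actual `fetch` function. The 2 to 5 second default delay range is an arbitrary example, not a figure from any site or standard. The `sleep` parameter is injectable, so the delays can be recorded instead of actually waited out.

```python
import random
import time
from typing import Callable, Iterable


def polite_fetch_all(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    min_delay: float = 2.0,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict[str, str]:
    """Fetch each URL in turn, pausing a random interval between requests.

    `fetch` is any caller-supplied function that takes a URL and returns
    the page body (for example a thin wrapper around urllib.request).
    """
    if min_delay < 0 or max_delay < min_delay:
        raise ValueError("require 0 <= min_delay <= max_delay")
    results = {}
    for i, url in enumerate(urls):
        if i > 0:
            # Randomised gap so requests do not arrive at a fixed, bursty rhythm.
            sleep(random.uniform(min_delay, max_delay))
        results[url] = fetch(url)
    return results
```

No pause is added before the first request or after the last one. Only the gaps between consecutive requests are delayed.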

Leveraging Advanced Data Extraction Techniques

Advanced data extraction techniques can make web scraping projects more effective. Regular expressions (regex), for instance, let you pull values with a predictable shape, such as prices, dates, or email addresses, out of text you have already scraped. This is more precise than keeping whole blocks of text. Combining regex with libraries like Pandas then makes it easier to analyze and manipulate the extracted data.
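As a hedged illustration of regex extraction, the snippet below pulls dollar amounts and email addresses out of a made-up piece of scraped text. It uses Python's built-in `re` module. Both patterns are deliberately simple assumptions, and real-world email matching in particular needs a far more careful pattern.

```python
import re

# Hypothetical text as it might come out of scraped product descriptions.
SCRAPED_TEXT = """
Desk lamp - now $24.99 (was $29.99). Contact: sales@example.com
Office chair - now $149.00. Questions? support@example.com
"""

# A named group makes the intent of the captured part explicit.
PRICE_RE = re.compile(r"\$(?P<amount>\d+(?:\.\d{2})?)")
# Simplified email pattern (non-capturing groups, so findall returns full matches).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def extract_prices(text: str) -> list[float]:
    """Return every dollar amount in the text as a float."""
    return [float(m.group("amount")) for m in PRICE_RE.finditer(text)]


def extract_emails(text: str) -> list[str]:
    """Return every email-like string matched by the simple pattern."""
    return EMAIL_RE.findall(text)
```

For column-wise work, pandas offers the same idea through `Series.str.extract`. It applies a pattern with capture groups to every row of a column.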

Pagination scraping is another useful technique for sites that spread their data across multiple pages. Instead of scraping only the first page, the script follows each page’s “next” link (or steps through a page-number parameter) until no pages remain. This way the full dataset is gathered automatically, without missing entries.
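Here is a minimal pagination sketch, assuming BeautifulSoup is installed. It also assumes that each page marks its items with `li.item` and its next-page link with `a.next`. Those class names, the `scrape_paginated` name, and the caller-supplied `fetch` function are hypothetical. The visited set and the `max_pages` cap stop the loop if a site's pagination links circle back on themselves.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def scrape_paginated(start_url, fetch, max_pages=100):
    """Collect item text from every page by following 'next' links.

    `fetch(url) -> str` is supplied by the caller. `max_pages` and the
    visited set guard against endless or circular pagination.
    """
    items = []
    visited = set()
    url = start_url
    while url and url not in visited and len(visited) < max_pages:
        visited.add(url)
        soup = BeautifulSoup(fetch(url), "html.parser")
        items.extend(li.get_text(strip=True) for li in soup.select("li.item"))
        # Assumed markup: the link to the following page is <a class="next">.
        next_link = soup.select_one("a.next")
        # Resolve relative hrefs such as "?page=2" against the current URL.
        url = urljoin(url, next_link["href"]) if next_link else None
    return items
```

In practice `fetch` would wrap a real HTTP request. It could also add a pause between pages to keep the load on the server low.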

Choosing the Right Web Scraping Tools for Success

Selecting the right web scraping tools is vital for achieving your data collection goals efficiently. Numerous options are available, ranging from free to paid services, each designed to suit different skill levels and scraping needs. If you are new to the field, starting with user-friendly tools like WebHarvy or Apify can provide a gentle learning curve and immediate results.

For those experienced in coding, open-source frameworks offer more flexibility and power for complex scraping tasks. Scrapy is a full crawling framework suited to large, structured crawls. Selenium drives a real browser, so it can capture content that JavaScript renders after the page loads, which static HTML parsers would miss.

Common Challenges in Web Scraping and How to Overcome Them

Despite the many benefits of web scraping, various challenges can arise during data extraction. Websites frequently change their HTML structure or layout, which breaks scraping scripts and forces regular updates. To limit the damage, write adaptable code:

- Prefer stable hooks such as id attributes over long positional selectors.
- Try fallback selectors when the first choice fails.
- Log a clear warning when nothing matches, so the breakage is noticed instead of silently producing empty fields.
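One way to make selectors more tolerant of layout changes is to try several in order of preference. The sketch below assumes BeautifulSoup. The selector list and the `select_first_text` helper are hypothetical examples, not tied to any real site.

```python
import logging

from bs4 import BeautifulSoup

logger = logging.getLogger(__name__)

# Ordered from most to least preferred. These selectors are hypothetical
# examples for a price element whose markup has changed over time.
PRICE_SELECTORS = [
    "[data-testid='price']",   # stable attribute hook, if the site provides one
    "span.price",              # current class name
    "div.product-price span",  # older layout
]


def select_first_text(soup, selectors):
    """Return stripped text of the first selector that matches, else None."""
    for selector in selectors:
        element = soup.select_one(selector)
        if element is not None:
            return element.get_text(strip=True)
    # Warn so a layout change is noticed instead of silently yielding blanks.
    logger.warning("no selector matched: %s", selectors)
    return None
```

The order of the list encodes the preference. A stable attribute wins when present, and older layouts are only tried as a last resort.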

Another significant challenge is anti-scraping measures such as CAPTCHAs and IP blocking. Common countermeasures include rotating IP addresses through proxies or using CAPTCHA-solving services. These techniques can help keep a scraping operation stable, but they should be weighed against the site’s terms of service and the ethical considerations discussed earlier.

The Importance of Data Cleaning After Scraping

Once you have scraped data, the next crucial step is cleaning and preparing it for analysis. Scraped data is often messy and unstructured, containing duplicates, inconsistent formats, or irrelevant information. A library like Pandas in Python streamlines this work. You can filter out irrelevant rows, drop duplicates, map values to consistent forms, and convert columns to the right data types.

Additionally, creating a consistent format for your data, such as converting dates into a standard format or normalizing text fields, will facilitate smoother analysis and deeper insights. By investing time in data cleaning, you ensure that your scraping efforts yield high-quality results that are ready for further analysis or business intelligence applications.
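Here is a short cleaning sketch with pandas, assuming pandas is installed. The rows, the column names, and the two date formats in `DATE_FORMATS` are invented for illustration. A real dataset would need its own list of formats and rules.

```python
from datetime import datetime

import pandas as pd

# Assumed date formats seen on the (hypothetical) source site.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")

# Hypothetical scraped rows: a duplicate, stray whitespace, mixed casing,
# formatted price strings, mixed date formats, and a row with no name.
raw = pd.DataFrame(
    {
        "name": ["  Desk Lamp", "desk lamp", "Office Chair ", None],
        "price": ["$24.99", "$24.99", "$1,149.00", "$5.00"],
        "scraped_on": ["2024-03-01", "2024-03-01", "03/02/2024", "2024-03-03"],
    }
)


def to_iso_date(value):
    """Convert a date string in any known format to YYYY-MM-DD, else None."""
    if not isinstance(value, str):
        return None
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    return None  # unknown format: leave for manual review


def clean(df: pd.DataFrame) -> pd.DataFrame:
    out = df.copy()
    # Normalise text: trim whitespace and apply consistent title case.
    out["name"] = out["name"].str.strip().str.title()
    # Strip "$" and thousands separators, then convert to numbers.
    out["price"] = out["price"].str.replace(r"[$,]", "", regex=True).astype(float)
    # Convert every date to one standard ISO format.
    out["scraped_on"] = out["scraped_on"].map(to_iso_date)
    # Drop rows without a name, then rows that are now exact duplicates.
    return out.dropna(subset=["name"]).drop_duplicates().reset_index(drop=True)
```

Normalising the text before calling `drop_duplicates` matters here. "  Desk Lamp" and "desk lamp" only become identical rows after stripping and title-casing.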

Exploring the Future of Web Scraping Technology

As the field of web scraping evolves, innovative technologies continue to emerge, enhancing the efficiency and capabilities of data extraction operations. One promising development is the use of artificial intelligence (AI) and machine learning algorithms to automate and improve the scraping process. These technologies can make the parsing of web data more intelligent and help scrapers adapt to changes in page structure with less manual intervention.

Moreover, advancements in cloud computing offer scalable scraping solutions, allowing businesses to harness vast amounts of data without the limitations of local infrastructure. Cloud-based web scraping services can provide robust support for high-volume data collection, managing tasks seamlessly and reducing the computational burden on individual machines.

Integrating Ethical Practices in Web Scraping Strategies

Integrating ethical practices into your web scraping strategies lays the groundwork for sustainable data collection efforts. This involves not only respecting the rules set by websites but also considering the broader consequences of your scraping efforts. Being transparent about your intentions and ensuring your data use aligns with ethical standards fosters goodwill between scrapers and businesses, ultimately paving the way for innovation.

Also, maintaining a dialogue with the web community can enhance your approach to ethical scraping. Following industry leaders and participating in forums can help keep you informed on best practices, emerging trends, and even legal considerations surrounding web scraping. By aligning your practices with community standards, you create a responsible yet effective scraping strategy.

Frequently Asked Questions

How do you scrape a website effectively?

To scrape a website effectively, begin by identifying the HTML structure you want to extract data from. Use web scraping tools like Beautiful Soup or Scrapy in Python, which allow you to parse HTML and navigate through its elements. It’s crucial to respect the website’s robots.txt file and terms of service to ensure ethical web scraping.
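Python's standard library can check robots.txt rules through `urllib.robotparser`. In this sketch the robots.txt content and the user-agent string are hypothetical. The rules are parsed from a string so the example runs offline. Against a live site you would instead call `set_url()` with the site's robots.txt address and then `read()`.

```python
from urllib import robotparser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "my-research-bot"  # hypothetical bot name


def build_parser(robots_txt: str) -> robotparser.RobotFileParser:
    """Parse robots.txt rules supplied as a string."""
    rp = robotparser.RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


rp = build_parser(ROBOTS_TXT)
# Ask before each request whether this user agent may fetch the URL.
allowed = rp.can_fetch(USER_AGENT, "https://example.com/products/1")
blocked = not rp.can_fetch(USER_AGENT, "https://example.com/private/data")
# Honour a Crawl-delay directive if the site declares one (None otherwise).
delay = rp.crawl_delay(USER_AGENT)
```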

What are the best web scraping tools available?

Some of the best web scraping tools include Beautiful Soup, Scrapy, Selenium, and Octoparse. Each of these tools has unique features that cater to different scraping needs, such as handling dynamic content or providing a user-friendly interface for data extraction.

Can you provide an HTML scraping tutorial?

Certainly! An HTML scraping tutorial involves using a programming language like Python with libraries such as Beautiful Soup or lxml. Start by sending a request to the webpage to retrieve its HTML content, then parse the HTML structure to extract the desired data elements. You can find detailed guides online that walk you through the entire process, step by step.
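The request-then-parse workflow can be sketched with two tools. The standard library's `urllib.request` handles the download, and BeautifulSoup (assumed installed) handles the parsing. The user-agent string and the choice of `<h2>` elements as the data to extract are assumptions for illustration. `parse_headlines(fetch_html(url))` combines the two steps, and only `fetch_html` needs network access.

```python
import urllib.request

from bs4 import BeautifulSoup

USER_AGENT = "my-research-bot/0.1"  # hypothetical client identifier


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Step 1: download the page, identifying the client via User-Agent."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # Use the charset the server declares, falling back to UTF-8.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def parse_headlines(html: str) -> list[str]:
    """Step 2: parse the HTML and pull out the text of every <h2>."""
    soup = BeautifulSoup(html, "html.parser")
    return [h2.get_text(strip=True) for h2 in soup.find_all("h2")]
```

Keeping the download and the parsing in separate functions lets you test the parser on saved HTML without hitting the site repeatedly.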

What are data extraction techniques used in web scraping?

Data extraction techniques in web scraping include element selection using CSS selectors or XPath, handling pagination for multi-page scraping, and data cleaning processes to sanitize and structure the collected data. Automating these techniques with tools like Scrapy can vastly improve efficiency.
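XPath selection can be sketched with lxml, assuming it is installed (pip install lxml). BeautifulSoup itself does not evaluate XPath expressions. The table markup and the `rows_via_xpath` helper are hypothetical.

```python
from lxml import html as lxml_html

# Hypothetical page containing a small price table.
SAMPLE = """
<html><body>
<table id="prices">
  <tr><th>Item</th><th>Price</th></tr>
  <tr><td>Desk lamp</td><td>24.99</td></tr>
  <tr><td>Office chair</td><td>149.00</td></tr>
</table>
</body></html>
"""


def rows_via_xpath(page: str) -> list[tuple[str, float]]:
    """Return (item, price) pairs from the table with id="prices"."""
    tree = lxml_html.fromstring(page)
    rows = []
    # Every <tr> in that table that has <td> cells, which skips the header row.
    for tr in tree.xpath('//table[@id="prices"]//tr[td]'):
        item, price = tr.xpath("./td/text()")
        rows.append((item.strip(), float(price)))
    return rows
```

The predicate `[td]` does the filtering inside the XPath expression itself. With CSS selectors, the header row would typically have to be skipped in Python code.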

Is ethical web scraping important, and why?

Yes, ethical web scraping is vital because it means respecting websites’ copyrights and terms of service, as well as applicable data privacy laws. Ethical practice not only prevents legal repercussions but also maintains the integrity of the web scraping community.

| Key Point | Explanation |
| --- | --- |
| Web Scraping Basics | Web scraping involves extracting data from websites. It often requires understanding HTML structure. |
| Tools and Libraries | Popular tools like Beautiful Soup and Scrapy can help in scraping web content efficiently. |
| Access to HTML Code | You need the HTML structure of the page to extract the desired content effectively. |
| Legal Considerations | Always check the website’s terms of service to ensure scraping is allowed. |

Summary

Web scraping is a valuable methodology for extracting information from websites. With the right tools and a basic understanding of HTML, users can efficiently navigate and harvest data from various online sources. It’s essential to be mindful of both technical aspects and legal guidelines surrounding web scraping to avoid potential issues.