Web Scraping Guidance: Techniques for Safe Data Extraction

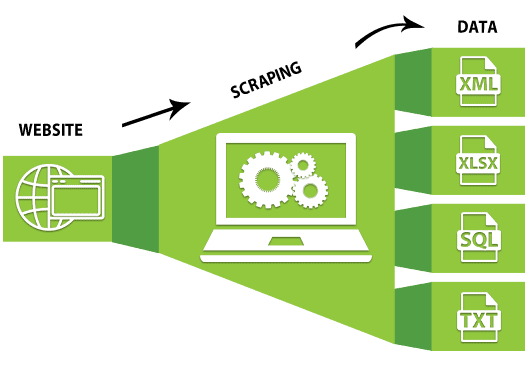

Web scraping guidance is essential for businesses and developers who want to extract valuable data from websites without unnecessary trouble. By learning how to scrape data effectively, you can unlock information that drives decision-making and enhances your projects. With so many web scraping techniques available, it’s crucial to understand the best practices for scraping websites safely and ethically. Our data extraction tutorial will equip you with the knowledge to approach web scraping confidently. Whether you’re a beginner or an experienced developer, mastering these skills can open new doors for data analysis and insights.

In digital data collection, extracting information from websites with automated tools is known as web scraping. It is closely related to web crawling (discovering and following links across pages) and often feeds data mining (analyzing the collected data for patterns), though the three terms are not interchangeable. Understanding the different scraping methods helps developers and analysts gather data that meets their specific needs. Knowing how to interact with websites safely is also key to staying compliant with site policies and keeping your scrapers running smoothly. Join us as we explore how these practices can improve your data gathering.

Understanding Web Scraping Basics

Web scraping is the process of automatically extracting data from websites. It involves making HTTP requests to a server and retrieving the HTML or JSON responses containing the data. Understanding how to scrape data effectively requires knowledge of different web scraping techniques such as parsing HTML, managing sessions, and handling dynamic content. Data extraction can be performed using various programming languages and libraries, with Python’s Beautiful Soup and Scrapy being among the most popular tools for beginners.

To start your web scraping journey, familiarize yourself with the structure of the target website. Use browser developer tools to inspect the HTML elements that contain the data you want to scrape. This foundational knowledge will help you select web scraping techniques that match the complexity of the site you’re targeting. Additionally, make sure you respect the site’s terms of service, as violating them can get your IP address or account blocked.

Web Scraping Guidance: Keeping It Safe and Legal

When scraping websites, it’s crucial to follow ethical guidelines to avoid legal repercussions and to avoid degrading the site for its regular users. A key first step is to check the site’s robots.txt file, which states which paths automated clients may and may not access. Throttling your requests so that you do not overwhelm the server, and changing your IP address if necessary, can also help you scrape websites safely.
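As a rough illustration of the robots.txt check described above, the sketch below uses Python’s standard `urllib.robotparser` module. The robots.txt content, the `example.com` URLs, and the `example-scraper` user agent are all hypothetical; a real script would download the file from the target site instead of parsing an inline string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-scraper"  # hypothetical user agent name


def build_parser(robots_txt: str) -> RobotFileParser:
    """Build a robots.txt parser from the file's text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# Ask whether this user agent may fetch each URL.
allowed = parser.can_fetch(USER_AGENT, "https://example.com/products/1")
blocked = parser.can_fetch(USER_AGENT, "https://example.com/private/report")

# Crawl-delay is a non-standard but common directive; None if absent.
delay = parser.crawl_delay(USER_AGENT)

print(allowed, blocked, delay)
```

A scraper could call `can_fetch` before every request and use the crawl delay, when one is declared, as the minimum pause between requests.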

Moreover, consider the legality of your activities. Some websites have strict terms of service that prohibit unauthorized data scraping. Understanding data privacy legislation, such as the EU’s General Data Protection Regulation (GDPR), is paramount when the data includes personal information. Always attribute the source of the data when sharing or using it, and look for public APIs as an alternative to direct scraping, as they often provide a legal and structured way to access data.

Popular Web Scraping Techniques

There are several web scraping techniques used by developers, depending on the complexity of the data being extracted. The most basic technique involves simple HTTP requests to get the webpage’s HTML source, followed by HTML parsing to locate and extract data. More advanced techniques include using headless browsers, which simulate user actions and can scrape websites that rely heavily on JavaScript for rendering content.

Another important technique is to call the endpoints behind a page’s AJAX requests directly and retrieve the data as JSON, which is often more straightforward than parsing HTML. Between them, tools like Scrapy (a crawling framework suited to mostly static pages) and Puppeteer (a headless-browser automation library) cover both static and dynamic content, making them valuable for projects that require complex data extraction. When choosing a technique, consider the website’s architecture and the type of data you need, as this will influence your scraping strategy.

Data Extraction Tutorial: Step by Step

To extract data from a website effectively, begin by identifying your target data and the webpages where it’s located. Create a plan outlining the data points you want to collect and assess the site’s structure. Next, install the libraries you need, such as Beautiful Soup for HTML parsing and Requests for making HTTP calls. After you set up your environment, write a basic script that makes a request to the desired URL and retrieves the HTML content.
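A basic fetch script might look like the sketch below. To stay dependency-free it uses the standard library’s `urllib.request` as a stand-in for Requests; the user agent string and the `fetch_html` and `build_request` helper names are assumptions made for illustration.

```python
import urllib.request

# Hypothetical user agent; identifying your scraper is considered good practice.
USER_AGENT = "example-scraper/0.1"


def build_request(url: str) -> urllib.request.Request:
    """Create a GET request that carries an identifying User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page and decode it using the charset the server reports."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


# Example usage (performs a real network request, so it is left commented out):
# html = fetch_html("https://example.com/")
```

Setting a timeout keeps the script from hanging indefinitely if the server stops responding.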

Once you have the HTML, the next step is to parse the content and find the relevant data using CSS selectors or XPath expressions. Store the extracted data in a convenient format, such as CSV or JSON, for further analysis. Finally, consider automating your script if you need to collect data regularly, but remember to monitor for changes in the site’s structure and to keep respecting the site’s robots.txt rules and rate limits.
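The parse-and-store steps can be sketched as follows. This version swaps in the standard library’s `html.parser` and simple class-name matching in place of Beautiful Soup and CSS selectors, so that it runs without extra installs. The sample HTML, the `product`, `name`, and `price` class names, and the helper names are all hypothetical.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page fragment standing in for a downloaded HTML document.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">19.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collect name and price text from <li class="product"> elements."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li" and "product" in classes:
            self.rows.append({"name": "", "price": ""})
        elif tag == "span" and self.rows:
            for field in ("name", "price"):
                if field in classes:
                    self._field = field

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

    def handle_data(self, data):
        if self._field and self.rows:
            self.rows[-1][self._field] += data.strip()


def extract_products(html: str) -> list:
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.rows


def to_csv(rows: list) -> str:
    """Serialize the extracted rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


products = extract_products(SAMPLE_HTML)
csv_text = to_csv(products)
print(csv_text)
```

With Beautiful Soup installed, the parser class would typically be replaced by a few selector calls, but the overall flow of extracting rows and then writing them out stays the same.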

Handling Dynamic Content in Web Scraping

Dynamic content loaded via JavaScript poses a unique challenge in web scraping. Traditional methods may fail to capture data from such sites if the content doesn’t appear in the initial HTML response. To effectively scrape these types of websites, developers often employ tools like Selenium or Playwright, which can simulate a real user by rendering the JavaScript and allowing access to the fully loaded content.

Another approach is observing the network requests made by the site. Many sites load data via APIs that can be called directly to fetch JSON responses. By identifying these requests in the browser’s developer tools, it’s possible to bypass the difficult HTML parsing and retrieve clean, structured data. Mastering these techniques is crucial for achieving high success rates in web scraping projects involving dynamic sites.
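Once such an endpoint has been found in the developer tools, consuming it could look roughly like this. The response shape (an `items` list with `name` and `price` fields) and the helper names are assumptions for illustration; real endpoints will differ and may require extra headers or authentication.

```python
import json
import urllib.request


def fetch_json_text(url: str, timeout: float = 10.0) -> str:
    """Request a JSON endpoint and return the raw response text."""
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


def parse_items(json_text: str) -> list:
    """Turn the (assumed) payload into a list of (name, price) tuples."""
    payload = json.loads(json_text)
    return [(item["name"], float(item["price"])) for item in payload.get("items", [])]


# Hypothetical response body, inlined so the example runs offline.
SAMPLE_RESPONSE = (
    '{"items": [{"name": "Widget", "price": "9.99"},'
    ' {"name": "Gadget", "price": "19.50"}]}'
)

items = parse_items(SAMPLE_RESPONSE)
print(items)
```

Because the data arrives already structured, there is no HTML parsing step, which usually makes this approach less fragile when the page layout changes.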

Using Proxies in Web Scraping

When conducting web scraping, the use of proxies can help prevent bans and IP blocking by the target website. Proxies act as intermediaries between your scraping script and the website, allowing you to mask your actual IP address. Rotating proxies, in particular, can be effective in distributing requests across several addresses and reducing the likelihood of triggering anti-scraping measures.

However, it’s essential to choose reliable proxy providers, as some may not be effective against more robust anti-bot technologies. Additionally, consider the performance and speed of your proxies, since this can affect the overall efficiency of your scraping operations. By integrating proxies into your web scraping strategy, you can achieve a more sustainable and less restricted data extraction process.
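A minimal sketch of proxy rotation, assuming you already have a list of proxy URLs from a provider, is shown below. The proxy addresses and helper names are placeholders, and the round-robin strategy is only one of several possible rotation schemes.

```python
import itertools
import urllib.request

# Placeholder proxy addresses; substitute the ones your provider gives you.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]

# cycle() yields the proxies in order and starts over at the end of the list.
_proxy_cycle = itertools.cycle(PROXIES)


def next_proxy() -> str:
    """Return the next proxy URL in round-robin order."""
    return next(_proxy_cycle)


def build_opener_for(proxy_url: str) -> urllib.request.OpenerDirector:
    """Create an opener that routes HTTP and HTTPS traffic through one proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Show the rotation order for four consecutive requests.
chosen = [next_proxy() for _ in range(4)]
print(chosen)

# Example usage (performs a real network request, so it is left commented out):
# opener = build_opener_for(next_proxy())
# html = opener.open("https://example.com/", timeout=10).read()
```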

Tools and Libraries for Effective Web Scraping

Several tools and libraries are available to simplify the web scraping process. Python is widely regarded for its versatility, with libraries such as Beautiful Soup, Scrapy, and Requests being popular choices among developers. Beautiful Soup allows for easier parsing of HTML documents, while Scrapy is an all-in-one framework designed to handle large scraping tasks efficiently. Requests is excellent for making simple HTTP requests.

Additionally, for users who prefer a more visual approach, tools like Octoparse and ParseHub provide user-friendly interfaces that allow users to extract data without extensive programming knowledge. These tools enable point-and-click scraping and often come with built-in features to handle pagination, data cleansing, and exporting to various file formats. Selecting the right tool will depend on your specific needs, including complexity, data volume, and technical expertise.

Ethics and Best Practices in Web Scraping

While web scraping can be incredibly useful for data collection, it comes with ethical considerations that must not be ignored. Always conduct a thorough analysis of the website’s terms and conditions to ensure the legality of your scraping activities. Consider reaching out to website owners for permission to scrape their sites, which can foster goodwill and possibly lead to collaboration opportunities.

Implementing best practices in your scraping endeavors, like respecting rate limits and avoiding redundant requests, helps maintain the health of the targeted sites. Ethical scraping not only protects you from legal backlash but also mitigates the risk of overloading servers and disrupting the user experience. Adopting a responsible approach to web scraping should be a fundamental aspect of any scraper’s methodology.
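One way to combine rate limiting with the avoidance of redundant requests is a small wrapper like the sketch below. The `PoliteFetcher` class is hypothetical, and the in-memory cache with no expiry is a simplifying assumption. The actual download function is passed in, so the wrapper works with any HTTP client.

```python
import time


class PoliteFetcher:
    """Wrap a fetch function with a minimum delay between requests and a cache."""

    def __init__(self, fetch, min_interval=1.0, clock=time.monotonic, sleep=time.sleep):
        self._fetch = fetch                # callable: url -> response body
        self._min_interval = min_interval  # seconds to leave between requests
        self._clock = clock
        self._sleep = sleep
        self._last_request = None
        self._cache = {}

    def get(self, url):
        # Serve repeated URLs from memory instead of hitting the site again.
        if url in self._cache:
            return self._cache[url]
        # Wait until at least min_interval has passed since the last request.
        if self._last_request is not None:
            wait = self._min_interval - (self._clock() - self._last_request)
            if wait > 0:
                self._sleep(wait)
        self._last_request = self._clock()
        body = self._fetch(url)
        self._cache[url] = body
        return body


# Example usage with a stand-in fetch function (no network access needed).
fetcher = PoliteFetcher(lambda url: f"<html>{url}</html>", min_interval=0.1)
first = fetcher.get("https://example.com/a")
second = fetcher.get("https://example.com/a")  # served from the cache
```

For long-running jobs, a cache with an expiry time would be a natural extension so that stale pages are eventually refreshed.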

Troubleshooting Common Web Scraping Issues

Even in a well-planned scraping project, issues may still arise. Common problems include encountering CAPTCHAs that block automated requests, receiving 404 errors when URLs change, or being served outdated content. To tackle CAPTCHAs, developers may have to incorporate human-in-the-loop systems or leverage CAPTCHA-solving services.

Furthermore, if you notice that a site structure has changed, leading to broken scraping scripts, a proactive script maintenance strategy can help. Regularly reviewing and updating your scripts to account for structural changes is important in ensuring the integrity of your data extraction. Keeping logs of errors and request responses can also provide valuable insights when debugging your scraping activities.
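The logging advice above can be paired with a simple retry loop, sketched below. The retry count, the exponential backoff delays, and the `fetch_with_retries` name are illustrative assumptions rather than recommended values. A 404 is treated as permanent because retrying a missing URL rarely helps.

```python
import logging
import time
import urllib.error

logger = logging.getLogger("scraper")


def fetch_with_retries(fetch, url, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), logging failures and retrying with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except urllib.error.HTTPError as error:
            # HTTPError must be handled first: it is a subclass of URLError.
            if error.code == 404:
                logger.error("Not found, giving up: %s", url)
                raise
            logger.warning("HTTP %s on attempt %d for %s", error.code, attempt, url)
        except urllib.error.URLError as error:
            logger.warning("Network error on attempt %d for %s: %s",
                           attempt, url, error.reason)
        if attempt < max_attempts:
            # Wait 1x, 2x, 4x, ... the base delay before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError(f"All {max_attempts} attempts failed for {url}")
```

Here `fetch` is any function that takes a URL and returns the response body, raising `urllib.error` exceptions on failure. Calling `logging.basicConfig(filename="scraper.log", level=logging.INFO)` before running would write these records to a log file for later debugging.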

Frequently Asked Questions

What is web scraping guidance and why is it important?

Web scraping guidance refers to the strategies and techniques used to extract data from websites effectively. It is essential because it helps individuals and businesses gather information from various online sources to make informed decisions.

How can I scrape data from websites safely while following best practices?

To scrape data safely, adhere to a website’s terms of service, respect robots.txt files, and avoid overloading servers with requests. Utilize web scraping tools that implement rate-limiting features and handle exceptions gracefully to ensure compliance and reduce risks.

What are some effective web scraping techniques for beginners?

Effective web scraping techniques for beginners include using Python libraries like Beautiful Soup or Scrapy, employing browser automation tools like Selenium, and using APIs to access structured data more easily. Starting with smaller projects can help build your skills.

Can you provide a brief overview of a data extraction tutorial?

A data extraction tutorial typically covers the following steps: selecting target web pages, identifying the data elements to extract using HTML structure, utilizing a web scraping tool or code library, and then exporting the retrieved data into a usable format like CSV or JSON.

What tools are recommended for learning how to scrape data efficiently?

Recommended tools for learning how to scrape data include Python libraries such as Beautiful Soup for parsing HTML, Scrapy for comprehensive scraping projects, and Selenium for dynamic website scraping. Each tool has its strengths depending on your specific needs.

Is there a legal way to perform web scraping without violating site policies?

Yes, to perform web scraping legally, always check the website’s terms of use and robots.txt file for permissions. If allowed, focus on extracting publicly available data while respecting usage limitations to avoid potential legal issues.

How do I handle websites that use JavaScript for dynamic content when scraping?

When dealing with websites that employ JavaScript, you can use browser automation tools like Selenium that render JavaScript like a regular browser. Alternatively, you can try API endpoints or look for data in the page’s source that does not rely on JavaScript.

What precautions should I take when scraping websites to avoid IP bans?

To avoid IP bans while scraping, use rotating proxies, implement delays between requests, and limit the number of requests sent to a single site in a given timeframe. These practices help mimic human browsing behavior and reduce the risk of detection.

What are the best practices for organizing and storing scraped data?

Best practices for organizing scraped data include categorizing it into relevant folders, using descriptive filenames, and storing in structured formats like CSV or database systems. This organization facilitates easy access and analysis later.

Where can I find resources to improve my web scraping skills and knowledge?

Resources to improve your web scraping skills include online courses on platforms like Coursera or Udemy, blogs that discuss web scraping case studies, GitHub repositories showcasing scraping projects, and forums such as Stack Overflow for community support.

| Key Points |
|---|
| Web scraping involves extracting data from websites, which can be useful for various applications. |
| Access to external sites may be restricted, so it’s important to understand limitations. |
| Guidance on web scraping techniques can help individuals learn how to scrape efficiently and ethically. |

Summary

Web scraping guidance is essential for anyone looking to gather data from the internet effectively. While some websites, such as NYTimes.com, may restrict access, there are many methods and best practices for scraping data responsibly. Understanding these techniques can empower users to extract valuable information while adhering to legal and ethical standards.