Web Scraping: Essential Practices and Tools to Know

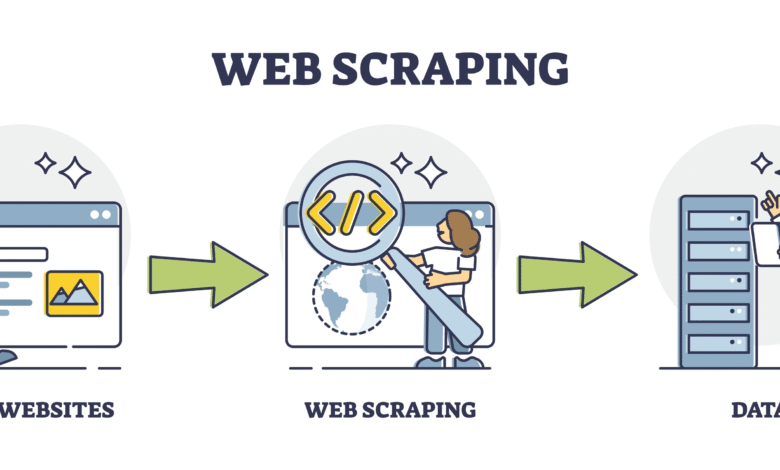

Web scraping is a powerful technique for efficiently extracting large amounts of data from websites. With web scraping tools, individuals and businesses can gather valuable information, from market trends to competitor analysis. However, it’s essential to scrape ethically, respecting website terms of service to avoid legal repercussions. Understanding web scraping best practices will help you extract data effectively while minimizing risks. Combining techniques, such as automated HTML parsing and official APIs where they exist, can significantly strengthen your data-driven strategies.

Collecting data from web pages, often called web harvesting or web data extraction, plays a crucial role in today’s digital landscape. It lets organizations draw insights from online content that inform decision-making and innovation. Doing this well requires knowing which methods and tools suit a given task. As with any data-gathering technique, adopting ethical standards is vital to ensure compliance and respect for data ownership. Understanding the best practices in this area will give you the strategies needed for successful data acquisition.

Understanding Ethical Web Scraping Practices

Ethical web scraping involves the responsible and legal gathering of data from websites while respecting their terms of service. It’s crucial for web scrapers to understand the legal implications and to seek permission when necessary. This approach not only protects the scraper from potential legal actions but also ensures that the website owners feel their rights are respected. Utilizing ethical practices fosters a collaborative digital environment, which can lead to more reliable data sharing in the future.

In addition to adhering to legal guidelines, ethical web scraping means using techniques that minimize the impact on the target website’s performance. For instance, adding time delays between requests keeps the scraper from overwhelming the server, which could slow the site down or take it offline, and also makes it less likely that the scraper’s IP address gets blocked. Furthermore, ethical web scraping often involves identifying the proper channels to access data, such as using public APIs where available, rather than resorting to direct scraping methods that could be seen as intrusive.
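The advice about spacing out requests can be sketched as a small throttle that enforces a minimum delay between consecutive requests to the same host. This is a minimal sketch under stated assumptions: the class name `PoliteThrottle`, the 2-second default delay, and the injectable `clock` and `sleep` functions (which let the logic be tested without real waiting) are hypothetical choices, not part of any library.

```python
import time
from typing import Callable, Dict
from urllib.parse import urlparse


class PoliteThrottle:
    """Hypothetical helper: enforce a minimum delay between requests to the same host."""

    def __init__(
        self,
        min_delay: float = 2.0,  # assumed delay in seconds; tune per site
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.min_delay = min_delay
        self._clock = clock
        self._sleep = sleep
        self._last_request: Dict[str, float] = {}  # host -> time of its last request

    def wait(self, url: str) -> float:
        """Block until it is polite to request `url`; return the seconds slept."""
        host = urlparse(url).netloc
        now = self._clock()
        last = self._last_request.get(host)
        waited = 0.0
        if last is not None:
            remaining = self.min_delay - (now - last)
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        # Record when this request is allowed to go out.
        self._last_request[host] = self._clock()
        return waited
```

In a crawl loop, call `throttle.wait(url)` immediately before each request. Tracking delays per host means a scraper visiting several sites only slows down for the site it just hit.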

Common Web Scraping Tools for Data Extraction

Web scraping tools have become vital assets for data extraction, making it quick and efficient to gather information from many sources. In the Python ecosystem, Beautiful Soup (a library for parsing HTML and XML) and Scrapy (a complete crawling framework) are widely used to navigate HTML structures and pull out specific data. These tools provide abstractions that make it simpler to extract information without requiring in-depth knowledge of web protocols.
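To illustrate what "navigating HTML structures to pull specific data" looks like, here is a dependency-free sketch that uses Python's standard-library `html.parser` module rather than Beautiful Soup. The sample markup and the `name`/`price` class names are hypothetical; a real page would need its own selectors.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class PriceExtractor(HTMLParser):
    """Collect (name, price) pairs from elements with hypothetical class names."""

    def __init__(self) -> None:
        super().__init__()
        self.items: List[Tuple[str, str]] = []
        self._field: Optional[str] = None  # field the current text belongs to
        self._name = ""

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        classes = (dict(attrs).get("class") or "").split()
        if "name" in classes:
            self._field = "name"
        elif "price" in classes:
            self._field = "price"

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        text = data.strip()
        if not text or self._field is None:
            return
        if self._field == "name":
            self._name = text
        else:  # a price closes out the current product
            self.items.append((self._name, text))


def extract_prices(html: str) -> List[Tuple[str, str]]:
    parser = PriceExtractor()
    parser.feed(html)
    parser.close()
    return parser.items


SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span> <span class="price">$9.99</span></li>
  <li class="product"><span class="name">Gadget</span> <span class="price">$24.50</span></li>
</ul>
"""

if __name__ == "__main__":
    print(extract_prices(SAMPLE_HTML))  # [('Widget', '$9.99'), ('Gadget', '$24.50')]
```

Beautiful Soup's `find_all` and `select` methods express the same lookup more concisely, which is a large part of why it is popular; the event-driven parser above simply avoids a third-party dependency.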

Moreover, user-friendly tools like Octoparse and ParseHub cater to non-programmers, offering point-and-click interfaces that streamline data extraction tasks. By utilizing these platforms, users can focus on collecting valuable data without getting bogged down by coding complexities. This accessibility expands the options for businesses looking to analyze market trends and customer feedback, highlighting the critical role that web scraping plays in modern data-driven decision-making.

Best Practices for Effective Web Scraping Techniques

To ensure successful web scraping, following best practices is essential. This includes respecting the website’s robots.txt file, which indicates which parts of the site crawlers may and may not access. Scrapers should always check this file before they start, both to honor the site owner’s wishes and to reduce the risk of legal trouble. Additionally, keeping the request frequency low and avoiding traffic patterns that trigger anti-bot detection help preserve access to the websites being scraped.
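Checking robots.txt before scraping can be done with Python's standard-library `urllib.robotparser`. The sketch below parses robots.txt text you already have, so it runs offline; against a live site you would instead call `set_url(...)` and `read()` on the parser. The bot name, URLs, and rules are hypothetical.

```python
from typing import Optional, Tuple
from urllib.robotparser import RobotFileParser


def check_robots(robots_txt: str, user_agent: str, url: str) -> Tuple[bool, Optional[int]]:
    """Return (allowed, crawl_delay) for `url` according to the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    allowed = parser.can_fetch(user_agent, url)
    delay = parser.crawl_delay(user_agent)  # None if no Crawl-delay applies
    return allowed, delay


# Hypothetical robots.txt for example.com
ROBOTS_TXT = """User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

if __name__ == "__main__":
    print(check_robots(ROBOTS_TXT, "ExampleBot", "https://example.com/private/data"))
    print(check_robots(ROBOTS_TXT, "ExampleBot", "https://example.com/public/page"))
```

A `Crawl-delay` value, when present, is a natural input for the delay between requests.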

Another best practice is to structure the collected data appropriately for storage and analysis. Whether the end goal is a machine learning dataset or market analysis, a well-defined schema makes the extracted data much easier to use. Regularly updating scrapers in response to changes on target websites is equally important, as site layouts and technologies evolve over time.
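A well-defined schema can be as simple as a dataclass whose fields also define the columns of the output file. This is a minimal sketch: the `ProductRecord` fields and the choice of CSV as the storage format are assumptions for illustration.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import Iterable, TextIO


@dataclass
class ProductRecord:
    """Hypothetical schema for one scraped product listing."""
    name: str
    price: float
    currency: str
    source_url: str
    scraped_at: str  # ISO 8601 timestamp, e.g. "2024-01-31T12:00:00Z"


def write_records(records: Iterable[ProductRecord], out: TextIO) -> None:
    """Write records as CSV, with a header row derived from the schema's field names."""
    writer = csv.DictWriter(out, fieldnames=[f.name for f in fields(ProductRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


if __name__ == "__main__":
    buf = io.StringIO()
    write_records(
        [ProductRecord("Widget", 9.99, "USD", "https://example.com/w", "2024-01-31T12:00:00Z")],
        buf,
    )
    print(buf.getvalue())
```

Keeping the source URL and a timestamp on every row makes it possible to trace each value back to the page and moment it was collected.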

The Importance of Legality in Web Scraping

The legality of web scraping is a critical discussion in today’s digital landscape, as it directly influences how businesses and researchers approach data extraction. Numerous high-profile legal cases have established precedents on what constitutes lawful versus unlawful scraping practices. Companies must be vigilant in ensuring compliance with copyright laws and platform terms of service to mitigate the risk of legal repercussions.

Additionally, the evolving regulations concerning data privacy, such as the GDPR in Europe, have introduced further complexities. Businesses, therefore, need to stay informed about legal standards surrounding data collection and privacy to avoid unintentional breaches. This not only protects them legally but also builds ethical credibility in their data practices, contributing positively to their brand image.

Leveraging Web Scraping for Market Analysis

Web scraping has become a fundamental technique in market analysis, allowing businesses to gather insights about competitors, customer preferences, and emerging market trends. By extracting data from various sources, businesses can make informed decisions regarding product development and marketing strategies. For example, analyzing competitor pricing through scraped data helps companies adjust their strategies to remain competitive.
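As a small illustration of competitor price analysis, the sketch below compares your own prices with scraped competitor prices and reports the percentage gap per product. It assumes the prices have already been scraped, cleaned into numbers, and converted to the same currency; the product names and values are hypothetical.

```python
from typing import Dict


def price_gaps(own: Dict[str, float], competitor: Dict[str, float]) -> Dict[str, float]:
    """Percent by which our price is above (positive) or below (negative) the competitor's.

    Only products present in both price lists are compared.
    """
    gaps = {}
    for product, our_price in own.items():
        their_price = competitor.get(product)
        if their_price:  # skip products the competitor lacks (or a zero price)
            gaps[product] = round((our_price - their_price) / their_price * 100, 1)
    return gaps


if __name__ == "__main__":
    ours = {"widget": 10.0, "gadget": 25.0, "gizmo": 5.0}
    theirs = {"widget": 8.0, "gadget": 25.0}
    print(price_gaps(ours, theirs))  # {'widget': 25.0, 'gadget': 0.0}
```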

Furthermore, web scraping aids in gathering customer feedback from social media and review platforms, offering businesses a comprehensive view of consumer sentiment. This data can be vital for shaping business strategies, improving customer experiences, and enhancing product features based on real-world feedback. Therefore, investing in effective web scraping techniques can significantly enhance a company’s market adaptability and innovation.

Web Scraping and Data Visualization

Data visualization plays a pivotal role in extracting value from scraped data. After gathering extensive datasets, transforming them into visual formats such as graphs and charts makes trends and patterns easier to understand. With tools like Tableau or Power BI, organizations can build dashboards that tell stories through data collected by web scraping.

Moreover, leveraging visualization techniques can lead to more proactive decision-making. For instance, visual representations of competitor pricing or market changes allow businesses to quickly grasp critical shifts and respond effectively. Thus, integrating web scraping with data visualization not only amplifies data’s analytical capabilities but also maximizes its strategic impact across organizations.

Challenges in Web Scraping and Solutions

While web scraping offers vast benefits, it also presents several challenges that practitioners must navigate. One common issue is website changes that disrupt scraping scripts, as modifications to page layout or structure can lead to data extraction failures. To combat this issue, scrapers should continuously monitor and update their extraction processes to adapt to any alterations in the source website.
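One way to notice layout changes early is to validate every scraping run: if many records suddenly come back with empty fields, the page structure has probably changed. This is a hedged sketch; the required field names and the 50% threshold are hypothetical and would need tuning for a real scraper.

```python
from typing import Dict, Iterable, List, Optional

REQUIRED_FIELDS = ("name", "price")  # hypothetical fields every record must have


def find_broken_records(records: Iterable[Dict[str, Optional[str]]]) -> List[int]:
    """Return the indexes of records missing any required field."""
    broken = []
    for i, record in enumerate(records):
        if any(not record.get(field) for field in REQUIRED_FIELDS):
            broken.append(i)
    return broken


def layout_probably_changed(
    records: Iterable[Dict[str, Optional[str]]], threshold: float = 0.5
) -> bool:
    """Flag a run when more than `threshold` of its records are incomplete."""
    records = list(records)
    if not records:
        return True  # an empty result is itself a warning sign
    return len(find_broken_records(records)) / len(records) > threshold
```

A check like this can run after each scrape and raise an alert, so the extraction logic is updated before bad data reaches downstream analysis.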

Additionally, many websites implement anti-scraping measures, such as CAPTCHAs and rate limiting, to deter automated scraping. Some solutions include employing proxies to disguise the origin of requests and using CAPTCHA-solving services when necessary. This approach not only protects the scraper’s IP address but also increases the success rate of data extraction, allowing for a more seamless scraping experience.

Future Trends in Web Scraping Technologies

As technology continues to evolve, so too do the techniques and tools available for web scraping. With advancements in artificial intelligence and machine learning, future web scraping technologies are expected to be more automated and efficient. These innovations could streamline the data extraction process further, allowing users to gather vast amounts of information with minimal manual intervention.

Moreover, the rise of cloud-based solutions for scraping will likely enhance accessibility and scalability for businesses of all sizes. Cloud architectures allow companies to execute scraping tasks without needing to invest heavily in their own IT infrastructure. As these technologies develop, they will come with new ethical considerations and regulatory requirements, reinforcing the importance of adhering to best practices in web scraping.

Web Scraping Resources for Beginners

For those new to web scraping, plenty of resources are available to help them get started. Online tutorials, such as video courses on platforms like Udemy or Coursera, provide a comprehensive introduction to various web scraping tools and techniques. These resources often include hands-on projects that let learners practice their skills in real-world scenarios.

Additionally, the community surrounding web scraping is quite active, with forums and discussion groups available on platforms such as Reddit or Stack Overflow. Engaging with these communities can provide valuable insights, troubleshooting tips, and peer support as novices embark on their web scraping journeys. As individuals build their expertise, they’ll be better equipped to leverage web scraping effectively in professional settings.

Frequently Asked Questions

What is web scraping?

Web scraping is a technique used to extract large amounts of data from websites. This process involves fetching a web page and extracting relevant information, typically through automation using web scraping tools.

What are the ethical considerations in web scraping?

Ethical web scraping involves respecting the website’s terms of service, ensuring that data is collected without violating privacy, and minimizing the impact on the target websites’ performance during the scraping process.

What are some popular web scraping tools?

Some popular web scraping tools include Beautiful Soup, Scrapy, and Selenium. These tools help automate data extraction and simplify the process of web scraping.

What are the best practices for web scraping?

Web scraping best practices include reviewing the website’s robots.txt file, adhering to rate limits to avoid overloading servers, using user-agent headers appropriately, and ensuring that data scraping adheres to legal guidelines.
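One reading of "using user-agent headers appropriately" is to identify your scraper honestly, with a name and a way to contact you. The sketch below builds, but does not send, such a request with Python's standard-library `urllib.request`; the bot name and info URL are hypothetical placeholders.

```python
from urllib.request import Request

# Hypothetical bot name and contact URL; replace with your own.
USER_AGENT = "ExampleResearchBot/1.0 (+https://example.com/bot-info)"


def build_request(url: str) -> Request:
    """Prepare a GET request that identifies the scraper in its User-Agent header."""
    return Request(url, headers={"User-Agent": USER_AGENT})
```

Passing the result to `urllib.request.urlopen(build_request(url), timeout=10)` would send it. Note that `urllib` normalizes the stored header name to `User-agent`.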

How can data extraction be performed using web scraping?

Data extraction through web scraping can be performed using programming languages like Python, along with libraries such as Beautiful Soup or Scrapy, to automate data retrieval and processing from web pages.

What are some common web scraping techniques?

Common web scraping techniques include HTML parsing, DOM parsing, and API scraping. These methods vary in complexity and can be chosen based on the structure of the target website and the data required.

Is web scraping legal?

The legality of web scraping can vary by jurisdiction and depends on the website’s terms of service. Always review these terms and consider ethical guidelines to ensure compliant web scraping practices.

What are the risks associated with web scraping?

Risks of web scraping include potential legal issues, IP blocking by target servers, and the possibility of misusing the extracted data. It’s crucial to implement ethical web scraping practices to mitigate these risks.

Can web scraping be used for competitive analysis?

Yes, web scraping can be used for competitive analysis by extracting data on pricing, product offerings, and market trends from competitor websites. However, it is essential to follow ethical and legal web scraping practices.

How do I choose the right web scraping technique for my project?

Choosing the right web scraping technique depends on factors such as the structure of the target website, the volume of data to be extracted, and your technical skill level. Evaluate each method to find the best fit for your web scraping needs.

| Key Point | Explanation |
|---|---|
| Website Scraping Limitations | Some websites, like the New York Times, have terms of service that restrict scraping. |
| Legal Considerations | Scraping against a website’s policies can lead to legal issues. |

Summary

Web scraping is a powerful technique for extracting data from websites, but it’s essential to be aware of the limitations and legal considerations involved. Respect the terms of service of websites such as the New York Times that restrict scraping, and always make sure you comply with legal standards when scraping.