Web Scraping: A Guide to Ethical Data Extraction

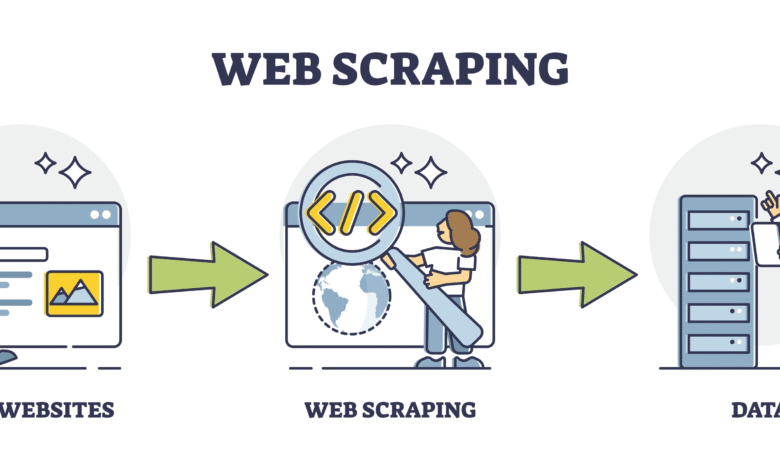

Web scraping is a technique for automatically extracting information from websites. With libraries such as BeautifulSoup, developers can parse a page’s HTML and pull out the content they need. This process, also called data scraping or content extraction, lets users gather insights from the large amount of information available online. While extracting data, however, it’s crucial to scrape ethically: respect the site’s terms of service, honor its robots.txt file, and comply with copyright law. Responsible scraping helps keep your data sources available and avoids placing an unfair burden on the sites you depend on.

Related terms for this practice include data harvesting and web mining. Whatever the name, the goal is to collect and analyze data from multiple sources, surfacing information that would be tedious to gather by hand from individual web pages. HTML parsing libraries such as the widely adopted BeautifulSoup streamline this work by making it straightforward to extract the relevant content. Adhering to ethical standards matters just as much: responsible practices protect intellectual property, keep you compliant with applicable regulations, and foster a healthier online ecosystem.

The Importance of Ethical Web Scraping

Ethical web scraping matters more than ever now that so much data is publicly accessible. It means extracting data from websites while adhering to their terms of service and respecting the rights and privacy of content owners and users. This approach helps maintain a good relationship with site operators and protects proprietary information. Many websites also publish crawling restrictions in a robots.txt file, and abiding by these rules is an essential part of ethical web scraping.
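Python’s standard library can check robots.txt rules before a page is requested. The sketch below uses `urllib.robotparser.RobotFileParser` on an inline, hypothetical robots.txt so it runs without network access. The crawler name and example.com URLs are placeholders, not real services.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content used for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without making a network request."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


def allowed(rp: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if the parsed rules permit user_agent to fetch url."""
    return rp.can_fetch(user_agent, url)


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    agent = "ExampleResearchBot/0.1"  # hypothetical crawler name
    for url in ("https://example.com/articles/1", "https://example.com/private/data"):
        print(url, "->", "allowed" if allowed(rp, agent, url) else "disallowed")
```

Against a live site, you would call `rp.set_url(...)` with the site’s robots.txt URL and then `rp.read()`, instead of calling `parse()` on a string.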

Ethical practices also ensure that data collection does not degrade the website’s performance or its visitors’ experience. This involves measures such as rate limiting, which caps how often you send requests so the server is not overloaded, and making sure the collected data is used responsibly. By following these standards, developers contribute to a fair digital environment while still benefiting from the vast amount of information available on the internet.
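Rate limiting can be as simple as enforcing a minimum gap between requests. The following is a minimal sketch that assumes a single-threaded scraper talking to one site. `PoliteThrottle` is a hypothetical helper name, and the interval is an assumed value you would tune to the site’s guidance.

```python
import time
from typing import Optional


class PoliteThrottle:
    """Enforce a minimum interval (seconds) between consecutive requests.

    Hypothetical helper; min_interval is an assumption to tune per site.
    """

    def __init__(self, min_interval: float) -> None:
        self.min_interval = min_interval
        self._last: Optional[float] = None  # time of the previous request

    def wait(self) -> None:
        """Sleep just long enough that calls are at least min_interval apart."""
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


if __name__ == "__main__":
    throttle = PoliteThrottle(min_interval=1.0)  # assumed: at most one request per second
    for url in ["https://example.com/a", "https://example.com/b"]:  # placeholder URLs
        throttle.wait()
        print("would fetch", url)  # replace with your HTTP client call
```

The example uses `time.monotonic()` rather than wall-clock time so that system clock adjustments cannot shorten or lengthen the pause.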

Frequently Asked Questions

What is web scraping and how is it related to data scraping?

Web scraping is the process of extracting data from websites using automated tools. It falls under the broader category of data scraping, which covers gathering information from many kinds of sources. Web scrapers typically rely on HTML parsing to locate and retrieve the relevant data on a page, such as article text or images.
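For simple jobs, Python’s built-in `html.parser` module can perform this kind of extraction without third-party packages. As an illustration, the sketch below collects image URLs from a hypothetical page and resolves relative paths with `urllib.parse.urljoin`. The sample markup and base URL are made up.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical page markup for illustration.
SAMPLE_HTML = """
<html><body>
  <article><h2>First headline</h2><img src="/img/one.png" alt="one"></article>
  <article><h2>Second headline</h2><img src="https://cdn.example.com/two.jpg"></article>
</body></html>
"""


class ImageCollector(HTMLParser):
    """Collect absolute URLs of <img src=...> elements (standard library only)."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.images = []  # absolute image URLs in document order

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                # Resolve relative paths such as "/img/one.png" against the page URL.
                self.images.append(urljoin(self.base_url, src))


def extract_image_urls(html: str, base_url: str) -> list:
    """Parse html and return the absolute URLs of its images."""
    parser = ImageCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.images


if __name__ == "__main__":
    print(extract_image_urls(SAMPLE_HTML, "https://example.com/news/"))
```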

How does HTML parsing work in web scraping?

HTML parsing in web scraping involves analyzing the structure of a web page’s HTML code to extract desired content. By using libraries like BeautifulSoup in Python, developers can navigate the document tree, locate elements, and retrieve specific data points, making it easier to scrape content efficiently.
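A minimal BeautifulSoup sketch of this workflow follows. It assumes the `beautifulsoup4` package is installed. It also assumes the target page marks up each story as `<article class="story">`, with a link and a `<p class="summary">`. Those class names are hypothetical and will differ on any real site.

```python
from bs4 import BeautifulSoup

# Hypothetical page markup; a real scraper would take this from an HTTP response body.
SAMPLE_HTML = """
<html><body>
  <article class="story">
    <h2><a href="/stories/first.html">First headline</a></h2>
    <p class="summary">A short summary.</p>
  </article>
  <article class="story">
    <h2><a href="/stories/second.html">Second headline</a></h2>
    <p class="summary">Another summary.</p>
  </article>
</body></html>
"""


def extract_stories(html: str) -> list:
    """Return one {title, link, summary} dict per <article class="story">."""
    soup = BeautifulSoup(html, "html.parser")  # built-in parser backend, no lxml needed
    stories = []
    for article in soup.find_all("article", class_="story"):
        link = article.find("a", href=True)
        summary = article.find("p", class_="summary")
        stories.append({
            "title": link.get_text(strip=True) if link else "",
            "link": link["href"] if link else "",
            "summary": summary.get_text(strip=True) if summary else "",
        })
    return stories


if __name__ == "__main__":
    for story in extract_stories(SAMPLE_HTML):
        print(story)
```

Each lookup is guarded, so a story missing its link or summary yields an empty string instead of raising an exception.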

What tools can I use for ethical web scraping?

Common tools include BeautifulSoup (HTML parsing), Scrapy (a crawling framework), and Selenium (browser automation, useful for pages that render content with JavaScript). No tool is ethical by itself: use these tools responsibly by adhering to the website’s terms of service and ethical guidelines, and make sure your scraping does not violate any rules or disrupt the target site.
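In Scrapy, several of these responsible-use behaviors are controlled through project settings. The fragment below uses real Scrapy setting names, but every value is an assumption to adjust for the site you are crawling. The bot name and contact URL are placeholders.

```python
# settings.py (fragment) for a Scrapy project.
# Setting names are Scrapy's; the values are assumptions to tune per site.

# Skip any URL the site's robots.txt disallows.
ROBOTSTXT_OBEY = True

# Base delay (seconds) between requests to the same site; Scrapy randomizes it by default.
DOWNLOAD_DELAY = 2.0

# Let Scrapy adapt the delay to the server's observed response latency.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 2.0
AUTOTHROTTLE_MAX_DELAY = 30.0

# Keep parallel requests to any single site low.
CONCURRENT_REQUESTS_PER_DOMAIN = 1

# A descriptive User-Agent; the name and contact URL are hypothetical.
USER_AGENT = "ExampleResearchBot/0.1 (+https://example.org/bot-info)"
```

`ROBOTSTXT_OBEY` makes Scrapy skip disallowed URLs. The delay, AutoThrottle, and concurrency settings keep request rates modest.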

What are the ethical considerations in web scraping?

Ethical web scraping requires respect for a website’s terms of service, the rights of content creators, and the rules in its robots.txt file. It’s important to scrape responsibly by avoiding excessive requests that could overload a server, and to consider copyright law before reusing scraped content.

Can I scrape news articles from websites like the New York Times?

While it may be tempting to scrape news articles from sites like the New York Times, it is crucial to check their terms of service and comply with legal guidelines. Many news sites have specific restrictions on automated data extraction, so it is advisable to explore their APIs or RSS feeds for legitimate access to their content.
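RSS feeds are usually XML, so they can be read with the standard library instead of by parsing rendered pages. The sketch below assumes an RSS 2.0 feed and parses an inline sample. It does not reference any real feed URL, so substitute the one the publisher documents.

```python
import xml.etree.ElementTree as ET

# Hypothetical RSS 2.0 document; in practice, pass the body of the publisher's feed here.
SAMPLE_RSS = """<rss version="2.0">
  <channel>
    <title>Example Feed</title>
    <item>
      <title>First headline</title>
      <link>https://example.com/first</link>
    </item>
    <item>
      <title>Second headline</title>
      <link>https://example.com/second</link>
    </item>
  </channel>
</rss>
"""


def parse_rss_items(xml_text: str) -> list:
    """Return (title, link) pairs for each <item> in an RSS 2.0 document."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        title = item.findtext("title", default="").strip()
        link = item.findtext("link", default="").strip()
        items.append((title, link))
    return items


if __name__ == "__main__":
    for title, link in parse_rss_items(SAMPLE_RSS):
        print(title, "->", link)
```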

What are common use cases for content extraction through web scraping?

Common use cases for content extraction include gathering product prices from e-commerce sites, aggregating news articles or reviews, and compiling data for research purposes. Web scraping can automate these processes, enabling users to collect large sets of data efficiently.
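Scraped values such as prices usually arrive as display strings and need normalizing before analysis. The following is a minimal sketch that assumes US-style formatting (comma thousands separators, dot decimals). `parse_price` is a hypothetical helper, and `Decimal` is used to avoid floating-point rounding in currency values.

```python
import re
from decimal import Decimal
from typing import Optional

# First number such as "1,299.99", "12345.50" or "19" in the text.
# Assumes US-style formatting; other locales need a different pattern.
PRICE_RE = re.compile(r"(\d{1,3}(?:,\d{3})+|\d+)(\.\d+)?")


def parse_price(text: str) -> Optional[Decimal]:
    """Extract the first price-like number from scraped text, or None if absent."""
    match = PRICE_RE.search(text)
    if match is None:
        return None
    whole = match.group(1).replace(",", "")  # drop thousands separators
    fraction = match.group(2) or ""
    return Decimal(whole + fraction)


if __name__ == "__main__":
    for raw in ["$1,299.99", "Now only $19", "Out of stock"]:
        print(repr(raw), "->", parse_price(raw))
```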

Is web scraping legal?

The legality of web scraping depends on several factors, including the target website’s terms of service, the laws of your jurisdiction, and the method of data collection. Review the site’s terms and the applicable law before you start scraping to ensure compliance and avoid potential legal issues.

How can I avoid being blocked while scraping a website?

To minimize the risk of being blocked while scraping, employ techniques such as rotating user agents, limiting request frequency, using proxies, and adhering to the robots.txt file of the site. Respecting the website’s crawling rules will help maintain access and avoid IP bans.
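Some sites state a preferred request interval through a `Crawl-delay` line in robots.txt, which ties together two of the suggestions above. This sketch reads that value with the standard library’s `RobotFileParser.crawl_delay()` and falls back to an assumed default. The robots.txt content and the default value are hypothetical. The stdlib parser only recognizes whole-number `Crawl-delay` values.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking crawlers to wait 5 seconds between requests.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 5
Disallow: /search
"""

DEFAULT_DELAY = 1.0  # assumed fallback when the site specifies no Crawl-delay


def polite_delay(robots_txt: str, user_agent: str, default: float = DEFAULT_DELAY) -> float:
    """Return the number of seconds to wait between requests for user_agent."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    delay = rp.crawl_delay(user_agent)  # None when no Crawl-delay applies
    return float(delay) if delay is not None else default


if __name__ == "__main__":
    print(polite_delay(ROBOTS_TXT, "ExampleResearchBot/0.1"))  # hypothetical crawler name
```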

| Key Point | Details |
|---|---|
| Valuable Articles | The page may contain articles on various topics, such as current events, politics, culture, science, and technology. |
| Direct Search | For the latest articles, search directly on the New York Times website (nytimes.com). |
| Web Scraping Guidelines | Follow the website’s terms of service, which may require permission for automated access. |
| Compliance | Ensure compliance with robots.txt and copyright laws when scraping data. |
| Technological Tools | Use libraries like BeautifulSoup in Python for scraping and data extraction. |
| Ethical Considerations | Consider the ethical aspects of web data scraping to prevent misuse. |

Summary

Web scraping is a valuable technique for gathering data from many kinds of websites, including news articles and research material. When scraping content, follow the website’s terms of service and any legal restrictions to avoid potential issues. Tools like BeautifulSoup make extracting the information straightforward, while ethical practices such as honoring robots.txt and limiting request rates keep that extraction compliant.