MRI Safety Guidelines: How to Prepare for Your Screening

MRI safety guidelines are crucial for ensuring a safe and successful imaging experience. The significance of these guidelines cannot be overstated, as they help prevent incidents that could result from the strong magnetic fields generated during Magnetic Resonance Imaging. Notably, improper attire or carrying metallic objects into the MRI screening room can pose serious risks, including injury or compromised image quality. Patients must adhere to recommendations such as avoiding metal-infused clothing and any personal items like keys and jewelry that can interfere with the MRI process. To further enhance your safety and comfort, understanding what not to wear for MRI and following essential MRI safety tips is fundamental.

When discussing the precautions necessary for undergoing Magnetic Resonance Imaging, it is essential to consider MRI screening precautions and the importance of safe MRI attire. Enterprises and healthcare professionals emphasize the avoidance of objects, including metal items, that can cause hazards within the imaging space. Patients should be aware of potential risks associated with various wearables and personal belongings that may contain metallic elements. Moreover, understanding what not to bring into an MRI room plays a significant role in maintaining a secure environment. Safety measures not only protect patients but also contribute to the overall efficacy of the imaging process.

Understanding MRI Safety Guidelines

When preparing for an MRI, it’s crucial to familiarize yourself with MRI safety guidelines to ensure a safe screening experience. These guidelines outline essential protocols that patients and medical professionals must follow to avoid accidents and injuries. For example, all metallic objects such as jewelry, watches, and hairpins must be removed before entering the MRI room. The strong magnetic field can attract these objects with significant force, posing a safety risk to both the patient and the MRI equipment.

Additionally, MRI safety guidelines emphasize that even small items like cell phones and keys should not be brought into the examination room. These items can become dangerous projectiles, potentially harming individuals present in the area. It is important for patients to communicate openly about any implanted medical devices or devices they may have, as specific safety precautions may be needed for those undergoing MRI scans.

Essential MRI Screening Precautions

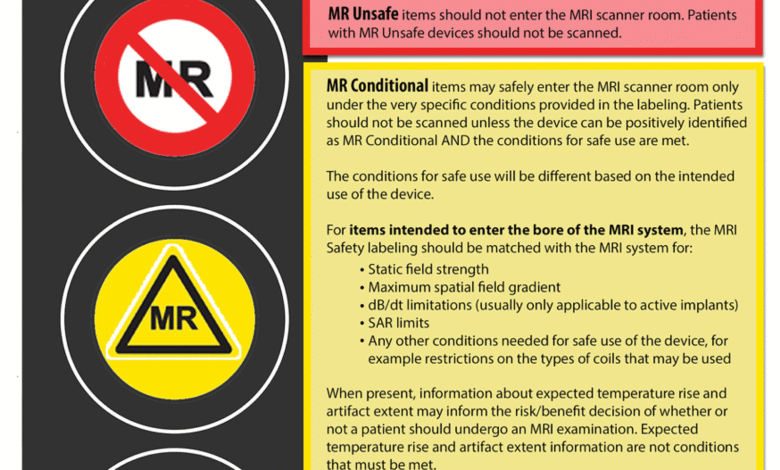

Before your MRI screening, taking the necessary precautions is essential for a safe and successful procedure. One critical aspect is to follow detailed MRI screening precautions provided by medical professionals. These precautions include ensuring any metallic body implants, like pacemakers or aneurysm clips, are reported to the MRI technician. Patients should carry their medical identification cards to inform the team about any implanted devices and confirm that they are compatible with MRI technology.

Moreover, patients should avoid taking any personal items into the MRI room that may contain metallic components. Regular items such as metal hair accessories, keys, and coins can pose risks and should always be left outside the room. Following these MRI screening precautions can significantly reduce the risk of accidents and ensure the procedure runs smoothly.

What Not to Wear for an MRI

Choosing the appropriate attire ahead of an MRI is pivotal for ensuring your safety during the procedure. While it may seem trivial, what not to wear for an MRI can significantly affect the outcome of your scan. Clothing that includes metal, like zippers, buttons, or any embellishments, can interact unfavorably with the MRI machine’s magnetic field. Patients are encouraged to wear loose-fitting clothing made of cotton or linen, which is both safe and comfortable.

It is also advised to avoid wearing any kind of jewelry or accessories, including earrings and bracelets, before the scan. These items can not only interfere with the imaging process but can also pose physical safety risks. In addition, specific garments that might be fashionable in casual settings—like athletic wear incorporating metallic fibers—should also be avoided as they can lead to burns or distorted imaging results.

Safe MRI Attire: What to Wear

When preparing for your MRI appointment, knowing what to wear is key to ensuring a smooth and safe experience. Safe MRI attire typically includes loose-fitting clothing, particularly made from non-metallic fabrics such as cotton or linen. These choices minimize the risk of interference with the MRI machine, ensuring clear imaging and avoiding any potential hazards during the procedure.

Patients are often advised to wear pajamas or a simple gown provided by the facility, which is designed to be free of metal components. It is prudent to inquire if the MRI facility offers gowns without metallic threads, further ensuring safety during the scan. Wearing appropriate safe MRI attire not only prioritizes your safety but also protects the delicate MRI equipment from inadvertent damage that could impact its functioning.

Critical MRI Safety Tips to Follow

Implementing MRI safety tips can significantly enhance your experience and ensure you are well-prepared for the procedure. A vital tip includes arriving at the facility with a clear understanding of any medical devices you have. Always inform the MRI technician of any implanted devices, as this information is critical in assessing your eligibility for the scan. Each device has different compatibility with the MRI, and being transparent can help prevent any complications.

Additionally, make it a point to leave all personal items behind, especially those that contain metal. Items such as cell phones, credit cards, and even makeup products should be removed as they may disrupt the imaging process or get damaged due to the magnet’s intensity. These MRI safety tips significantly reduce risks and enhance the overall safety and efficacy of your MRI experience.

Avoiding Metal Objects in MRI Areas

Metal objects pose significant risks when in proximity to MRI machines due to their strong magnetic fields. Understanding the importance of avoiding metal objects in MRI areas cannot be stressed enough. Heavy equipment and small personal items alike can become dangerous projectiles if brought into the MRI suite. Therefore, patients must heed advisories and instructions carefully, as these rules are in place to protect everyone in the vicinity.

In addition to personal items, larger metallic equipment, such as certain medical devices or vehicles, should always be kept out of the MRI environment. Staff are trained to ensure that these protocols are enforced for the safety of the patients and technicians alike. Taking the time to ensure that the area is free from metal objects will protect not only the equipment from damage but also the safety of all personnel involved.

Discussing Implants Before Your MRI

When scheduling your MRI, it’s essential to discuss any implants or medical devices with your healthcare provider beforehand. Certain implants can affect MRI scans, and some devices may even become dangerous when exposed to the strong magnetic field of the MRI. This discussion should include any orthopedic implants, stimulators, or devices used for medical interventions to assess their compatibility with MRI procedures.

Moreover, healthcare professionals have a duty to ensure that patients are aware of the potential risks associated with their implants during an MRI. For example, some devices might require specific settings or technological accommodations during the scan to protect the patient effectively. Ensuring this dialogue occurs will mitigate risks and enhance overall safety during an MRI examination.

Removing Personal Belongings Before the MRI

One of the most crucial steps in preparing for an MRI is removing personal belongings before entering the scanner room. It cannot be understated how important it is to declutter the MRI area of any metallic or electronic items to secure patient safety. Accessories, such as straps, watches, or anything else that could contain metal, must be left in a designated area outside the MRI suite.

The rationale behind this guideline is straightforward—metal objects can not only cause injury to the patient or staff but can also severely interfere with the imaging results. Patients should be vigilant and follow all guidance regarding what they can and cannot bring into the MRI facility, which is a steadfast approach to ensuring a safe and effective scanning process.

Preparing the Mindset for an MRI Procedure

Preparing mentally for an MRI procedure is just as important as logistical preparations. Understanding the process and knowing what to expect can significantly reduce anxiety and lead to better outcomes. Take the time to familiarize yourself with the MRI procedure, including how long it will take and any sounds you might hear. By educating yourself about the experience, you’ll feel more in control and calm during the scan.

Moreover, practicing relaxation techniques, such as deep breathing or visualization, can be beneficial as you get closer to your appointment. A calm mindset reduces tension and helps ensure that you remain still during the scan, which is crucial for obtaining clear images. By prioritizing mental preparation alongside physical preparedness, you can ensure a more pleasant MRI experience.

Frequently Asked Questions

What are the MRI safety guidelines regarding MRI screening precautions?

MRI safety guidelines emphasize the importance of removing all metallic objects before entering the MRI room to prevent accidents. Patients should avoid bringing items such as jewelry, watches, and any clothing with metal fasteners or fibers. It’s also essential to discuss any medical implants or devices with the MRI technician beforehand.

What not to wear for MRI procedures according to MRI safety tips?

Patients should not wear any clothing that contains metallic threads, zippers, buttons, or snaps for MRI procedures. Additionally, avoid jewelry, piercings, and watches, as these can interfere with the MRI’s magnetic field and pose safety risks.

What are the safe MRI attire options recommended by MRI safety guidelines?

MRI safety guidelines recommend wearing loose-fitting cotton or linen clothing for safe MRI attire. Items like pajamas or nightgowns are ideal, while compression wear and any clothing with metal embellishments should be avoided to prevent burns and compromised image quality.

What should I keep in mind regarding metal objects MRI safety guidelines?

To follow MRI safety guidelines, ensure that all metallic objects, including keys, coins, and personal electronics like cell phones, are left outside the MRI room. Such items can become dangerous projectiles due to the MRI’s strong magnetic field.

What are common MRI screening precautions that patients should know?

Common MRI screening precautions include informing the technician about any implanted medical devices, strictly adhering to guidelines regarding what to wear and bring, and ensuring all metallic items are removed to prevent interaction with the MRI’s magnetic field.

What items are listed as what not to bring into an MRI according to MRI safety guidelines?

According to MRI safety guidelines, patients should not bring heavy items like oxygen tanks, small items such as keys and coins, and personal items including cell phones and makeup with metallic particles into the MRI room to avoid safety hazards.

How can I prepare for an MRI and follow MRI screening precautions?

To prepare for an MRI and adhere to MRI screening precautions, consult with your doctor about any implants, arrive wearing safe attire without metallic components, and ensure to leave all unnecessary items like jewelry and personal electronics outside the MRI room.

What are some important MRI safety tips to remember before my appointment?

Important MRI safety tips include disclosing all medical history related to implants or devices, wearing non-metal clothing, removing all jewelry, and ensuring that no metal objects are brought into the MRI area to ensure safety during the procedure.

What guidance is provided on wearables and MRI safety guidelines?

MRI safety guidelines state that patients should avoid wearables with any metal, including jewelry, hair clips, and even clothing with metallic threads. Instead, opt for 100% cotton or linen garments without metallic attachments to ensure safety during the scan.

How should patients handle medical devices before an MRI as per MRI safety guidelines?

Patients must disclose any medical devices, such as pacemakers or implants, to the MRI technician prior to the procedure. MRI safety guidelines specify that certain devices may restrict the MRI process, and understanding these interactions is crucial for patient safety.

| Item Type | Items to Avoid |

|---|---|

| Wearables | Clothing with metallic threads or fibers, zippers, buttons, jewelry, piercings, watches, hairpins, tattoos with metal-based ink |

| Medical Devices | Hearing aids, oxygen tanks, pacemakers, aneurysm clips, cochlear implants, implanted devices |

| Personal Items | Keys, cell phones, coins, makeup with metallic particles, scissors, credit/debit cards |

Summary

MRI safety guidelines are crucial for ensuring a safe and effective MRI screening process. Patients must be aware of the items they bring into the MRI room, as certain metallic objects can pose serious hazards. Following these guidelines not only protects patients but also ensures the integrity of the MRI equipment. It is essential to remove all metallic items before entering the MRI area and to consult with technicians about any implanted devices. By adhering to these safety protocols, patients can contribute to a safe MRI experience.