Seed Oils: The Hidden Dangers in Your Food Supply

Seed oils have become a contentious topic in the culinary and health communities, especially as Americans strive to understand what they consume amid the Make America Healthy Again movement. Often derived from high-heat extraction processes, these oils, commonly found in processed food ingredients, have garnered attention for their potential health risks. HHS Secretary Robert F. Kennedy Jr. has gone so far as to describe them as “unknowingly poison[ing]” the population, sparking concern over the so-called “hateful eight oils”: corn, canola, and soy, among others. As conversations grow around the dangers of seed oils, many people are starting to question whether these unhealthy cooking oils are worth including in their diets. With increasing awareness of the implications of these oils, it’s clear that a closer examination of our dietary choices is essential for a healthier future.

Plant-based cooking oils, frequently labeled as seed oils, have made their way into numerous households, often without much thought about their impact on health. These fats, derived from seeds like sunflower and safflower, are prevalent in many processed food ingredients and restaurant dishes, raising eyebrows among people pursuing a healthier lifestyle. As discussions about the adverse effects of certain oils intensify, terms like “harmful seed oils” draw attention to the specific varieties that critics say should be avoided. Understanding the nuances of these oils, including the controversial “hateful eight,” allows a closer look at how our choices in cooking fats could influence overall well-being. It’s vital to distinguish between beneficial oils and those that may contribute to health issues, especially since these oils are often hidden ingredients in everyday meals.

The Rising Awareness of Harmful Seed Oils

In recent years, Americans have become more conscious of the ingredients in their food, driven by health movements like Make America Healthy Again. One ingredient coming under particular scrutiny is seed oil, which is prevalent in many processed and packaged foods. This scrutiny has been amplified by figures such as HHS Secretary Robert F. Kennedy Jr., who has sounded the alarm about what he describes as the toxicity of these oils. Many people are unaware that seed oils, used widely across the food supply, may be detrimental to health.

Many consumers are beginning to delve deep into the nutritional value and processing methods of their food items, leading to a growing distrust of seed oils. The term ‘hateful eight oils,’ coined by Dr. Cate Shanahan, highlights specific seed oils that are particularly concerning: corn, canola, cottonseed, soy, sunflower, safflower, rice bran, and grape seed. These oils, often touted for their convenience in cooking, pose risks that many are only starting to realize, including the potential for creating harmful toxins during the cooking process.

Understanding the Dangers of Seed Oils in Processed Foods

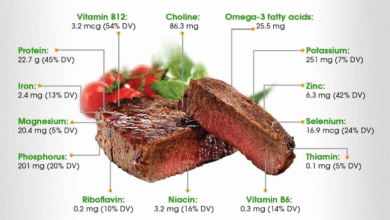

The extraction process of seed oils plays a critical role in their potential health hazards. Most of these oils undergo high-heat and high-pressure processing, and many are extracted with hexane, a chemical solvent and known neurotoxin that can leave trace residues in the finished oil. Moreover, this processing often strips the oils of nutrients. As Dr. Shanahan points out, oils subjected to such intensive refining lose nutrients such as choline and phospholipids, which are essential for brain and cellular function.

The prevalent use of these seed oils in processed foods further compounds the issue. Fast foods, snacks, and even meals prepared in restaurants and hospitals often contain them. Such widespread use makes it increasingly challenging for health-conscious individuals to avoid them. The shift towards recognizing the possible detrimental effects of these oils aligns with research that critics cite as linking them to increased health risks, including chronic illnesses and even cancer, making consumers more vigilant about their dietary choices.

The Controversy Surrounding Seed Oils and Health Claims

In the face of mounting criticism, some health organizations continue to defend the use of seed oils, arguing that they can be part of a healthy diet. The American Heart Association (AHA) published a statement to assuage public fears, suggesting that the negative connotations associated with seed oils are overblown. According to their viewpoint, the fear of seed oils should not overshadow the importance of considering the larger picture, which includes other problematic ingredients prevalent in ultraprocessed foods.

This debate has created a divide in public opinion, with some experts supporting the benefits of seed oils while others, like Dr. Shanahan, warn of their potential hazards. Critics argue that the fatty acids in seed oils do indeed convert to toxins during cooking, leading to inflammation and chronic health problems. This ongoing discourse reveals a complex relationship between nutrition, public health advocacy, and the food industry’s influence on dietary guidelines.

Identifying the Hateful Eight Seed Oils

Dr. Cate Shanahan’s identification of the ‘hateful eight seed oils’ has become a focal point in discussions surrounding unhealthy cooking oils. These oils are corn, canola, cottonseed, soy, sunflower, safflower, rice bran, and grape seed. All of them undergo extensive processing, a step that critics say introduces harmful substances into our food supply. The public is encouraged to become familiar with these oils, as they are often hidden components of processed foods that many consumers might not readily recognize.

Understanding which oils fall into this category is essential for making informed dietary choices. Many people unknowingly consume foods made with these seed oils on a daily basis, whether in snacks, salad dressings, or meal preparations. By educating themselves on the harmful effects of the hateful eight oils, consumers can take proactive steps to reduce their intake, focusing instead on healthier alternatives like olive oil, avocado oil, or coconut oil that are known for their beneficial properties.

The Extraction Process: Unveiling the Hidden Risks

The extraction process of seed oils is crucial to understanding why many of them are deemed harmful. Often, these oils are produced through high heat and chemical treatments, which can strip away beneficial nutrients and create unwanted byproducts. Hexane, the solvent commonly used in extraction, is a prime example: it is a neurotoxin, and traces of it can remain in the oil. Consumers should be aware that not all oils undergo the same extraction methods, and some may have a healthier profile depending on how they are processed.

Furthermore, understanding how seed oils react when subjected to heat is vital for cooking practices. Oils high in polyunsaturated fatty acids can break down, releasing harmful compounds that are detrimental to health. Many consumers mistakenly believe that all plant-based oils are healthy, but the reality is that the refining treatments they undergo can significantly impact their nutritional value. Increasing awareness about these extraction methods can lead individuals to seek out oils that are minimally processed and better for long-term health.

Seed Oils and Their Role in Chronic Illnesses

Some studies have linked the consumption of certain seed oils with a heightened risk of chronic illnesses, particularly those involving cardiovascular health and inflammation. Critics argue that the polyunsaturated fats found in many seed oils can contribute to oxidative stress and an imbalance in the ratio of omega-6 to omega-3 fatty acids in the body, potentially paving the way for conditions such as heart disease and diabetes. As more individuals strive to prioritize their health, understanding the potential consequences of seed oil consumption has never been more critical.

The narrative surrounding health and nutrition continues to evolve, particularly in how it relates to the ingredients in our diet. Health advocates emphasize the importance of reevaluating the role of seed oils in daily cooking and consumption. By making informed choices and steering clear of harmful seed oils, individuals can significantly impact their health outcomes and lower their risk of developing chronic conditions.

Cook With Care: Choosing Healthier Alternatives

With a growing awareness of the negative impact of seed oils, many individuals are looking for healthier alternatives to cook with. Oils such as olive oil, avocado oil, and coconut oil not only provide better nutritional profiles but also avoid the harmful processing associated with seed oils. These alternatives are rich in beneficial compounds that support overall health, making them a wiser choice in the kitchen.

In addition to the health benefits provided by these alternative oils, they are also more versatile in cooking applications. For instance, olive oil is well-regarded for its flavor enhancement while being stable at moderate cooking temperatures. Similarly, avocado oil has a high smoke point, making it suitable for high-heat cooking without the formation of harmful byproducts. Exploring these healthier options can transform meal preparation and contribute positively to a balanced diet.

The Myth of Seed Oils: Separating Fact from Fiction

As the debate over seed oils continues, it is important to distinguish between myths and scientific facts regarding their health impacts. Many claims regarding the adverse effects of seed oils can be sensationalized, leading to confusion and misinformation among consumers. While some health experts defend the use of seed oils, it is essential to consider the body of research that highlights potential health risks associated with their high consumption.

Separating fact from fiction requires critical evaluation of the sources of information available about seed oils. Consumers should ask themselves questions regarding the origins of the oils they use and the processes they undergo before reaching their kitchens. An informed approach allows individuals to understand better which oils contribute to better health outcomes and which should be limited or avoided entirely.

Future Perspectives on Seed Oils and Nutrition

Looking ahead, the dialogue surrounding seed oils and nutrition is likely to evolve as more research emerges. The quest for healthier eating will prompt continuous scrutiny of oil-based ingredients in processed foods. As consumers become more educated about the sourcing and processing of oils, there could be a shift towards transparency in the food industry regarding ingredient usage, especially concerning seed oils.

Future nutritional guidelines may reflect the growing awareness of the harmful effects of certain seed oils, leading to recommendations that promote healthier cooking practices. As such discussions advance, there is potential for a revolution in public health strategies that prioritize not only the avoidance of harmful seed oils but also the encouragement of wholesome, nutrient-rich alternatives to foster overall well-being.

Frequently Asked Questions

What are the harmful seed oils that should be avoided?

The harmful seed oils that you should avoid are often referred to as the ‘hateful eight seed oils.’ These include corn oil, canola oil, cottonseed oil, soy oil, sunflower oil, safflower oil, rice bran oil, and grape seed oil. These oils are considered unhealthy due to their extraction processes and high levels of polyunsaturated fats that can become toxic when heated.

Why are some seed oils considered unhealthy cooking oils?

Some seed oils are considered unhealthy cooking oils due to their processing methods. They are often extracted using high heat and chemicals, which can introduce neurotoxins like hexane and strip away essential nutrients. This not only makes them less beneficial, but also increases the risk of generating harmful substances when these oils are heated.

What are the dangers of seed oils in our diet?

The dangers of seed oils include their association with increased risks of various health issues such as colon cancer. These oils are commonly found in processed foods and can lead to chronic inflammation and other health problems when consumed regularly due to their unhealthy fatty acid composition.

Are there any benefits to using seed oils like sesame or flaxseed oil?

Yes, not all seed oils are considered harmful. Oils like sesame and flaxseed oil are regarded as beneficial because they contain healthy fats and nutrients. Unlike the hateful eight seed oils, they are often processed differently: flaxseed oil is a source of omega-3 fatty acids, and sesame oil contains antioxidants.

How can I identify unhealthy processed food ingredients related to seed oils?

To identify unhealthy processed food ingredients, look for products containing the hateful eight seed oils: corn, canola, cottonseed, soy, sunflower, safflower, rice bran, and grape seed oils. Check the ingredient list for these oils and any high levels of sugars or additives, which often indicate that the food is less nutritious.

What do health organizations say about the safety of seed oils?

Health organizations like the American Heart Association have defended the use of seed oils, suggesting that there is no need to avoid them and highlighting that the real health concerns are related to overall diet and consumption of ultraprocessed foods rather than seed oils themselves.

Is it possible to eliminate harmful seed oils from my diet?

Yes, it is possible to eliminate harmful seed oils from your diet by reading labels carefully, opting for whole, unprocessed foods, and choosing healthier cooking oils, such as olive oil, avocado oil, or other oils that are not part of the hateful eight list.

What are some alternatives to harmful seed oils for cooking?

Alternatives to harmful seed oils include fats such as olive oil, avocado oil, coconut oil, and butter. These options are said to provide better nutritional profiles and to be less likely to break down into harmful compounds when heated.

| Key Point | Description |
|---|---|
| Seed Oils Defined | Plant-based oils extracted from seeds and widely used in processed foods. |
| The Hateful Eight | The eight seed oils critics say to avoid: corn, canola, cottonseed, soy, sunflower, safflower, rice bran, and grape seed. |
| Health Concerns | Linked to potential health risks, including an increased risk of colon cancer. |
| Nutritional Consequences | Refining removes nutrients like choline and lecithin that are necessary for bodily functions. |
| Controversy | Health organizations like the AHA defend seed oils, suggesting they may not be harmful. |

Summary

Seed oils have become a focal point in discussions of dietary health, particularly in light of growing concerns about how they are processed. As outlined by health experts such as Dr. Shanahan, certain seed oils, especially those categorized as the ‘hateful eight’, are regarded as detrimental to health due to their processing methods and nutritional drawbacks. Although some health organizations maintain that seed oils are safe, the debate continues, highlighting the importance of scrutinizing ingredient lists when making food choices.