How to Summarize Information Effectively: Tips & Techniques

Understanding how to summarize information is an essential skill for effective communication and learning. It involves distilling important concepts and ideas into concise, manageable formats that capture the essence of the original text. Mastering various summarizing techniques can enhance your ability to retain knowledge and convey it to others efficiently. Whether you’re writing summaries for academic purposes or just trying to grasp the key points from a lengthy article, effective summarizing tips can make all the difference. In this guide, we’ll explore practical methods for information summarization, ensuring you can present details clearly and accurately.

The ability to condense complex information into brief summaries is invaluable when so much content competes for attention. This process, sometimes called distillation or abstraction, involves identifying and articulating the core messages within extensive content. Strategic summarization techniques can improve note-taking, study habits, and communication skills. Writers and learners alike benefit from learning to craft concise synopses that capture essential ideas without losing context. The sections that follow cover methods for writing summaries that inform.

The Importance of Summarizing Information

Summarizing information is a key skill that enhances understanding and retention of content. In our fast-paced world, we are often inundated with an overwhelming amount of data, and the ability to distill essential points into concise summaries can be incredibly valuable. Not only does it aid in processing information efficiently, but it also allows you to communicate key insights effectively to others. By mastering the art of summarization, you can improve your academic performance, professional communication, and even personal understanding of complex subjects.

Effective summarizing techniques help in honing critical thinking and analytical skills. When you summarize, you evaluate what is truly important in a text and discard irrelevant information. This not only ensures you focus on the main ideas but also enhances your overall comprehension. Moreover, practicing summarization can lead to improved writing abilities as you learn to convey ideas succinctly without losing their essence. Whether for study purposes, work presentations, or casual discussions, being able to summarize information is an invaluable asset.

Effective Summarizing Techniques

Employing effective summarizing techniques involves understanding the content deeply before attempting to condense it. One popular method is the ‘rule of three,’ which suggests that when summarizing, you should identify and focus on the three primary points or arguments of the text. This technique not only simplifies the information but also makes it more memorable. Additionally, utilizing bullet points for lists or key takeaways can enhance clarity and ease of understanding, especially in written summaries.

Another technique is to paraphrase rather than copy, as it encourages deeper engagement with the material. When writing summaries, make sure to include keywords that capture the essence of the text. This is particularly important for academic and professional writing, as it helps your summary retain the original context. Moreover, practicing summarization regularly can help improve speed and efficiency in extracting crucial information from texts, making you more adept at handling diverse types of content.

How to Summarize Information Effectively

Knowing how to summarize information effectively is a skill that can be developed with practice and intentionality. Start by actively reading or listening to the material, identifying the main ideas and supporting details. Highlighting or taking notes can help you remember these points during the summarization process. Once you have gathered your thoughts, attempt to rewrite the main concepts in your own words. This technique, known as paraphrasing, is essential for ensuring that you understand the information and can articulate it clearly.

After drafting your summary, it’s crucial to review it for clarity and conciseness. An effective summary should be significantly shorter than the original text while still communicating its primary message. Pay attention to the flow, ensuring that the information is logically organized and coherent. Utilizing transitions between your points can greatly enhance readability and comprehension. This method of summarization not only benefits the writer but also aids the reader in grasping essential information quickly, which is especially valuable in today’s information-saturated environment.

Writing Summaries for Academic Purposes

Writing summaries for academic purposes requires a clear understanding of the original material and the ability to convey its essence succinctly. When summarizing academic texts, it is essential to maintain an objective tone and focus on the content’s arguments, methodologies, and findings. Begin by thoroughly reading the material and marking key points. This preparation will save time and increase the effectiveness of your summarization. A well-crafted academic summary will typically include the text’s thesis, main arguments, and conclusions, providing a comprehensive overview for readers.

In academic settings, citations are crucial even in summaries. Depending on your institution’s guidelines, you may need to provide appropriate references for the summarized content. This not only credits the original authors but also enriches your summary by connecting it with broader academic dialogues. Ensure that your summary is not merely a collection of points but rather a coherent narrative that reflects the source material’s intentions and relevance to your field of study. Ultimately, mastering this aspect of writing will enhance your academic work and help you communicate complex ideas effectively.

Summary Techniques for Business Communication

In business communication, effective summarization can streamline information sharing and decision-making processes. When crafting summaries for reports, meetings, or presentations, focus on delivering clear, actionable insights that your audience can easily grasp. Avoid unnecessary jargon and opt instead for straightforward language that succinctly conveys the key points. An effective business summary should highlight essential data, proposed actions, and potential ramifications, allowing stakeholders to make informed decisions quickly.

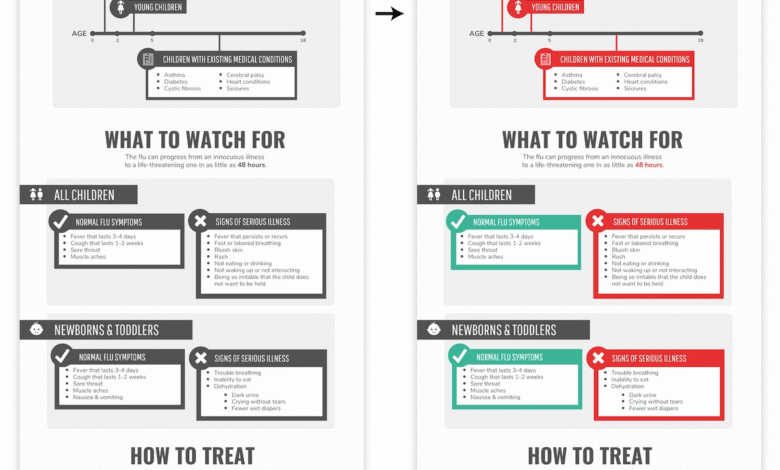

Utilizing visual aids such as charts or bullet points can enhance the impact of your business summaries. Visual representations of data often communicate information more efficiently than lengthy text, enabling your audience to digest content swiftly. Additionally, adapting your summaries to the specific needs of your audience, whether they are executives, team members, or clients, increases their relevance and effectiveness. By honing your summary skills in a business context, you can ensure that important messages don’t get lost in translation and that your communication remains efficient and impactful.

Tips for Writing Concise Summaries

Writing concise summaries is an essential skill that can enhance both academic and professional writing. Begin by focusing on the main idea of the text, stripping away any extraneous details that do not contribute to the overall message. Try to limit your summary to a few key sentences that embody the essence of the original material. This practice not only saves time for both the writer and reader but also ensures that the most relevant information is prioritized.

Another tip for creating concise summaries is to familiarize yourself with various summarization formats. Depending on the content type, whether it’s a scientific article, business report, or narrative story, the summary format may differ. Understanding the specific requirements for each format allows you to tailor your approach accordingly, making your summaries more effective. Regular practice and reflection on your summarization strategies will further refine your skills, helping you write summaries that are concise yet still cover every essential point.

Using Technology for Information Summarization

In today’s digital age, leveraging technology for information summarization is becoming increasingly popular. Numerous software tools and applications exist that can assist in condensing large volumes of text into manageable summaries. These tools utilize algorithms to identify key sentences and themes, providing a quick overview of the material without manual effort. However, while technology can enhance the summarization process, it is essential to review generated summaries critically to ensure they accurately reflect the original content.

Moreover, using technology does not replace the need for personal summarization skills. It is more advantageous to use these tools as a supplement to your understanding rather than a complete solution. Combining your analysis with automated summaries can give you a robust understanding and save time. This synergy between human critical thinking and technological efficiency is key for professionals and students alike, allowing for improved productivity while maintaining high standards of comprehension.
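To make the idea of algorithmically identifying key sentences more concrete, here is a minimal sketch of one common approach, frequency-based extractive summarization. It is not how any particular tool works. The function names (`split_sentences`, `summarize`), the tiny stop-word list, and the scoring rule (average word frequency per sentence) are all assumptions chosen for illustration.

```python
import re
from collections import Counter

# A deliberately small stop-word list (an assumption for this sketch;
# real tools typically use much larger lists).
STOP_WORDS = {
    "a", "an", "the", "and", "or", "but", "of", "to", "in", "on", "for",
    "is", "are", "was", "were", "it", "this", "that", "with", "as", "by",
    "be", "can", "at", "from",
}


def content_words(text):
    """Return lowercase words from text, excluding stop words."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]


def split_sentences(text):
    """Naively split text into sentences after ., !, or ? plus whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def summarize(text, max_sentences=3):
    """Keep the highest-scoring sentences, preserving their original order."""
    sentences = split_sentences(text)
    freq = Counter(content_words(text))

    def score(sentence):
        tokens = content_words(sentence)
        if not tokens:
            return 0.0
        # Average frequency, so long sentences are not favored automatically.
        return sum(freq[w] for w in tokens) / len(tokens)

    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])
    return " ".join(sentences[i] for i in keep)


if __name__ == "__main__":
    sample = ("Cats sleep a lot. Cats hunt mice at night. "
              "The weather was mild. Cats and mice rarely get along.")
    print(summarize(sample, max_sentences=2))
```

On the sample text, the sentence about the weather scores lowest because none of its words recur elsewhere, so it is dropped. A sketch like this also shows why generated summaries need human review: word frequency is only a rough proxy for importance, and a rarely worded but crucial sentence can be discarded.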

Challenges in Information Summarization

Despite its importance, information summarization comes with challenges that can hinder effective communication. One major hurdle is the tendency to oversimplify complex ideas, which can result in a loss of critical information. Dense academic articles and detailed reports are especially hard to distill into a summary without losing their depth. Striking this balance between brevity and thoroughness requires practice and careful consideration of which elements are truly essential.

Another challenge is the potential for bias in summarization. Personal interpretations can inadvertently influence the way content is summarized, leading to misrepresentation of the original message. To combat this, it’s essential to approach the summarization process with an objective lens, focusing solely on the material without letting personal opinions cloud the interpretation. Regularly engaging with a diverse array of texts and practicing summarization can also help develop a more balanced perspective, ensuring accurate and effective communication.

Enhancing Summarization Skills through Practice

Enhancing your summarization skills is an ongoing process that significantly benefits from consistent practice. Set aside time each week to summarize different types of content, whether they be articles, videos, or lectures. By diversifying the material you summarize, you can learn how to adapt your techniques based on the format and complexity of the original content. Each summary session is an opportunity to refine your ability to capture main ideas while remaining accurate and concise.

Incorporating feedback from peers or mentors can also accelerate the development of your summarization skills. Sharing your summaries with others gives you fresh perspectives on how effectively you conveyed the main points. Constructive criticism can guide you in adjusting your approach and improving clarity. Additionally, considering how others summarize similar content can provide new strategies and insights, fostering an environment of collaborative learning that enhances everyone’s summarization abilities.

Frequently Asked Questions

What are effective summarizing techniques for condensing information?

Effective summarizing techniques include identifying the main ideas, eliminating unnecessary details, and using your own words. Start by reading the material thoroughly, then highlight key points and phrases. Organize your thoughts before writing the summary to ensure clarity and conciseness.

How can I improve my information summarization skills?

To improve your information summarization skills, practice regularly by summarizing different types of texts. Focus on extracting essential concepts and rephrasing them. Utilizing tools like outlines and bullet points can also help structure your summaries more effectively.

What are some effective summarizing tips for writing concise summaries?

When writing concise summaries, focus on the purpose of the text and only include crucial details that support the main ideas. Keep sentences clear and avoid jargon. Additionally, revise your summary to ensure it reflects the original content accurately but in a more succinct form.

What should I include in an effective summary?

An effective summary should include the primary argument or thesis, key points that support that argument, and any conclusions drawn by the author. Ensure that your summary can be understood without reference to the original text while still capturing its essence and purpose.

How does writing summaries help with understanding information?

Writing summaries helps consolidate information by forcing you to process and reinterpret the content in your own words. This active engagement enhances comprehension and retention, making it easier to recall details in the future.

What are the best practices for summarizing complex information?

Best practices for summarizing complex information include breaking down the content into manageable parts, using visual aids like charts or diagrams, and simplifying language without losing key concepts. Always focus on clarity to ensure the summary is accessible to your audience.

How can summarizing help in academic writing?

Summarizing is crucial in academic writing as it allows you to distill large amounts of information into concise paragraphs that support your arguments. A well-crafted summary demonstrates your understanding of the source material and helps you integrate evidence into your own work effectively.

What are the common mistakes to avoid when summarizing information?

Common mistakes when summarizing are adding too much detail, failing to capture the main idea, and copying phrases directly from the source without rephrasing. Always aim for originality and clarity in your summary.

Summary

Summarizing information effectively means distilling complex material into its essential points. It requires identifying main ideas, trimming excess details, and clearly presenting findings. By focusing on the core message, you can convey critical information succinctly, making it easier for your audience to understand and retain key concepts.