Memorial Day Murph: Complete the Ultimate Fitness Challenge

Memorial Day Murph has evolved into a national fitness challenge that combines grit, honor, and remembrance, set against the backdrop of a solemn day dedicated to our fallen heroes. Every year, fitness enthusiasts across the country engage in this grueling workout, originally inspired by the training regimen of Navy SEAL Lt. Michael Murphy, who sacrificed his life during Operation Red Wings. Known as “the Murph,” this military workout consists of a one-mile run, followed by 100 pull-ups, 200 push-ups, 300 air squats, and finishing with another mile run. Participants often add a 20-pound weighted vest, embracing the challenge as a tribute to those who fought bravely. Completing the Memorial Day fitness challenge is more than just a workout; it symbolizes the strength and resilience of the human spirit, ensuring that the legacy of heroes like Lt. Murphy lives on.

The Memorial Day fitness event, widely recognized as the Murph challenge, invites individuals to push their physical limits while commemorating the valor of fallen soldiers. This rigorous exercise routine, rooted in military training, is not merely about fitness but serves as a powerful reminder of the sacrifices made for freedom. Many participants adapt the exercises to suit their own capacities, creating a personal connection to the significance of this day. By engaging in this military-inspired workout, people honor the memory of Lt. Michael Murphy and others who have made the ultimate sacrifice. The Murph challenge transforms a day of remembrance into an inspiring opportunity to celebrate life and resilience through fitness.

Understanding the Memorial Day Murph Challenge

The Memorial Day Murph challenge stands as a prominent tribute to Navy SEAL Lt. Michael Murphy, who exemplified the valor and sacrifice of military heroes during his service. Each year, on Memorial Day, countless individuals take on this rigorous fitness challenge to honor both Murphy’s legacy and the sacrifices made by all service members. The Murph workout is a physical test and also a profound reminder of the dedication and honor that military personnel embody. Those participating in the workout are encouraged to reflect on Murphy’s story and the respect he earned for his courage during Operation Red Wings.

The typical structure of the Murph includes a one-mile run, followed by 100 pull-ups, 200 push-ups, and 300 air squats, before completing another mile run. This intense regimen tests both strength and endurance, making it a comprehensive military workout designed to push individuals to their limits. While many attempt the workout as prescribed, others modify the routine, personalizing the challenge to suit their fitness level. Ultimately, the goal remains unchanged: to honor those who have sacrificed for the country while also engaging in a meaningful and physically demanding activity.

The History and Legacy of Lt. Michael Murphy

Navy SEAL Lt. Michael Murphy’s life was marked by extraordinary bravery and a commitment to his fellow service members, particularly highlighted during Operation Red Wings. His selfless actions saved the life of fellow SEAL Marcus Luttrell, making Murphy a symbol of heroism and sacrifice. The establishment of the Murph workout memorializes his spirit and commitment to rigorous training as a means of preparation for the tough realities of military operations. This workout not only serves as a tribute but also as an inspiration, motivating participants to push their physical and mental limits in remembrance of such valor.

Murphy’s legacy is further perpetuated through the annual participation in the Murph challenge on Memorial Day. Thousands embrace this opportunity as a way to connect with the military community and honor those who have fallen. The collective effort of participants serves to cement Murphy’s ideals in the hearts and minds of new generations. The traditions surrounding this workout demonstrate how sport and fitness can foster a sense of community, solidarity, and remembrance among those who choose to accept the challenge, thereby ensuring that Lt. Michael Murphy’s memory remains a vital part of American history.

Embracing the Murph workout on Memorial Day allows individuals to cherish and uphold the values that Lt. Murphy embodied—courage, sacrifice, and a commitment to excellence. While some may view the challenge as a grueling physical feat, it transcends mere fitness; it is about coming together as a community to honor those who exemplified the highest ideals of service to their country.

Adapting the Murph Workout for All Fitness Levels

While the Memorial Day Murph is rooted in tradition, adaptability is key to making the workout accessible to everyone, regardless of fitness level. Many individuals may feel daunted by the full volume of the Murph, but modifications allow each participant to engage meaningfully in this military-inspired workout. For example, replacing pull-ups with assisted variations or opting for knee push-ups can help individuals maintain the spirit of the challenge while staying within their capabilities. The fitness community encourages a personalized approach, reminding participants that the essence of the workout lies in the intent to honor those who’ve sacrificed for their country.

Moreover, individuals may choose to break the repetitions into manageable sets. Dividing the 100 pull-ups, 200 push-ups, and 300 air squats into smaller cycles not only eases the physical burden but also fosters a sense of accomplishment as each segment is completed. This adjusted approach ensures everyone can partake in some capacity, reinforcing the notion that the Murph is about effort, perseverance, and camaraderie. Regardless of how one completes the challenge—be it through competitive spirit or personal benchmarks—what remains crucial is the shared dedication to remember those brave enough to serve.
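The arithmetic behind breaking the repetitions into equal sets can be sketched in a few lines of Python. This is only an illustration: the function name `split_into_rounds` and the round counts of 10 and 20 are assumptions chosen for the example, not part of the workout as described here. The totals are the 100 pull-ups, 200 push-ups, and 300 air squats listed above.

```python
# Total prescribed repetitions, taken from the workout description above.
MURPH_REPS = {"pull-ups": 100, "push-ups": 200, "air squats": 300}


def split_into_rounds(total_reps: dict, rounds: int) -> dict:
    """Return the reps per round for each exercise when split into equal rounds.

    Hypothetical helper for illustration. Raises ValueError if any total does
    not divide evenly, so no repetitions are silently lost to rounding.
    """
    if rounds <= 0:
        raise ValueError("rounds must be a positive integer")
    per_round = {}
    for exercise, total in total_reps.items():
        if total % rounds != 0:
            raise ValueError(
                f"{total} {exercise} cannot be split evenly into {rounds} rounds"
            )
        per_round[exercise] = total // rounds
    return per_round


if __name__ == "__main__":
    # Illustrative round counts only; any count that divides all totals works.
    for rounds in (10, 20):
        print(rounds, "rounds:", split_into_rounds(MURPH_REPS, rounds))
```

With 20 rounds, for instance, each round comes to 5 pull-ups, 10 push-ups, and 15 air squats. The total volume is unchanged; only the size of each segment differs.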

The Broader Impact of the Murph on the Fitness Community

The Memorial Day Murph has catalyzed a significant cultural movement within the fitness community, encouraging individuals to engage in rigorous workouts while also fostering bonds through shared values of respect and honor. As local gyms and fitness enthusiasts adopt this challenge, it has grown into an event that not only pays homage to Lt. Michael Murphy but also promotes awareness of the Navy SEAL community and their sacrifices. This convergence of fitness and remembrance has led to an increase in charitable efforts and fundraising events aimed at supporting veterans and active-duty military personnel.

Participating in the Murph on Memorial Day goes beyond mere physical exertion; it symbolizes solidarity, elevating the experience into a collective act of respect and remembrance. The challenge serves as a rallying point for people across demographics and skill levels, encouraging dialogue about the importance of fitness, mental health, and the sacrifices made by service members. As communities come together to honor this tradition, not only do they celebrate physical fitness but also cultivate a deeper understanding of the resilience and courage that military personnel embody.

Preparing for the Memorial Day Murph Challenge

Preparation is essential when gearing up for the Memorial Day Murph, especially for those who may be undertaking this military workout for the first time. Understanding the components of the workout is key: the combination of distance running and high-repetition strength exercises requires not just physical readiness but also mental fortitude. Individuals are encouraged to train in advance, gradually building up stamina and strength through practice runs and workouts simulating the Murph’s requirements. This proactive approach helps mitigate injuries and optimizes performance on the day of the event.

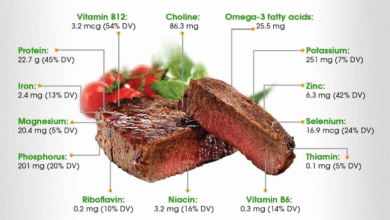

Moreover, proper nutrition and hydration play crucial roles in preparing for the Murph. Fueling the body with the right nutrients prior to the workout can enhance endurance and recovery, while staying hydrated ensures optimal performance. As participants develop their training plans, incorporating variations of the workout leading up to Memorial Day can help in familiarizing themselves with the movements and pacing needed for success. Ultimately, thorough preparation can transform the challenge into not just a test of physical capability but a testament to determination and honor.

The Meaning Behind Completing the Murph Challenge

Completing the Memorial Day Murph signifies more than just finishing a tough workout; it encapsulates a spirit of unity, remembrance, and respect for those who lost their lives while serving in the military. Participants often reflect on the values embodied by Lt. Michael Murphy as they work through this arduous yet rewarding experience. It is a moment to honor sacrifice through sweat, echoing the ethos of perseverance displayed by heroes like Murphy. Each minute of exertion serves as a tribute, reminding individuals of the true cost of freedom and the honor owed to those who protect it.

Additionally, the collective experience of completing the Murph fosters a powerful sense of community among participants. Whether they’re in gyms, at home, or in parks, a shared commitment to complete the challenge can create bonds that go beyond fitness, unifying individuals in a common goal of respect and remembrance. As people rally together, they not only celebrate the memories of those who have fallen but continue to build a legacy where fitness meets valor. The Murph stands as a testament to resilience, ensuring that Lt. Murphy’s dedication is never forgotten.

Why the Murph Workout is Unique

The Murph workout is distinct in its ability to combine a variety of physical challenges into a single session, making it a comprehensive test of overall fitness. This military workout incorporates strength training and cardio, forcing athletes to tap into various physical capacities. The blend of bodyweight exercises like pull-ups, push-ups, and air squats, coupled with running, demands not only strength but also stamina and endurance. Its unique structure ensures that participants engage multiple muscle groups, providing a full-body workout that many traditional routines lack.

Furthermore, the historical context of the Murph workout adds to its uniqueness. Developed as a tribute to Lt. Michael Murphy, the workout symbolizes something larger than personal fitness goals. It evokes a sense of purpose and camaraderie among participants, uniting them under the shared mission of honoring fallen heroes. The emotional weight linked to each repetition serves as a powerful motivator, transforming the Murph from a standard workout into a heartfelt tribute. This remarkable intertwining of physical challenge and emotional connection sets the Murph apart in the fitness world.

Joining the Murph Movement: Community and Commemoration

Joining the Memorial Day Murph movement offers individuals the chance to engage in a powerful act of commemoration and community. This event invites participants—from seasoned athletes to newcomers—to embrace the call of the Murph workout as a way to both challenge themselves physically and connect with others who share the same dedication to honor military sacrifices. Many gyms and fitness centers around the country organize group workouts, fostering a spirit of unity and encouraging countless individuals to participate, regardless of their fitness levels.

Taking part in such a communal experience creates a lasting impact, as the act of pushing through the Murph together can forge friendships and solidarity among participants. In many cases, the workout acts as a conduit for individuals to share personal stories, encourage one another, and honor fallen military heroes. As this movement continues to grow, it not only preserves the memory of Lt. Murphy and his fellow service members but also unites communities across America in a shared commitment to respect and remember those who have served.

Frequently Asked Questions

What is the Memorial Day Murph workout?

The Memorial Day Murph workout is a military fitness challenge that consists of a one-mile run, 100 pull-ups, 200 push-ups, 300 air squats, and another one-mile run. This challenge is named in honor of Navy SEAL Lt. Michael Murphy and is performed by thousands of people across the nation on Memorial Day.

How did the Memorial Day Murph workout originate?

The Memorial Day Murph workout originates from Lt. Michael Murphy’s training during his time in the SEALs. Murphy and his SEAL teammate, Kaj Larsen, would perform this intense workout together, originally calling it ‘Body Armor.’ Performing it today honors Murphy’s legacy as a fallen hero.

What should I expect from the Memorial Day fitness challenge?

Expect a rigorous workout that pushes your physical limits during the Memorial Day fitness challenge. The Murph workout is designed to challenge participants with its combination of strength and cardio exercises, and it can be scaled based on individual fitness levels.

Can I modify the Memorial Day Murph if I’m a beginner?

Yes, modifications for the Memorial Day Murph are encouraged. Beginners can break down the repetitions or substitute pull-ups with jumping or assisted variations. The key is to challenge yourself in honor of those who have made the ultimate sacrifice.

Why is the Murph workout significant on Memorial Day?

The Murph workout is significant on Memorial Day as it honors the memory of Lt. Michael Murphy, who made the ultimate sacrifice during Operation Red Wings. Participating in the workout is a way to remember and pay tribute to fallen heroes while engaging in a physically demanding challenge.

Do I have to wear a weighted vest for the Memorial Day Murph?

No, wearing a weighted vest for the Memorial Day Murph is optional. While Murphy often completed the workout with a 20-pound vest, it is not a requirement, especially for those new to the workout or seeking to adapt it to their fitness levels.

What type of fitness mindset should I have when approaching the Murph workout?

When approaching the Murph workout, embrace a mindset of perseverance and determination. The workout is meant to be challenging, reflecting the sacrifices made by veterans. Focus on completing the workout as a tribute to those who served.

How can the Memorial Day Murph workout be a community event?

The Memorial Day Murph workout can be a community event by bringing people together to collectively honor Lt. Michael Murphy and fallen soldiers. Many gyms organize group workouts, fostering a sense of camaraderie and support while engaging in this meaningful fitness challenge.

Is there a set way to complete the Memorial Day Murph workout?

There is no set way to complete the Memorial Day Murph workout. Participants can choose their own pacing, how they break up the exercises, and whether they incorporate modifications, allowing everyone to engage in the challenge irrespective of fitness level.

What is the historical context behind the Memorial Day Murph?

The Memorial Day Murph has a historical context tied to the heroism of Lt. Michael Murphy, who died in service during Operation Red Wings in 2005. The workout serves as both a physical challenge and a means to honor his legacy and the sacrifices of military personnel.

| Key Point | Details |
|---|---|
| Memorial Day ‘Murph’ | A fitness challenge honoring Navy SEAL Lt. Michael Murphy, taking place every Memorial Day. |
| Purpose of the Challenge | To remember and honor those who sacrificed their lives in service, specifically Lt. Michael Murphy. |
| Workout Structure | Involves a one-mile run, 100 pull-ups, 200 push-ups, 300 air squats, followed by another one-mile run. |
| Weighted Vest | Participants often wear a 20-pound weighted vest, but it’s optional. |
| Modifications Allowed | Participants can modify the workout, such as breaking down exercises or substituting pull-ups. |
| Legacy of Lt. Michael Murphy | Murphy’s heroism during Operation Red Wings is honored through ‘the Murph’ workout. |
| Cultural Impact | The Murph has fostered a cultural movement within the fitness community, promoting hard work and resilience. |

Summary

Memorial Day Murph is not just a fitness challenge; it is a powerful tribute to those who have made the ultimate sacrifice for their country. By participating in this grueling workout, individuals across America join together to honor the legacy of Lt. Michael Murphy and all fallen heroes. The Murph serves as a poignant reminder of courage, sacrifice, and community, blending physical fitness with deep respect for those who served. Everyone, whether a seasoned athlete or a beginner, can participate in their own way, making it an inclusive challenge that celebrates resilience and honor on Memorial Day.