Scraping Website Content: Techniques for Efficient Data Extraction

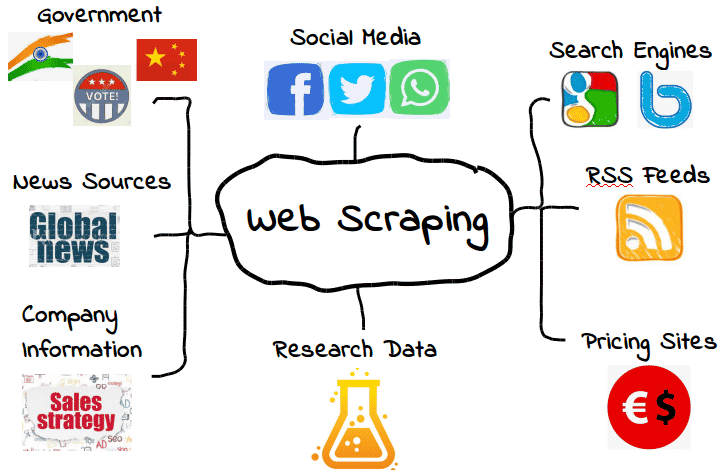

Scraping website content has become an essential practice for businesses and developers who need to gather data from the web efficiently. This process, usually called web scraping, involves extracting information from online sources and converting it into structured formats such as CSV or JSON. Whether the goal is market research or competitive analysis, knowing how to extract HTML content reliably helps professionals keep up in today’s data-driven landscape. Understanding how to scrape website content effectively is therefore valuable for anyone who wants to make use of the information available online.

Website data collection is a growing field that encompasses a variety of methods for acquiring information from online sources. Commonly, this practice is known as data harvesting or content extraction, allowing users to capture significant amounts of data in a structured manner. Techniques employed in this realm can range from simple automated scripts to sophisticated web crawlers designed to navigate multiple pages seamlessly. The importance of efficient website data extraction cannot be overstated, as it opens doors to insights that can drive strategic decisions across industries. Furthermore, the ability to systematically gather web information enhances research capabilities and fosters innovation.

Understanding Web Scraping Techniques

Web scraping is the automated extraction of data from websites. It relies on tools and libraries that fetch web pages and pull out the parts of interest. With a programming language such as Python and libraries like Beautiful Soup or Scrapy, users can navigate a page’s HTML structure and locate and extract the relevant information precisely.

The strength of web scraping lies in its ability to handle a wide array of data extraction tasks, from gathering product prices on e-commerce sites to collecting news articles from media outlets. Crawling, in which a scraper follows links to visit every page of a website systematically, helps collect large data sets that would be impractical to compile manually.
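The systematic crawl described above can be sketched as a breadth-first traversal. This is a minimal illustration, not a production crawler: `FAKE_SITE`, `fetch`, `LinkCollector`, and `crawl` are hypothetical names, and the "site" is an in-memory dictionary so the example runs without network access. The standard-library `html.parser` stands in for Beautiful Soup or Scrapy here.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Hypothetical in-memory "site" so the sketch runs without network access.
FAKE_SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="https://other.example/x">ext</a>',
    "https://example.com/b": '<a href="/">home</a> <a href="/c">C</a>',
    "https://example.com/c": "<p>No links here</p>",
}


class LinkCollector(HTMLParser):
    """Collects the href attribute of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch(url):
    """Stand-in for an HTTP request; returns HTML, or None for unknown pages."""
    return FAKE_SITE.get(url)


def crawl(start_url, max_pages=100):
    """Breadth-first crawl restricted to the start URL's host."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}  # URLs already queued, to avoid revisiting pages
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        parser = LinkCollector()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited
```

Calling `crawl("https://example.com/")` visits the four internal pages once each and skips the external link. The `max_pages` cap is a simple safeguard against crawling far more of a site than intended.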

The Importance of Data Extraction

Data extraction plays a pivotal role in transforming unstructured web content into usable information for analysis. It allows organizations to harness valuable insights from diverse sources, thereby enhancing their decision-making processes. By leveraging data extraction strategies, companies can track market trends, customer behavior, and competitive landscapes effectively.

Furthermore, effective data extraction can improve operational efficiency. For instance, businesses can automate the monitoring of competitor pricing or market offerings, leading to better strategic positioning. The results are only as good as the extraction, however: the data must be accurate and current before it can be acted on, which is essential in today’s data-driven environment.

Website Data Collection Strategies

Website data collection is integral for businesses and researchers alike, providing a foundation for data analysis and insights. Employing systematic approaches to collect data from the web can lead to significant improvements in data quality and accuracy. Utilizing automated website scraping tools enables users to streamline the data collection process, capturing large volumes of data in less time.

Additionally, focusing on ethical data collection strategies is vital in maintaining compliance with legal regulations. Scraping websites should be approached with caution, ensuring that the terms of service of the targeted sites are respected. By prioritizing ethics in data collection, organizations can build positive reputations and prevent potential legal repercussions while still benefiting from comprehensive insights.

HTML Content Extraction Techniques

HTML content extraction is a core element of web scraping, as it involves parsing the markup that gives web pages their structure to retrieve meaningful information. The Document Object Model (DOM) represents a page as a tree of nested elements, so understanding it is essential. With libraries like Beautiful Soup, users can navigate through HTML tags to find the desired data efficiently.

Effective HTML content extraction requires close attention to detail, because every website structures its markup differently. Mastering XPath and CSS selectors can significantly improve one’s ability to pinpoint specific data points within a webpage. With these tools, web scrapers can extract text, images, links, and other elements, providing a comprehensive dataset for further analysis.
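As a rough illustration of selector-based extraction, the sketch below uses the limited XPath subset supported by Python’s standard-library `xml.etree.ElementTree`. The markup in `SNIPPET`, the class names, and the `extract_products` function are all assumptions made up for this example. Real pages are often not well-formed XML, so in practice a lenient HTML parser such as Beautiful Soup or lxml would usually be needed before running queries like these.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed snippet; real-world HTML is rarely this tidy.
SNIPPET = """
<html>
  <body>
    <div class="product">
      <h2>Blue Mug</h2>
      <span class="price">12.50</span>
      <a href="/items/blue-mug">Details</a>
    </div>
    <div class="product">
      <h2>Red Mug</h2>
      <span class="price">9.99</span>
      <a href="/items/red-mug">Details</a>
    </div>
  </body>
</html>
"""


def extract_products(markup):
    """Return one dict per <div class="product"> element."""
    root = ET.fromstring(markup.strip())
    products = []
    # ".//div[@class='product']" matches product divs at any depth.
    for node in root.findall(".//div[@class='product']"):
        products.append({
            "name": node.findtext("h2"),
            "price": float(node.findtext("span[@class='price']")),
            "url": node.find("a").get("href"),
        })
    return products
```

The expression `.//div[@class='product']` selects the container elements first, and the shorter relative paths then pick out fields inside each one. Scoping queries to a container this way tends to survive small layout changes better than long absolute paths.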

Challenges in Scraping Website Content

Despite its advantages, scraping website content comes with its own set of challenges. Websites may implement anti-scraping technologies, such as CAPTCHAs or IP blocking, to thwart automated data collection processes. This can hinder access to vital information and require web scrapers to adapt and refine their techniques continually.

Furthermore, maintaining data accuracy and timeliness can be a struggle. Websites frequently update their layouts and structures, which can break scraping scripts and require ongoing maintenance. Therefore, staying updated with both technological advances and the evolving landscape of target websites is critical for successful scraping endeavors.

Ethics and Legal Considerations in Web Scraping

In the realm of web scraping, ethics and legal considerations play a significant role in shaping best practices. It is essential for web scrapers to be aware of the legal landscape surrounding data collection, as some websites explicitly prohibit scraping through their terms of service. Ignoring these prohibitions can lead to legal consequences, including lawsuits or bans from accessing specific sites.

Moreover, ethical considerations should guide anyone involved in web scraping. Limiting the frequency and volume of requests to a server and respecting robots.txt files demonstrate a commitment to responsible scraping. These practices keep the scraper from degrading the site for other visitors and uphold the rights of website owners.
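Both practices can be sketched with Python’s standard library. The example below is a minimal sketch under stated assumptions: the `ROBOTS_TXT` contents, the `example-bot` user agent, and the helper functions `build_robots`, `allowed_urls`, and `polite_fetch_all` are hypothetical. The rules are parsed from an in-memory string so the example runs offline; a real scraper would load the file from the target site instead.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_robots(text):
    """Parse robots.txt rules from a string."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def allowed_urls(parser, user_agent, urls):
    """Keep only the URLs that the rules permit for this user agent."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


def polite_fetch_all(urls, fetch, delay_seconds):
    """Fetch URLs one at a time, pausing between consecutive requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            time.sleep(delay_seconds)
        results.append(fetch(url))
    return results
```

A caller might filter candidate URLs with `allowed_urls(...)` and then pass `parser.crawl_delay("example-bot")` as the delay, falling back to a conservative default when the site does not declare one.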

Tools and Resources for Efficient Web Scraping

To streamline the web scraping process, many tools and resources are available for both beginners and advanced users. Scrapy, a crawling framework, and Beautiful Soup, a parsing library, cover most needs for extracting data from HTML documents. Additionally, browser extensions such as Web Scraper offer user-friendly interfaces for straightforward data collection.

Moreover, cloud-based scraping tools can make data gathering more efficient. These platforms provide high-throughput scraping along with features such as scheduling and proxy management, which help users collect large datasets reliably. By using these technologies, individuals and organizations can significantly improve their data mining operations.

Maximizing Efficiency in Data Collection

Maximizing efficiency in data collection is crucial for organizations seeking to leverage information from web sources. Implementing batch scraping techniques allows users to collect data in bulk, significantly reducing the time spent on manual extraction. Moreover, integrating automated workflows with scheduling capabilities can ensure the continuous collection of updated data without human intervention.

Additionally, parallel scraping can further improve efficiency. Because most scraping time is spent waiting on network responses, using multiple threads to fetch pages simultaneously can dramatically shorten the time required to gather extensive datasets. This method is particularly useful for large-scale projects that require timely data for analysis, provided the combined request rate stays within what the target site can tolerate.
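A thread pool is one common way to implement this. The sketch below is illustrative only: `fake_fetch` is a hypothetical stand-in that sleeps briefly to imitate network latency, and `scrape_in_parallel` is an assumed helper name, not part of any library.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_fetch(url):
    """Hypothetical stand-in for a blocking HTTP request."""
    time.sleep(0.05)  # imitate network latency
    return f"<html><title>{url}</title></html>"


def scrape_in_parallel(urls, fetch, max_workers=4):
    """Fetch all URLs using a pool of worker threads.

    Executor.map() yields results in input order, so zipping them
    back onto the URL list pairs each page with its source.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(zip(urls, pool.map(fetch, urls)))
```

Threads suit this workload because each worker spends most of its time waiting on I/O. Keeping `max_workers` small is a simple way to bound how many requests hit the same server at once.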

Future Trends in Web Scraping

The landscape of web scraping is continuously evolving, driven by advancements in technology and changes in data availability. Future trends are expected to include increased automation through artificial intelligence and machine learning, allowing for smarter and more adaptive scraping techniques. These technologies will enhance the ability to discern relevant data from a myriad of web sources.

Moreover, as businesses increasingly recognize the value of big data, the demand for sophisticated scraping solutions is likely to grow. Headless browsers, which render JavaScript-driven pages without a visible window, and more capable machine learning models will help users extract insights from complex web environments. Keeping abreast of these trends will be essential for organizations that want to use web data to drive their success.

Frequently Asked Questions

What is web scraping and how does it relate to extracting information?

Web scraping is the process of programmatically extracting information from websites. A script or tool fetches pages, parses their HTML content, and collects the data of interest, enabling users to gather large amounts of website data efficiently.

Is web scraping legal for collecting website data?

The legality of web scraping website data varies by website and jurisdiction. It’s important to review a website’s terms of service and robots.txt file before engaging in data extraction to ensure compliance.

What tools are commonly used for HTML content extraction?

Popular tools for HTML content extraction include Python libraries like Beautiful Soup and Scrapy, which facilitate web scraping by simplifying the parsing of HTML and data extraction processes.

How can I use web scraping techniques for data extraction?

To use web scraping techniques for data extraction, you typically start by selecting a target website, then implement code to access and parse the HTML content, finally storing the extracted data in a desired format like CSV or JSON.
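The final storage step might look like the following sketch, which uses only the standard-library `csv` and `json` modules. The `RECORDS` data and the `to_csv` and `to_json` helper names are made up for illustration, and the output is written to strings rather than files to keep the example self-contained.

```python
import csv
import io
import json

# Hypothetical records, as a scraper might produce after parsing.
RECORDS = [
    {"name": "Blue Mug", "price": 12.5},
    {"name": "Red Mug", "price": 9.99},
]


def to_csv(records, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialize a list of dicts to indented JSON text."""
    return json.dumps(records, indent=2)
```

CSV works well for flat, tabular records that will be opened in a spreadsheet, while JSON preserves nesting and data types, so the choice usually depends on what will consume the data.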

What are the ethical considerations for website data collection via scraping?

When collecting website data via scraping, it’s essential to consider ethical implications, such as respecting copyright laws, avoiding overloading servers with requests, and adhering to data privacy regulations.

Can web scraping be used for real-time data collection?

Yes, web scraping can be used for near-real-time data collection. A scraper that runs on a frequent schedule automatically gathers updated information from websites, which is especially useful for market research and competitive analysis.

What challenges might arise during website data collection?

Challenges during website data collection can include handling CAPTCHAs, navigating dynamic content, dealing with rate limiting by websites, and ensuring data accuracy and consistency after extraction.

How do I ensure accurate data extraction from a website?

To ensure accurate data extraction from a website, implement robust error handling in your scraping code, validate the extracted data against predefined criteria, and keep track of changes in the website’s structure.
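One way to apply these checks is sketched below. The record fields (`name`, `price`), the validation rules, and the function names `validate_record` and `split_valid` are assumptions chosen for the example; real criteria depend on the data being collected.

```python
def validate_record(record):
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    name = record.get("name")
    if not isinstance(name, str) or not name.strip():
        problems.append("missing name")
    try:
        price = float(record.get("price"))
    except (TypeError, ValueError):
        # Covers a missing field (None) and non-numeric text such as "N/A".
        problems.append("price is not a number")
    else:
        if price <= 0:
            problems.append("price must be positive")
    return problems


def split_valid(records):
    """Separate records that pass validation from those that do not."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected
```

A sudden rise in rejected records is often the first sign that the target site has changed its layout, so logging the rejection reasons doubles as a cheap way to track structural changes.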

What are some common uses for extracted data from web scraping?

Extracted data from web scraping is commonly used for market research, price comparison, sentiment analysis, lead generation, and content aggregation, providing valuable insights across various industries.

What programming languages are best for web scraping and data extraction?

Python is one of the most popular programming languages for web scraping and data extraction because of its comprehensive libraries, ease of use, and strong community support. Other languages, such as JavaScript and R, are also used depending on specific project requirements.

| Key Point | Details |
|---|---|
| Access to External Websites | Cannot access websites like nytimes.com directly. |
| Scraping Content | Need specific HTML or article details to extract info. |
| Help Provided | Can summarize or extract information from provided content. |

Summary

Scraping website content can be challenging, especially when a website such as nytimes.com cannot be accessed directly. However, when specific HTML content or article details are provided, useful information can still be extracted effectively. This process involves understanding the structure of web pages and using appropriate tools to retrieve the desired data.