Scraping Content: Ethical Methods for Data Extraction

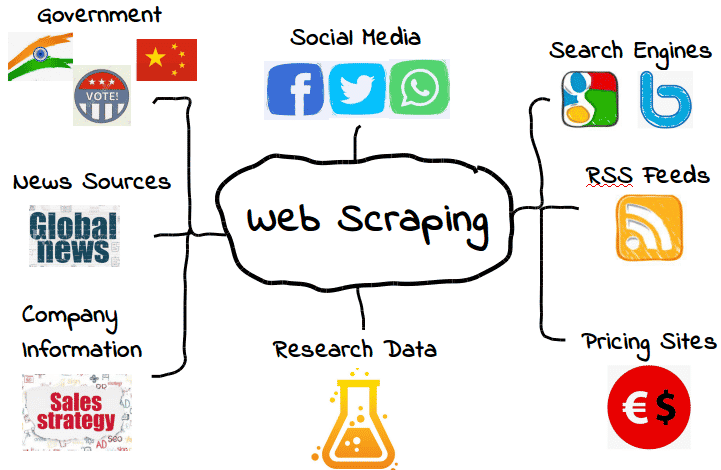

Scraping content has become a common practice for gathering information online. Techniques such as web scraping allow users to extract data from websites like the New York Times, providing insights that can inform decision-making and research. However, it is crucial to approach content scraping ethically and to avoid violating a site’s terms of service. This article explores various data scraping techniques, discusses the importance of ethical web scraping practices, and offers tips for using web scraping tools responsibly.

When we talk about retrieving information from online resources, a variety of terms come into play, including data harvesting, web scraping, and information extraction. These methods enable individuals and organizations to collect and analyze data from a multitude of websites, including renowned sources like the New York Times. Understanding how to harness these powerful data scraping techniques can significantly impact research projects and business strategies. However, it’s vital to navigate the complexities of this process ethically, ensuring compliance with website policies and legal frameworks. This discussion will illuminate the foundational concepts of content gathering and the nuances involved in the ethical extraction of data across the web.

Understanding Web Scraping and Its Implications

Web scraping is a powerful technique used to extract data from websites. However, it’s essential to understand the legal implications surrounding this practice. Specifically, scraping content from websites like the New York Times may violate their terms of service, which can lead to potential legal disputes. Ethical web scraping involves ensuring compliance with a website’s rules, which includes respecting robots.txt files and usage limitations.
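As an illustration of respecting robots.txt, the following is a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt content, the `example-bot` user agent, and the `example.com` URLs are assumptions made up for this example; a real scraper would load the target site's actual file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the sketch runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

def is_allowed(parser, user_agent, url):
    """Return True if the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)

parser = build_parser(ROBOTS_TXT)
print(is_allowed(parser, "example-bot", "https://example.com/articles/1"))    # True
print(is_allowed(parser, "example-bot", "https://example.com/private/data"))  # False
print(parser.crawl_delay("example-bot"))                                      # 5
```

Against a live site, `set_url()` followed by `read()` would fetch the file instead of `parse()`. Passing a robots.txt check does not replace reading the site's terms of service.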

Furthermore, ethical web scraping practices promote a balanced relationship between data collectors and website owners. By adhering to these guidelines, web scrapers can avoid penalties and maintain a good reputation. It’s vital to consider the ethical landscape of data scraping techniques before engaging in web scraping activities, ensuring that the process respects the rights of content owners.

Data Scraping Techniques Explained

There are various data scraping techniques that are commonly used today. Techniques can range from simple HTML parsing to more advanced methods such as using APIs or browser automation tools like Puppeteer and Selenium. Each method has its own advantages and limitations, depending on the website’s structure, the type of content being scraped, and the volume of data needed.

Choosing the right data scraping technique can significantly impact the efficiency and legality of the process. For instance, using an official API is often the most compliant method, because the site’s operator publishes the interface and sets the terms under which data may be requested, typically with an API key and rate limits. In contrast, scraping HTML might be riskier if a website’s terms prohibit it, underscoring the importance of understanding the context and rules around data extraction.
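To make the API route concrete, here is a small sketch of building a request URL and reading a JSON response. Everything specific in it is hypothetical: the `api.example.com` endpoint, the `q`, `page`, and `api-key` parameters, and the shape of the sample payload are assumptions for illustration, not any real publisher's API.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint; a real API documents its own URL and parameters.
API_BASE = "https://api.example.com/v1/articles"

def build_request_url(query, page, api_key):
    """Build a query URL with properly encoded parameters."""
    params = {"q": query, "page": page, "api-key": api_key}
    return f"{API_BASE}?{urlencode(params)}"

def parse_articles(payload):
    """Keep only the fields we need from a JSON response body."""
    data = json.loads(payload)
    return [
        {"title": item["title"], "url": item["url"]}
        for item in data.get("results", [])
    ]

# Hypothetical response body, inlined so the sketch runs offline.
SAMPLE = '{"results": [{"title": "Example headline", "url": "https://example.com/a1", "extra": 1}]}'

print(build_request_url("web scraping", 1, "YOUR_KEY"))
print(parse_articles(SAMPLE))
```

Because the response is already structured, there is no HTML to parse, which is one reason an API tends to be less brittle than scraping page markup.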

The Importance of Ethical Web Scraping

Ethical web scraping stands at the forefront of data collection practices today. As the digital landscape evolves, so do the guidelines that govern how data is sourced and compiled. Ethical practices include obtaining consent when required and avoiding methods such as aggressive scraping that can overload servers, leading to service disruptions.

Implementing ethical web scraping not only fosters trust with content providers but also contributes to the overall integrity of the data collection industry. The responsibility lies with data scrapers to adhere to these practices, ensuring their activities do not infringe on the rights of content creators or violate legislative standards surrounding data usage.

Exploring Website Data Extraction Methods

Website data extraction methods vary widely, depending on the intended use and available tools. Popular methods include screen scraping, where data is extracted directly from the visual representation on the screen, and HTML parsing, which involves analyzing the website’s underlying code. Each method has its specific use cases, advantages, and challenges.
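HTML parsing can be sketched with nothing more than Python's standard `html.parser` module. The sample markup and the `headline` class name below are assumptions for illustration; real pages need selectors matched to their own structure, and libraries such as Beautiful Soup make this more convenient.

```python
from html.parser import HTMLParser

# Hypothetical page markup, inlined so the sketch runs offline.
SAMPLE_HTML = """
<html><body>
  <h2 class="headline">First story</h2>
  <p>Body text.</p>
  <h2 class="headline">Second story</h2>
</body></html>
"""

class HeadlineParser(HTMLParser):
    """Collect the text inside <h2 class="headline"> elements."""

    def __init__(self):
        super().__init__()
        self.headlines = []
        self._in_headline = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs.
        if tag == "h2" and ("class", "headline") in attrs:
            self._in_headline = True
            self.headlines.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_headline = False

    def handle_data(self, data):
        if self._in_headline:
            self.headlines[-1] += data

def extract_headlines(html):
    parser = HeadlineParser()
    parser.feed(html)
    return [h.strip() for h in parser.headlines]

print(extract_headlines(SAMPLE_HTML))  # ['First story', 'Second story']
```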

For businesses and researchers, the choice of website data extraction method can affect the quality and accuracy of the data collected. Scrapers must consider not only the technical aspects of their chosen extraction method but also the feasibility of obtaining and utilizing data in a legally and ethically sound manner.

Legal Considerations in Scraping Content

When engaging in web scraping, particularly of established publications like the New York Times, it’s crucial to consider the legal ramifications. Many websites explicitly state their data usage policies, and failing to comply could lead to legal repercussions. Scrapers must familiarize themselves with copyright laws and the specific terms of service of any website they plan to scrape.

Ignoring these legal considerations not only risks legal action but can also tarnish a scraper’s reputation. Data scraping should always be approached with caution, ensuring that all activities are within the boundaries of the law and ethical standards. Understanding the laws surrounding data extraction can protect scrapers and promote responsible data use.

Security Challenges in Web Scraping

Security challenges present a significant hurdle in the web scraping process. Many websites employ robust security measures, such as CAPTCHA systems, to prevent unauthorized scraping of their content. Such measures signal that the site does not want automated access, and working around them demands significant technical effort while raising the legal and ethical stakes for would-be scrapers.

Moreover, frequent changes to website structures can lead to broken scrapers, necessitating regular updates to scraping scripts. Scrapers need to take these challenges into account, ensuring that their approaches are adaptable and resilient against evolving security measures and underlying web technologies.

Best Practices for Effective Scraping

To conduct web scraping successfully, it’s important to follow best practices that maximize efficiency and compliance. Setting appropriate scraping intervals can help ensure that the website’s server isn’t overwhelmed, while also remaining respectful of the site’s structure and data retrieval specifications.
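One way to enforce a scraping interval is a small throttle that sleeps until a minimum time has passed since the previous request. This is a sketch under stated assumptions: the `Throttle` class, the 0.1 second interval, and the `example.com` URLs are illustrative, and the actual request is left as a placeholder. A suitable interval depends on the site, including any crawl delay it publishes.

```python
import time

class Throttle:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock   # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last = None     # time of the previous request, if any

    def wait(self):
        """Sleep if needed; return the number of seconds slept."""
        waited = 0.0
        if self._last is not None:
            remaining = self.min_interval - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        self._last = self._clock()
        return waited

# Short interval so the demo finishes quickly; real scrapers use far longer ones.
throttle = Throttle(min_interval=0.1)
for url in ["https://example.com/1", "https://example.com/2", "https://example.com/3"]:
    throttle.wait()
    print("would fetch", url)  # placeholder for the actual request
```

Keeping the clock and sleep function injectable is a design choice that lets the timing logic be verified deterministically.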

Moreover, maintaining a clean data pipeline—by validating, cleaning, and periodically reviewing the scraped data—ensures that the output is valuable and reliable. These practices not only optimize the scraping process but also contribute to creating a more sustainable approach to data extraction.
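The validate-and-clean step might look like the sketch below. The record shape (a `title` and a `url`) and the rules applied (collapse whitespace, require an http or https URL, drop duplicate URLs) are assumptions chosen for illustration; a real pipeline would encode its own schema.

```python
def clean_record(raw):
    """Normalize one scraped record, or return None if it is unusable."""
    title = " ".join(str(raw.get("title", "")).split())  # collapse whitespace
    url = str(raw.get("url", "")).strip()
    if not title or not url.startswith(("http://", "https://")):
        return None
    return {"title": title, "url": url}

def clean_records(raw_records):
    """Clean all records, dropping invalid ones and duplicate URLs."""
    seen = set()
    cleaned = []
    for raw in raw_records:
        record = clean_record(raw)
        if record is None or record["url"] in seen:
            continue
        seen.add(record["url"])
        cleaned.append(record)
    return cleaned

# Hypothetical scraped output with typical defects.
RAW = [
    {"title": "  Example   headline ", "url": "https://example.com/a"},
    {"title": "", "url": "https://example.com/b"},           # missing title
    {"title": "Duplicate", "url": "https://example.com/a"},  # repeated URL
    {"title": "No scheme", "url": "example.com/c"},          # invalid URL
]

print(clean_records(RAW))
# [{'title': 'Example headline', 'url': 'https://example.com/a'}]
```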

Tools and Resources for Web Scrapers

Numerous tools and resources are available for web scrapers looking to optimize their data extraction efforts. Popular programming libraries like Beautiful Soup and Scrapy in Python provide powerful features for parsing and managing data. Additionally, services like Octoparse or ParseHub offer user-friendly interfaces for those who may not possess coding skills.

Engaging with online communities and forums can also be invaluable for web scrapers, allowing them to share insights, troubleshoot issues, and stay updated on the latest developments in web scraping tools and techniques. Leveraging these resources enhances the overall effectiveness and knowledge base within the scraping community.

Future Trends in Data Scraping

As technology advances, the future of data scraping continues to evolve. Emerging trends include the increasing use of machine learning algorithms to facilitate more intelligent data extraction and processing workflows. Such advancements can potentially streamline scraping processes, reducing the need for extensive manual oversight and adjustments.

Additionally, as data privacy regulations become stricter worldwide, web scrapers will need to adapt to remain compliant with changing legal landscapes. Ongoing dialogue about ethical web scraping practices will shape the industry, ensuring that data collectors are guided by principles that protect both data rights and ownership.

Frequently Asked Questions

What is web scraping and how does it work?

Web scraping is an automated data extraction technique that enables users to collect content from websites. It involves fetching a web page’s HTML and parsing the data to extract specific information, such as articles or product prices. Various data scraping techniques can enhance the extraction process, allowing for efficient collection of necessary data.

Are there any ethical considerations when performing web scraping?

Yes, ethical web scraping is crucial to ensure compliance with a website’s terms of service. It involves respecting a site’s robots.txt file, limiting request rates to avoid overloading servers, and ensuring that the data extracted does not infringe on copyrights or privacy. Ethical practices help maintain a positive relationship between data scrapers and content owners.

Can I scrape content from the New York Times website?

Scraping content from the New York Times or any other specific website may violate its terms of service, which often prohibit automated collection of content. It’s essential to review the site’s terms of service and robots.txt file before attempting to extract any website data.

What are some common data scraping techniques?

Common data scraping techniques include HTML parsing, web crawling, and API integration. HTML parsing involves extracting data directly from the source code of a webpage, while web crawling automates navigation through web pages, gathering data as it goes. Utilizing APIs, when available, is often the most ethical and efficient approach for website data extraction.
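The crawling technique described above can be sketched as a breadth-first traversal that extracts links, stays on one domain, and stops at a page limit. To keep the example offline, pages come from a hypothetical in-memory `PAGES` dictionary and the `fetch` argument is a stand-in for a real HTTP client; all names and URLs here are illustrative assumptions.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Hypothetical site, held in memory so the sketch runs offline.
PAGES = {
    "https://example.com/": '<a href="/a">A</a> <a href="https://other.example.org/x">ext</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/">home</a>',
    "https://example.com/b": "no links",
}

class LinkParser(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

def extract_links(base_url, html):
    """Return absolute URLs for every link on the page."""
    parser = LinkParser()
    parser.feed(html)
    return [urljoin(base_url, href) for href in parser.links]

def crawl(start_url, fetch, max_pages=10):
    """Breadth-first crawl restricted to the start URL's domain."""
    domain = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)  # returns None when the page is unavailable
        if html is None:
            continue
        visited.append(url)
        for link in extract_links(url, html):
            if urlparse(link).netloc == domain and link not in seen:
                seen.add(link)
                queue.append(link)
    return visited

print(crawl("https://example.com/", PAGES.get))
# ['https://example.com/', 'https://example.com/a', 'https://example.com/b']
```

A production crawler would also check robots.txt and pause between requests before each fetch.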

What tools can I use for ethical web scraping?

Numerous tools support ethical web scraping, including Python libraries like Beautiful Soup and Scrapy, as well as browser extensions such as Web Scraper. Used carefully, these tools let users extract information responsibly by controlling request rates and following robots.txt guidelines.

How can I summarize web scraping content efficiently?

To summarize web scraping content efficiently, focus on extracting only the most relevant data points and utilizing tools that automate the process. Data visualization techniques can also aid in presenting summarized data clearly, enhancing understanding without overwhelming the audience.

Is website data extraction legal?

The legality of website data extraction varies by jurisdiction and depends on factors such as the site’s terms of service, copyright law, and, where personal data is involved, privacy regulations. Always review a website’s terms before scraping to ensure compliance and avoid legal issues.

| Key Points |
|---|
| Scraping content from specific websites such as The New York Times may be restricted by server access controls and terms of service. |
| Summaries or general information on a topic can serve as an alternative when direct scraping is not permitted. |

Summary

Scraping content from websites like The New York Times is a sensitive topic that involves issues of access and copyright. Many websites have terms of service that prohibit unauthorized scraping. Where that is the case, working from summaries or general information can still provide valuable insights without violating those terms.