Extract HTML Content: Tools and Techniques Explained

Extracting HTML content is a crucial process for anyone looking to analyze and repurpose information available on the web. Whether you’re a developer or a researcher, understanding how to extract content from HTML can streamline your workflow and enhance data-driven decisions. By utilizing various HTML scraping techniques, you can efficiently retrieve relevant data from websites, enabling you to gather insights and information quickly. There are numerous web content extraction tools available that make this task even easier, often requiring minimal coding knowledge. In this guide, we’ll explore effective strategies for analyzing HTML content, ensuring you gain the most from your web scraping endeavors.

Retrieving data from the internet goes by several names, including web page content extraction, data scraping, and information retrieval from web documents. All of them describe pulling specific data points out of HTML structures to support more informed analysis and decision-making. Tools and techniques for parsing HTML make it significantly easier to collect and use web-based content, whatever your technical background. Whether you are interested in market research or academic studies, mastering these techniques will help you extract and analyze online content more effectively.

Understanding HTML Content Extraction

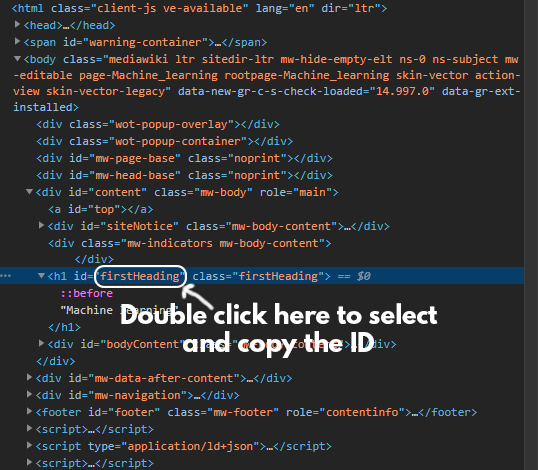

HTML content extraction is the process of retrieving useful information from HTML documents available on the web. This involves analyzing the structure of HTML to identify key elements such as headings, paragraphs, images, and links. The goal is to extract valuable data for further analysis, web development, or research purposes. Familiarity with HTML tags, attributes, and DOM (Document Object Model) can significantly improve one’s ability to extract relevant content efficiently.

To successfully extract HTML content, you need a strategic approach that combines both technical knowledge and the right tools. Beginners might find it useful to start with web scraping tools that offer user-friendly interfaces, which can automate the extraction of content from multiple pages. Once comfortable, you can dive deeper into writing scripts in languages like Python, leveraging libraries such as Beautiful Soup or Scrapy, which demand a better understanding of coding and HTML structure.
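As a rough illustration of the elements described above, here is a minimal sketch that collects headings, paragraphs, image sources, and link targets from a small HTML snippet. It uses Python’s standard-library `html.parser` rather than Beautiful Soup or Scrapy so that it runs without installing anything; the class name `ElementCollector` and the sample markup are assumptions made up for this example.

```python
from html.parser import HTMLParser


class ElementCollector(HTMLParser):
    """Collects heading text, paragraph text, image sources, and link targets."""

    TEXT_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6", "p"}

    def __init__(self):
        super().__init__()
        self.headings = []
        self.paragraphs = []
        self.images = []
        self.links = []
        self._current = None  # heading or paragraph tag currently being read
        self._buffer = []     # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in self.TEXT_TAGS:
            self._current = tag
            self._buffer = []
        elif tag == "img" and attrs.get("src"):
            self.images.append(attrs["src"])
        elif tag == "a" and attrs.get("href"):
            self.links.append(attrs["href"])

    def handle_data(self, data):
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            # Join the fragments and collapse runs of whitespace.
            text = " ".join("".join(self._buffer).split())
            if tag == "p":
                self.paragraphs.append(text)
            else:
                self.headings.append(text)
            self._current = None


# Hypothetical sample document used only for this illustration.
SAMPLE_HTML = """
<html><body>
  <h1>Quarterly Report</h1>
  <p>Revenue grew in <a href="/regions/emea">EMEA</a> this quarter.</p>
  <img src="/charts/revenue.png" alt="Revenue chart">
</body></html>
"""

collector = ElementCollector()
collector.feed(SAMPLE_HTML)
print(collector.headings, collector.paragraphs, collector.images, collector.links)
```

A dedicated library such as Beautiful Soup typically needs far less code for the same task and copes better with malformed markup, so treat this as a way to see the moving parts rather than a recommended production approach.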

HTML Scraping Techniques for Beginners

When it comes to HTML scraping techniques, there are several methods that one can employ. One of the most straightforward techniques is using browser extensions that allow users to point and click on the content they want to extract. This method is particularly advantageous for those unfamiliar with coding. Extensions like Web Scraper and Data Miner simplify the process, converting points of interest on a webpage into structured data that can be exported.

For more advanced users, using programming languages such as Python or JavaScript provides a powerful toolkit for scraping. These languages allow the creation of automated scripts that can handle various web pages, including those with dynamic content. Techniques such as utilizing libraries for parsing HTML or making HTTP requests enable users to not only scrape data but also analyze and integrate it into existing databases or applications seamlessly.

Essential Web Content Extraction Tools

There are numerous web content extraction tools available that cater to different needs and levels of experience. For non-technical users, web-based scraping services like Import.io or Octoparse offer an intuitive interface that allows for easy data extraction without requiring programming knowledge. These tools often come with features that allow users to schedule scraping tasks and handle pagination, facilitating the extraction of large volumes of data.

On the other hand, developers looking for greater flexibility may prefer using libraries in programming languages. For instance, Beautiful Soup in Python allows for easy navigation, searching, and modification of the parse tree, making it an invaluable resource for HTML content extraction. By integrating these libraries with API calls or storing extracted data into CSV files, users can create robust data pipelines.
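The idea of feeding extracted data into CSV can be sketched as follows. This is a minimal example under stated assumptions: it relies on the standard-library `html.parser` and `csv` modules instead of Beautiful Soup, and the names `LinkExtractor` and `links_to_csv` are hypothetical helpers invented for illustration.

```python
import csv
import io
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects (anchor text, href) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._href = None  # href of the link currently being read, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.rows.append(("".join(self._text).strip(), self._href))
            self._href = None


def links_to_csv(html):
    """Return the links found in `html` as CSV text with a header row."""
    parser = LinkExtractor()
    parser.feed(html)
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["text", "href"])
    writer.writerows(parser.rows)
    return out.getvalue()


csv_text = links_to_csv('<a href="/a">First</a> <a href="/b">Second, page</a>')
print(csv_text)
```

Writing to an in-memory buffer keeps the example side-effect free; in a real pipeline you would more likely open a file with `newline=""` and pass it to `csv.writer`.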

Analyzing HTML Content for Valuable Insights

Analyzing HTML content goes beyond mere extraction; it involves interpreting the data to uncover insights that can inform decision-making. This process can include assessing the structure and metadata of HTML documents to evaluate their SEO effectiveness or content relevance. By analyzing headings, image alt texts, and anchor text, businesses can refine their online content strategy to align with user search intentions.

Furthermore, web analytics tools can be paired with HTML analysis to track how visitors respond to content changes informed by that analysis. By gathering metrics such as page visits and engagement rates, you can assess whether those changes worked and make data-driven adjustments. Combined, extraction and analysis can support better search engine performance, although rankings depend on many other factors as well.
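The heading, alt-text, and anchor-text checks mentioned above could look something like the sketch below. The list of "generic" anchor phrases and the class name `SeoAudit` are assumptions chosen for illustration, not an established SEO standard.

```python
from html.parser import HTMLParser

# Illustrative list of uninformative anchor texts; adjust to your own needs.
GENERIC_ANCHORS = {"click here", "read more", "here", "link"}


class SeoAudit(HTMLParser):
    """Counts <h1> tags and flags images without alt text and vague link text."""

    def __init__(self):
        super().__init__()
        self.h1_count = 0
        self.images_missing_alt = []
        self.generic_anchors = []
        self._in_link = False
        self._link_text = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "h1":
            self.h1_count += 1
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            self.images_missing_alt.append(attrs.get("src", ""))
        elif tag == "a":
            self._in_link = True
            self._link_text = []

    def handle_data(self, data):
        if self._in_link:
            self._link_text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._in_link:
            text = "".join(self._link_text).strip()
            if text.lower() in GENERIC_ANCHORS:
                self.generic_anchors.append(text)
            self._in_link = False


# Hypothetical page fragment used only for this illustration.
PAGE = (
    '<h1>Guide</h1>'
    '<img src="a.png"><img src="b.png" alt="Diagram">'
    '<a href="/x">Click here</a><a href="/y">Pricing details</a>'
)

audit = SeoAudit()
audit.feed(PAGE)
print(audit.h1_count, audit.images_missing_alt, audit.generic_anchors)
```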

Best Practices for Effective HTML Scraping

To ensure successful HTML scraping, adhering to best practices is essential. First, always check a website’s robots.txt file and terms of service to understand the site’s scraping policy. Respecting the rules set by website owners can save you from legal trouble and contribute to ethical web scraping practices. Additionally, managing request rates to avoid overwhelming servers is crucial; setting time intervals between requests can reduce the risk of temporary bans.
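Both checks can be sketched with Python’s standard-library `urllib.robotparser`. In this minimal example the robots.txt rules are supplied as an inline string so it runs offline; the user agent `example-bot`, the URLs, and the helper names are assumptions for illustration, and `fetch` stands in for whatever download function you actually use.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download the site's file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_parser(robots_text):
    """Parse robots.txt rules from a string."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def allowed_urls(parser, user_agent, urls):
    """Keep only the URLs that robots.txt permits for this user agent."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


def polite_delay(parser, user_agent, default=1.0):
    """Use the site's Crawl-delay if it declares one, else a default pause."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


def crawl(urls, fetch, delay_seconds):
    """Call `fetch` on each URL, pausing between consecutive requests."""
    results = []
    for index, url in enumerate(urls):
        if index:
            time.sleep(delay_seconds)
        results.append(fetch(url))
    return results


robots = build_parser(ROBOTS_TXT)
urls = ["https://example.com/articles/1", "https://example.com/private/report"]
to_fetch = allowed_urls(robots, "example-bot", urls)
delay = polite_delay(robots, "example-bot")
print(to_fetch, delay)
```

Note that robots.txt only covers crawler rules. The terms of service still need to be read separately, since they are not machine-readable in the same way.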

Another best practice is to focus on extracting structured data. This means identifying patterns within the HTML document that can be used consistently across different pages. Utilizing tools that allow for the extraction of data in formats such as JSON or XML can make it easier to integrate the data into applications and databases while maintaining its usability.
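To make the idea of a repeated pattern concrete, the sketch below turns a list of similarly structured product entries into JSON. The markup, the class names `product`, `name`, and `price`, and the `ProductParser` helper are all hypothetical; a real site will use its own structure.

```python
import json
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Turns repeated <li class="product"> blocks into dictionaries."""

    FIELDS = {"name", "price"}

    def __init__(self):
        super().__init__()
        self.records = []
        self._record = None  # dictionary for the product being read
        self._field = None   # field name the next text chunk belongs to

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li" and "product" in classes:
            self._record = {}
        elif self._record is not None:
            self._field = next((c for c in classes if c in self.FIELDS), None)

    def handle_data(self, data):
        if self._record is not None and self._field:
            self._record[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "li" and self._record is not None:
            self.records.append(self._record)
            self._record = None
        self._field = None


# Hypothetical listing markup used only for this illustration.
LISTING = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">24.99</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">39.50</span></li>
</ul>
"""

parser = ProductParser()
parser.feed(LISTING)
products_json = json.dumps(parser.records, indent=2)
print(products_json)
```

Because every page that follows the same pattern yields records with the same keys, the resulting JSON can be loaded into a database or application without per-page special cases.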

Choosing the Right Method for Content Extraction

Selecting the right method for content extraction largely depends on the type of project and the volume of data needed. For small projects, manual extraction using browser tools may be sufficient. However, for larger datasets, automated scraping with programming scripts is typically more efficient. Evaluating the complexity of the data can help you choose whether to use simple point-and-click tools or to write customized scripts.

Additionally, the complexity of the website being scraped should factor into your decision. If the pages are built with JavaScript-heavy frameworks, traditional scraping might fail, and you may need to utilize headless browsers or services that render JavaScript. Choosing the right extraction method ensures that your time and resources are utilized effectively, leading to more successful data integration.
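One way to guess whether a page depends on client-side JavaScript is to compare how much visible text the raw HTML contains against how many scripts it loads. The sketch below is only a rough heuristic under that assumption: the 200-character threshold and the names `RenderingProbe` and `looks_client_rendered` are invented for illustration, and the result should be treated as a hint rather than a reliable test.

```python
from html.parser import HTMLParser


class RenderingProbe(HTMLParser):
    """Counts <script> tags and visible text characters in raw HTML."""

    def __init__(self):
        super().__init__()
        self.script_count = 0
        self.visible_chars = 0
        self._skip_depth = 0  # greater than zero while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self.script_count += 1
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.visible_chars += len(data.strip())


def looks_client_rendered(html, min_visible_chars=200):
    """Heuristic: scripts are present but the raw HTML holds little visible text."""
    probe = RenderingProbe()
    probe.feed(html)
    return probe.script_count > 0 and probe.visible_chars < min_visible_chars


# Hypothetical pages: an empty application shell and a text-heavy static page.
spa_shell = '<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>'
static_page = "<html><body><p>" + "word " * 100 + "</p></body></html>"

print(looks_client_rendered(spa_shell), looks_client_rendered(static_page))
```

If the heuristic flags a page, that is a cue to try a headless browser or a rendering service before concluding that the data is missing.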

Troubleshooting Common HTML Scraping Issues

Despite the effectiveness of HTML scraping techniques, users often encounter common issues. One frequent problem is the presence of CAPTCHAs or other anti-bot measures that block automated access. These measures usually signal that a site wants to limit automated traffic, so the responsible response is to slow your request rate, look for an official API, or ask the site owner for permission. Where automated access is allowed, techniques like browser emulation can help your scraper load pages the way a regular visitor’s browser would.

Another common issue arises from the inconsistency of HTML structures across different pages of a website. Regularly updating your scraping scripts to accommodate any changes in the HTML layout is crucial. It’s a good practice to build robust error-handling within your scripts that can alert you to changes or failures in the data extraction process, thus allowing for timely adjustments.
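The error-handling idea can be sketched as a validation step that runs after extraction. In this minimal example, `REQUIRED_FIELDS`, `LayoutChangedError`, and the stand-in `extract` function are assumptions for illustration; your own scraper would supply its real extraction logic and field list.

```python
import logging

logger = logging.getLogger("scraper")


class LayoutChangedError(Exception):
    """Raised when a page no longer yields the fields the scraper expects."""


# Hypothetical list of fields every extracted record must contain.
REQUIRED_FIELDS = ("title", "price")


def validate_record(record, url):
    """Return the record unchanged, or raise if a required field is empty."""
    missing = [field for field in REQUIRED_FIELDS if not record.get(field)]
    if missing:
        raise LayoutChangedError(f"{url}: missing fields {missing}")
    return record


def process(pages, extract):
    """Extract every page, logging and collecting the URLs that fail validation."""
    good, failed = [], []
    for url, html in pages:
        try:
            good.append(validate_record(extract(html), url))
        except LayoutChangedError as exc:
            logger.warning("Possible layout change: %s", exc)
            failed.append(url)
    return good, failed


def extract(html):
    """Stand-in extractor: pretends the price disappears when the markup changes."""
    record = {"title": "Kettle"}
    if 'class="price"' in html:
        record["price"] = "24.99"
    return record


pages = [
    ("https://example.com/p/1", '<span class="price">24.99</span>'),
    ("https://example.com/p/2", '<span class="cost">24.99</span>'),
]
good, failed = process(pages, extract)
print(good, failed)
```

Collecting failures instead of stopping at the first one lets a long run finish while still telling you which pages need an updated selector.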

Ethical Considerations in Web Scraping

As web scraping becomes more prevalent, ethical considerations cannot be overlooked. Always ensure that scraping practices align with legal standards and respect intellectual property rights. Unauthorized scraping can lead to legal consequences and damage relationships with web content providers. Understanding the balance between data access for legitimate purposes and respecting the rights of content owners is essential for any web scraper.

Moreover, transparency in data collection practices can help build trust with users and website administrators. Sharing information about what data is being collected and how it will be used fosters a collaborative environment that benefits both parties. Ethical scraping practices contribute not only to the integrity of your project but also to a more sustainable web environment.

Future of HTML Content Extraction

The future of HTML content extraction is poised for transformation, driven by advancements in AI and machine learning. These technologies promise to automate and enhance the capabilities of web scraping tools, enabling more efficient and intelligent data extraction processes. As algorithms become increasingly proficient in recognizing patterns and making decisions based on data, the accuracy and relevancy of extracted content are expected to improve significantly.

Moreover, as privacy regulations tighten, the importance of scraping ethically and responsibly will continue to rise. Developers and businesses will need to stay updated on legal frameworks and data protection practices. The evolution of web technologies, including client-side rendering and APIs, further complicates the landscape, requiring scrapers to adapt continually to meet evolving user demands.

Frequently Asked Questions

How do you extract content from HTML effectively?

To extract content from HTML effectively, you can use various HTML scraping techniques such as utilizing libraries like Beautiful Soup in Python, which allows you to parse HTML documents and navigate the document tree to extract the desired elements. Alternatively, JavaScript-based tools like Puppeteer enable you to automate browsing and scrape dynamic web pages.

What are some common HTML scraping techniques?

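Common HTML scraping techniques include using regular expressions to match simple, predictable patterns in HTML code, traversing the DOM with JavaScript to access elements, and leveraging web scraping frameworks like Scrapy. Regular expressions tend to break on nested or irregular markup, so a real HTML parser is usually the safer choice for anything beyond small, fixed snippets. Each of these techniques can help in extracting structured data from unstructured HTML content.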

What tools are available for web content extraction?

There are numerous web content extraction tools available, including Octoparse, ParseHub, and WebHarvy. These tools often come with user-friendly interfaces for non-coders and offer powerful features for sorting, filtering, and exporting the extracted HTML data.

How do you analyze HTML content for critical data?

Analyzing HTML content for critical data involves identifying the specific HTML tags that contain the desired information, such as headings, paragraphs, and links. Query languages such as XPath and CSS selectors can be used alongside scraping libraries to locate these elements precisely and extract their text or attributes.
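As a small illustration of path-based selection, the sketch below uses the limited XPath subset supported by Python’s standard-library `xml.etree.ElementTree`. It assumes the markup is well-formed XML, which real-world HTML often is not; the sample document is hypothetical, and in practice a library with full XPath or CSS selector support would usually be a better fit.

```python
import xml.etree.ElementTree as ET

# Hypothetical well-formed snippet; ElementTree rejects malformed HTML.
XHTML = """
<html><body>
  <div class="article">
    <h2>First post</h2>
    <a href="/posts/1">Read</a>
  </div>
  <div class="sidebar"><a href="/about">About</a></div>
</body></html>
"""

root = ET.fromstring(XHTML.strip())

# Select only elements inside the div whose class attribute is exactly "article".
article_titles = [h.text for h in root.findall(".//div[@class='article']/h2")]
article_links = [a.get("href") for a in root.findall(".//div[@class='article']/a")]

print(article_titles, article_links)
```

The predicate `[@class='article']` is what keeps the sidebar link out of the results, which is the kind of precision that tag names alone cannot provide.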

Is it legal to use web scraping for extracting HTML content?

The legality of using web scraping to extract HTML content can vary based on the website’s terms of service and copyright laws. It’s essential to review the site’s terms and to consider factors like public versus private content. Always ensure compliance with ethical scraping practices.

What is the best way to handle large amounts of HTML content?

To handle large amounts of HTML content, use scalable web scraping frameworks like Scrapy that can efficiently handle multiple requests and parse large data sets. Employing techniques like data chunking or leveraging cloud services can also optimize the extraction process.
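Data chunking can be as simple as processing URLs or documents in fixed-size batches so that memory use stays bounded. The helper name `chunked` and the batch size below are assumptions for illustration; frameworks like Scrapy handle scheduling in their own way.

```python
from itertools import islice


def chunked(iterable, size):
    """Yield successive lists of at most `size` items from `iterable`."""
    iterator = iter(iterable)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


# Hypothetical page identifiers standing in for a large list of URLs.
page_ids = range(5)
batches = list(chunked(page_ids, 2))
print(batches)
```

Because `chunked` is a generator, each batch can be parsed and written out before the next one is loaded, which matters once the input no longer fits comfortably in memory.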
