Extract Content from a Webpage: Simple Methods Explained

Extracting content from a webpage is a valuable skill, especially for anyone interested in web scraping. Whether for research, data analysis, or competitive intelligence, knowing how to scrape websites effectively saves time and effort. A range of content extraction tools now simplifies the process and lets users gather the information they need quickly. With so much data available online, choosing the right scraping method is essential for accurate, relevant results. This guide covers effective strategies for extracting data from the web and best practices for getting the most out of them.

Web data extraction, the practice of harvesting information from online resources, has become essential for businesses and researchers alike. Data gathered from the internet can inform decisions and reveal market trends, and the right scraping methods and tools let users collect and analyze large amounts of it efficiently. Whether you write basic code or rely on dedicated content extraction services, understanding the available approaches is key to refining your scraping efforts. The sections below cover practical approaches and the technologies that support them.

Understanding Web Scraping Techniques

Web scraping techniques are the methods used to extract data from websites. They range from simple copy-pasting to more involved procedures that require programming. Understanding them helps you gather data efficiently for applications such as market research, competitive analysis, or personal data management. Common methods include parsing HTML directly, querying APIs, and running automated tools or scripts that mimic user behavior to collect information.

When exploring web scraping techniques, it’s crucial to consider the ethical implications and the legalities surrounding data extraction. Websites have terms of service that may explicitly prohibit automated data extraction. Therefore, when learning how to scrape websites, it’s essential to respect these guidelines to prevent potential legal issues. By using responsible scraping techniques, such as throttling requests and focusing on publicly available data, users can ethically gather valuable insights.
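Throttling can be as simple as enforcing a minimum gap between consecutive requests. The sketch below is a minimal illustration, assuming a fixed interval; `Throttle` and `min_interval` are hypothetical names rather than part of any scraping library, and the clock and sleep functions are injectable so the behavior can be checked without actually waiting.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests.

    `min_interval` (seconds) is an illustrative value; choose one that fits
    the target site's published limits.
    """

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # timestamp of the previous request, if any

    def wait(self):
        """Block until at least `min_interval` seconds have passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

In a scraping loop you would create `throttle = Throttle(min_interval=2.0)` once and call `throttle.wait()` before every request. Using `time.monotonic` rather than `time.time` keeps the interval correct even if the system clock is adjusted while the scraper runs.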

Essential Content Extraction Tools

Content extraction tools are indispensable for anyone looking to pull data from the web effectively. These tools simplify the process of scraping and allow users to gather relevant information without extensive programming knowledge. Among popular tools are Octoparse, ParseHub, and WebHarvy, which offer user-friendly interfaces and powerful features for scraping data. They enable users to define data points, automate the extraction process, and export data in various formats.

In addition to standalone applications, several browser extensions make it easy to scrape data for specific tasks. Tools such as Data Miner and Web Scraper are good options when you need a quick extraction solution, particularly for on-the-fly scraping or for websites whose content changes frequently. Used well, these tools can significantly improve your data collection efforts.

How to Scrape Websites Effectively

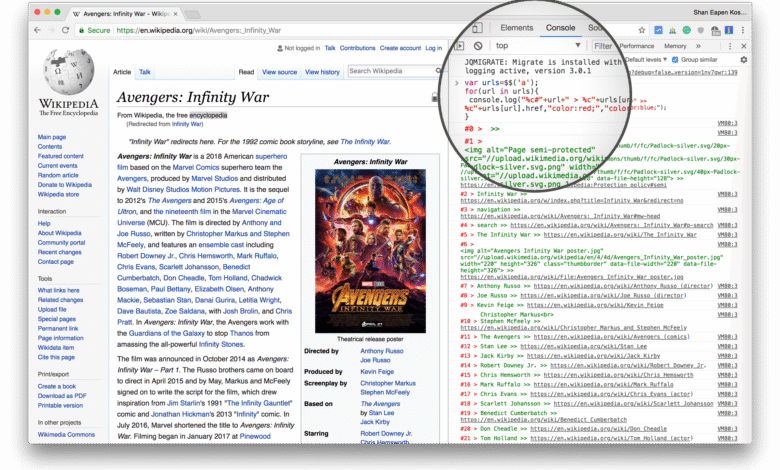

Learning how to scrape websites effectively involves a few key steps and principles. First, identify the target website and define the data points you want to extract, such as text, images, or any other information on the page. Once you know what you need, you can use methods such as HTML parsing and DOM traversal to locate and extract that data.
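As a rough sketch of HTML parsing, the example below uses Python’s built-in `html.parser` module rather than Beautiful Soup so that it runs without third-party packages. It assumes the data points you want are the text of `<h2>` headings and the `href` of every link; `ArticleParser`, `extract`, and the sample markup are hypothetical, and a real page would need its own tags and would first be downloaded (for example with `urllib.request` or Requests).

```python
from html.parser import HTMLParser


class ArticleParser(HTMLParser):
    """Collect heading text and link targets from an HTML document."""

    def __init__(self, heading_tag="h2"):
        super().__init__()
        self.heading_tag = heading_tag
        self.headings = []  # text of every <heading_tag> element
        self.links = []     # href of every <a> element
        self._in_heading = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == self.heading_tag:
            self._in_heading = True
            self._buffer = []
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == self.heading_tag and self._in_heading:
            self.headings.append("".join(self._buffer).strip())
            self._in_heading = False

    def handle_data(self, data):
        # Buffer text inside a heading, including text in nested tags like <em>.
        if self._in_heading:
            self._buffer.append(data)


def extract(html, heading_tag="h2"):
    """Parse an HTML string and return (headings, links)."""
    parser = ArticleParser(heading_tag)
    parser.feed(html)
    parser.close()
    return parser.headings, parser.links


SAMPLE = """
<html><body>
  <h2>First <em>story</em></h2>
  <p>Intro text with a <a href="/read-more">link</a>.</p>
  <h2>Second story</h2>
  <a href="https://example.com/other">Other</a>
</body></html>
"""

if __name__ == "__main__":
    headings, links = extract(SAMPLE)
    print(headings)  # ['First story', 'Second story']
    print(links)     # ['/read-more', 'https://example.com/other']
```

Because `HTMLParser` is event-driven, the parser has to track whether it is inside a heading and buffer text across nested tags. Beautiful Soup builds a full document tree instead, which makes selecting elements by class or CSS selector more convenient on complex pages.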

Once the data is extracted, process and store it properly for analysis. Depending on the complexity and volume of the data, you might store it in a database or in a simple CSV file. Knowing a programming language such as Python helps here: libraries like Beautiful Soup (for parsing HTML) and frameworks like Scrapy (for crawling and extraction pipelines) automate much of the scraping process. Data cleaning and formatting skills will also help you prepare scraped data for further analysis.
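A minimal sketch of the cleaning-and-storage step, assuming scraped records arrive as Python dicts: it normalizes whitespace, skips exact duplicates, and writes CSV with the standard `csv` module. The function names and sample records are illustrative, not part of any particular tool.

```python
import csv
import io
import re


def clean_text(value):
    """Collapse runs of whitespace (spaces, tabs, newlines) and trim the ends."""
    return re.sub(r"\s+", " ", value).strip()


def write_clean_csv(rows, fieldnames, fileobj):
    """Clean scraped records, skip exact duplicates, and write them as CSV.

    `rows` is an iterable of dicts; missing fields become empty strings.
    Returns the number of data rows written (header excluded).
    """
    writer = csv.DictWriter(fileobj, fieldnames=fieldnames)
    writer.writeheader()
    seen = set()
    written = 0
    for row in rows:
        cleaned = {name: clean_text(str(row.get(name, ""))) for name in fieldnames}
        key = tuple(cleaned[name] for name in fieldnames)
        if key in seen:
            continue  # identical record already written
        seen.add(key)
        writer.writerow(cleaned)
        written += 1
    return written


if __name__ == "__main__":
    scraped = [  # made-up records standing in for real scraper output
        {"title": "  Widget\n A ", "price": "19.99"},
        {"title": "Widget A", "price": "19.99"},
        {"title": "Gadget", "price": " 5 "},
    ]
    out = io.StringIO()
    print(write_clean_csv(scraped, ["title", "price"], out))  # 2
    print(out.getvalue())
```

To write a real file, pass `open("items.csv", "w", newline="", encoding="utf-8")` as `fileobj`; the `csv` documentation recommends `newline=""` so the writer controls line endings itself.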

Web Data Extraction for Businesses

Data extraction from web sources is a powerful tool for businesses seeking to gain insights into market trends, customer behavior, and competitor strategies. Web scraping allows companies to gather large volumes of data from various online sources, enabling them to perform comprehensive data analysis. This intelligence is helpful for making informed decisions about product development, marketing strategies, and customer engagement.

Moreover, businesses often use web scraping to monitor brand presence across different platforms, analyze competitor pricing, or track customer reviews and sentiment. By utilizing effective web scraping techniques and content extraction tools, companies can streamline their data collection processes and enhance operational efficiency. This leads to more agile business strategies and the ability to adapt quickly to changing market conditions.

Popular Content Scraping Methods

The right method for scraping content depends on the data you need. Two of the most popular are HTML scraping, in which a webpage’s HTML structure is parsed directly to extract information, and API-based extraction, in which you query a publicly available application programming interface (API) to retrieve data in a structured format such as JSON.
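To illustrate why API-based extraction is often simpler than parsing HTML, the sketch below builds a query URL and reads fields straight out of a JSON response. The endpoint, parameter names, and response shape are all hypothetical; a real API documents its own URL, authentication, and rate limits, and the response would come from an HTTP request rather than a string literal.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint used only for illustration.
API_BASE = "https://api.example.com/v1/products"


def build_query_url(base, **params):
    """Append URL-encoded query parameters to an API endpoint."""
    return f"{base}?{urlencode(params)}"


def parse_products(payload):
    """Return (name, price) pairs from a JSON response body.

    Assumes a response shaped like {"items": [{"name": ..., "price": ...}]};
    adjust the keys to whatever the real API returns.
    """
    data = json.loads(payload)
    return [(item["name"], float(item["price"])) for item in data.get("items", [])]


if __name__ == "__main__":
    print(build_query_url(API_BASE, q="laptop", page=2))
    # https://api.example.com/v1/products?q=laptop&page=2
    sample = '{"items": [{"name": "Laptop A", "price": "999.00"}, {"name": "Laptop B", "price": 849.5}]}'
    print(parse_products(sample))
    # [('Laptop A', 999.0), ('Laptop B', 849.5)]
```

Since the data already arrives as named fields, there are no selectors to maintain, so an API-based scraper is less likely to break when the site’s visual layout changes.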

Another increasingly popular method is browser automation, in which tools like Selenium drive a real browser to navigate websites. This is particularly useful for dynamic websites that require user interaction or JavaScript rendering to display content. By mastering these methods, individuals and organizations can collect and analyze data efficiently to inform their strategies.

Ethical Considerations in Web Scraping

As web scraping becomes increasingly popular, it’s essential to understand the ethical considerations that come with it. Many websites have terms of service that govern how their data can be accessed and used, and violating these terms can lead to legal action against the scraper. Review and follow these guidelines, and consider how your scraping affects website performance and user experience.

Respecting robots.txt files is another important part of ethical scraping. A site’s robots.txt file tells automated crawlers which paths they may or may not visit. Following these rules, while also respecting users’ privacy, helps keep your scraping from disrupting the website’s operations and fosters a more cooperative relationship between data collectors and content owners.
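Python’s standard library includes `urllib.robotparser` for reading robots.txt rules. This sketch parses an example file from a string so it runs offline; the rules and the `my-research-bot` user-agent are made up. Against a live site you would call `set_url()` with the site’s robots.txt address and then `read()`. `Crawl-delay` is a non-standard directive that some sites use and that this parser reports through `crawl_delay()`.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt used only for illustration.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def load_rules(robots_txt):
    """Build a RobotFileParser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = load_rules(SAMPLE_ROBOTS)
    agent = "my-research-bot"  # hypothetical user-agent string
    print(rules.can_fetch(agent, "https://example.com/articles/1"))    # True
    print(rules.can_fetch(agent, "https://example.com/private/data"))  # False
    print(rules.crawl_delay(agent))                                    # 5
```

A scraper would call `can_fetch()` before requesting each URL and skip anything disallowed, using the reported crawl delay (when present) as the minimum pause between requests.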

Best Practices for Web Scraping Projects

When undertaking web scraping projects, it’s crucial to implement best practices to ensure data quality and legal compliance. First, always define a clear objective and scope before starting the scraping process to avoid unnecessary data overload. This clarity will guide what information is needed, making the extraction process more efficient and focused.

Second, build robust error handling into your scraping scripts. Websites can change their structure or go down temporarily, either of which can break your scraper. Handling these cases proactively makes the project more resilient, minimizes disruptions, and preserves data integrity throughout collection.
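One common pattern is to retry transient failures (such as downtime) with exponential backoff, and to fail loudly when an element the scraper expects is missing (a sign the structure has changed). The sketch below assumes a caller-supplied `fetch` function and two hypothetical exception types, `TransientError` and `LayoutChanged`; map them to whatever errors your HTTP client and parser actually raise.

```python
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, HTTP 503, etc.)."""


class LayoutChanged(Exception):
    """Hypothetical error raised when an element the scraper relies on is missing."""


def fetch_with_retries(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying TransientError with exponential backoff.

    Waits base_delay, 2*base_delay, 4*base_delay, ... between attempts.
    Any other exception propagates immediately.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fetch(url)
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of retries; let the caller log or skip this URL
            sleep(base_delay * 2 ** attempt)


def require(value, what):
    """Return value, or raise LayoutChanged if it is empty or missing."""
    if not value:
        raise LayoutChanged(f"expected {what} not found; the page layout may have changed")
    return value
```

Doubling the delay after each failure gives a struggling server time to recover instead of hammering it, and raising `LayoutChanged` rather than silently saving empty fields keeps broken records out of your dataset.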

Transforming Raw Data into Actionable Insights

Extracting raw data through web scraping is only the first step; the real value lies in turning that data into actionable insights. After extraction, analyze and interpret the information to deepen your understanding and make informed decisions. Data visualization helps here by making the data easier to understand and making trends or anomalies easier to spot.
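Before charting anything, a simple numeric check can point to anomalies worth a closer look. This sketch uses a basic z-score rule built on Python’s `statistics` module, a deliberately simple stand-in for more thorough analysis; the function name, the 2.0 threshold, and the example prices are illustrative assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(values, threshold=2.0):
    """Return indices of values more than `threshold` standard deviations from the mean."""
    if len(values) < 2:
        return []  # stdev needs at least two data points
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


if __name__ == "__main__":
    prices = [10, 10, 11, 10, 9, 10, 50]  # made-up scraped prices
    print(flag_anomalies(prices))  # [6]
```

With small samples a single extreme value inflates the standard deviation, so treat flagged points as leads to investigate and plot the series to confirm what the numbers suggest.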

Furthermore, applying machine learning algorithms to scraped data can reveal patterns and support forecasts, providing even deeper insights for businesses. This not only enhances strategic planning but also helps optimize operations and improve customer satisfaction. By focusing on turning raw data into actionable insights, organizations can enhance their ability to adapt and thrive in competitive environments.

Integrating Web Scraping in Business Strategies

Integrating web scraping into business strategies can significantly improve data-driven decision making. For companies, being able to gather industry-specific data, monitor competitors, and analyze customer feedback provides a competitive edge. By incorporating accurate and timely data into their business strategies, organizations can better align their products and services with market demand.

Moreover, businesses can use insights gained from web scraping to personalize marketing efforts and understand customer needs more effectively. By analyzing customer trends and feedback, companies can innovate their offerings and refine marketing campaigns for higher engagement. Ultimately, integration of web scraping into business processes empowers organizations to become more agile and responsive to changes in the marketplace.

Frequently Asked Questions

What are some effective web scraping techniques for content extraction?

Effective techniques for content extraction include using Python tools such as the Beautiful Soup library and the Scrapy framework. These tools parse HTML and XML documents, making it easy to extract the content you want from a webpage.

What tools can I use for data extraction from web pages?

There are several content extraction tools available for data extraction from web pages, such as Octoparse, ParseHub, and Import.io. These user-friendly platforms allow you to scrape websites without extensive programming knowledge.

How do I scrape websites safely and legally?

To scrape websites safely and legally, review the site’s terms of service and check its robots.txt file to understand its scraping policies. Use rate limiting and avoid overloading the server when extracting content, in line with ethical web scraping practices.

What is the best method for scraping content from a webpage?

The best method for scraping content from a webpage depends on your technical skills and needs. For beginner-friendly scraping, use browser extensions like Web Scraper. More advanced users can write code with Python libraries such as Requests and Beautiful Soup for greater flexibility.

Can I automate web scraping for continuous data extraction?

Yes, you can automate web scraping for continuous data extraction by using tools like Apify or scheduling scripts in Python. This allows you to regularly collect updated content from websites without manual intervention.
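A minimal sketch of scheduled extraction in plain Python, assuming a hypothetical `job` callable that fetches and returns page content: it runs the job a fixed number of times at a fixed interval and uses a SHA-256 hash to tell whether the content has changed since the previous run. For production schedules, an operating-system scheduler such as cron is usually more robust than a long-running loop.

```python
import hashlib
import time


def run_periodically(job, interval_seconds, runs, sleep=time.sleep):
    """Call job() `runs` times, sleeping `interval_seconds` between calls.

    Returns the list of results; `sleep` is injectable for testing.
    """
    results = []
    for i in range(runs):
        results.append(job())
        if i < runs - 1:  # no need to wait after the final run
            sleep(interval_seconds)
    return results


def has_changed(content, previous_digest):
    """Return (changed, digest) by comparing a SHA-256 hash of the content."""
    digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
    return digest != previous_digest, digest


if __name__ == "__main__":
    pages = iter(["<p>v1</p>", "<p>v1</p>", "<p>v2</p>"])  # stand-in for real fetches
    last = None
    for snapshot in run_periodically(lambda: next(pages), interval_seconds=0.1, runs=3):
        changed, last = has_changed(snapshot, last)
        print(changed)  # prints True, then False, then True
```

Storing only the digest of the last snapshot keeps change detection cheap, so a scheduled job can skip reprocessing pages that have not been updated.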

| Method | Description | Tools/Techniques |
|---|---|---|
| Manual Copy-Paste | The simplest way to extract content from a webpage is to manually copy the text and images you need. | Web Browser, Text Editor |

Summary

Extracting content from a webpage can be done in several ways, from manual copy-paste to dedicated extraction tools and custom scripts. Choosing the method that fits your skills and the site you are working with, while respecting its terms of service and robots.txt, saves time and lets you curate the most relevant content for your needs.