Content Scraping: What You Need to Know

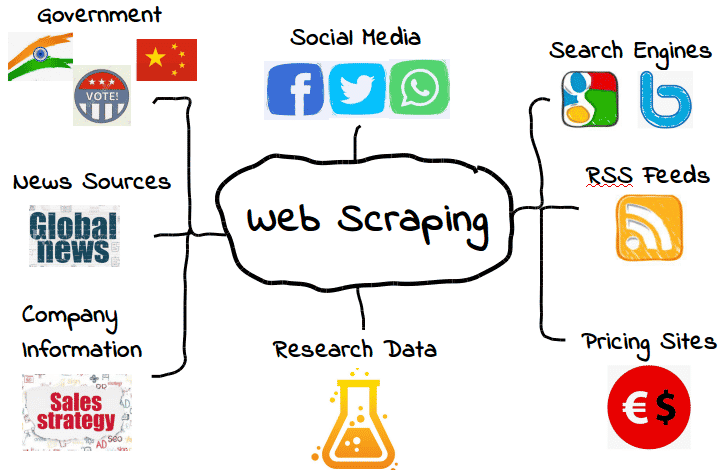

Content scraping has become a common practice for businesses and individuals who need to collect information from websites efficiently. Web scraping techniques let users extract data at scale and analyze trends that inform decision-making. However, the use of scraping tools has also sparked debate about the legal issues involved, because the line between ethical data extraction and unauthorized use of web content is a fine one. Understanding these nuances is vital for anyone looking to engage in content scraping responsibly. As the digital landscape continues to evolve, skill in data extraction can provide a competitive edge in many industries.

When discussing the extraction of information from online sources, you will also encounter alternative terms such as web harvesting and web data extraction. Data mining is sometimes used loosely as a synonym, although strictly speaking it refers to analyzing data for patterns after it has been collected. These processes use specialized software to collect digital data and streamline the retrieval of relevant information for analysis. It is equally important to address the implications of these practices, particularly the legal landscape surrounding automated data collection. The sections that follow cover the tools and technologies in this field, along with the ethical considerations that support responsible and effective use.

Understanding Content Scraping

Content scraping, often referred to as web scraping, involves extracting data from websites using automated tools or software. This technique is widely used for purposes including data analysis, competitive research, and aggregating content from multiple sources. However, while scraping can be a powerful method for data extraction, it comes with legal and ethical considerations that must be addressed to avoid infringing copyright or breaching a site’s terms of service.

Moreover, the rise of advanced scraping tools has made it easier than ever for individuals and businesses to harvest web data efficiently. These tools can navigate HTML structures, parse data, and compile it into usable formats like spreadsheets or databases. Despite their convenience, users must remain vigilant about compliance with legal standards, as unauthorized scraping of web content may lead to lawsuits or penalties from website owners.
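As a rough illustration of the parse-and-compile step described above, the following Python sketch uses only the standard library to pull items out of an HTML fragment and write them as CSV. The HTML sample, the `product` class name, and the `ProductParser` and `html_to_csv` names are assumptions made for this example; a real page would need its own selectors and error handling.

```python
import csv
import io
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect the text of every <li class="product"> element."""

    def __init__(self):
        super().__init__()
        self.in_product = False
        self.products = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) tuples
        if tag == "li" and ("class", "product") in attrs:
            self.in_product = True

    def handle_endtag(self, tag):
        if tag == "li":
            self.in_product = False

    def handle_data(self, data):
        if self.in_product and data.strip():
            self.products.append(data.strip())


# Hypothetical page fragment; a real scraper would download this.
SAMPLE_HTML = """
<ul>
  <li class="product">Widget, 9.99</li>
  <li class="product">Gadget, 24.50</li>
  <li class="ad">Sponsored link</li>
</ul>
"""


def html_to_csv(html):
    """Parse the fragment and return its product rows as CSV text."""
    parser = ProductParser()
    parser.feed(html)
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["name", "price"])
    for item in parser.products:
        # Assumes each item looks like "name, price"
        name, price = [part.strip() for part in item.split(",")]
        writer.writerow([name, price])
    return buffer.getvalue()
```

Calling `html_to_csv(SAMPLE_HTML)` returns CSV text that a spreadsheet can open directly. Libraries such as Beautiful Soup or Scrapy offer more convenient selectors for the same job.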

The Role of Scraping Tools in Data Extraction

Scraping tools play a pivotal role in the data extraction process, allowing users to automate the collection of information from various web sources. Tools like Beautiful Soup, Scrapy, and Octoparse have gained popularity for their robust functionality. Beautiful Soup and Scrapy are Python libraries for parsing HTML and building crawlers, while Octoparse offers a point-and-click interface that requires no code. All three enable users to target specific data points, streamline workflows, and save both time and resources in gathering information.

However, selecting the right scraping tool depends on the specific needs of the project. Some tools excel at handling structured data, while others are better suited to unstructured content. Additionally, understanding the underlying web content technology helps users adapt their techniques to different platforms and extract data successfully while staying within the restrictions set by the website.

Legal Issues with Web Scraping

While web scraping offers numerous benefits, it is crucial to navigate the legal landscape carefully. Many websites explicitly prohibit scraping in their terms of service, and violating these terms can result in account bans or legal action. The dispute between LinkedIn and hiQ Labs, which turned on whether scraping publicly accessible profiles amounted to unauthorized access under the U.S. Computer Fraud and Abuse Act, highlights the potential conflicts between data extraction practices and user agreements. Scrapers also need to respect copyright and intellectual property laws.

Furthermore, legal issues may arise depending on the type of data being scraped. For instance, extracting personal information may violate privacy regulations like GDPR or CCPA. As laws governing digital content continue to evolve, staying informed about the legalities of web scraping is essential for both individuals and businesses looking to leverage this technology responsibly and ethically.

Ethical Considerations in Web Content Technology

The rapid development of web content technology raises several ethical questions for individuals and organizations engaging in website scraping. Ethical scraping practices should prioritize the rights of content creators while maximizing the utility of data extraction. Potential ethical dilemmas include the misuse of scraped content, the spread of misinformation, and degraded website performance caused by excessive requests.

To address these concerns, organizations should implement ethical scraping policies that respect the original source of the data. This includes giving proper attribution where required, using data responsibly, and ensuring that scraping activities do not disrupt the normal functionality of the sites being accessed. By building an ethical framework around web scraping, businesses can foster trust and maintain positive relationships with content providers.

Best Practices for Effective Web Scraping

To ensure efficient and legal web scraping, following best practices is vital. First, review the target website’s robots.txt file, which tells automated clients which paths the site owner does not want crawled. The file is a convention rather than a legal license, but adhering to it helps reduce legal risk and maintain web etiquette. Additionally, rate limiting your requests and respecting the site’s bandwidth prevents overloading the server and demonstrates considerate scraping behavior.
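The robots.txt check and rate limiting described above can be sketched with Python’s standard `urllib.robotparser` module. The robots.txt content, the `example-bot` user agent, the example.com URLs, and the helper names below are assumptions for illustration; a real scraper would download the file from the target site and pass in its own fetch function.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would fetch it from
# the site's /robots.txt path before requesting anything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-bot"  # assumed name, for illustration only


def build_parser(robots_text):
    """Load robots.txt rules from text instead of from the network."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def allowed_urls(parser, urls, agent=USER_AGENT):
    """Keep only the URLs that the rules permit for this user agent."""
    return [url for url in urls if parser.can_fetch(agent, url)]


def polite_fetch(urls, fetch, delay_seconds):
    """Call fetch(url) for each URL, pausing between consecutive calls."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            time.sleep(delay_seconds)
        results.append(fetch(url))
    return results
```

A typical flow would filter candidate URLs through `allowed_urls`, then pass the survivors to `polite_fetch` with a delay taken from `parser.crawl_delay(USER_AGENT)` when the site declares one.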

Another best practice involves utilizing APIs, when available, as they can provide structured access to data without the complexities associated with web scraping. This not only aligns with many sites’ policies but also often results in cleaner, more reliable data than what might be extracted through scraping. Ultimately, a thoughtful approach to scraping can enhance data integrity while upholding legal and ethical standards.
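To show why API data tends to be cleaner, here is a minimal sketch that reads a JSON payload of the kind an API might return. The payload, its field names, and the `titles_by_views` helper are invented for this example and do not describe any real service.

```python
import json

# Hypothetical response body from an imagined articles endpoint.
API_RESPONSE = """
{
  "articles": [
    {"title": "Robots.txt Basics", "views": 450},
    {"title": "Intro to Scraping", "views": 1200}
  ]
}
"""


def titles_by_views(body):
    """Return article titles ordered from most to least viewed."""
    payload = json.loads(body)
    articles = sorted(payload["articles"], key=lambda a: a["views"], reverse=True)
    return [article["title"] for article in articles]
```

Because the fields arrive already named and typed, there is no HTML to parse and no markup change that can silently break the extraction.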

The Future of Web Scraping and Data Extraction

As technology continues to evolve, the future of web scraping and data extraction appears promising yet complex. Innovations in artificial intelligence and machine learning are expected to enhance scraping tools, making them more efficient and capable of handling large datasets with improved accuracy. These advancements will likely expand the applications of web scraping, allowing users to gain deeper insights from vast arrays of online content.

However, the growth of anti-scraping technologies and stricter regulations poses challenges for the future landscape. Websites are increasingly adopting measures to combat unauthorized scraping, such as CAPTCHAs and IP blocking. Staying abreast of these developments and adjusting scraping strategies accordingly will be essential for anyone involved in data extraction. Balancing technological advancements with ethical considerations will ultimately shape the future of web scraping.

Choosing the Right Scraping Strategy

Navigating the complex world of web scraping requires a well-considered strategy. Whether you’re seeking to gather real-time data for market analysis or simply looking to compile historical information, defining your goals will dictate the choice of tools and methods used. A clear strategy tailored to the specific type of content can lead to better data quality and relevance.

Moreover, it’s prudent to evaluate the available scraping methods, such as manual scraping, automated scripts, or using existing services. Each method has its strengths and weaknesses, and the right choice will depend on the desired outcome, resource availability, and the legal constraints surrounding the content being targeted. A systematic approach to selecting a scraping strategy will enhance your project’s overall success.

Data Privacy and Scraping Ethics

Data privacy is a growing concern in the field of web scraping and content extraction. It is imperative for scrapers to be aware of data protection regulations such as GDPR and CCPA, which regulate how personal data can be collected and used. Failure to comply can lead to significant legal ramifications, emphasizing the importance of ethical practices in web scraping.

As a best practice, organizations should prioritize user consent and transparency in their data scraping processes. Effective communication about how and why data is being scraped reinforces trust and promotes accountability. By prioritizing ethical concerns related to data privacy, individuals and businesses can safeguard their operations while contributing to a more responsible approach to data collection.
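One practical way to reduce privacy risk is to strip personal identifiers from scraped text before storing it. The sketch below redacts email addresses with a deliberately simple regular expression. The pattern and the `redact_emails` name are assumptions for illustration, the pattern will miss unusual address formats, and redaction alone does not make a pipeline compliant with GDPR or CCPA.

```python
import re

# Simplified email pattern; an assumption for this example, not a
# complete implementation of the email address grammar.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text):
    """Replace anything that looks like an email address with a marker."""
    return EMAIL_PATTERN.sub("[redacted email]", text)
```

Running the redaction step before data reaches long-term storage keeps the retained dataset closer to what the analysis actually needs.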

Leveraging Scraping Tools for Competitive Analysis

Leveraging scraping tools for competitive analysis can provide businesses with invaluable insights into market trends and consumer behavior. By collecting and analyzing data from competitors’ websites, businesses can identify product pricing strategies, promotional campaigns, and customer feedback. This strategy helps organizations remain agile in their market approach while informing their business decisions.

However, it is crucial to approach competitive scraping with caution. Ethical guidelines must be adhered to, ensuring that the gathered data does not infringe on proprietary information or violate any legal agreements. By being mindful of these parameters, businesses can harness the power of data extraction for competitive analysis effectively without stepping into a legal grey area.

Frequently Asked Questions

What is content scraping and how is it related to web scraping?

Content scraping is the extraction of data or content from websites, and the term is often used interchangeably with web scraping. Both rely on automated techniques and tools to gather information from multiple online sources, enabling users to compile data efficiently for analysis or research.

What are popular scraping tools for effective data extraction?

There are several popular scraping tools for data extraction, including Beautiful Soup, Scrapy, and Octoparse. These tools simplify the web scraping process by allowing users to gather information from web pages easily, automate data collection, and manage large-scale scraping tasks.

What are the legal issues with scraping content from websites?

Legal issues with scraping content from websites can arise from copyright infringement, violation of terms of service, and unauthorized access to data. It’s crucial to understand a website’s policies regarding automated scraping to avoid potential legal consequences.

How does web content technology impact content scraping practices?

Web content technology, such as APIs, HTML/CSS, and JavaScript frameworks, influences content scraping practices by dictating how data is structured and presented on web pages. Understanding these technologies helps scrapers extract data more effectively and adapt to changes in web design.

Is content scraping ethical or should it be avoided?

The ethics of content scraping depend on the intent and method used. Ethical scraping involves respecting a website’s terms of service and copyright laws, while scraping without permission can lead to legal and ethical dilemmas. Always seek consent when possible.

Can content scraping tools handle dynamic web pages?

Yes, many advanced content scraping tools can handle dynamic web pages. Browser automation tools like Selenium and Puppeteer drive a real browser, so they can interact with JavaScript-rendered content and extract data from sites that rely heavily on client-side scripting.

| Key Points |
|---|
| Content scraping refers to the process of extracting information from websites. |
| Certain websites, like nytimes.com, have policies against content scraping to protect their data. |
| It’s important to respect a website’s terms of service when considering content scraping practices. |
| There are legal implications involved in scraping content from protected sites. |

Summary

Content scraping can be a useful tool for gathering information from various online sources; however, it is essential to note that some websites, like nytimes.com, prohibit such practices. Always adhere to the terms of service for any site you consider scraping content from to avoid legal repercussions.