Content Scraping: How to Extract Information Effectively

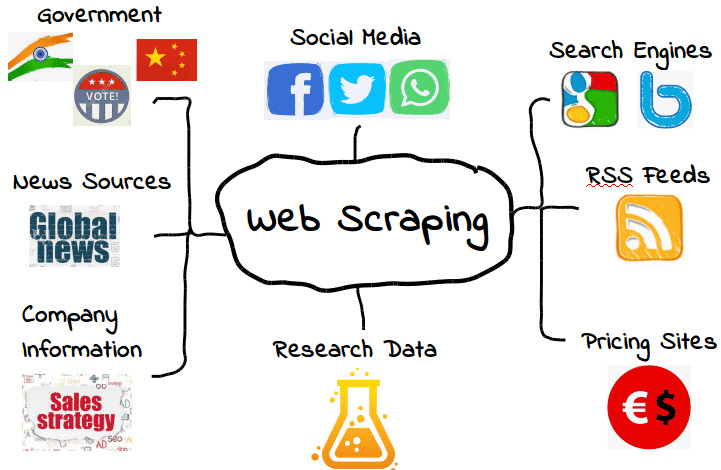

Content scraping is a technique that businesses and developers use to gather information from online sources. With web scraping methods, you can extract data and analyze the content that informs decision-making, which improves information retrieval and supports content analysis aimed at understanding market trends. Many scraping tools are now available, so collecting insights from the web takes far less effort than it once did. Used well, content scraping can strengthen your data-driven strategies and business intelligence.

Also referred to as data harvesting or web extraction, content scraping is a widely adopted method for acquiring large volumes of data from websites. It helps users extract pertinent information that informs business decisions and analyses, including intelligence gathered from competing platforms, and turn raw data into actionable insights. With suitable scraping techniques and tools, analysts can uncover trends and patterns across the web. Overall, effective data extraction helps organizations understand and respond to today’s competitive landscape.

Understanding Content Scraping

Content scraping is an automated method used to extract information from websites. This technique is popularly utilized in various fields, including data journalism, market analysis, and academic research. By using scraping tools, one can efficiently gather large amounts of data from multiple web pages without manual intervention, thus saving time and resources. In essence, content scraping allows you to automate the information retrieval process, making it easier to analyze trends and patterns hidden within extensive online data.

Despite its many advantages, content scraping comes with its own set of challenges, particularly regarding ethical and legal considerations. Many websites have measures in place to prevent scraping and protect their intellectual property. Consequently, effective data extraction requires not only the right scraping tools but also adherence to the terms of service of the target site. Understanding these restrictions is crucial, because circumventing a site’s anti-scraping measures or violating its terms can lead to penalties, including IP bans and potential legal action.

The Role of Web Scraping Tools in Data Extraction

Web scraping tools are essential for efficiently extracting data from online sources. These tools use various techniques to collect and organize information, enabling users to perform content analysis quickly and accurately. Popular web scraping tools, such as Beautiful Soup, Scrapy, and Selenium, provide users with functionalities that can handle complex websites and extract structured data in formats like CSV or JSON. This structured data can then be used for further analysis or integrated into applications to derive insights.
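As a rough illustration of turning a page into structured data, the sketch below parses a small HTML fragment and serializes the result as JSON and CSV. It uses Python's standard-library `html.parser` rather than Beautiful Soup or Scrapy so that it runs without extra installs. The sample markup, the class names `product`, `name`, and `price`, and the helper names are all assumptions made for this example.

```python
import csv
import io
import json
from html.parser import HTMLParser

# Hypothetical sample page; a real scraper would fetch this over HTTP.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of the name and price spans for each product item."""

    def __init__(self):
        super().__init__()
        self.records = []
        self._field = None  # field name of the span currently open, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.records.append({})  # start a new record
        elif tag == "span" and css_class in ("name", "price") and self.records:
            self._field = css_class

    def handle_data(self, data):
        if self._field is not None:
            self.records[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def extract_products(html):
    """Return a list of {"name": ..., "price": ...} dicts found in the HTML."""
    parser = ProductParser()
    parser.feed(html)
    return parser.records


def to_json(records):
    return json.dumps(records, indent=2)


def to_csv(records):
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()
```

Calling `extract_products(SAMPLE_HTML)` and passing the result to `to_json` or `to_csv` gives output that can be loaded into a spreadsheet or another application. A dedicated library would handle messy real-world markup more robustly than this hand-written parser.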

Moreover, optimal data extraction often involves selecting the right web scraping tool based on specific project requirements. For instance, if you’re looking to scrape dynamic websites built on JavaScript frameworks, tools that can simulate a browser environment may be necessary. On the other hand, for simpler HTML sites, lightweight tools can suffice. Combining the right scraping tools with proper techniques enhances the efficiency of data collection processes, leading to more accurate and timely information retrieval.

Effective Strategies for Content Analysis

Content analysis involves systematically examining text data to identify patterns, themes, or trends. When leveraging web scraping capabilities for content analysis, the extraction of relevant data must be coupled with effective analytic strategies. Techniques such as sentiment analysis, topic modeling, or keyword frequency analysis can provide deeper insights into the data collected. By employing these methods, researchers can transform raw data into valuable information that supports decision-making and understanding of market trends.
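Keyword frequency analysis is the simplest of these techniques to sketch. The example below counts the most common words across a set of scraped texts using only the standard library. The stopword list and the function name `keyword_frequencies` are illustrative assumptions, and a real project would likely use a fuller stopword list and a proper tokenizer.

```python
import re
from collections import Counter

# A deliberately tiny stopword list, assumed for this example only.
STOPWORDS = {"the", "a", "an", "and", "is", "of", "to", "in", "it", "for"}


def keyword_frequencies(documents, top_n=3):
    """Return the top_n most frequent non-stopword tokens across all documents."""
    counts = Counter()
    for text in documents:
        # Lowercase the text and keep runs of letters and apostrophes as tokens.
        tokens = re.findall(r"[a-z']+", text.lower())
        counts.update(token for token in tokens if token not in STOPWORDS)
    return counts.most_common(top_n)


if __name__ == "__main__":
    reviews = [
        "The battery life is great",
        "Battery drains fast",
        "Great screen, great battery",
    ]
    print(keyword_frequencies(reviews, top_n=2))
```

The same counts can feed a sentiment or topic model later; frequency tables are mainly useful as a first look at what the scraped text is about.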

Additionally, implementing robust methodologies for content analysis enhances the reliability of findings. Analysts must design their approaches to account for biases and variations present in the data. Combining quantitative and qualitative analyses can improve the overall evaluation of the scraped content. Thus, utilizing various analytical frameworks alongside data extracted from web scraping endeavors leads to more comprehensive insights and a richer understanding of the subject matter.

Legal Considerations in Information Retrieval

When engaging in information retrieval through content scraping, it’s imperative to understand the legal landscape. The legality of scraping content from websites can vary significantly based on the site’s terms of service, copyright laws, and local regulations. Websites may have explicit prohibitions against automated data extraction, and disregarding these can lead to serious consequences. Therefore, a thorough review of each target website’s policy on content scraping is essential to ensure compliance and avoid potential legal repercussions.

Moreover, even when scraping is technically feasible, ethical considerations should guide your practices. This includes respecting the privacy of individuals whose data may be scraped and considering the potential impact of your work on the target site’s operations. Employing ethical scraping practices not only supports a sustainable approach to information retrieval but also reinforces the credibility of your data collection efforts. It is crucial to strike a balance between utilizing available data and adhering to ethical guidelines.
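One machine-readable signal of a site's crawling policy is its `robots.txt` file, which this article does not otherwise cover. The sketch below, which assumes a hypothetical `robots.txt` and a made-up bot name, shows how Python's standard `urllib.robotparser` can check whether a URL is permitted before it is requested. It complements, and does not replace, reading the site's terms of service.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would download the file
# from the target site (for example with RobotFileParser.set_url and read).
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_policy(robots_txt):
    """Parse robots.txt text into a RobotFileParser instance."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser, user_agent, url):
    """Return True if the parsed rules permit user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    policy = build_policy(SAMPLE_ROBOTS_TXT)
    for url in ("https://example.com/products", "https://example.com/private/data"):
        print(url, is_allowed(policy, "example-bot", url))
    print("crawl delay:", policy.crawl_delay("example-bot"))
```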

Best Practices in Data Extraction

Implementing best practices in data extraction can significantly enhance the quality and reliability of the information gathered through web scraping. One fundamental practice is ensuring that the scraping process is non-intrusive to the target websites; this means limiting the frequency of requests to avoid overloading servers. Additionally, setting a User-Agent header so that requests resemble those of a typical browser can reduce the chance of being blocked by the mechanisms websites use to detect automated scraping.
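Limiting request frequency can be sketched as a small throttle that enforces a minimum gap between consecutive requests. The class name `Throttle`, the helper `fetch_all`, and the two-second interval are assumptions for this example. The `fetch` argument stands in for whatever function actually downloads a page, which keeps the sketch free of network calls.

```python
import time


class Throttle:
    """Enforces a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock  # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last_request = None

    def wait(self):
        """Block until min_interval seconds have passed since the previous call."""
        now = self._clock()
        if self._last_request is not None:
            remaining = self.min_interval - (now - self._last_request)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_request = now


def fetch_all(urls, fetch, throttle):
    """Call fetch(url) for each URL, pausing between calls as the throttle requires."""
    results = []
    for url in urls:
        throttle.wait()
        results.append(fetch(url))
    return results


if __name__ == "__main__":
    throttle = Throttle(min_interval=2.0)
    # The lambda is a placeholder for a real download function.
    pages = fetch_all(["page-1", "page-2"], lambda url: f"<html>{url}</html>", throttle)
    print(pages)
```

A fixed interval is the simplest policy. Adding random jitter or backing off after error responses are common refinements.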

Another best practice is structuring your data collection process to capture only relevant information. Defining clear objectives and focusing on specific data points not only streamlines the scraping process but also improves the overall quality of the dataset. Furthermore, employing data validation techniques post-extraction is vital to ensure accuracy and integrity before any analysis takes place. By adhering to these best practices, you can improve the effectiveness and ethicality of your data extraction efforts.
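Post-extraction validation can be as simple as checking each record against a few rules before it enters the dataset. The sketch below assumes records shaped like `{"name": ..., "price": ...}`. The field names and the specific rules are illustrative, not a prescribed schema.

```python
def validate_record(record):
    """Return a list of problems found in one scraped record (empty if valid)."""
    problems = []
    name = record.get("name", "")
    if not isinstance(name, str) or not name.strip():
        problems.append("missing name")
    try:
        price = float(record.get("price", ""))
    except (TypeError, ValueError):
        problems.append("price is not a number")
    else:
        if price < 0:
            problems.append("price is negative")
    return problems


def split_valid(records):
    """Separate records into those that pass validation and those that fail."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected


if __name__ == "__main__":
    scraped = [
        {"name": "Widget", "price": "9.99"},
        {"name": " ", "price": "abc"},
        {"name": "Gadget", "price": "-1"},
    ]
    valid, rejected = split_valid(scraped)
    print(len(valid), "valid;", len(rejected), "rejected")
```

Keeping the rejected records together with their reasons, rather than silently dropping them, makes it easier to notice when a site's layout has changed and the scraper needs updating.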

Leveraging Scraping Tools for Competitive Analysis

Competitive analysis is a crucial component of strategic business planning, and web scraping can provide a significant edge in this area. By utilizing scraping tools, companies can gather data on competitors’ pricing, product offerings, marketing strategies, and customer reviews. This information can help businesses make informed decisions and tailor their strategies according to market trends and competitor activities. Thus, the ability to efficiently collect and analyze such data is invaluable for staying competitive in today’s dynamic market.

Furthermore, scraping tools enable real-time monitoring of competitor websites, allowing businesses to stay ahead of changes in pricing, promotional offers, or new product launches. By establishing alerts or automated scraping systems, companies can receive immediate updates on competitor activities. This level of awareness fosters proactive decision-making, enabling businesses to quickly adapt their strategies in response to evolving market conditions. Consequently, leveraging scraping tools for competitive analysis is not just beneficial, but essential for maintaining a competitive advantage.
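The monitoring step usually reduces to comparing the latest scrape with the previous one. As a minimal sketch, assuming each scrape yields a dictionary mapping product names to prices, the hypothetical `diff_prices` function below reports new, changed, and removed items, which an alerting system could then act on.

```python
def diff_prices(previous, current):
    """Compare two {product: price} snapshots and list what changed.

    Each change is a tuple: (kind, product, old_price, new_price).
    """
    changes = []
    for product, price in current.items():
        if product not in previous:
            changes.append(("new", product, None, price))
        elif previous[product] != price:
            changes.append(("changed", product, previous[product], price))
    for product, price in previous.items():
        if product not in current:
            changes.append(("removed", product, price, None))
    return changes


if __name__ == "__main__":
    # Made-up snapshots standing in for two consecutive scrapes.
    yesterday = {"Widget": 9.99, "Gadget": 24.50}
    today = {"Widget": 8.99, "Gizmo": 5.00}
    for change in diff_prices(yesterday, today):
        print(change)
```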

The Importance of Ethical Scraping Practices

As the practice of web scraping continues to grow, so does the conversation around its ethical implications. Ethical scraping involves acting responsibly while harvesting data, ensuring that the rights of content owners are respected, and not exploiting their information without permission. Businesses and researchers must be mindful of their scraping activities, especially concerning the type and sensitivity of the data being collected. By adhering to ethical standards, organizations can build trust with their audience and contribute to a more responsible data ecosystem.

Moreover, ethical scraping practices can prevent potential backlash from website owners who might view scraping as a threat. Proactively reaching out for permissions, using APIs where available, and minimizing server load are among the best strategies to practice responsible data scraping. By fostering a culture of ethical data extraction, organizations can create a sustainable model that benefits both them and the content providers they depend on for information.

Future Trends in Web Scraping and Data Extraction

Looking ahead, the landscape of web scraping and data extraction is set to evolve significantly due to advancements in technology and changes in regulatory frameworks. As artificial intelligence and machine learning become more integrated into data processing, scraping tools will likely become more sophisticated, offering enhanced capabilities in terms of data extraction, parsing, and analysis. This technological progression will enable users to extract larger volumes of data with greater accuracy and efficiency.

Conversely, as web scraping gains popularity, many companies are implementing stricter measures to protect their content from unauthorized scraping. This trend may lead to the development of more advanced anti-scraping technologies, necessitating continuous innovation in scraping methods. Staying informed about these trends, both in terms of technology and regulations, will be critical for anyone engaged in web scraping activities as they navigate the future of data extraction.

Frequently Asked Questions

What is content scraping and how is it used in web scraping?

Content scraping refers to the automated process of extracting information from websites. In web scraping, various scraping tools are employed to gather data, enabling users to analyze content, compile information, and retrieve relevant details without manually visiting each site.

What are the best tools for data extraction in content scraping?

Some of the best tools for data extraction in content scraping include Scrapy, Beautiful Soup, and Octoparse. These tools facilitate efficient data scraping processes, allowing users to perform content analysis and automate information retrieval from multiple websites.

Is content scraping legal and ethical in terms of information retrieval?

The legality and ethics of content scraping depend on the website’s terms of service, copyright law, and local regulations. Scraping publicly available data for personal use is often tolerated, but that is not guaranteed, and commercial scraping without permission may violate copyright laws or terms of service.

How can I perform content analysis using web scraping techniques?

To perform content analysis using web scraping techniques, you can use a combination of scraping tools to collect data, followed by analytical software to evaluate the extracted information. This can include analyzing text patterns, frequency of terms, or sentiment analysis on the scraped content.

What challenges might I face when using scraping tools for content scraping?

When using scraping tools for content scraping, challenges may include handling dynamic content, navigating anti-scraping technologies, or ensuring compliance with legal restrictions. It’s essential to choose the right tool based on the specific site structure and data needs.

Can I automate information retrieval through content scraping, and if so, how?

Yes. Write a scraping script, using a browser automation tool such as Selenium or Puppeteer if the site requires one, and run it at specific intervals with a scheduler such as cron or your operating system’s task scheduler. The script then gathers the desired data automatically on each run.

What are some common applications of content scraping and data extraction?

Common applications of content scraping and data extraction include price comparison, market research, lead generation, and academic research. Businesses and researchers often use these techniques to gather large datasets for analysis and decision-making.

How do I get started with web scraping for content analysis?

To get started with web scraping for content analysis, identify your data needs, choose a suitable scraping tool, and familiarize yourself with the tool’s features. Begin with small-scale scraping projects, gradually expanding as you gain more experience.

| Key Points | Details |
|---|---|
| Content Scraping Definition | Content scraping is the process of extracting data or information from websites. |
| Limitations | Access to external websites like the New York Times is restricted, preventing direct content scraping. |
| Alternatives | Users can manually extract information or utilize tools designed for content analysis. |
| Need for Specific Articles | For assistance, users should provide specific articles or points for analysis. |

Summary

Content scraping is a method to gather information from various online sources. Though limitations exist when accessing certain websites directly, such as the New York Times, individuals can still analyze and extract information manually or through specialized software. Providing specific articles or points of interest can help in receiving targeted guidance on how best to approach content scraping.