Content Scraping: Ethical Considerations and Alternatives

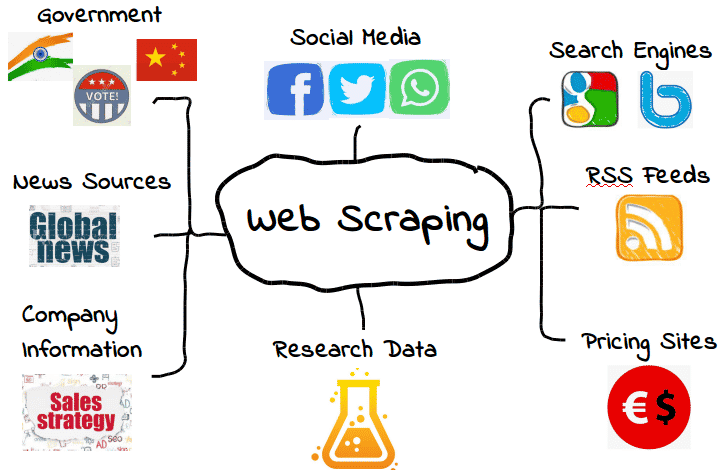

Content scraping is a widely used data extraction technique that lets researchers, marketers, and developers collect information from web sources. The work is not only about gathering raw data; it often includes web content summarization, which distills that information into digestible formats. It has to be done carefully, because many websites guard their proprietary content and restrict how it may be reused. Understanding those limits is what makes scraped data usable for analysis and marketing.

Many industries use web data harvesting, another name for content scraping, to gather insights and streamline operations, from aggregating news articles to compiling product reviews. Applied with attention to copyright and the ethics of data usage, these data extraction techniques support informed decision-making without infringing on the rights of content owners.

Understanding Content Scraping and Its Implications

Content scraping, often referred to as web scraping, is the automated process of extracting vast amounts of data from websites. This technology allows individuals and businesses to gather information efficiently and analyze trends. However, scraping content directly from certain websites, such as nytimes.com, can raise significant ethical concerns, particularly regarding proprietary information. Companies generally protect their content, and unauthorized extraction can lead to legal repercussions.

In addition to legal risks, ethical content sharing is a crucial consideration. Many websites invest considerable resources into creating high-quality content and expect to retain control over how it is used. It’s essential for individuals and organizations engaging in data extraction to adhere to established guidelines, respect copyright laws, and consider the implications of their actions on the original content creators.

Alternatives to Direct Content Scraping

While direct scraping may seem like a quick solution for obtaining content, several alternative approaches can facilitate ethical data use. For instance, web content summarization offers a way to distill information without directly copying from the source. By synthesizing essential points from articles into summaries, one can share insights while giving proper credit and avoiding copyright infringements.
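As a rough illustration of the summarization idea, the sketch below scores sentences by word frequency and returns the highest-scoring ones together with a source credit. The function names, the tiny stop-word list, and the scoring rule are assumptions made for this example, not a standard algorithm from any particular library. Because it selects existing sentences, the output is best treated as a pointer to the key points to paraphrase and attribute, not as text to republish verbatim.

```python
import re
from collections import Counter

# Small illustrative stop-word list; a real project would use a fuller one.
STOP_WORDS = {
    "the", "a", "an", "and", "or", "of", "to", "in", "is", "are", "it",
    "that", "this", "for", "on", "with", "as", "by", "be", "was", "were",
}


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(text):
    """Lowercased words with stop words removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]


def summarize(text, source, max_sentences=2):
    """Pick the sentences whose words are most frequent in the whole text.

    Returns the selected sentences in their original order, plus the source
    so that credit travels with the summary.
    """
    sentences = split_sentences(text)
    freq = Counter(content_words(text))

    def score(sentence):
        tokens = content_words(sentence)
        # Average frequency, so long sentences are not favored automatically.
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:max_sentences])
    return {"summary": " ".join(sentences[i] for i in chosen), "source": source}
```

Calling `summarize(article_text, "https://example.com/article")` (a placeholder URL) returns a dictionary with `summary` and `source` keys, which keeps the attribution attached to whatever is shared.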

Another alternative is leveraging APIs provided by websites. Major establishments often provide application programming interfaces that allow developers to access certain data in a controlled and legal manner. By using APIs for data retrieval, businesses can acquire valuable information without infringing on proprietary rights, ensuring compliance with ethical standards in digital content sharing.
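A minimal sketch of API-based retrieval might look like the following. The endpoint `https://api.example.com/v1/articles`, the `q` and `page` parameters, the bearer-token header, and the `results`/`title`/`url` response fields are all hypothetical; a real provider's documentation defines its own URL, authentication scheme, and response shape.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical endpoint used only for illustration.
API_BASE = "https://api.example.com/v1/articles"


def build_request(query, api_key, page=1):
    """Build an authenticated GET request for the (hypothetical) article API."""
    params = urllib.parse.urlencode({"q": query, "page": page})
    return urllib.request.Request(
        f"{API_BASE}?{params}",
        headers={"Authorization": f"Bearer {api_key}", "Accept": "application/json"},
    )


def parse_articles(payload):
    """Keep only the fields we need from an assumed JSON response shape."""
    data = json.loads(payload)
    return [{"title": item["title"], "url": item["url"]} for item in data.get("results", [])]


def fetch_articles(query, api_key):
    """Send the request and parse the response; performs a network call."""
    with urllib.request.urlopen(build_request(query, api_key), timeout=10) as resp:
        return parse_articles(resp.read().decode("utf-8"))
```

Separating request building and response parsing from the network call keeps the logic easy to check offline, and the API key makes each request attributable to an account that accepted the provider's terms.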

The Role of LSI in Data Extraction

Latent Semantic Indexing (LSI) is an information retrieval technique that applies singular value decomposition to a term-document matrix in order to capture relationships between terms that appear in similar contexts. It is not part of scraping itself, but it can be applied to collected documents to rank or filter them by relevance, so that extractors keep the snippets that align most closely with their research objectives.

LSI-style analysis can also help refine search queries so they match user intent. In SEO usage, "LSI keywords" loosely means terms semantically related to the main topic; including such terms in the queries that drive a scraping tool can surface more relevant pages and help the collected data cover the topic more completely.

Legal Risks Associated with Web Scraping

One of the major challenges of web scraping is navigating the legal landscape. Many websites have terms of service that explicitly prohibit the automated collection of their content. When these terms are violated, it can lead to significant consequences, including lawsuits and financial penalties. It’s crucial for anyone considering data extraction to familiarize themselves with the legal implications and adhere to established guidelines to mitigate these risks.

Furthermore, understanding the nuances of copyright law is vital for those engaged in content scraping. Proprietary information is often protected under copyright, and misusing this can result in claims of intellectual property theft. In light of this, seeking permission from content owners or using open-source data becomes a prudent path for individuals and companies looking to leverage online content ethically.

Ethical Considerations in Digital Data Sharing

Ethical considerations are increasingly important in the realm of digital data sharing and web scraping. As the internet continues to expand, so does the responsibility of users to share and utilize content respectfully. Practicing ethical content sharing means prioritizing the rights of original creators and ensuring they receive acknowledgment for their work. This is particularly important in sectors where content creation is a primary income source.

Moreover, fostering a culture of transparency in how data is shared and used can greatly benefit online communities. By respecting proprietors’ rights and providing proper attribution, individuals and organizations can cultivate trust and goodwill, creating an environment where content sharing thrives ethically. Such practices not only protect creators but also enhance the credibility and legitimacy of the sharers.

The Impact of Web Scraping on SEO Strategies

Web scraping can significantly influence search engine optimization (SEO) strategies. By extracting relevant data, businesses can gain insights into competitor strategies, keyword usage, and overall market trends. This information can inform content creation efforts, helping to optimize web pages for better search engine rankings and improved visibility. However, it’s essential that this data is used ethically and responsibly to maintain compliance with search engine policies.

Additionally, by employing web scraping to analyze the performance of specific keywords and topics, marketers can fine-tune their SEO strategies. Including semantically related terms, often marketed as LSI keywords, can help content match the way users phrase their searches, which may improve visibility and engagement. Balancing effective data extraction with ethical practices is key to achieving lasting success in digital marketing efforts.

Data Extraction Tools and Their Applications

In the realm of content extraction, various data extraction tools have become invaluable to researchers and businesses alike. These tools range from simple browser extensions to complex software applications capable of handling large-scale data retrieval. Utilizing such tools effectively can streamline the process of gathering information while also allowing for greater accuracy in the data collected. Many of these tools offer functionalities that enable users to filter content, making it easier to focus on specific data points.

Moreover, the applications of data extraction tools extend beyond mere collection. They can provide users with analytics and reporting features, enabling a deeper understanding of the extracted content. For instance, businesses can analyze customer sentiment from social media scraping or track competitor pricing strategies. Implementing these tools strategically can yield significant insights that drive informed decision-making and growth.

Best Practices for Ethical Content Extraction

Engaging in ethical content extraction requires a commitment to transparency and respect for content ownership. Best practices such as seeking permission before scraping content, providing clear attribution, and ensuring compliance with terms of service can safeguard against potential legal issues. By following these guidelines, businesses and individuals can mitigate risks associated with data extraction while fostering positive relationships with content creators.

Additionally, users should educate themselves about copyright laws and ethical sharing standards to better navigate the digital landscape responsibly. Engaging with content through ethical practices not only protects original creators but also contributes to a healthier online ecosystem, where innovation and creativity are nurtured. As a result, respecting proprietary information should be at the forefront of any web scraping or data extraction initiative.

Future Trends in Web Scraping Technologies

The future of web scraping technologies looks promising, with advancements in artificial intelligence and machine learning set to revolutionize the field. These technologies can enhance the efficiency and accuracy of data extraction processes, allowing for more sophisticated algorithms to process and interpret information. As these capabilities evolve, businesses will benefit from faster and more reliable data gathering methods, unlocking new potential in market analysis and research.

Moreover, with a shift towards greater data transparency and user privacy concerns, future web scraping tools will likely incorporate robust ethical guidelines. This will necessitate adherence to regulations such as GDPR and CCPA, ensuring that data practices align with legal standards. As technology continues to develop, the balance between innovative data extraction and ethical responsibility will be crucial for achieving sustainable success in the digital age.

Frequently Asked Questions

What is content scraping and how does it relate to web scraping?

Content scraping, often referred to as web scraping, is the automated process of extracting data from websites. It involves gathering web content to analyze or repurpose it, but care must be taken to respect proprietary information and copyright laws.

Is it legal to perform web scraping for data extraction?

The legality of web scraping for data extraction depends on various factors, including the website’s terms of service, the type of content being scraped, and how the data is used afterward. It’s crucial to ensure that your scraping does not infringe copyright or misuse proprietary information.

How can I ethically share scraped content?

Ethical content sharing can be achieved by summarizing the scraped information rather than copying it directly. This allows you to extract valuable insights without violating copyright laws or misusing proprietary information. Always credit original sources when possible.

What techniques can be used for web content summarization?

Web content summarization techniques include natural language processing, keyword extraction, and summarization algorithms. These methods help distill the main ideas from extensive content, providing concise insights without infringing on proprietary information.

What are the risks of scraping proprietary information from websites?

Scraping proprietary information can lead to legal repercussions, such as copyright infringement claims. Websites often protect their data, and unauthorized extraction can damage relationships with the content owners and lead to penalties.

How can I use data extraction tools for content scraping safely?

To use data extraction tools for content scraping safely, familiarize yourself with each tool’s capabilities and limitations. Always review the target website’s robots.txt file and terms of service to ensure compliance with data usage policies and copyright laws.
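The robots.txt check can be sketched with Python's standard `urllib.robotparser` module. The robots.txt content and the `example-bot` user agent below are made up for illustration; against a live site you would call `set_url()` and `read()` on the parser instead of passing the text in directly. Passing a robots.txt check does not replace reading the terms of service.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser, user_agent, url):
    """True if the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(SAMPLE_ROBOTS)
print(is_allowed(parser, "example-bot", "https://example.com/public/page"))
print(is_allowed(parser, "example-bot", "https://example.com/private/page"))
print(parser.crawl_delay("example-bot"))  # requested delay in seconds, if any
```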

What are the best practices for ethical web scraping?

Best practices for ethical web scraping include obtaining permission when necessary, respecting a website’s robots.txt guidelines, limiting the frequency of requests to prevent server overload, and never scraping proprietary content without explicit permission.
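Limiting request frequency can be as simple as enforcing a minimum interval between requests. The `Throttle` class below is a hypothetical helper written for this example, and the two-second interval in the usage note is an arbitrary choice; a site's own crawl-delay or API rate limits should take precedence where they exist.

```python
import time


class Throttle:
    """Enforce a minimum number of seconds between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        # clock and sleep are injectable so the logic can be checked without waiting.
        self._clock = clock
        self._sleep = sleep
        self._last = None

    def wait(self):
        """Block until the interval has passed; return the seconds slept."""
        waited = 0.0
        if self._last is not None:
            remaining = self.min_interval - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        self._last = self._clock()
        return waited
```

In a fetch loop, create `throttle = Throttle(2.0)` once and call `throttle.wait()` immediately before each request, so the server never sees requests from the scraper closer together than the chosen interval.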

Can I automate the process of web scraping for content extraction?

Yes, automating web scraping for content extraction is possible using various programming languages and libraries, such as Python with Beautiful Soup or Scrapy. However, ensure that your automation adheres to ethical guidelines and legal standards.
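For a sense of what such automation involves, here is a small sketch that extracts a page title and its links. It deliberately uses the standard library's `html.parser` in place of Beautiful Soup or Scrapy so that it runs without extra installs; the class name and the returned dictionary shape are assumptions for this example. It works on HTML you have already obtained with permission and does no fetching itself.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkAndTitleParser(HTMLParser):
    """Collect the <title> text and absolute link targets from an HTML page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.title_parts = []
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL.
                self.links.append(urljoin(self.base_url, href))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title_parts.append(data)


def extract(html, base_url):
    """Return the page title and a list of absolute link URLs."""
    parser = LinkAndTitleParser(base_url)
    parser.feed(html)
    parser.close()
    return {"title": "".join(parser.title_parts).strip(), "links": parser.links}
```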

What is the difference between content scraping and data mining?

Content scraping focuses on extracting information from web pages, while data mining involves analyzing large datasets to discover patterns and insights. Scraping is a collection step and data mining is an analysis step; scraped data can serve as input to data mining, but the two have different goals.

| Key Point | Explanation |
|---|---|
| Content Scraping Restrictions | Scraping proprietary websites such as nytimes.com without permission can breach their terms of service and copyright. |
| Alternatives | Summarize articles with attribution, or use the official APIs that a site provides. |
| Best Practices | Check robots.txt and terms of service, limit request frequency, and credit original sources. |

Summary

Content scraping is a useful but legally and ethically constrained activity. Proprietary sources such as nytimes.com should not be scraped without permission. Summarizing with attribution, using official APIs, and following each site’s robots.txt and terms of service are safer ways to work with web content.