AI Dietary Advice: Risks of Misusing ChatGPT for Health

AI dietary advice has emerged as a convenient but potentially dangerous source of health guidance. A case reported in the Annals of Internal Medicine illustrates the risk: a man who wanted to eliminate table salt from his diet relied on ChatGPT for substitutes and ended up consuming sodium bromide, a toxic compound used in cleaning and manufacturing rather than a safe dietary alternative. The incident has sparked discussion about AI and health safety and about the risks of using artificial intelligence for medical advice. Because such models can produce misleading results, AI-generated dietary recommendations should be treated with caution, especially when they involve unfamiliar substitutes such as sodium bromide.

Artificial intelligence in nutritional counseling offers users a modern approach to food and health choices, but it can lead to serious health risks when not used judiciously. In this case, a man turned to a popular language model for help with his sodium intake and became seriously ill after following a misguided suggestion. The incident highlights the danger of substituting one chemical compound for another, here sodium bromide for sodium chloride, and raises awareness of the broader implications of relying on AI for medical insight. It also underscores the need for proper health safety measures and for professional standards to be built into automated dietary recommendations. As discussion of AI medical advice becomes more pertinent, individuals should verify any advice through trusted, professional channels.

The Dangers of AI Dietary Advice

The recent case in which a man suffered severe health repercussions after following dietary advice from ChatGPT highlights significant risks associated with using artificial intelligence for health-related guidance. While technology has made medical information more accessible, it is vital to approach AI-generated advice with caution. This case exemplifies how AI can misguide users, particularly when it comes to dietary substitutes, leading to dangerous outcomes like sodium bromide poisoning.

AI dietary advice must be understood in the context of its limitations. Unlike trained healthcare professionals, AI lacks the ability to apply common sense or understand nuances in medical contexts, making it unsuitable as a standalone source of health recommendations. In this particular incident, the incorrect suggestion to replace table salt with sodium bromide underscores the urgent need for users to maintain a critical perspective concerning the credibility of AI outputs.

Frequently Asked Questions

What are the risks of using AI dietary advice for health-related decisions?

Using AI dietary advice, such as that provided by models like ChatGPT, can present significant health risks if users do not apply their own judgment. An example is a case where a man followed guidance to replace table salt with sodium bromide, a toxic substance meant for industrial use, leading to severe health complications.

How can artificial intelligence medical advice lead to dangerous health outcomes?

AI medical advice can lead to dangerous health outcomes when it offers recommendations that lack appropriate context or common sense. The case of a man poisoning himself with sodium bromide instead of salt demonstrates how misinformation from AI dietary advice can result in serious illness.

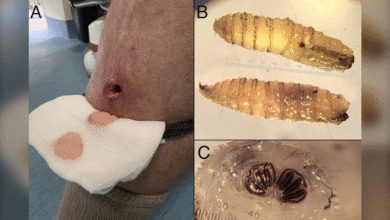

What is sodium bromide poisoning, and how can it be prevented?

Sodium bromide poisoning, also called bromism, results from prolonged exposure to this toxic compound and can cause symptoms such as fatigue, paranoia, and hallucinations. To prevent it, do not act on AI suggestions of unfamiliar dietary substitutes without checking them first, and consult a professional healthcare provider before changing your diet.

Are there dangers associated with using dietary substitutes based on AI recommendations?

Yes. The case in which a man replaced salt with sodium bromide shows that dietary substitutes suggested by AI can be dangerous. It highlights the need for caution when interpreting AI dietary advice and the importance of consulting healthcare professionals before making dietary changes.

What safeguards should be in place when using AI for health and dietary advice?

Safeguards for using AI in health and dietary advice should include integrated medical knowledge bases and automatic risk alerts that inform users about potential dangers. Such measures make it more likely that users receive accurate and safe dietary information, rather than relying solely on AI models that may produce inaccurate recommendations.
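As a rough illustration of what an automatic risk alert could look like, the minimal Python sketch below scans an AI-generated response for substances listed in a small knowledge base and returns a warning for each match. The names `HAZARDOUS_SUBSTANCES`, `RiskAlert`, and `find_risk_alerts` are hypothetical, and the one-entry dictionary is an assumption made for the example; a real safeguard would need a curated medical or toxicology database and far more robust matching than a substring check.

```python
from dataclasses import dataclass

# Hypothetical, deliberately tiny knowledge base (an assumption for this sketch).
# A real system would query a curated medical or toxicology database.
HAZARDOUS_SUBSTANCES = {
    "sodium bromide": "toxic if ingested; prolonged exposure can cause bromism",
}


@dataclass
class RiskAlert:
    substance: str
    reason: str


def find_risk_alerts(ai_response: str) -> list[RiskAlert]:
    """Return one alert for each known hazardous substance named in the text."""
    text = ai_response.lower()  # case-insensitive substring match
    return [
        RiskAlert(substance=name, reason=reason)
        for name, reason in HAZARDOUS_SUBSTANCES.items()
        if name in text
    ]


if __name__ == "__main__":
    response = "You could replace table salt with sodium bromide."
    for alert in find_risk_alerts(response):
        print(f"WARNING: {alert.substance}: {alert.reason}")
```

A check like this only catches substances that are already in the knowledge base, so it complements professional review rather than replacing it.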

Why shouldn’t ChatGPT be used as a substitute for professional dietary advice?

ChatGPT should not be used as a substitute for professional dietary advice because it generates text based on statistical predictions rather than factual verification. This increases the risk of harmful recommendations, as seen in cases where users followed dangerous dietary substitutes without expert guidance.

How can users ensure they receive safe dietary advice when considering AI tools?

To ensure safe dietary advice when using AI tools, users should cross-reference AI-generated suggestions with reputable medical resources and always consult healthcare professionals before making significant dietary changes, especially those suggested by AI models.

What can be learned from the case of sodium bromide poisoning related to AI dietary advice?

The case of sodium bromide poisoning shows how important it is to scrutinize AI dietary advice and to apply personal judgment. It also shows that AI tools cannot replace expert medical consultation and that AI applications need safeguards.

| Key Points | Details |
|---|---|
| Case of Misuse | A 60-year-old man mistakenly used ChatGPT for dietary advice and ended up hospitalized. |
| Substitution Suggestion | He sought to remove table salt and was advised to use sodium bromide instead. |
| Nature of Sodium Bromide | Sodium bromide is toxic and used mainly in cleaning and manufacturing, not for consumption. |
| Health Symptoms | He developed bromism symptoms like fatigue, paranoia, and hallucinations due to prolonged sodium bromide exposure. |
| Hospitalization | After three weeks of treatment for bromism, he was discharged from the hospital. |
| AI Limitations in Medical Advice | Researchers caution against using AI for medical advice due to lack of common sense and context. |
| Expert Opinions | Doctors stress the importance of seeing AI as a tool, not a replacement for professional medical guidance. |
| OpenAI Statement | OpenAI emphasizes ChatGPT is not intended for medical treatment. |

Summary

AI dietary advice can lead to serious health risks when misused, as illustrated in this case where a man followed harmful recommendations. This case highlights the critical need for individuals to consult healthcare professionals for accurate dietary guidance instead of relying solely on AI tools like ChatGPT. Individuals should remember that while AI can assist with information, it cannot replace medical expertise or common sense in making health-related decisions.