Web Scraping Guidelines: Your Essential October 2023 Guide

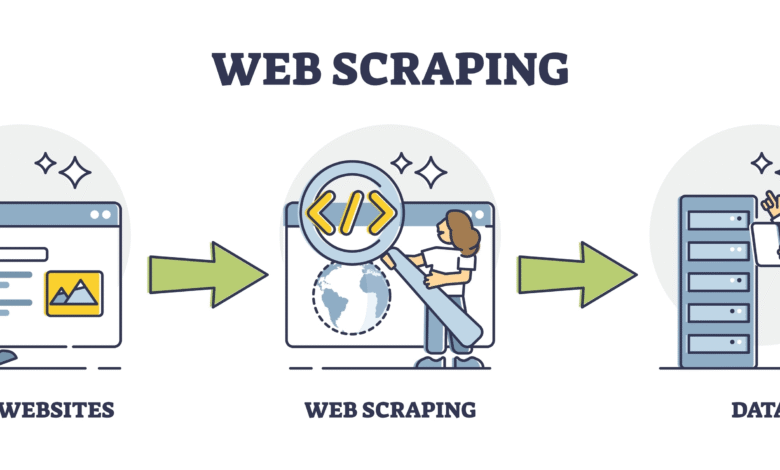

Before diving into web scraping, it's essential to understand the ethical and legal frameworks that govern automated data extraction. Python web scraping techniques can streamline the process and let you gather valuable information efficiently. These techniques are particularly useful for scraping news articles, helping you keep up with the latest trends and developments. Following scraping guidelines, however, ensures that you respect website policies and reduces the risk of being blocked or facing legal repercussions. By following these best practices, you can harness the power of web scraping while remaining compliant and responsible.

Understanding the fundamentals of data harvesting, particularly automated content retrieval, can significantly improve how you gather online information. These data gathering techniques play a crucial role in collecting frequently updated content, such as news articles, from various platforms. Knowing the rules that govern data extraction is paramount for avoiding potential pitfalls and for protecting both the scraper and the source website. As you adopt this technology, effective methods and established standards will not only optimize your data collection but also help you maintain good relationships with content providers. Embracing best practices in this field is therefore worthwhile for anyone looking to use web data responsibly.

Understanding Web Scraping with Python

Web scraping has emerged as a crucial technique for extracting information from websites. Python, one of the most popular programming languages for automation, makes it straightforward for developers to implement data scraping techniques and gather data from various sources. With libraries like BeautifulSoup, Scrapy, and Selenium, Python supports robust scraping scripts that can navigate complex web pages and, with a browser-automation tool such as Selenium, handle dynamic content and mimic user interactions.

In data scraping, Python stands out for its simplicity and versatility. Whether you are scraping news articles, processing data from e-commerce sites, or gathering public records, the language offers a variety of tools to streamline these processes. By combining Python's data handling capabilities with libraries designed for web scraping, you can collect and analyze data efficiently and make your workflows significantly more productive.

Best Practices for Scraping News Articles

When it comes to scraping news articles, it’s essential to adhere to best practices that ensure your scraping activities remain ethical and legal. First and foremost, always check the website’s Terms of Service for any restrictions on scraping. Many news organizations provide APIs to access their articles legally, and utilizing these APIs can save time and prevent potential legal issues. If scraping directly, it’s prudent to respect the site’s robots.txt file, which may outline the parts of the site that can be crawled.
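Python's standard library can check robots.txt rules before any page is requested. The sketch below uses `urllib.robotparser.RobotFileParser`; the sample rules and the `ExampleNewsBot` user-agent name are hypothetical, and the rules are parsed from a string so the example runs offline. Against a live site you would call `set_url()` and `read()` instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A real scraper would fetch the site's file with
# parser.set_url("https://example.com/robots.txt") followed by parser.read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules from a string (no network access needed)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed(parser: RobotFileParser, url: str, user_agent: str = "ExampleNewsBot") -> bool:
    """Return True if the parsed rules permit this (hypothetical) user agent to fetch url."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rp = build_parser(SAMPLE_ROBOTS_TXT)
    print(allowed(rp, "https://example.com/news/today"))
    print(allowed(rp, "https://example.com/private/drafts"))
    print(rp.crawl_delay("ExampleNewsBot"))  # the nonstandard Crawl-delay value, if any
```

Note that `can_fetch()` only reports what robots.txt says. It says nothing about the Terms of Service, so both checks are still needed.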

Moreover, when implementing data scraping techniques for news content, make sure to optimize your scripts to minimize server load. This can be achieved by adding delays between requests, limiting the number of concurrent connections, and filtering unnecessary data. Additionally, always consider the ethical implications of your scraping; for instance, attribute the source of the information correctly and avoid scraping excessive amounts of data that might disrupt the service for other users.
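A delay between requests can be as simple as a pause in a sequential loop. Below is a minimal standard-library sketch, assuming a fixed two-second delay and a hypothetical `ExampleNewsBot` User-Agent string. Where a site publishes the nonstandard `Crawl-delay` directive, that value is a better source for the delay. The `fetch` and `sleep` parameters are injectable so the loop can be exercised without network access.

```python
import time
import urllib.request
from typing import Callable, Iterable, List

# Hypothetical identifier; a real scraper should name itself and give a contact address.
USER_AGENT = "ExampleNewsBot/0.1 (contact@example.com)"


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Fetch one page with a descriptive User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def polite_fetch_all(
    urls: Iterable[str],
    fetch: Callable[[str], str] = fetch_html,
    delay_seconds: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Fetch URLs one at a time, pausing between consecutive requests."""
    pages = []
    for i, url in enumerate(urls):
        if i > 0:
            sleep(delay_seconds)  # give the server a break between requests
        pages.append(fetch(url))
    return pages
```

Fetching one URL at a time also keeps concurrent connections at one, which is the gentlest possible load on the server.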

Essential Web Scraping Guidelines

Web scraping guidelines are crucial for anyone looking to engage in automated data extraction responsibly. These guidelines help to foster a good relationship between developers and website owners. Always ensure compliance with legal restrictions, and prioritize websites that allow scraping either explicitly or through their API services. Understand that improper scraping can lead to IP bans, legal battles, or even data misuse.

In addition to adhering to legal frameworks, it’s vital to have robust error handling in your scraping scripts. Not all web pages are structured the same way, and changes in the HTML layout can break your scraper. Incorporating an adaptable parsing logic will help manage unexpected changes, ensuring continuous access to relevant information without constant manual intervention. By following these web scraping guidelines, you contribute to sustainable practices within the web scraping community.
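One way to make parsing adaptable is to try several candidate locations in order and fall back when the preferred one is missing. Here is a minimal sketch using the standard-library `html.parser`. Treating `<h1>` and then `<title>` as headline candidates is an illustrative assumption. Real sites usually need site-specific selectors, which libraries such as BeautifulSoup make easier to express.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Sequence, Tuple


class TagTextCollector(HTMLParser):
    """Collect the text inside every occurrence of the requested tags."""

    def __init__(self, tags: Sequence[str]) -> None:
        super().__init__()
        self.tags = set(tags)
        self.found: Dict[str, List[str]] = {}
        self._open: List[Tuple[str, List[str]]] = []  # tags currently being collected

    def handle_starttag(self, tag, attrs):
        if tag in self.tags:
            self._open.append((tag, []))

    def handle_endtag(self, tag):
        if self._open and self._open[-1][0] == tag:
            name, parts = self._open.pop()
            self.found.setdefault(name, []).append("".join(parts).strip())

    def handle_data(self, data):
        for _, parts in self._open:
            parts.append(data)


def extract_headline(html: str, candidates: Sequence[str] = ("h1", "title")) -> Optional[str]:
    """Return the text of the first candidate tag with non-empty text, else None."""
    collector = TagTextCollector(candidates)
    collector.feed(html)
    collector.close()
    for tag in candidates:  # candidate order expresses preference
        for text in collector.found.get(tag, []):
            if text:
                return text
    return None  # nothing matched: log it so the layout change gets noticed
```

Returning `None` instead of raising lets the scraper record the miss and keep going, which is the kind of graceful degradation the paragraph above describes.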

Leveraging Automated Data Extraction Techniques

Automated data extraction through web scraping is revolutionizing how businesses and individuals access critical information. With Python and its powerful libraries, automating the process of gathering data can save hours of manual work. Using frameworks like Scrapy allows users to build complex data extraction systems that can run asynchronously, fetching multiple pages simultaneously and compiling data efficiently.
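Scrapy manages concurrency internally, and its `CONCURRENT_REQUESTS` and `DOWNLOAD_DELAY` settings control how aggressive it is. To show the same idea without the framework, here is a sketch built on the standard library's `concurrent.futures.ThreadPoolExecutor`. This is not how Scrapy works internally (Scrapy is built on the Twisted event loop), and the default of four workers is an arbitrary assumption.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def fetch_concurrently(
    urls: Sequence[str], fetch: Callable[[str], str], max_workers: int = 4
) -> List[str]:
    """Fetch URLs in parallel with at most max_workers requests in flight.

    Results are returned in the same order as urls.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, urls))


if __name__ == "__main__":
    # Hypothetical stand-in fetch function so the sketch runs without network access.
    def fake_fetch(url: str) -> str:
        time.sleep(0.1)  # simulate network latency
        return f"<html>{url}</html>"

    urls = [f"https://example.com/page/{n}" for n in range(6)]
    pages = fetch_concurrently(urls, fake_fetch, max_workers=2)
    print(len(pages), "pages fetched")
```

Keeping `max_workers` small matters here. Parallelism speeds up collection, but each worker is another simultaneous connection to the target server.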

Furthermore, automated data extraction provides an edge in data analysis. By scraping a variety of data points, such as news articles, product prices, or customer reviews, you can aggregate and analyze this information to derive meaningful insights. Automation not only speeds up collection but can also improve accuracy by eliminating manual copy-and-paste errors, helping keep the gathered data timely and relevant for strategic decision-making.

Common Challenges in Web Scraping

While web scraping is a powerful tool, it comes with its own set of challenges. One prominent issue is dealing with CAPTCHAs and other forms of bot detection that websites implement to thwart automated requests. Developing scraping scripts that can bypass these security measures requires advanced techniques and tools, and it’s important to do so ethically and legally.

Additionally, web page structures can vary greatly, making it difficult to create universal scraping scripts. What works for one website may not work for another, so understanding HTML and DOM manipulation is crucial. Often, you might need to update your scraping logic regularly to accommodate changes in webpage layouts, which can be both time-consuming and complex.

Effective Strategies for Data Cleaning Post-Scraping

Once data has been scraped, a critical next step is data cleaning. This process involves validating the integrity of the data, removing duplicates, and ensuring that the information is formatted correctly for analysis. Using Python, one can easily leverage libraries like Pandas to automate the cleaning and processing of the scraped data, allowing for a smoother transition into analysis.
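Pandas covers these steps with methods such as `DataFrame.drop_duplicates` and `DataFrame.dropna`. To keep the sketch dependency-free, here is the same idea in plain Python. It assumes each scraped record is a dict and that `url` and `title` are required fields, which is a hypothetical schema.

```python
from typing import Dict, Iterable, List

REQUIRED_FIELDS = ("url", "title")  # hypothetical schema for scraped articles


def clean_records(records: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    """Trim whitespace, drop incomplete records, and de-duplicate by URL."""
    seen_urls = set()
    cleaned = []
    for record in records:
        # Collapse runs of whitespace in every string field.
        trimmed = {
            key: " ".join(value.split()) if isinstance(value, str) else value
            for key, value in record.items()
        }
        # Validate: skip records missing a required field or holding an empty value.
        if any(not trimmed.get(field) for field in REQUIRED_FIELDS):
            continue
        # De-duplicate: keep the first record seen for each URL.
        if trimmed["url"] in seen_urls:
            continue
        seen_urls.add(trimmed["url"])
        cleaned.append(trimmed)
    return cleaned
```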

Data cleaning not only improves the usability of the data but also enhances the accuracy of subsequent analysis. Standardizing formats, such as date and time representations, ensures consistency across datasets, facilitating insights that can drive business decisions. Implementing effective data cleaning strategies can significantly boost the overall quality of the information extracted from web scraping efforts.
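Date standardization is a common example. The sketch below converts several date formats to ISO 8601 with `datetime.strptime`. The format list is an assumption about what the sources use, and the day-first `%d/%m/%Y` entry shows why formats must be chosen per source: the same string means a different date to a U.S. site. In Pandas, `pd.to_datetime` with an explicit `format` argument does this for a whole column.

```python
from datetime import datetime
from typing import Optional, Sequence

# Hypothetical set of formats seen across scraped sources; extend per site.
# "%d/%m/%Y" assumes day-first dates, which would be wrong for month-first sources.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y", "%d %b %Y")


def normalize_date(raw: str, formats: Sequence[str] = KNOWN_FORMATS) -> Optional[str]:
    """Convert a date string in any known format to ISO 8601 (YYYY-MM-DD)."""
    text = raw.strip()
    for fmt in formats:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # try the next format
    return None  # unrecognized: flag for review rather than guessing
```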

Tools and Libraries for Effective Web Scraping

Selecting the right tools and libraries is foundational to successful web scraping. Python offers a robust set of libraries, including BeautifulSoup for parsing HTML and XML, Scrapy, a framework for building crawlers (which it calls spiders), and Requests for sending HTTP requests. Each library covers a different part of the scraping workflow, so together they give developers most of what they need to collect information effectively.

Moreover, leveraging tools like Selenium allows for scraping dynamic content generated by JavaScript, which is increasingly common in modern web applications. By combining these tools, developers can create comprehensive scraping solutions that are capable of handling a wide variety of data extraction tasks. Understanding the strengths and use cases of each library is essential for optimizing the web scraping process.

Ethics of Web Scraping and Data Usage

The ethics of web scraping is an increasingly important conversation in the digital age. As data privacy concerns rise, practitioners must navigate the moral implications of data extraction carefully. Respecting copyright laws and privacy rights of individuals is key; scraping personal information from websites without permission can lead to severe legal repercussions.

Moreover, even public data should be treated with care. Ethical scraping means fair usage that does not overwhelm the data source's servers or harm its operations. Engaging in web scraping requires a commitment to ethical standards, which helps ensure that data practices are responsible and respectful of the information's origin.

Future Trends in Web Scraping Technologies

As technology evolves, so too does the landscape of web scraping. With advancements in artificial intelligence and machine learning, future scraping tools may become increasingly sophisticated, enabling more intelligent retrieval of structured data. These technologies could lead to the automation of complex decision-making processes based on the scraped data, optimizing business operations and strategies.

Moreover, the rise of big data will likely call for more efficient scraping methods that can handle vast amounts of information. Developers will also need to keep up with trends such as the growing use of APIs and web services as data sources. Understanding these trends can help web scraping professionals adapt and thrive in an ever-changing digital environment.

Frequently Asked Questions

What are the best web scraping guidelines for beginners?

When starting with web scraping, particularly with Python, it's crucial to follow specific web scraping guidelines. These include respecting the website's robots.txt file, which tells crawlers which parts of the site they are asked not to access, and making sure you comply with copyright laws and the site's terms of service. Polite scraping techniques, such as adding delays between requests to avoid overloading the server, are also recommended.

How can I ensure my automated data extraction is ethical?

To ensure your automated data extraction is ethical, adhere to web scraping guidelines that emphasize user privacy, data ownership, and compliance with legal restrictions. Always check a site’s terms of service before scraping and consider reaching out for permission if necessary. Utilizing ethical scraping practices not only protects your integrity but also fosters positive relationships with data providers.

What tools and libraries are best for Python web scraping?

The most popular tools and libraries for Python web scraping include BeautifulSoup, Scrapy, and Requests. BeautifulSoup is excellent for parsing HTML and XML documents and allows for easy navigation and extraction of data. Scrapy is a powerful framework that can handle automated data extraction at scale. Requests is great for sending HTTP requests with minimal effort. Combining these tools with adherence to scraping guidelines will help you effectively extract data.

What common mistakes should I avoid in data scraping techniques?

Common mistakes in data scraping techniques include ignoring the robots.txt file, which indicates what can be scraped, and failing to handle dynamic content properly. Another mistake is scraping too aggressively, leading to IP bans. Follow scraping guidelines by implementing respectful scraping practices, such as randomized intervals between requests and user-agent rotation to mimic human behavior.

How does scraping news articles fit into web scraping guidelines?

Scraping news articles requires careful attention to web scraping guidelines, especially copyright laws and site-specific terms of use. Many news sites restrict automated data extraction to protect their content. Always make sure you have permission, or that your use of the articles is permissible under fair use (a U.S. legal doctrine), and consider licensing the content when necessary.

Can I scrape data from any website using automated data extraction?

No, you cannot scrape data from any website freely using automated data extraction. Many websites have specific scraping guidelines that dictate how their data can be accessed. Always check the robots.txt file and the site’s terms of service to ensure compliance. If a site prohibits scraping, respect those guidelines to avoid legal repercussions.

What are the legal implications of violating web scraping guidelines?

Violating web scraping guidelines can have legal consequences, such as cease-and-desist letters or lawsuits under copyright law or, in the U.S., the Computer Fraud and Abuse Act (CFAA). It's vital to understand both the ethical standards and the legal framework surrounding web scraping to protect yourself and your work. Stay informed, and seek legal advice if you are unsure whether a scraping practice is permitted.

| Key Points |
|---|
| Direct access to some external websites for scraping may be restricted (as of October 2023). |
| Web scraping can be performed using programming languages like Python. |
| Summarization of news articles can be done based on prior knowledge. |

Summary

Web scraping guidelines are essential for collecting data from websites effectively and ethically. Because direct access to some sites, such as nytimes.com, may be restricted, check what access a site permits before using a programming language such as Python for scraping tasks. Additionally, leveraging existing knowledge to summarize news articles can be a valuable strategy.