Web Scraping Techniques: A Beginner’s Guide

Web scraping techniques are essential tools for anyone who collects and analyzes data from the internet. Whether you’re a seasoned developer or a beginner learning how to scrape websites, understanding these methods helps you gather information efficiently. This guide covers web scraping tools, data extraction methods, best practices, and legal considerations, starting with web scraping for beginners.

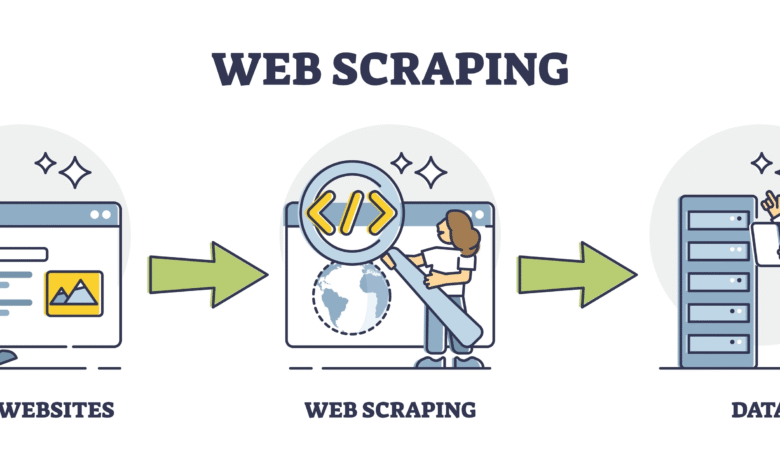

Harvesting data from online sources opens up opportunities for individuals and businesses alike. Automated techniques gather information from many sites without manual effort. This process, often called web data extraction or content gathering, uses specialized software and programming languages to navigate pages and retrieve their content. Mastering these skills can streamline your research and analysis and support better decisions. The sections below look at how these methods are implemented in practice.

Understanding Web Scraping Techniques

Web scraping techniques have become essential for extracting valuable information from websites. They let users gather data efficiently by automating extraction instead of entering data by hand. From simple HTML parsing to more advanced techniques such as traversing the DOM of a JavaScript-rendered page, web scraping offers a range of strategies to suit different needs and levels of expertise. Whether you are a novice or an experienced programmer, understanding the fundamentals of web scraping will help you collect useful data from the web.

Among various data extraction methods, web scraping is increasingly popular among businesses and developers looking to streamline their data collection processes. This technique can be applied to e-commerce sites for price monitoring, news sites for sentiment analysis, or any other domain where data-driven insights are pertinent. Familiarizing yourself with the various web scraping tools can significantly enhance your productivity, enabling you to set up automated scraping bots that can retrieve and organize large datasets swiftly.

Web Scraping for Beginners: A Comprehensive Guide

For those starting in web scraping, the journey can seem daunting. However, breaking it down into manageable steps can make the process more approachable. The first step is understanding the basics: what web scraping is and how it works. By grasping concepts such as HTML structure, HTTP requests, and response parsing, beginners can lay a solid foundation for their scraping endeavors. Additionally, there are numerous resources and tutorials available that cater specifically to newcomers in the field.
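The three concepts above (HTML structure, HTTP requests, and response parsing) can be sketched with nothing but Python's standard library. This is a minimal illustration, not a recommended production setup: the `LinkCollector` class, the `fetch_html` and `extract_links` helpers, the `example-scraper/0.1` User-Agent string, and the sample HTML are all hypothetical names chosen for this example. The parsing step runs on an inline string so the sketch works without network access.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collects (href, text) pairs for every <a href=...> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the <a> tag currently open, if any
        self._text = []    # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def fetch_html(url, timeout=10):
    """HTTP request step: send a GET request and return the body as text."""
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def extract_links(html):
    """Response parsing step: walk the HTML structure and pull out links."""
    parser = LinkCollector()
    parser.feed(html)
    return parser.links


# Inline sample standing in for a fetched page (hypothetical content).
SAMPLE_HTML = """
<html><body>
  <h1>Products</h1>
  <a href="/item/1">Blue Widget</a>
  <a href="/item/2">Red <b>Widget</b></a>
</body></html>
"""

links = extract_links(SAMPLE_HTML)
print(links)
```

On a real page you would pass the result of `fetch_html(url)` to `extract_links` instead of the inline sample.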

After gaining familiarity with the concepts, the next step for beginners is selecting the right tools. There are countless web scraping tools available, many of which are user-friendly and don’t require extensive programming knowledge. Popular choices include Beautiful Soup and Scrapy for Python users, while browser extensions like Web Scraper can help those less technically inclined. Knowing how to utilize these tools effectively will significantly enhance your web scraping capabilities, allowing data extraction with minimal hassle.

Choosing the Right Web Scraping Tools

Selecting the right web scraping tools is crucial to the success of your data extraction projects. There are numerous tools available, ranging from simple browser extensions to powerful programming frameworks. For instance, Beautiful Soup is a Python library that makes it easy to scrape information from HTML and XML documents. For larger projects that require efficient handling of multiple tasks, Scrapy is an excellent choice, providing a robust framework for managing spiders and intricate data pipelines.

It’s also important to consider factors such as ease of use, functionality, and community support when choosing a web scraping tool. Tools like Octoparse and ParseHub offer user-friendly interfaces with visual scraping capabilities, making them accessible for beginners. On the flip side, more advanced users might prefer tools that provide greater customization options and scalability. Understanding the strengths and weaknesses of each tool will ensure that you select the one that best fits your project requirements.

Data Extraction Methods: Best Practices

When it comes to data extraction methods, adhering to best practices is important to yield high-quality data that meets your objectives. One key practice is to map out the data you wish to collect before diving into the technical aspects. This process often involves identifying target URLs, defining data points of interest, and formulating a strategy for handling pagination or dynamic content. Taking time to plan your scraping strategy can save you hours of data cleaning and organization later.
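One way to write such a plan down before touching any scraping code is a small data structure that records the target URL, the data points of interest, and the pagination range. The sketch below is only an illustration: the `ScrapePlan` class, the `page` query parameter, the CSS selectors, and the example.com URL are assumptions, and real sites paginate in many different ways.

```python
from dataclasses import dataclass
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


@dataclass
class ScrapePlan:
    base_url: str             # listing page to start from
    fields: dict              # data point name -> CSS selector (hypothetical)
    page_param: str = "page"  # assumed name of the pagination parameter
    first_page: int = 1
    last_page: int = 1

    def page_urls(self):
        """Build one URL per page, preserving any existing query string."""
        parts = urlsplit(self.base_url)
        existing = parse_qsl(parts.query)
        urls = []
        for page in range(self.first_page, self.last_page + 1):
            query = urlencode(existing + [(self.page_param, page)])
            urls.append(urlunsplit(parts._replace(query=query)))
        return urls


plan = ScrapePlan(
    base_url="https://example.com/products?category=widgets",
    fields={"title": "h2.product-title", "price": "span.price"},
    last_page=3,
)
urls = plan.page_urls()
for url in urls:
    print(url)
```

Keeping the plan separate from the fetching code makes it easier to review what will be collected before any request is sent.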

Another best practice is to respect website terms of service and use ethical scraping techniques. Many sites prohibit scraping or impose rate limits to prevent server overload. Spacing out requests on a well-defined schedule keeps the load you place on a server low. Proxies are sometimes used to distribute traffic, but they do not make a prohibited scrape compliant, so check the site's guidelines first. By following these best practices, you can perform efficient web scraping that yields reliable and usable data.
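Staying under a site's rate limit can be as simple as enforcing a minimum gap between requests. The following is a minimal sketch, assuming a fixed interval is acceptable for the target site; the `Throttle` class and its parameters are hypothetical. The clock and sleep functions are injectable so the behavior can be checked without actually waiting.

```python
import time


class Throttle:
    """Enforces a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until the interval has passed; return the seconds slept."""
        waited = 0.0
        if self._last is not None:
            remaining = self.min_interval - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        self._last = self._clock()
        return waited


# Typical use (not executed here): call wait() before every request.
#   throttle = Throttle(min_interval=2.0)
#   for url in urls:
#       throttle.wait()
#       html = fetch(url)  # fetch is whatever download function you use
```

A fixed interval is the simplest policy; if the site publishes its own limits, those should take precedence over any value chosen here.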

Legal Considerations in Web Scraping

Understanding the legal landscape of web scraping is essential to avoiding potential pitfalls. While web scraping is a powerful tool for data collection, you need to be aware of the legal implications of this activity. Websites often have specific terms of service that outline what users are allowed to do with their content. Ignoring these terms could lead to legal action, including cease and desist letters or even lawsuits, especially for commercial entities.

In addition to adhering to individual website terms, it’s also vital to consider broader legal frameworks, such as copyright law and the Computer Fraud and Abuse Act in the United States. Each region may have different regulations regarding data collection and usage. By staying informed about the legal aspects of web scraping and ensuring compliance, you can minimize risks and conduct your data extraction projects responsibly.

Advanced Web Scraping Techniques for Developers

As developers become more proficient in web scraping, they often look for ways to optimize their scraping processes. Common approaches include using headless browsers to render JavaScript-heavy sites, handling anti-scraping measures (like CAPTCHAs), and applying machine learning algorithms to analyze and categorize scraped data. These advanced techniques can make scraping both faster and more reliable.

Additionally, automating web scraping tasks through scripting and scheduling with tools like cron jobs or background services can streamline the process significantly. By integrating robust error handling and logging into your scripts, developers can ensure their scraping operations run smoothly even in the face of unexpected changes in website layouts or performance issues, thus maximizing data collection output over time.
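Error handling and logging of this kind might look like the sketch below: a wrapper that retries a failed download with exponential backoff and logs each failure. The `fetch_with_retries` function, its parameters, and the `demo_fetch` stand-in are hypothetical; the actual download function is passed in, so the pattern works with any HTTP client.

```python
import logging
import time

logger = logging.getLogger("scraper")


def fetch_with_retries(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying with exponential backoff on any exception.

    Delays grow as base_delay, 2 * base_delay, 4 * base_delay, ...
    The last failure is re-raised so the caller can decide what to do.
    """
    for attempt in range(1, attempts + 1):
        try:
            return fetch(url)
        except Exception as exc:
            logger.warning(
                "attempt %d/%d for %s failed: %s", attempt, attempts, url, exc
            )
            if attempt == attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))


# Demo with a stand-in fetcher that fails once, then succeeds.
_demo_state = {"calls": 0}


def demo_fetch(url):
    _demo_state["calls"] += 1
    if _demo_state["calls"] == 1:
        raise ConnectionError("temporary failure")
    return "<html>ok</html>"


logging.basicConfig(level=logging.WARNING)
result = fetch_with_retries(demo_fetch, "https://example.com", sleep=lambda s: None)
print(result)
```

For scheduling, a crontab entry such as `0 6 * * * python3 /path/to/scrape.py` (the path is a placeholder) would run a script like this every day at 06:00.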

Integrating Web Scraping with Other Data Analysis Tools

Web scraping doesn’t have to operate in isolation; integrating it with other data analysis tools can yield richer insights from the scraped data. By combining data scraping with analytics platforms like Tableau or Power BI, users can create compelling visual representations of the data they collect. This integration allows for deeper dives into trends, patterns, and anomalies that might be relevant in decision-making processes.

Furthermore, utilizing scripting languages like Python or R alongside web scraping enables powerful data manipulation and analysis possibilities. Libraries such as Pandas in Python facilitate sophisticated data cleaning and transformation tasks, while R provides excellent statistical capabilities. By leveraging these languages in conjunction with web scraping, analysts can go beyond mere data collection to derive meaningful conclusions that drive business strategy.
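The cleaning and transformation step can be illustrated without any third-party dependency. The sketch below uses only the standard library rather than Pandas, so treat it as a stand-in for the equivalent DataFrame operations; the `parse_price` and `clean_rows` helpers, the dollar-sign price format, and the sample rows are assumptions made for this example.

```python
from decimal import Decimal, InvalidOperation


def parse_price(raw):
    """Turn a scraped string like '$1,299.00' into a Decimal, or None."""
    cleaned = raw.strip().replace("$", "").replace(",", "")
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        return None  # not a number, e.g. 'N/A'


def clean_rows(rows):
    """Normalize titles, parse prices, and drop duplicates and bad rows."""
    seen = set()
    cleaned = []
    for row in rows:
        title = " ".join(row["title"].split())  # collapse stray whitespace
        price = parse_price(row["price"])
        if price is None or title in seen:
            continue
        seen.add(title)
        cleaned.append({"title": title, "price": price})
    return cleaned


# Hypothetical scraped rows with typical problems.
raw_rows = [
    {"title": "  Blue   Widget ", "price": "$1,299.00"},
    {"title": "Blue Widget", "price": "$1,299.00"},  # duplicate
    {"title": "Red Widget", "price": "N/A"},         # unparseable price
    {"title": "Green Widget", "price": " 15.50 "},
]

rows = clean_rows(raw_rows)
for row in rows:
    print(row["title"], row["price"])
```

`Decimal` is used instead of `float` so that prices keep their exact decimal value through later arithmetic.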

Using APIs for Data Extraction as an Alternative to Web Scraping

While web scraping is a popular method for data extraction, using APIs presents a more structured alternative that can simplify the data collection process significantly. Many websites provide APIs that allow users to access data directly without the need for scraping. These APIs often return data in a clean, structured format like JSON or XML, making it easier to work with and reducing the need for extensive data cleaning.

API usage also typically adheres to the site’s terms of service and is less susceptible to legal complications associated with scraping. However, it’s vital to check API documentation for usage limitations, such as rate limits, to avoid potential service interruptions. When available, leveraging APIs can often be a more reliable path to data extraction and should be considered when planning data collection strategies.
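Working with an API response is usually a matter of decoding JSON and honoring rate-limit signals. The sketch below assumes a hypothetical payload shape (an `items` list with `name` and `price` keys) and a hypothetical 60-second fallback; HTTP status 429 and the `Retry-After` header are standard, but each API documents its own limits and response format.

```python
import json


def parse_products(payload):
    """Extract (name, price) pairs from a JSON response body."""
    data = json.loads(payload)
    return [(item["name"], item["price"]) for item in data["items"]]


def seconds_to_wait(status, headers):
    """Return how long to pause when the API signals a rate limit.

    Only the numeric form of Retry-After is handled; the header may also
    carry an HTTP date, in which case this falls back to 60 seconds.
    `headers` is a plain dict keyed by the exact header name.
    """
    if status != 429:
        return 0
    retry_after = headers.get("Retry-After", "")
    return int(retry_after) if retry_after.isdigit() else 60


# Inline sample standing in for a real API response (hypothetical shape).
SAMPLE_PAYLOAD = """
{"items": [
  {"name": "Blue Widget", "price": 19.99},
  {"name": "Red Widget", "price": 24.5}
]}
"""

products = parse_products(SAMPLE_PAYLOAD)
print(products)
print(seconds_to_wait(429, {"Retry-After": "30"}))
```

Compared with parsing HTML, there are no selectors to maintain here: the structure comes from the API itself.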

Future Trends in Web Scraping and Data Extraction

The future of web scraping and data extraction is poised for significant evolution, driven largely by advancements in technology and an ever-increasing demand for data. Trends such as the integration of artificial intelligence into web scraping tools are likely to enhance capabilities, enabling more sophisticated data parsing and analysis. Machine learning algorithms can analyze vast datasets to identify patterns, making data extraction not only more efficient but also smarter.

Moreover, with growing concerns about data privacy and ethical scraping practices, the development of tools that respect user privacy while still providing valuable insights is likely to gain traction. Future web scraping initiatives may focus more on ethical data collection strategies, ensuring compliance with legislation and building trust with data providers. This shift will undoubtedly shape how businesses and individuals approach web scraping in the years to come.

Frequently Asked Questions

What are the best web scraping techniques for beginners?

For beginners, the best web scraping techniques include using web scraping tools like Beautiful Soup and Scrapy, which simplify data extraction methods by providing easy-to-use APIs for navigating HTML and XML documents. Understanding the Document Object Model (DOM) and practicing with some basic projects can also enhance your skills.

How can I scrape websites effectively using Python?

To scrape websites effectively using Python, you can use the Requests library for making HTTP requests and Beautiful Soup for parsing HTML. Begin by identifying the elements you wish to extract, write a script to fetch the webpage, and then use Beautiful Soup to retrieve the required information. For targeted extraction, learn CSS selectors, which Beautiful Soup supports, and XPath, which is available through libraries such as lxml and Scrapy.

What web scraping tools are best for data extraction?

Some of the best web scraping tools for data extraction include Scrapy, which is a powerful open-source framework, and Octoparse, which is user-friendly and ideal for beginners. Other noteworthy tools are ParseHub and Import.io, which offer visual data extraction capabilities without extensive coding knowledge.

How do you automate web scraping using Python scripts?

To automate web scraping using Python scripts, you can schedule your scripts using tools like cron (Linux) or Task Scheduler (Windows). Moreover, libraries such as Selenium can be used to handle dynamic content and interactions, enabling you to scrape websites that employ JavaScript for rendering data.

What are the legal considerations when scraping websites?

When scraping websites, it’s crucial to review the site’s Terms of Service and robots.txt file to understand any restrictions. Complying with legal requirements is essential to avoid potential repercussions. Engaging with public APIs, when available, is often a safer and more compliant method for data extraction.
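Checking robots.txt can be automated with Python's standard `urllib.robotparser` module. The sketch below parses an inline, made-up robots.txt so it runs without network access; the rules, the `example-scraper` user agent, and the example.com URLs are assumptions. Note that robots.txt is only one signal and does not replace reading the Terms of Service.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content; a real one lives at https://<site>/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether a given user agent may fetch a given URL.
allowed = parser.can_fetch("example-scraper", "https://example.com/products")
blocked = parser.can_fetch("example-scraper", "https://example.com/private/report")

# Crawl-delay, if the site declares one, in seconds.
delay = parser.crawl_delay("example-scraper")

print(allowed, blocked, delay)
```

Against a live site you would call `parser.set_url(...)` followed by `parser.read()` instead of `parse()`.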

Can you explain how to scrape websites without getting blocked?

To scrape websites without getting blocked, start by keeping request rates low and staying within the limits the site publishes. Rotating user agents and using proxies are also common techniques. Tools such as Puppeteer drive a headless browser, which lets your scraper load pages the way a regular browser would, although sites with bot detection may still identify automated traffic.

What are common challenges in web scraping techniques?

Common challenges in web scraping techniques include dealing with anti-scraping measures, handling dynamically loaded content with JavaScript, and managing the changing structure of websites. Employing robust libraries and continuous monitoring can help mitigate these issues, ensuring effective data extraction methods.

How do data extraction methods vary between static and dynamic websites?

Data extraction methods for static websites often rely on simpler techniques like HTML parsing with libraries such as Beautiful Soup. In contrast, dynamic websites may require more complex approaches, including using browser automation tools like Selenium or Puppeteer to access content that is rendered after the initial page load.

What role do APIs play in web scraping for beginners?

APIs play a significant role in web scraping for beginners because they provide a structured, site-sanctioned way to access data without the complications of scraping HTML. Many websites offer APIs that return data in usable formats like JSON, which simplifies the extraction process significantly. Beginners should consider using APIs wherever possible to avoid common scraping pitfalls.

| Key Point | Description |
|---|---|
| Web Scraping Limitations | One cannot directly scrape content from external websites like nytimes.com. |
| Assistance Offered | Guidance on general topics, summarization of articles, and web scraping techniques. |

Summary

Web scraping techniques are essential for collecting data from websites and automating workflows. While direct access to certain sites may be restricted, understanding the fundamentals of web scraping enables users to extract valuable information efficiently. Key techniques include using programming languages like Python with libraries such as Beautiful Soup and Scrapy, leveraging browser automation with tools like Selenium, and employing APIs when available for structured data retrieval. By mastering these techniques, you can navigate the complexities of web data extraction and bolster your data-driven initiatives.