Web Scraping: Techniques and Tools for Effective Data Mining

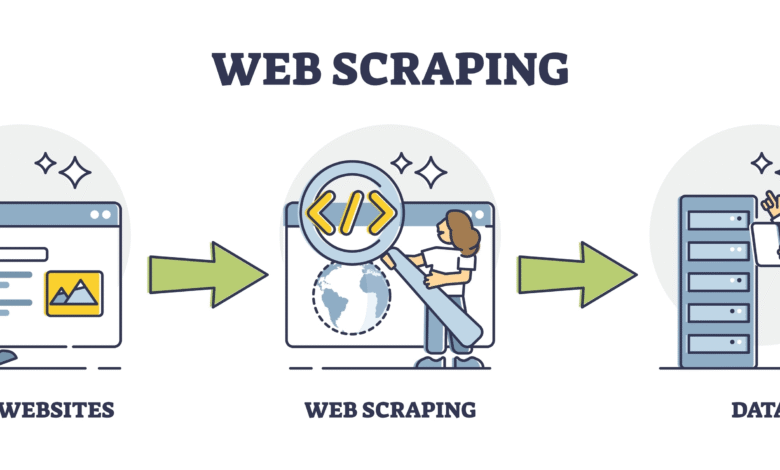

Web scraping is the automated extraction of data from websites, letting businesses and individuals gather information quickly and at scale. Scraping tools and web crawlers navigate online content and convert unstructured web pages into structured formats such as tables, CSV files, or database records. That structured output feeds data mining, supporting decision-making and market analysis. As the volume of information published online keeps growing, web data extraction has become an important way to stay competitive in many industries. Whether the goal is research, price comparison, or sentiment analysis, a solid grasp of web scraping can streamline data workflows and boost productivity.

Web scraping, sometimes called data harvesting, is a practical method for acquiring data online. It is often mentioned alongside web crawling, but the two are distinct: crawling discovers pages, while scraping extracts data from them. Scraping automates the gathering of information from many web sources, so organizations can turn raw web content into actionable insights that inform their decisions. With the growth of e-commerce and digital analytics, automated data collection has become a cornerstone of modern data strategies, and the techniques covered here can unlock information that would be impractical to collect by hand.

Understanding Web Scraping

Web scraping extracts information from websites through automated data collection, allowing users to gather large amounts of data efficiently. Before applying it, however, it is crucial to understand its ethical implications and legal boundaries. Data mining workflows often incorporate web scraping to gather structured data, which can yield valuable insights across many industries.

Web scraping typically relies on two components. A web crawler starts from a set of seed URLs and systematically follows links to discover pages, while a scraper parses each fetched page and converts the relevant content into a usable format, such as rows of named fields. Scraping tools automate both steps. As organizations increasingly rely on up-to-date data for decision-making, mastering web data extraction has become essential for businesses that want to stay competitive.
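As a minimal sketch of the parsing step, the class below uses only Python's standard-library `html.parser` module instead of a dedicated scraping tool. It assumes a hypothetical page layout in which each product sits in a `<div class="product">` containing `<span class="name">` and `<span class="price">` elements; the class name `ProductParser` and that markup are illustrative, and a real site would need rules matched to its own HTML.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect {"name": ..., "price": ...} dicts from a hypothetical product listing."""

    FIELDS = ("name", "price")

    def __init__(self):
        super().__init__()
        self.products = []
        self._current = None  # record being built while inside <div class="product">
        self._div_depth = 0   # tracks nested <div>s so the product ends at the right tag
        self._field = None    # "name" or "price" while inside the matching <span>

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div":
            if self._current is not None:
                self._div_depth += 1
            elif "product" in classes:
                self._current, self._div_depth = {}, 1
        elif tag == "span" and self._current is not None:
            for field in self.FIELDS:
                if field in classes:
                    self._field = field

    def handle_endtag(self, tag):
        if tag == "span":
            if self._field and self._current is not None:
                # Trim surrounding whitespace once the field's text is complete.
                self._current[self._field] = self._current.get(self._field, "").strip()
            self._field = None
        elif tag == "div" and self._current is not None:
            self._div_depth -= 1
            if self._div_depth == 0:
                self.products.append(self._current)
                self._current, self._field = None, None

    def handle_data(self, data):
        if self._current is not None and self._field:
            self._current[self._field] = self._current.get(self._field, "") + data


html = """
<div class="product"><span class="name">Widget</span><span class="price">$9.99</span></div>
<div class="product"><span class="name">Gadget</span><span class="price">$24.50</span></div>
"""
parser = ProductParser()
parser.feed(html)
parser.close()
print(parser.products)
# [{'name': 'Widget', 'price': '$9.99'}, {'name': 'Gadget', 'price': '$24.50'}]
```

Libraries such as Beautiful Soup replace this hand-written state tracking with CSS-selector or tree-navigation queries, which is one reason they are popular.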

Popular Web Scraping Tools

Web scraping tools cater to users ranging from novices to experts. Among the most popular with Python programmers are Beautiful Soup, a library for parsing HTML and XML documents, and Scrapy, a framework for building and running web crawlers. Both are known for their flexibility: they let users build custom crawlers and extractors for specific data needs and collect data from multiple sources with relatively little code, although some programming knowledge is still required.

For those who prefer a graphical interface, tools such as Octoparse and ParseHub offer point-and-click features that simplify web scraping. Users visually select the data they need and automate its collection without writing code. These platforms often include scheduling and monitoring options as well, which help keep recurring collection tasks reliable.

Cloud-based scraping platforms such as Apify provide managed environments for large-scale data extraction. They run scraping jobs on remote infrastructure, so many tasks can execute in parallel, which suits businesses that need to gather data from numerous websites quickly.

Best Practices for Automated Data Collection

Effective automated data collection depends on following web scraping best practices. Always check the terms of service of the websites you intend to scrape to ensure you comply with their restrictions and with applicable law. Scrape respectfully: limit the request rate to minimize server load, since excessive requests can lead to IP bans or legal repercussions. Adding random variation to the interval between requests also avoids sending traffic in rigid, machine-like bursts, which reduces the chance of being blocked.
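A minimal sketch of a polite rate limiter, assuming a single-threaded scraper that calls `wait()` before every request. The class name and the default interval and jitter values are hypothetical and only illustrative; a site's terms or its robots.txt `Crawl-delay` may call for slower rates. The clock, sleep, and random-number functions are injectable so the behavior can be tested without real delays.

```python
import random
import time


class PoliteRateLimiter:
    """Enforce a minimum delay, plus random jitter, between consecutive requests."""

    def __init__(self, min_interval=2.0, max_jitter=1.0,
                 clock=time.monotonic, sleep=time.sleep, rng=None):
        self.min_interval = min_interval  # seconds; illustrative default
        self.max_jitter = max_jitter      # extra random seconds added to each gap
        self._clock = clock
        self._sleep = sleep
        self._rng = rng or random.Random()
        self._last = None                 # when the previous request was released

    def wait(self):
        """Block until the next request may be sent; return the seconds slept."""
        slept = 0.0
        if self._last is not None:
            # Each gap is the minimum interval plus a fresh random jitter.
            gap = self.min_interval + self._rng.uniform(0, self.max_jitter)
            remaining = gap - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
                slept = remaining
        self._last = self._clock()
        return slept
```

In use, one limiter is created per target site and `limiter.wait()` is called immediately before each HTTP request; time already spent parsing the previous page counts toward the gap, so the scraper never waits longer than necessary.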

Handling the data after collection is equally important. Scraped data must be cleaned and structured before analysis, which typically means removing duplicates, normalizing inconsistent formats, and validating the accuracy of each record. Thorough data cleansing, combined with effective data mining strategies, maximizes the value of a scraping effort and supports better-informed decisions.
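The cleaning steps above might look like the following sketch. It assumes records shaped like `{"name": ..., "price": ...}` and prices written with a dot as the decimal separator (for example `$1,299.00`); the function names and the rule that two rows are duplicates when their case-insensitive name and price match are illustrative assumptions, and real pipelines choose keys and validation rules to fit their own data.

```python
import re
from decimal import Decimal, InvalidOperation


def parse_price(text):
    """Turn strings like '$1,299.00' into Decimal; return None if unparseable.

    Assumes '.' is the decimal separator; currency symbols and commas are dropped.
    """
    cleaned = re.sub(r"[^\d.]", "", text or "")
    if not cleaned:
        return None
    try:
        return Decimal(cleaned)
    except InvalidOperation:  # e.g. '1.2.3'
        return None


def clean_records(records):
    """Normalize whitespace, parse prices, drop invalid rows, and remove duplicates."""
    seen = set()
    cleaned = []
    for rec in records:
        name = " ".join((rec.get("name") or "").split())  # collapse runs of whitespace
        price = parse_price(rec.get("price"))
        if not name or price is None:  # validation: skip incomplete rows
            continue
        key = (name.lower(), price)    # duplicate = same name (any case) and price
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


raw = [
    {"name": "  Widget ", "price": "$9.99"},
    {"name": "widget", "price": "9.99"},           # duplicate after normalization
    {"name": "Gadget\n Pro", "price": "$1,299.00"},
    {"name": "Broken", "price": "N/A"},            # fails validation
]
for row in clean_records(raw):
    print(row)
```

Parsing prices into `Decimal` rather than `float` keeps values such as `9.99` exact, which matters once prices are compared or summed.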

Legal Considerations in Web Data Extraction

As web scraping becomes increasingly popular, the legal landscape surrounding it is evolving. Understanding the implications of copyright law, terms of service agreements, and data privacy regulations is essential for businesses engaged in web data extraction. Many websites explicitly state their policies regarding automated data collection, making it necessary for users to review these policies and adhere to them strictly. Ignoring these guidelines can lead to legal actions that could jeopardize a company’s reputation and financial standing.

Beyond copyright, organizations must comply with data protection regulations such as the European Union's General Data Protection Regulation (GDPR), which applies whenever scraped data includes personal information about individuals. Non-compliance can result in substantial fines and penalties. Consulting legal experts on the complexities of web scraping helps guard against violations and keeps data collection practices ethical and responsible.

Integrating Machine Learning with Web Scraping

Machine learning is increasingly being combined with web scraping to enhance the efficiency and effectiveness of data extraction. By utilizing machine learning algorithms, businesses can analyze scraped data to predict trends and patterns relevant to their operations. For example, sentiment analysis can be applied to customer reviews collected through web scraping to gauge public perception of a brand or product.
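To make the sentiment-analysis example concrete, here is a toy multinomial Naive Bayes classifier written from scratch with add-one smoothing. It is a sketch for illustration only: the training reviews below are invented, a real model needs far more labeled data, and production work would more likely use an established machine learning library.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase and split into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSentiment:
    """Toy multinomial Naive Bayes classifier with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.label_counts = Counter(labels)      # documents per label (for priors)
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.label_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total_docs)  # log prior
            for word in tokenize(text):
                if word in self.vocab:             # ignore words unseen in training
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


reviews = [
    "great product, works perfectly",
    "love it, excellent quality",
    "terrible, broke after a day",
    "awful quality, waste of money",
]
labels = ["pos", "pos", "neg", "neg"]
model = NaiveBayesSentiment().fit(reviews, labels)
print(model.predict("excellent, works great"))  # pos
print(model.predict("broke, total waste"))      # neg
```

Working in log probabilities avoids numeric underflow when a review contains many words, and the add-one smoothing keeps a word seen under only one label from zeroing out the other label's score.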

Machine learning models can also be trained to improve the scraping process itself. Through pattern recognition, such models can help detect and adapt to changes in a website's structure, reducing the downtime that occurs when a layout change breaks a scraper. Combining these analytics with traditional scraping techniques turns raw data into actionable insights that support data-driven decision-making.

Challenges Faced in Web Scraping

Despite its advantages, web scraping faces real obstacles. Websites continually evolve and deploy measures against automated extraction, such as CAPTCHAs, content loaded dynamically by JavaScript, and IP blocking. These measures can significantly hinder data collection and call for more sophisticated techniques, such as rendering JavaScript or backing off when a server signals that requests are arriving too fast. Deliberately circumventing access controls, however, may violate a site's terms of service, so staying ahead of these challenges has a legal dimension as well as a technical one.
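One widely used way to cope with rate limiting is to retry with exponential backoff. The sketch below assumes a hypothetical `fetch(url)` hook returning `(status, headers, body)` and treats HTTP 429 (Too Many Requests) and 503 (Service Unavailable) as retryable. It honors a `Retry-After` header when the value is given in seconds (the header may also carry an HTTP date, which this sketch does not parse) and otherwise waits a random "full jitter" delay whose upper bound doubles with each attempt. The function names and default limits are illustrative.

```python
import random
import time

RETRYABLE_STATUSES = {429, 503}  # Too Many Requests, Service Unavailable


def backoff_delay(attempt, retry_after=None, base=1.0, cap=60.0, rng=random):
    """Seconds to wait before retry number `attempt` (0-based).

    A numeric Retry-After value is honored as-is; otherwise use exponential
    backoff with full jitter: a random delay in [0, min(cap, base * 2**attempt)].
    """
    if retry_after is not None and retry_after.strip().isdecimal():
        return float(retry_after.strip())
    return rng.uniform(0, min(cap, base * 2 ** attempt))


def fetch_with_retries(fetch, url, max_attempts=5, sleep=time.sleep):
    """Call the hypothetical fetch(url) -> (status, headers, body), retrying on 429/503."""
    for attempt in range(max_attempts):
        status, headers, body = fetch(url)
        if status not in RETRYABLE_STATUSES:
            return status, body
        if attempt < max_attempts - 1:  # no point sleeping after the final attempt
            sleep(backoff_delay(attempt, headers.get("Retry-After")))
    raise RuntimeError(f"giving up on {url} after {max_attempts} attempts")
```

Randomizing the whole delay, rather than adding a small jitter to a fixed schedule, keeps many blocked clients from retrying in lockstep.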

Additionally, managing large quantities of scraped data can pose its own set of issues. Data storage, processing capacity, and analysis tools can become bottlenecks if not adequately planned for. Organizations must ensure they have the necessary infrastructure and tools to handle the volume of data collected while maintaining data integrity and security throughout the process.
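For moderate volumes, even an embedded database can keep scraped data deduplicated and queryable. The sketch below uses Python's built-in `sqlite3` module with an upsert keyed on the product URL, so re-scraping a page updates its row instead of duplicating it. The table layout is a hypothetical example, the `ON CONFLICT ... DO UPDATE` syntax requires SQLite 3.24 or later, and larger projects may need a server database or a data warehouse instead.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) a SQLite database with one row per scraped product URL."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS products (
               url        TEXT PRIMARY KEY,
               name       TEXT NOT NULL,
               price      TEXT NOT NULL,  -- text keeps exact decimal values
               scraped_at TEXT NOT NULL   -- ISO 8601 timestamp of the scrape
           )"""
    )
    return conn


def upsert_products(conn, rows):
    """Insert new products or refresh existing ones in a single transaction."""
    with conn:  # commits on success, rolls back if any row fails
        conn.executemany(
            """INSERT INTO products (url, name, price, scraped_at)
               VALUES (:url, :name, :price, :scraped_at)
               ON CONFLICT(url) DO UPDATE SET
                   name = excluded.name,
                   price = excluded.price,
                   scraped_at = excluded.scraped_at""",
            rows,
        )


conn = open_store()
upsert_products(conn, [
    {"url": "https://example.com/a", "name": "Widget", "price": "9.99",
     "scraped_at": "2024-01-01T00:00:00Z"},
])
upsert_products(conn, [
    {"url": "https://example.com/a", "name": "Widget", "price": "8.99",
     "scraped_at": "2024-01-02T00:00:00Z"},
])
print(conn.execute("SELECT url, price FROM products").fetchall())
# [('https://example.com/a', '8.99')]
```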

The Future of Web Scraping Technologies

Web scraping will continue to evolve alongside the technology around it. Advances in artificial intelligence and natural language processing are likely to reshape the field by making it easier to turn unstructured content, such as free-text reviews or articles, into structured data for richer analysis. Integrating AI into scraping tools could also automate more of the collection process, reducing manual intervention and improving accuracy.

Another future trend is the greater emphasis on ethical scraping practices due to increased awareness of data privacy issues. Tools and platforms may evolve to include built-in compliance features that help users navigate the legal landscape more effectively. As businesses continue to rely on web data extraction for competitive advantage, the development of more sophisticated, responsible web scraping technologies will be crucial in shaping the industry.

Web Scraping for Market Research

In today’s fast-paced business environment, web scraping plays a vital role in market research. By collecting valuable data from competitor websites, social media platforms, and forums, businesses can gain insights into market trends, consumer behavior, and product performance. This data helps companies make informed strategic decisions, forecast demand, and tailor their marketing tactics accordingly.

Moreover, web data extraction can reveal important information about competitors' pricing strategies, promotional activities, and customer feedback. By continuously monitoring and analyzing this data, businesses can adjust their offerings in near real time to maximize customer satisfaction and drive sales growth. Integrating web scraping into market research therefore gives organizations a competitive edge.
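Continuous price monitoring usually comes down to comparing successive snapshots. This sketch assumes each scrape produces a hypothetical `{product_id: Decimal price}` mapping and reports price moves larger than a percentage threshold, along with products that appeared or disappeared; the function name and threshold semantics are illustrative.

```python
from decimal import Decimal


def price_changes(previous, current, threshold=Decimal("0")):
    """Compare two {product_id: price} snapshots.

    Returns (changed, added, removed), where `changed` lists
    (product_id, old, new, pct_change) for moves larger than `threshold` percent.
    """
    changed = []
    for pid in sorted(previous.keys() & current.keys()):
        old, new = previous[pid], current[pid]
        if old == 0:
            continue  # avoid division by zero on free or placeholder items
        pct = (new - old) / old * 100
        if abs(pct) > threshold:
            changed.append((pid, old, new, pct))
    added = sorted(current.keys() - previous.keys())
    removed = sorted(previous.keys() - current.keys())
    return changed, added, removed


prev = {"sku-1": Decimal("10.00"), "sku-2": Decimal("20.00"), "sku-3": Decimal("5.00")}
curr = {"sku-1": Decimal("9.00"), "sku-2": Decimal("20.10"), "sku-4": Decimal("7.00")}
changed, added, removed = price_changes(prev, curr, threshold=Decimal("1"))
print(changed)         # only sku-1 (a 10% drop); sku-2 moved 0.5%, below the threshold
print(added, removed)  # ['sku-4'] ['sku-3']
```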

Web Scraping in E-commerce Analysis

E-commerce companies leverage web scraping to gather critical data on pricing, product availability, and customer reviews from competing retailers. This data is essential for establishing competitive pricing strategies and understanding market positioning. By scraping competitor websites, e-commerce businesses can dynamically adjust their prices to remain competitive while ensuring they attract and retain customers.

Furthermore, web data extraction helps e-commerce platforms keep track of emerging trends and customer preferences. By analyzing scraped data on popular products, sales tactics, and user feedback, companies can tailor their inventory and marketing strategies to align closely with consumer demand, ultimately leading to improved sales performance and customer loyalty.

Frequently Asked Questions

What is web scraping and how does it work?

Web scraping is a technique used to extract data from websites. It involves utilizing web crawlers or scraping tools that automatically collect information from pages, transforming it into structured data suitable for analysis or storage.

Are there legal issues associated with web scraping?

Yes, web scraping can raise legal concerns, particularly related to website terms of service and copyright laws. It’s important to review the regulations surrounding automated data collection to ensure compliance while conducting web data extraction.

What are some common web scraping tools available?

There are many scraping tools available, such as Beautiful Soup (an HTML parsing library), Scrapy (a crawling and scraping framework), and Selenium (a browser automation tool often used for JavaScript-heavy pages). Each provides different features for efficient automated data collection.

How can a web crawler be used effectively for data mining?

A web crawler can be effectively used for data mining by systematically browsing the web and gathering information based on specific criteria. This process helps organizations collect large volumes of data for analysis and decision-making.
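The systematic browsing described above is commonly implemented as a breadth-first traversal with a set of already-seen URLs. In this sketch, `fetch_links` is a hypothetical hook standing in for downloading a page and extracting its `<a href>` values; the crawler resolves relative links, drops `#fragment` suffixes, stays on the seed's host, and stops at illustrative page and depth limits. A real crawler would also need rate limiting and robots.txt checks.

```python
from collections import deque
from urllib.parse import urldefrag, urljoin, urlparse


def crawl(seed, fetch_links, max_pages=100, max_depth=2):
    """Breadth-first crawl from `seed`, staying on the seed's host.

    fetch_links(url) is a hypothetical hook returning the href values found on
    that page. Returns the visited URLs in crawl order.
    """
    host = urlparse(seed).netloc
    seen = {seed}               # URLs already queued, to avoid revisiting
    queue = deque([(seed, 0)])  # (url, depth) pairs in FIFO order
    visited = []
    while queue and len(visited) < max_pages:
        url, depth = queue.popleft()
        visited.append(url)
        if depth == max_depth:
            continue            # depth limit reached: don't expand this page
        for href in fetch_links(url):
            link, _fragment = urldefrag(urljoin(url, href))  # absolute URL without #fragment
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return visited


# A tiny in-memory "site" standing in for real HTTP fetches.
site = {
    "https://example.com/": ["/a", "/b#top", "https://other.org/x"],
    "https://example.com/a": ["/b", "/c"],
    "https://example.com/b": ["/"],
    "https://example.com/c": ["/d"],
}
print(crawl("https://example.com/", lambda url: site.get(url, [])))
```

With the default `max_depth=2`, the crawl visits the home page, `/a`, `/b`, and `/c`, but not `/d`, which sits three links away from the seed; the off-site link to `other.org` is skipped.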

What is the difference between web scraping and web crawling?

Web scraping refers to the process of extracting specific data from websites, while web crawling is the method used to discover and index web pages. Scraping tools often employ web crawlers to access the content needed for data extraction.

Can web scraping be done without programming knowledge?

Yes, there are user-friendly scraping tools available that do not require programming knowledge. These tools often provide graphical interfaces that allow users to set up automated data collection processes easily.

What types of data can be extracted through web scraping?

Web scraping can be used to extract various types of data, including product prices, reviews, articles, and user-generated content. This versatility makes it a powerful technique for numerous sectors, such as e-commerce and research.

Is it possible to scrape dynamic websites?

Yes, dynamic websites can be scraped using advanced scraping tools that support JavaScript rendering. These tools can interact with the website as a user would, enabling automated data collection from complex sites.
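Browser rendering is not always required: many dynamic pages embed their initial data as JSON inside a `<script>` element, or load it from a JSON endpoint that the browser calls. As an alternative to the JavaScript-rendering tools mentioned above, the sketch below pulls such an embedded block out with the standard-library `html.parser` and `json` modules. The `page-data` id is a hypothetical example; inspecting the real page shows where, or whether, its data is embedded.

```python
import json
from html.parser import HTMLParser


class ScriptJSONExtractor(HTMLParser):
    """Capture the text of the <script> element with a given id."""

    def __init__(self, script_id):
        super().__init__()
        self.script_id = script_id
        self._inside = False
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("id") == self.script_id:
            self._inside = True

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self._chunks.append(data)  # script text may arrive in several pieces


def extract_embedded_json(html, script_id):
    """Return the parsed JSON from <script id=script_id>, or None if absent."""
    parser = ScriptJSONExtractor(script_id)
    parser.feed(html)
    parser.close()
    return json.loads("".join(parser._chunks)) if parser._chunks else None


page = (
    '<html><head><script id="page-data" type="application/json">'
    '{"products": [{"name": "Widget", "price": 9.99}]}'
    "</script><script>var x = 1 < 2;</script></head></html>"
)
print(extract_embedded_json(page, "page-data"))
```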

What are the best practices for ethical web scraping?

Best practices for ethical web scraping include respecting robots.txt directives, not overwhelming servers with requests, and complying with applicable laws. Always review websites’ terms of service before beginning data extraction.
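Python's standard library includes `urllib.robotparser` for checking robots.txt directives. In practice, `RobotFileParser.set_url()` and `read()` download the file; this sketch parses an illustrative robots.txt string instead so it runs offline, and the `example-bot` user-agent is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content; a real scraper would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def load_rules(robots_txt):
    """Parse robots.txt text that has already been fetched."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


rules = load_rules(ROBOTS_TXT)
agent = "example-bot"  # hypothetical user-agent string
for url in ["https://example.com/products", "https://example.com/private/data"]:
    print(url, "allowed" if rules.can_fetch(agent, url) else "disallowed")
print("crawl delay:", rules.crawl_delay(agent))
```

`crawl_delay()` returns `None` when the file sets no delay for the given agent, so a scraper can fall back to its own default interval in that case.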

How can web scraping benefit businesses?

Web scraping can significantly benefit businesses by automating data collection processes, enabling market research, tracking competitors, and gathering insights that inform strategic decisions.

| Key Point | Description |
|---|---|
| Definition | Web scraping is the automated method of extracting large amounts of data from websites. |
| Limitations | Some websites may block automated access, hindering web scraping efforts. |
| Legality | Web scraping legality can vary by jurisdiction and website terms of service. |
| Tools | Common tools for web scraping include Beautiful Soup, Scrapy, and Selenium. |

Summary

Web scraping is a vital process for extracting data from websites, allowing businesses and individuals to gather information and insights efficiently. As web technologies evolve, understanding its ethical and legal boundaries becomes increasingly important, and weighing those factors from the start is essential to using web scraping effectively in your projects.