Scraping Content from Websites: Important Guidelines

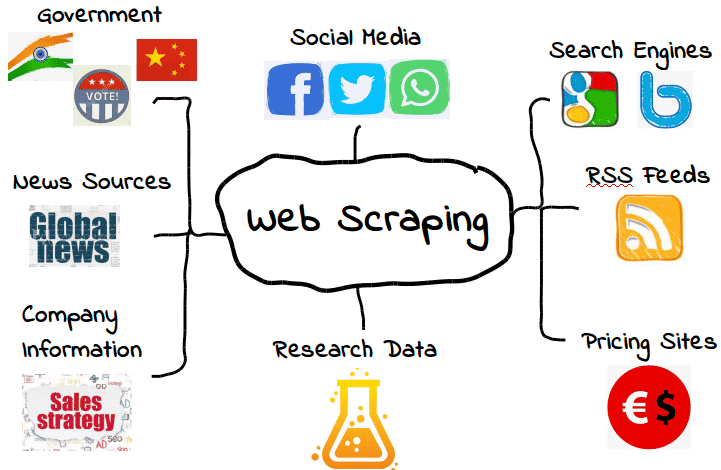

Scraping content from websites has become a common practice for businesses and developers who need data from the internet. Web scraping techniques let users extract information from online sources such as news sites and product listings. It is crucial, however, to scrape lawfully: respect copyright, site terms of service, and applicable regulations. Data extraction tools can automate the collection of news content and turn large volumes of online information into usable insights. Following established web scraping guidelines also improves the quality and accuracy of the extracted data, which makes it more useful for decision-making.

The process of collecting data from web pages, often referred to as data harvesting or web data mining, has gained popularity across various industries. This method involves automatically retrieving and organizing information by utilizing effective data scraping techniques. It is important to note that ethical and legal considerations play a vital role in performing such tasks, ensuring that data collectors operate within the boundaries of the law. Many platforms provide sophisticated tools that facilitate the extraction of required information while respecting the original source’s rights. As more organizations recognize the value of web-based insights, understanding the nuances of data collection and compliance becomes increasingly significant.

Understanding Web Scraping and Its Benefits

Web scraping is a powerful technique used to extract data from websites automatically. Businesses and individuals leverage web scraping to gather information that can enhance their decision-making processes. By utilizing data extraction tools, users can collect vast amounts of data from various online sources quickly and efficiently. This not only saves time but also ensures that the data is obtained systematically, allowing for better analysis and insights.

Moreover, web scraping opens up opportunities for gathering competitive intelligence. Companies can monitor their competitors’ product offerings, pricing strategies, and customer feedback by scraping relevant websites. It also facilitates research processes, enabling academic institutions and researchers to access large data sets that can aid in analyses or studies. However, it’s crucial to implement ethical scraping practices and adhere to legal guidelines to avoid potential pitfalls.

Legal Considerations in Web Scraping

When engaging in web scraping, it’s essential to understand the legal implications involved. Not all websites permit scraping, and doing so without permission can lead to legal challenges. Adhering to web scraping guidelines helps ensure compliance with copyright laws and a site’s terms of service. Always check a website’s robots.txt file, which states which parts of the site its operator asks crawlers to avoid. The file is a voluntary convention rather than a grant of permission, so following it does not replace reading the terms of service.
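The robots.txt check described above can be sketched with Python’s standard `urllib.robotparser` module. This is a minimal illustration, not a complete compliance check: the robots.txt content, the `example.com` URLs, and the `example-research-bot` user agent are all made up for the example, and a real scraper would load the file from the target site instead of from a string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
# A real scraper would call parser.set_url("https://<site>/robots.txt")
# followed by parser.read() to load the live file.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-research-bot"  # hypothetical crawler name


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# Ask whether this user agent may fetch each URL under the parsed rules.
allowed_public = parser.can_fetch(USER_AGENT, "https://example.com/articles/1")
allowed_private = parser.can_fetch(USER_AGENT, "https://example.com/private/report")

# Crawl-delay is a non-standard but common directive; None if absent.
delay = parser.crawl_delay(USER_AGENT)

print(allowed_public, allowed_private, delay)
```

A scraper built this way would skip any URL for which `can_fetch` returns `False` and wait at least `delay` seconds between requests when a delay is given.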

In many cases, ethical scraping involves obtaining consent from the website owner or ensuring that the data collected falls within permissible use cases. Legal web scraping is characterized by respectful practices that consider the rights of content creators. It’s advisable to consult legal experts or researchers familiar with digital rights management to ensure your scraping activities align with current laws and regulations.

Effective Web Scraping Techniques

Mastering effective web scraping techniques is crucial for achieving reliable results. Various approaches can be used depending on the complexity of the target website. For instance, simple HTML scraping can be done with a library such as Beautiful Soup in Python, which parses HTML and lets users pull out the relevant elements. When a website offers an official API, using it is often the better choice: the API returns structured data and keeps the work within the site’s terms of service.
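To make simple HTML scraping concrete, here is a small sketch. It deliberately uses the standard library’s `html.parser` rather than Beautiful Soup, so that it runs without installing anything; Beautiful Soup provides a higher-level interface for the same kind of task. The HTML snippet, the `headline` class name, and the `HeadlineLinkParser` helper are hypothetical.

```python
from html.parser import HTMLParser

# Hypothetical page fragment standing in for a downloaded document.
SAMPLE_HTML = """
<html><body>
  <h2 class="headline"><a href="/story/1">First story</a></h2>
  <h2 class="headline"><a href="/story/2">Second story</a></h2>
  <p class="footer"><a href="/about">About</a></p>
</body></html>
"""


class HeadlineLinkParser(HTMLParser):
    """Collect (text, href) pairs for links inside <h2 class="headline">."""

    def __init__(self):
        super().__init__()
        self.in_headline = False
        self.current_href = None
        self.current_text = []
        self.links = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        classes = (attr_map.get("class") or "").split()
        if tag == "h2" and "headline" in classes:
            self.in_headline = True
        elif tag == "a" and self.in_headline:
            # Start capturing the link text for this headline.
            self.current_href = attr_map.get("href")
            self.current_text = []

    def handle_data(self, data):
        if self.current_href is not None:
            self.current_text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self.current_href is not None:
            text = "".join(self.current_text).strip()
            self.links.append((text, self.current_href))
            self.current_href = None
        elif tag == "h2":
            self.in_headline = False


def extract_headlines(html):
    """Return headline links found in the given HTML string."""
    parser = HeadlineLinkParser()
    parser.feed(html)
    parser.close()
    return parser.links


headlines = extract_headlines(SAMPLE_HTML)
print(headlines)
```

The footer link is ignored because it does not sit inside a headline element, which shows how tying extraction to page structure filters out unrelated content.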

In addition, employing browser automation tools like Selenium can be advantageous when dealing with dynamic websites that load content via JavaScript. This technique enables the extraction of data that traditional scrapers might miss. Combining multiple scraping methods and adapting to the specific structure of each site can significantly improve the quality and quantity of the scraped data.

Data Extraction Tools for Web Scraping

Selecting the right data extraction tools is vital for efficient web scraping. There are numerous options available, ranging from point-and-click applications to programming libraries. Platforms like Octoparse and ParseHub provide visual scraping environments for users without extensive coding experience, which makes scraping accessible to non-programmers.

For developers, programming libraries in languages like Python – such as Scrapy, requests, and Beautiful Soup – offer powerful capabilities for custom scraping projects. These tools allow users to define rules and automate the data extraction process more flexibly. Regardless of the tools selected, a solid understanding of data formats and web technologies is crucial to maximize the efficiency and effectiveness of any web scraping endeavor.

Scraping News Content Responsibly

Scraping news content requires a delicate balance between retrieving valuable information and respecting the rights of news organizations. Journalists and researchers often seek to analyze articles, headlines, and market trends, making news sites prime targets for scraping. However, it is vital to respect copyright laws and the ethical considerations of using another entity’s content for your benefit.

Utilizing techniques that focus on summarizing or generating insights from scraped news articles instead of duplicating the content can provide value without infringing on rights. By providing critical analysis or unique perspectives, one can create original content that complies with legal web scraping practices while still benefiting from the rich information provided by news sources.

The Future of Web Scraping Technology

The landscape of web scraping technology is ever-evolving, driven by advancements in artificial intelligence and machine learning. These innovations enable more sophisticated data extraction methods that can adapt to changes in website design and formats. Additionally, AI-driven tools can analyze and categorize scraped data more efficiently, providing users with actionable insights that were previously difficult to obtain.

As more businesses recognize the importance of data-driven strategies, the demand for advanced web scraping solutions will continue to grow. Future developments may include the integration of real-time data analysis and enhanced compliance monitoring features to ensure that scraping activities remain within legal boundaries. Staying updated with emerging trends in the web scraping field is essential for anyone looking to maximize the potential of this powerful tool.

Best Practices for Ethical Web Scraping

Implementing best practices for ethical web scraping is crucial for the integrity of both the scrapers and the websites being targeted. This includes adhering to the terms of service outlined by the website, respecting the robots.txt files, and limiting the scraping frequency to avoid overloading the server. Such practices not only protect the scraper from potential legal consequences but also foster goodwill between content creators and data users.
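Limiting scraping frequency can be as simple as enforcing a minimum pause between requests. The sketch below is one possible way to do that, assuming a fixed interval chosen by the scraper’s author; the `Throttle` class, the `crawl` helper, and the caller-supplied `fetch` function are hypothetical names introduced for illustration. The clock and sleep functions are injectable so the behaviour can be checked without actually waiting.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_request = None

    def wait(self):
        """Pause until min_interval seconds have passed since the last call.

        Returns the number of seconds slept (0.0 if no pause was needed).
        """
        slept = 0.0
        if self._last_request is not None:
            elapsed = self._clock() - self._last_request
            remaining = self.min_interval - elapsed
            if remaining > 0:
                self._sleep(remaining)
                slept = remaining
        self._last_request = self._clock()
        return slept


def crawl(urls, fetch, throttle):
    """Call fetch(url) for each URL, pausing between calls as needed."""
    results = []
    for url in urls:
        throttle.wait()
        results.append(fetch(url))
    return results
```

If the site publishes a crawl delay in robots.txt, that value is a sensible choice for `min_interval`; otherwise a conservative interval of a few seconds is a reasonable starting point.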

Furthermore, keeping the data collection transparent and informing website owners about the purpose of the scraping can lead to better cooperation. Scraping responsibly also entails giving credit when using or presenting scraped data, thereby respecting the intellectual property rights of authors and creating a more ethical scraping environment.

Common Challenges Faced in Web Scraping

While web scraping can yield vast amounts of valuable information, it is not without its challenges. One of the primary issues is dealing with the changing structures of websites, which can break scrapers if not maintained continuously. As websites update their layout or employ anti-scraping techniques like CAPTCHAs or bot detection, scrapers must adapt quickly to continue their operations.
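One way to notice that a site’s layout has changed is to check how many scraped records are missing expected fields. The following sketch assumes records are plain dictionaries; the field names, the 20% threshold, and both function names are hypothetical choices for illustration, not an established standard.

```python
# Hypothetical set of fields every scraped record is expected to carry.
REQUIRED_FIELDS = ("title", "url", "price")


def missing_field_ratio(records, required=REQUIRED_FIELDS):
    """Return the fraction of records lacking at least one required field.

    An empty result set is treated as fully broken (ratio 1.0), since a
    scraper that suddenly returns nothing usually needs attention.
    """
    if not records:
        return 1.0
    incomplete = sum(
        1 for record in records
        if any(not record.get(field) for field in required)
    )
    return incomplete / len(records)


def looks_broken(records, threshold=0.2):
    """Flag a scrape run whose share of incomplete records exceeds threshold."""
    return missing_field_ratio(records) > threshold
```

Running a check like this after every scrape turns a silent layout change into an explicit signal that the extraction rules need updating.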

Additionally, legal challenges can arise if scrapers inadvertently gather data that is sensitive or protected by copyright. Staying informed about ongoing developments in data privacy laws and ensuring compliance with regulations like GDPR is essential for the long-term viability of web scraping activities. A proactive approach to these common challenges can help ensure successful scraping operations.

Web Scraping for Market Research

Web scraping has emerged as an invaluable tool for conducting market research, allowing businesses to gather insights on consumer behaviors, competitive analysis, and market trends. By scraping details from e-commerce sites, forums, and social media, companies can analyze customer preferences and sentiment, which are crucial for shaping their marketing strategies.

Furthermore, market researchers can aggregate data from various sources to create comprehensive reports that inform product development and pricing strategies. This data-driven approach not only enhances the accuracy of market assessments but also enables companies to stay a step ahead of their competitors by understanding evolving market dynamics.

Understanding the Technology Behind Web Scraping

To effectively implement web scraping, it is crucial to understand the technology that powers it. At its core, web scraping involves parsing HTML documents to extract useful data, which can be achieved through various programming languages such as Python, Java, and PHP. Each language offers specialized libraries and frameworks designed to streamline the scraping process and address common challenges.

In addition to programming, knowledge of web technologies such as CSS selectors, DOM structure, and HTTP requests is essential for successful scraping. By mastering these elements, data scrapers can develop more efficient scripts that yield accurate results while minimizing the risk of being detected or blocked by target websites.
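As a small illustration of the HTTP side, the sketch below builds a GET request with query parameters and an identifying User-Agent header using only the standard library. Nothing is sent over the network here. The URL, the parameters, the bot name, the contact address, and the `build_request` helper are all hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_request(base_url, params, user_agent):
    """Build a GET request with encoded query parameters and headers."""
    query = urlencode(params)
    url = f"{base_url}?{query}" if query else base_url
    headers = {
        # A descriptive User-Agent lets site operators identify the scraper.
        "User-Agent": user_agent,
        "Accept": "text/html",
    }
    return Request(url, headers=headers, method="GET")


request = build_request(
    "https://example.com/search",                      # hypothetical endpoint
    {"q": "laptops", "page": 2},                       # hypothetical parameters
    "example-research-bot/0.1 (contact@example.com)",  # hypothetical identity
)

print(request.get_method(), request.full_url)

# To actually send it, one could use:
#   from urllib.request import urlopen
#   with urlopen(request, timeout=10) as response:
#       html = response.read().decode("utf-8", errors="replace")
```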

Frequently Asked Questions

What are the common web scraping techniques used for data extraction?

Common web scraping techniques include HTML parsing, DOM traversal, using APIs for data access, and browser automation for pages that load content via JavaScript. Each method has its own benefits and is suitable for different types of content.

Is web scraping legal, and what guidelines should I follow?

Web scraping is generally on safer ground when you comply with the website’s terms of service, its privacy policies, and applicable laws, although the details vary by jurisdiction. Always check the site’s ‘robots.txt’ file, which tells crawlers which paths the operator wants left alone. Following it is a widely expected courtesy, but it does not by itself settle whether a given use is lawful.

What are some popular data extraction tools for web scraping?

Popular data extraction tools for web scraping include Beautiful Soup, Scrapy, Octoparse, and ParseHub. Beautiful Soup and Scrapy are Python libraries suited to custom scraping projects, while Octoparse and ParseHub offer visual interfaces for users who prefer not to write code.

How can I scrape news content responsibly?

To scrape news content responsibly, adhere to legal web scraping practices, obtain permissions if required, limit request frequency to avoid overwhelming the server, and respect copyright laws. Always attribute sources appropriately.

What web scraping guidelines should I follow to avoid blocks?

To avoid blocks while scraping, implement guidelines such as rotating IP addresses, using headers to mimic browser requests, adhering to rate limits, and scraping at off-peak hours. This minimizes the chances of detection and enhances your scraping capabilities.
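Adhering to rate limits often means backing off when a server signals that requests are arriving too quickly, for example with an HTTP 429 response. The sketch below shows one common approach under stated assumptions: honour a numeric `Retry-After` header value when present, otherwise fall back to capped exponential backoff. The `backoff_delay` function and its default values are hypothetical.

```python
def backoff_delay(attempt, retry_after=None, base=1.0, cap=60.0):
    """Return how many seconds to wait before retrying a request.

    attempt:     zero-based count of retries already made.
    retry_after: raw Retry-After header value, if the server sent one.
    base, cap:   starting delay and upper bound for exponential backoff.
    """
    if retry_after is not None:
        try:
            # Retry-After may be a number of seconds; honour it as given.
            return max(0.0, float(retry_after))
        except ValueError:
            # It may also be an HTTP date, which this sketch does not
            # parse; fall through to exponential backoff instead.
            pass
    return min(cap, base * 2 ** attempt)


for attempt in range(4):
    print(attempt, backoff_delay(attempt))
```

Slowing down in response to server signals addresses the cause of most blocks, which is excessive load, rather than merely hiding it.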

| Key Points |
|---|
| Cannot assist with scraping content from websites like nytimes.com. |
| Encourages specific questions about New York Times articles instead. |

Summary

Scraping content from websites is a controversial practice that raises legal and ethical concerns. While it may seem like a useful way to gather information quickly, it is essential to consider the rights of content owners. Instead of scraping, seek permission or inquire about specific topics directly for better compliance with copyright laws.