Web Scraping Techniques: A Beginner’s Guide

Web scraping techniques make it possible to gather data from the web at scale, helping individuals and businesses extract useful insights efficiently. The core workflow is simple: send a request to a server, then parse the HTML document it returns. That simplicity means anyone can learn how to web scrape, even with minimal programming knowledge. Popular tools for Python web scraping, like BeautifulSoup and Scrapy, simplify the process of extracting content from web pages. Whether you’re looking to analyze market trends or gather information for research, these techniques are a practical foundation for working with online data. This guide introduces the basic tools, answers common questions, and summarizes the key points to help you build your web scraping skills.

Web scraping is also known as web harvesting or web data extraction. The practice uses specialized software or scripts to obtain information from web pages, which are usually formatted in HTML. Many frameworks and libraries support it, and Python is a popular choice because of its versatility and ease of use. Beginners and seasoned developers alike can build this skill through practical exercises and guides focused on BeautifulSoup and Scrapy. As more decisions come to rely on data, these tools become increasingly useful.

Introduction to Web Scraping Techniques

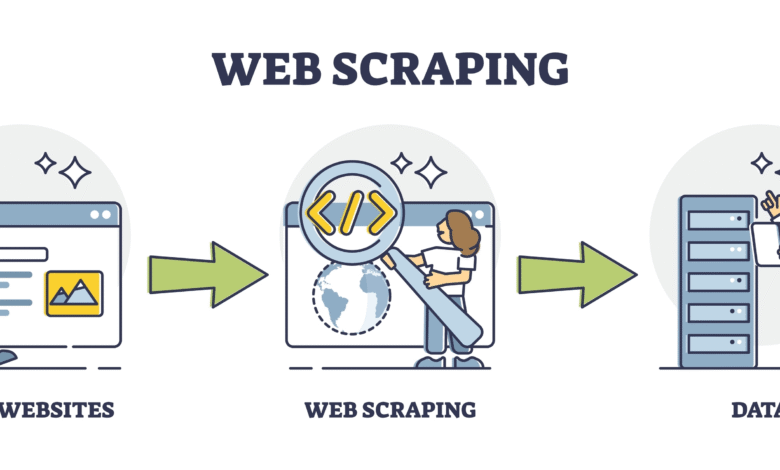

Web scraping is a technique for extracting data from websites. It automates three steps: sending requests to web servers, retrieving the HTML content, and parsing it to obtain useful information. Although scraping can be done in many languages, Python has become increasingly popular because it is flexible and has libraries that simplify the process. Whether you’re sourcing data for personal projects or business analysis, understanding web scraping techniques is essential.

In this introduction, we will explore the fundamentals of web scraping, including the necessary tools and techniques to get started. By utilizing Python along with libraries such as BeautifulSoup and Scrapy, users can create powerful scripts to automate the data extraction process. As we delve deeper, we will discuss methods for efficiently navigating web structures, handling data storage, and ensuring compliance with legal aspects of web scraping.

Frequently Asked Questions

What are the most common web scraping techniques?

The most common web scraping techniques include using libraries such as BeautifulSoup for parsing HTML documents and Scrapy, a powerful framework for large-scale scraping projects. These tools allow you to extract content from web pages efficiently.

How do I web scrape using Python?

To web scrape using Python, you typically begin by installing libraries like requests and BeautifulSoup. First, send an HTTP request to the desired web page, then parse the HTML content with BeautifulSoup, allowing you to extract data elements like titles, text, and links.
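The two steps described above (fetch, then parse) can be sketched roughly as follows. This is a minimal illustration, assuming the `requests` and `beautifulsoup4` packages are installed; the function names `fetch_html` and `extract_title_and_links` are hypothetical helpers made up for this example, not part of either library.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url, timeout=10):
    """Send an HTTP GET request and return the response body as text."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # raise an exception on 4xx/5xx responses
    return response.text


def extract_title_and_links(html):
    """Parse HTML and return the page title and every link target."""
    soup = BeautifulSoup(html, "html.parser")
    title = soup.title.get_text(strip=True) if soup.title else None
    # href=True skips <a> tags that have no href attribute
    links = [a["href"] for a in soup.find_all("a", href=True)]
    return title, links


# Parsing works on any HTML string, so it can be tried without a network call.
sample = "<html><head><title>Demo</title></head><body><a href='/about'>About</a></body></html>"
print(extract_title_and_links(sample))
```

Against a live site, the same parser would be called as `extract_title_and_links(fetch_html(url))`. Keeping the fetch and parse steps in separate functions makes the parsing logic easy to test on saved HTML.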

What does a BeautifulSoup tutorial for beginners cover?

A BeautifulSoup tutorial for beginners typically covers installing the library, making HTTP requests, and parsing HTML documents. You will learn how to navigate the markup, find specific tags, and extract the information you need to build your scraping projects.

What does a Scrapy guide for web scraping cover?

A Scrapy guide for web scraping provides comprehensive instructions on how to set up a Scrapy project. It typically includes topics like defining spiders, handling requests, parsing responses, and storing scraped data. This framework is ideal for building scalable web scrapers.

How do I extract content from web pages effectively?

To extract content from web pages effectively, use a combination of web scraping techniques: send a GET request to fetch the page, parse the HTML with BeautifulSoup or Scrapy, and utilize CSS selectors or XPath to locate and retrieve the desired data accurately.
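To make the selector step concrete, here is a small sketch using BeautifulSoup's CSS selector support (`select` and `select_one`). The HTML snippet, class names, and the `extract_products` helper are invented for this example; a real page will need selectors matched to its own markup.

```python
from bs4 import BeautifulSoup

# Made-up markup standing in for a fetched page.
SAMPLE_HTML = """
<ul id="products">
  <li class="product"><span class="name">Widget</span><span class="price">9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">19.50</span></li>
</ul>
"""


def extract_products(html):
    """Return a list of {'name', 'price'} dicts located via CSS selectors."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for item in soup.select("ul#products li.product"):
        name = item.select_one("span.name")
        price = item.select_one("span.price")
        if name is None or price is None:
            continue  # skip entries missing an expected field
        products.append(
            {
                "name": name.get_text(strip=True),
                "price": float(price.get_text(strip=True)),
            }
        )
    return products


print(extract_products(SAMPLE_HTML))
```

BeautifulSoup itself does not evaluate XPath expressions. If you prefer XPath, Scrapy's selectors (`response.xpath(...)`) or the `lxml` library are the usual options.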

Is web scraping legal and ethical?

The legality and ethics of web scraping depend on the website’s terms of service and the way you use the data. Always check the website’s policies before scraping, and respect its robots.txt file to reduce the risk of legal complications.
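Checking robots.txt can be automated with Python's standard library. The sketch below uses `urllib.robotparser`; the sample rules, the `my-scraper` user agent string, and the `build_parser` helper are assumptions for illustration. Passing this check only reflects the site's crawling preferences and is not a substitute for reading its terms of service.

```python
from urllib.robotparser import RobotFileParser

# Example rules; a real site serves its own file at /robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt):
    """Build a parser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)
print(parser.can_fetch("my-scraper", "https://example.com/private/data"))
print(parser.can_fetch("my-scraper", "https://example.com/articles"))
```

For a live site, `RobotFileParser` can download the file itself: call `set_url("https://example.com/robots.txt")` followed by `read()` before using `can_fetch`.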

What are some best practices for Python web scraping?

Best practices for Python web scraping include respecting website terms of service, setting an appropriate User-Agent header on your requests, limiting your request rate to avoid overloading servers and triggering IP bans, and implementing error handling so your script stays reliable.
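Several of these practices can be combined in a few lines. The following is one possible sketch, assuming the `requests` package; the User-Agent string, the delay values, and the helper names (`make_session`, `polite_get`, `fetch_many`) are illustrative choices rather than recommended settings.

```python
import time

import requests

# Hypothetical identifier; replace it with one that describes your own scraper.
USER_AGENT = "example-scraper/0.1 (+https://example.com/contact)"


def make_session(user_agent=USER_AGENT):
    """Create a session that sends the same User-Agent on every request."""
    session = requests.Session()
    session.headers.update({"User-Agent": user_agent})
    return session


def polite_get(session, url, delay=1.0, retries=3, timeout=10):
    """Fetch a URL, retrying failed requests with a growing pause."""
    for attempt in range(1, retries + 1):
        try:
            response = session.get(url, timeout=timeout)
            response.raise_for_status()
            return response
        except requests.RequestException:
            if attempt == retries:
                raise  # give up after the final attempt
            time.sleep(delay * attempt)


def fetch_many(session, urls, delay=1.0):
    """Fetch several URLs in sequence, pausing between requests."""
    pages = {}
    for url in urls:
        pages[url] = polite_get(session, url, delay=delay).text
        time.sleep(delay)  # simple rate limiting
    return pages
```

A typical call would be `fetch_many(make_session(), urls, delay=2.0)`. A fixed pause is the simplest form of rate limiting; sites that publish a crawl delay or rate limit should be given at least that much spacing.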

How do I handle pagination while web scraping?

Handling pagination in web scraping involves identifying the pattern in the URLs of the subsequent pages, then creating a loop in your scraping script to follow these links using BeautifulSoup or Scrapy until you’ve extracted data from all pages.
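One way to write such a loop is to follow each page's "next" link until there is none. The sketch below assumes BeautifulSoup is installed and that the site marks its listing titles with `h2.title` and its next-page link with `a.next`; both selectors, the `fetch` callable, and the `scrape_all_pages` name are hypothetical and must be adapted to the real site.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def scrape_all_pages(start_url, fetch, max_pages=50):
    """Collect titles from every page reachable through 'next' links.

    fetch is any callable that takes a URL and returns that page's HTML.
    """
    url = start_url
    seen = set()
    titles = []
    # Stop when there is no next link, a page repeats, or the cap is hit.
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        soup = BeautifulSoup(fetch(url), "html.parser")
        titles.extend(h.get_text(strip=True) for h in soup.select("h2.title"))
        next_link = soup.select_one("a.next")
        href = next_link.get("href") if next_link else None
        url = urljoin(url, href) if href else None
    return titles
```

In practice `fetch` could be a small wrapper such as `lambda u: requests.get(u, timeout=10).text`. When a site exposes page numbers in the URL instead (for example `?page=2`), a simple counter loop that stops on the first empty page works as an alternative.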

| Key Point | Explanation |
|---|---|
| Web Scraping Basics | Web scraping involves sending requests to a webpage and obtaining the HTML document. |
| Tools and Libraries | Popular Python libraries like BeautifulSoup and Scrapy are commonly used for parsing HTML. |
| Extraction Process | Once the HTML is retrieved, specific data can be extracted using various parsing techniques. |

Summary

Web scraping techniques enable users to extract content from web pages efficiently. This process includes sending requests to servers, retrieving HTML documents, and utilizing libraries like BeautifulSoup or Scrapy for parsing. These tools are essential for anyone looking to automate data extraction from websites, streamlining the process of gathering information for research, analysis, or personal projects. Understanding web scraping methods is crucial for navigating and utilizing online information effectively.