Web Scraping Techniques: A Comprehensive Guide

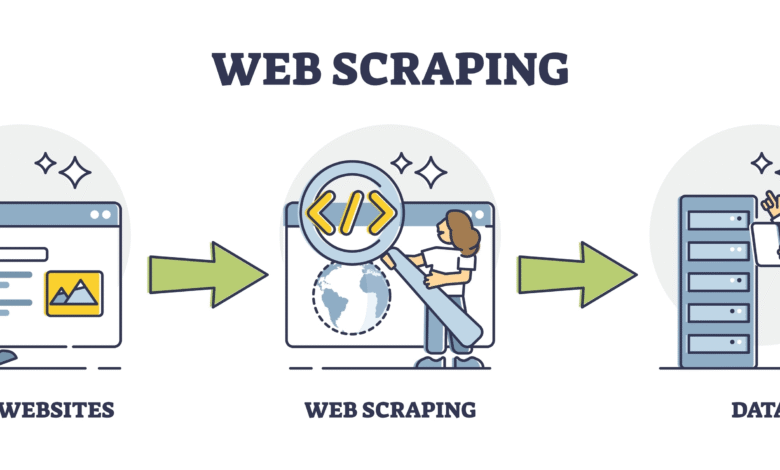

Web scraping lets analysts and businesses extract information from websites automatically and use it for analysis and decision-making. With the right tools and data extraction methods, you can collect data from many sites and fold it into an automated workflow. This guide covers the core web scraping techniques, the main tools, and the practical, legal, and ethical points to keep in mind, starting from the fundamentals a beginner would look for in a web scraping tutorial.

Automated data collection has changed how many professionals gather information online. The options range from point-and-click utilities to hand-written crawlers, and structured tutorials exist for most of them. The sections below walk through these approaches so you can pick the one that fits your needs.

Understanding Web Scraping Techniques

Web scraping techniques are essential for any data-oriented project, allowing users to extract information from websites efficiently. By utilizing methods such as HTML parsing or web crawling, developers can gather vast amounts of data, whether for research, competitive analysis, or personal projects. Understanding the fundamentals of how to web scrape can significantly enhance a developer’s toolkit in the digital age, as it opens up access to invaluable data that may be otherwise difficult to obtain.

One popular technique in web scraping involves employing libraries like Beautiful Soup or Scrapy in Python, which offer powerful tools for parsing HTML and XML documents. When setting up a web scraping project, it’s crucial to define the structure of the target website and the types of data you intend to extract. Knowing how to recognize HTML elements and attributes will allow for more precision in data extraction, which is fundamental to any successful web scraping endeavor.
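As a minimal sketch of recognizing elements and attributes, the example below pulls product links out of a small HTML snippet. It uses Python's standard-library `html.parser` rather than Beautiful Soup or Scrapy, so that it runs without installing anything. The sample markup, the `product` class name, and the `ProductLinkParser` class are assumptions made up for illustration.

```python
from html.parser import HTMLParser

# Made-up markup standing in for a downloaded page.
SAMPLE_HTML = """
<html><body>
  <h1>Product list</h1>
  <ul>
    <li class="product"><a href="/items/1">Blue mug</a></li>
    <li class="product"><a href="/items/2">Red mug</a></li>
    <li class="ad"><a href="/promo">Sponsored</a></li>
  </ul>
</body></html>
"""


class ProductLinkParser(HTMLParser):
    """Collect (text, href) pairs for links inside <li class="product">."""

    def __init__(self):
        super().__init__()
        self.in_product = False   # True while inside a matching <li>
        self.current_href = None  # href of the <a> being read, if any
        self.results = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "li" and "product" in (attrs.get("class") or "").split():
            self.in_product = True
        elif tag == "a" and self.in_product:
            self.current_href = attrs.get("href")

    def handle_data(self, data):
        # Record link text only while inside a product link.
        if self.current_href is not None and data.strip():
            self.results.append((data.strip(), self.current_href))

    def handle_endtag(self, tag):
        if tag == "a":
            self.current_href = None
        elif tag == "li":
            self.in_product = False


parser = ProductLinkParser()
parser.feed(SAMPLE_HTML)
products = parser.results
print(products)  # [('Blue mug', '/items/1'), ('Red mug', '/items/2')]
```

The sponsored link is skipped because its parent `<li>` does not carry the `product` class, which is the kind of precision that knowing the page structure buys you.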

Essential Web Scraping Tools

To effectively scrape web pages, selecting the right web scraping tools is paramount. Options like Octoparse and ParseHub offer user-friendly interfaces that assist beginners in automating the process without needing extensive programming skills. These tools take the complexity out of scripting and can be particularly beneficial when extracting data from websites with changing layouts or dense information.

For those who prefer a more code-oriented approach, tools such as Python’s Requests and Beautiful Soup are indispensable in the web scraping toolkit. They provide great flexibility and control over the scraping process, enabling users to develop custom solutions to specific web scraping challenges. Note that neither library enforces a site’s scraping policy on its own: checking robots.txt and throttling requests are left to your code. Frameworks such as Scrapy go further, with a ROBOTSTXT_OBEY setting and configurable download delays that support responsible data extraction and reduce the risk of IP bans.

Data Extraction Methods for Effective Results

Data extraction methods are the backbone of effective web scraping. Standard methods include direct HTML scraping, APIs, and web crawling. Which one fits depends on the complexity of the data and the architecture of the website, so analyze the structure of the target site and the volume of data your project needs before choosing.

Automated data extraction often employs a combination of these methods to ensure comprehensive data collection. For example, using an API when available can provide more structured data faster compared to conventional web scraping. However, for websites without APIs, web scraping techniques will be necessary to gather the required datasets, making it important to stay updated on data extraction best practices.
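To illustrate why an API response is easier to work with than scraped HTML, here is a small sketch that parses a JSON body into records. The payload, its `items` field, and the `parse_items` helper are hypothetical; a real API defines its own schema and should be read from its documentation.

```python
import json

# Hypothetical API response body; real endpoints define their own schema.
API_RESPONSE = """
{"items": [
    {"id": 1, "name": "Blue mug", "price": 7.5},
    {"id": 2, "name": "Red mug", "price": 8.0}
]}
"""


def parse_items(body):
    """Turn the JSON body into a list of (name, price) tuples."""
    payload = json.loads(body)
    return [(item["name"], item["price"]) for item in payload.get("items", [])]


items = parse_items(API_RESPONSE)
print(items)  # [('Blue mug', 7.5), ('Red mug', 8.0)]
```

No tag matching or class names are involved: the fields arrive already typed and labeled, which is the practical advantage of an API when one exists.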

A Beginner’s Guide to Web Scraping Tutorials

Starting with web scraping can seem daunting; however, a plethora of web scraping tutorials are available to guide beginners through their first projects. These resources often cover everything from the basics of setting up your environment to advanced techniques such as handling cookies and sessions. Ensuring you choose a tutorial that suits your skill level will foster a more productive learning experience.

As you progress in your web scraping journey, try to follow tutorials that incorporate real-world examples. Hands-on practice, combined with theoretical knowledge, solidifies your understanding and equips you with the required skills to tackle complex scraping tasks. Many online platforms even offer free courses that cover various scraping tools and languages, catering to different learning styles and preferences.

Web Scraping Best Practices and Considerations

When engaging in web scraping, adhering to best practices is crucial not only to maintain ethical standards but also to ensure the longevity of your scraping efforts. This includes respecting the site’s robots.txt file, which outlines the permissible areas for bot activity on the site. Additionally, being mindful of the frequency of your requests can help prevent overburdening the server, which could lead to your IP getting blocked.
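The two practices above can be sketched with Python's standard-library `urllib.robotparser`. The robots.txt rules, the `example-scraper` user agent, and the helper names are assumptions for illustration; a real crawler would download the rules from the target site instead of embedding them.

```python
import time
from urllib.robotparser import RobotFileParser

# Example rules; a real crawler would fetch the site's own robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-scraper"  # hypothetical bot name

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


def allowed(url):
    """Return True if the rules permit USER_AGENT to fetch url."""
    return robots.can_fetch(USER_AGENT, url)


def polite_delay(default=1.0):
    """Seconds to wait between requests: Crawl-delay if set, else default."""
    delay = robots.crawl_delay(USER_AGENT)
    return float(delay) if delay is not None else default


def fetch_politely(urls, fetch):
    """Fetch each permitted URL, pausing after every request."""
    pages = {}
    for url in urls:
        if not allowed(url):
            continue  # skip paths the site asks bots to avoid
        pages[url] = fetch(url)
        time.sleep(polite_delay())
    return pages


print(allowed("https://example.com/public/page"))   # True
print(allowed("https://example.com/private/data"))  # False
print(polite_delay())                               # 2.0
```

`fetch_politely` takes the download function as a parameter, so the same loop works with whichever HTTP client you use.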

Understanding the legal implications of web scraping is also paramount. While some data may be publicly accessible, it doesn’t necessarily mean it’s free to scrape and use. Conducting thorough research on copyright issues and terms of service for each website ensures you stay compliant, safeguarding your scraping initiatives from legal complications.

Advanced Techniques in Web Scraping

For those who have mastered the basics, exploring advanced techniques in web scraping can take your skills to the next level. Techniques such as dynamic content scraping using Selenium or Puppeteer allow you to interact with websites that use JavaScript for rendering content. This approach is essential for scraping modern web applications where static HTML cannot provide the information needed.
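The core idea behind scraping JavaScript-rendered pages is to wait until the content exists before reading it. Selenium and Puppeteer ship their own wait helpers; the sketch below is a library-free version of the same polling pattern, so it runs without a browser. `wait_until` and `FakePage` are hypothetical names, and `FakePage` only simulates content that appears after a few polls.

```python
import time


def wait_until(condition, timeout=5.0, interval=0.1):
    """Call condition() until it returns a truthy value or timeout elapses."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError("condition not met within %.1f s" % timeout)
        time.sleep(interval)


class FakePage:
    """Stand-in for a browser page whose content loads after a few polls."""

    def __init__(self, ready_after):
        self.polls = 0
        self.ready_after = ready_after

    def find_prices(self):
        self.polls += 1
        # Return an empty list until the simulated script has "rendered".
        return ["$7.50", "$8.00"] if self.polls >= self.ready_after else []


page = FakePage(ready_after=3)
prices = wait_until(page.find_prices, timeout=2.0, interval=0.01)
print(prices)      # ['$7.50', '$8.00']
print(page.polls)  # 3
```

With a real browser driver, `condition` would be a function that looks up the target elements and returns them once they are present.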

Another advanced approach is to build on a scraping framework that automates large-scale tasks. With Scrapy, developers can write spiders that traverse websites automatically and extract data efficiently. Adding a storage layer for the results, or applying machine learning to the extracted data, can further extend what your web scraping projects can do.
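The traversal a spider performs can be sketched as a breadth-first crawl. This is not Scrapy code: it uses only the standard library, and the in-memory `FAKE_SITE` dictionary stands in for real HTTP requests so the example runs offline. All URLs and names here are assumptions for illustration.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# In-memory stand-in for a website: URL -> HTML body.
FAKE_SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="https://other.example/x">X</a>',
    "https://example.com/b": '<a href="/">Home</a>',
}


class LinkParser(HTMLParser):
    """Collect the href of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl that stays on the start URL's host."""
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # page missing or not fetchable
        visited.append(url)
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href)  # resolve relative links
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


pages = crawl("https://example.com/", FAKE_SITE.get)
print(pages)
```

The `seen` set prevents revisiting pages that link to each other, and the host check keeps the crawl from wandering onto other sites. A framework such as Scrapy handles these concerns, plus scheduling and retries, for you.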

Determining Ethical Approaches to Web Scraping

Navigating the ethical landscape of web scraping is essential for responsible data extraction practices. Ethical scraping involves understanding the boundaries of what is acceptable when collecting data from websites. It is crucial to analyze how the gathered data will be used and ensure it aligns with ethical standards, promoting transparency with website owners.

As a scraper, always consider the potential impact of your actions. Websites invest resources into creating their content, and scraping can inadvertently harm their operations. Strive for approaches that respect intellectual property and promote cooperation with data providers rather than conflict. This can involve reaching out to website operators for permission to gather data, leading to more collaborative relationships.

Building a Career in Web Scraping and Data Analysis

As industries increasingly rely on data-driven decisions, building a career in web scraping and data analysis presents exciting opportunities. With many businesses seeking to harness the power of public data and insights derived from competitors, professionals skilled in web scraping possess a valuable niche expertise. Pursuing certifications in data science or programming languages will enhance your qualifications and expand your employability in this field.

Additionally, participating in online communities or forums focused on data extraction can provide networking opportunities and insights from industry experts. Platforms like Stack Overflow or Reddit offer spaces to discuss challenges and share strategies, which can further develop your skill set. By immersing yourself in these environments, you can stay updated on emerging tools and techniques, ensuring your knowledge remains relevant in a rapidly evolving technical landscape.

Exploring the Future of Web Scraping Technology

Web scraping technology is likely to change substantially as artificial intelligence and machine learning advance. These innovations are turning traditional scraping techniques into systems that can adapt to changes in website structure with less manual maintenance. For developers, understanding how to integrate machine learning into scraping workflows will be important for staying competitive in the field.

Moreover, as more businesses become aware of the advantages of big data, the demand for efficient and ethical web scraping solutions will only increase. This presents a unique opportunity for developers to contribute to the development of more sophisticated scraping tools that incorporate user-friendly interfaces, improved error handling, and real-time data processing capabilities, further revolutionizing how we collect information from the web.

Frequently Asked Questions

How to web scrape effectively without being blocked?

To web scrape effectively without getting blocked, start by adhering to the site’s robots.txt file and limiting the frequency of your requests. Other techniques in use include routing requests through rotating proxies or IP addresses and rotating the User-Agent header so that requests appear to come from different browsers, which reduces the chance of being flagged as a bot.
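Limiting request frequency often goes together with backing off when the server signals that it is overloaded. The sketch below retries a failed request with exponentially growing pauses. `TemporaryError`, `fetch_with_backoff`, and `flaky_fetch` are hypothetical names, and the fake fetcher stands in for a real HTTP call that might return a status such as 429 or 503.

```python
import time


class TemporaryError(Exception):
    """Stand-in for a retryable failure such as HTTP 429 or 503."""


def fetch_with_backoff(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), doubling the pause after each temporary failure."""
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except TemporaryError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error
            sleep(base_delay * 2 ** attempt)  # 1 s, 2 s, 4 s, ...


# Demo with a fake fetcher that fails twice before succeeding.
calls = {"count": 0}


def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] < 3:
        raise TemporaryError("server busy")
    return "<html>ok</html>"


waits = []  # record the pauses instead of actually sleeping
body = fetch_with_backoff(flaky_fetch, "https://example.com/", sleep=waits.append)
print(body)   # <html>ok</html>
print(waits)  # [1.0, 2.0]
```

Passing `sleep` as a parameter keeps the demo instant; in real use the default `time.sleep` applies the pauses.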

What are the best tools for web scraping?

Some of the best tools for web scraping include Beautiful Soup, Selenium, and Scrapy. Beautiful Soup excels at parsing HTML and XML documents, Selenium allows you to automate browsers, and Scrapy is a robust framework designed for large-scale data extraction.

What are common data extraction methods in web scraping?

Common data extraction methods in web scraping include HTML parsing, DOM traversal, and API consumption. HTML parsing extracts data from the raw HTML source, DOM traversal navigates the page’s element tree to reach specific nodes, and APIs provide structured data directly from the source.

Where can I find a comprehensive web scraping tutorial?

A comprehensive web scraping tutorial can be found on platforms like Udemy, Coursera, or by visiting documentation sites for specific web scraping tools like Scrapy or Beautiful Soup. These tutorials usually cover the basics, advanced techniques, and best practices to ensure effective data extraction.

What are the legal considerations for web scraping techniques?

When applying web scraping techniques, it’s crucial to consider legal aspects such as copyright, terms of service of the target website, and data privacy laws like GDPR. Always check the site’s terms of use and ensure that your scraping practices comply with relevant regulations.

How do I handle dynamic content while web scraping?

Handling dynamic content during web scraping can be accomplished using tools like Selenium or Puppeteer that can render JavaScript-driven pages. These tools allow you to wait for the necessary elements to load before extracting data, making them ideal for sites that rely heavily on JavaScript.

| Key Point | Description |
|---|---|
| Limitations of Web Scraping | Certain websites, like nytimes.com, restrict automated access and scraping of their content due to legal and ethical concerns. |
| Existing Knowledge | Despite restrictions on some sites, general knowledge about web scraping techniques can still be shared. |
| General Guidance | There are many methods and tools available for web scraping that can be discussed, including web crawlers and APIs. |

Summary

Web scraping techniques are essential for gathering data from the internet efficiently. While some websites, such as nytimes.com, impose restrictions on their content to prevent scraping, there are still various general approaches and tools available. By utilizing these techniques, one can extract valuable information while navigating the complexities of website policies and legalities.